#News ·2025-01-07

Altman regrets it!

Altman recently revealed in an interview that he personally chose the original price of ChatGPT Pro.

He did not expect users to be quite so ruthless: their heavy usage has left OpenAI losing serious money on the plan!

In a post on X, Altman said ChatGPT Pro is actually losing money. He had thought that, at $200 a month, it was a sure win for OpenAI, a company whose business is highly capital-intensive.

Clearly, he misjudged how heavily people would actually use it, and OpenAI now urgently needs to revisit its pricing strategy.

Pricing and products are not the focus, however. In Altman's mind, what matters most is OpenAI's ultimate goal: building AGI and ASI.

But the pricing episode also highlights an uncomfortable fact: there is no evidence that scaling up will lead to AGI, yet the enormous cost of doing so is certain.

If a single o3 query can cost as much as $1,000, an o4 query might cost tens of thousands. Will AGI really be affordable for ordinary people?

In a recent interview with Bloomberg, Altman reflected on the four-day boardroom "coup", ChatGPT's first two years, how he runs OpenAI, and his relentless pursuit of AGI.

Highlights of the interview:

On November 30, 2022, traffic to OpenAI's website peaked at a number barely above zero. The startup was so small and low-key that even its bosses didn't bother tracking visits.

It was a quiet day, the last one the company would ever know.

Two months later, OpenAI's website traffic exploded to more than 100 million visitors as people experimented with ChatGPT and were both excited and alarmed by its power.

Nothing has been the same since, least of all for Altman.

Q: Your team suggested that now is a good time to look back on the past two years, reflect on certain events and decisions, and clear up some issues. But before we start, can you tell the story of OpenAI's founding one more time? Its historical value seems to grow by the day.

Altman: Everyone wants a concise story: a story where there's a definite moment when something happens. To be conservative, I would say that OpenAI experienced at least 20 founding moments in 2015. The biggest moment for me personally was the dinner Ilya and I had at Counter in Mountain View, California, just the two of us.

If you go back even further, I've always been very interested in AI; I studied it as an undergraduate. I got distracted for a while, but in 2012 Ilya and others built AlexNet, a convolutional neural network. I kept watching what was happening and thought, "Oh my God, deep learning seems to be real. And it seems to scale. There's a lot to be done, and I should take the opportunity to do something about it."

So I started meeting lots of people and asking who would be good to work on this with me. In 2014, AGI was seen as pure fantasy, and people didn't want to talk to me about it at all. When I brought up AGI, people thought it was a crazy idea, one that could even ruin their careers. But many people told me there was one person I really had to talk to, and that was Ilya. So I found Ilya at a conference, stopped him in the hallway, and we got talking. I thought, "This guy is so smart." I told him roughly what I was thinking, and we decided to have dinner. At that first dinner, he basically laid out our strategy for how to build AGI.

Q: How much of the spirit of that dinner continues in OpenAI today?

Altman: Pretty much all of it. We believe in the power of deep learning, and we believe AGI can be reached through a specific technical approach and a close collaboration between research and engineering. It still amazes me how well all of this has worked. Most technical ideas don't quite pan out, and some parts of our original vision clearly didn't work, especially the company's structure. But we believe AGI is entirely possible, and if it is, it would be a major breakthrough for society.

Q: One of the OpenAI team's early strengths was recruiting. Somehow, you managed to attract a large number of top AI researchers, often while offering much less compensation than your competitors. How did you attract this talent?

Altman: Our pitch was simply: come build AGI with us. It worked because, at the time, saying you were going to build AGI was so bizarre that it was considered heresy. That screened out 99% of people, and those who remained were truly talented and able to think for themselves. That was very inspiring. If you're doing the same thing as everyone else, say building the 10,000th photo-sharing app, it's hard to attract talent. But work that no one else is doing draws a small number of truly talented people. So our position, which sounded bold and even questionable, attracted a group of talented young people.

Q: Did you adapt quickly to your roles?

Altman: Most people had full-time jobs at the time, and so did I, so I did less at first, but over time I fell more and more in love with it. By 2018, I was completely hooked. For a while it was a "band of brothers" approach: Ilya and Greg were running it, but everyone had their own things keeping them busy.

Q: It seems that the first few years were quite a romantic time.

Altman: Well, that was certainly the most interesting moment in OpenAI's evolution. I mean, it's fun now, too, but given the impact it had on the world, I think it was one of the greatest phases of scientific discovery, a once-in-a-lifetime experience.

Q: In 2019, you took over as CEO. How did that happen?

Altman: I tried to juggle OpenAI and Y Combinator, but it was too hard. I was completely captivated by the idea that we could actually build AGI. Interestingly, I remember thinking back then that we would achieve AGI by 2025. That number was completely arbitrary; it was simply ten years after we started. People used to joke that the only thing I did was walk into a conference room and say, "Scale up!" That wasn't true, but scaling up really was the central focus back then.

Q: ChatGPT's official release date is November 30, 2022. Does that feel like a long time ago, or does it feel like it happened a week ago?

Altman: I'm turning 40 next year. On my 30th birthday, I wrote a blog post titled "The days are long but the decades are short." Someone emailed me this morning and said, "This is my favorite post; I reread it every year. Will you update it when you turn 40?" I laughed, because I'm definitely not going to update it. I don't have time. But if I did, the title would be "The days are long, and the decades are long too." Anyway, yes, it feels like a long time ago.

Q: When the first large number of users started pouring in and it was clear that this was a big deal, did you have a 'wow' moment?

Altman: There were a few. First, I thought ChatGPT would do pretty well, but the rest of the company was asking, "Why are you making us release this?" I rarely make "we're going to do this" decisions, but this was one of them.

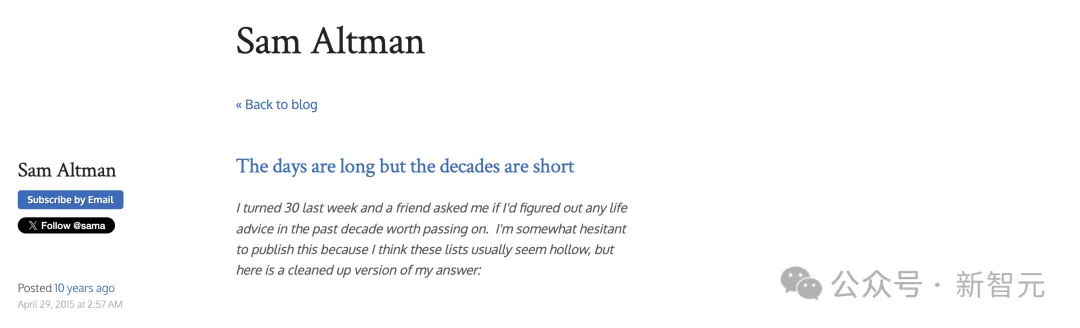

YC has a famous chart that co-founder Paul Graham used to draw: an early burst of excitement about a new technology's potential, a long slump as the novelty wears off, and finally a takeoff once the product finds market fit. It's part of YC culture. In the first few days, ChatGPT usage rose during the day and fell at night, and the team kept saying, "Ha ha, it's going down." But if there's one thing I learned at YC, it's that as long as each new trough is higher than the previous peak, something extraordinary can happen. For the first five days it looked exactly like that, and I thought, "This is going to exceed our expectations."

Potential curve drawn by Paul Graham

This set off a frantic scramble for compute. We needed huge amounts of computing power, but we weren't prepared, because we didn't have a clear business model when we launched ChatGPT. I remember saying at a meeting that December, "I'll consider any idea for how to pay for this, but we can't go on like this." There were plenty of ideas, and none of them were good. So we said, "Fine, let's try a subscription and figure out the rest later." It's been that way ever since.

We had launched on GPT-3.5, GPT-4 was coming, and we knew it would be much better. Whenever people asked me about use cases, I'd stress, "I know we can do much better than this." We improved it quickly, and that is when the world's media realized a turning point had been reached.

Some OpenAI executives at the company's headquarters in San Francisco on March 13, 2023.

Q: Are you a person who enjoys success? Or are you already worried about the next phase?

Altman: My career has been a little unusual. The normal trajectory is that you run a big, successful company, and then in your 50s or 60s, tired of the grind, you go into venture capital. It's very rare to go into venture capital first, stay there for a long time, and then run a company. There are plenty of things I'm not good at, but one thing I'm really good at is sensing what's going to happen, because I've watched and coached so many people through running a company.

Part of me felt grateful, but part of me was like, "I'm strapped to a spaceship, my life's turned upside down, and it's not that fun." My partner often tells funny stories about that time. No matter what I looked like when I came home, he would say, "Great!" And I'd say, "This is really bad, and it's bad for you. You don't realize it yet, but it's really bad."

Q: You've been well known in Silicon Valley for a long time, but GPT made you world-famous as quickly as stars like Sabrina Carpenter or Timothée Chalamet. Does that affect your ability to manage your employees?

Altman: It makes my life more complicated. But in a company, whether you're famous or not, all people care about is, "Where the hell is my GPU?"

But in other parts of my life, I've felt a sense of distance, and it's a new feeling for me. I notice the strangeness with old and new friends alike, except those I'm especially close to. I think I feel it at work too when I'm with people I don't normally interact with; if I have to sit in a meeting with a group I've barely met, I'm pretty sure it's there. But I spend most of my time with researchers. I promise you, come with me to the research meeting right after this, and you'll see nothing but people giving me a hard time. That's great.

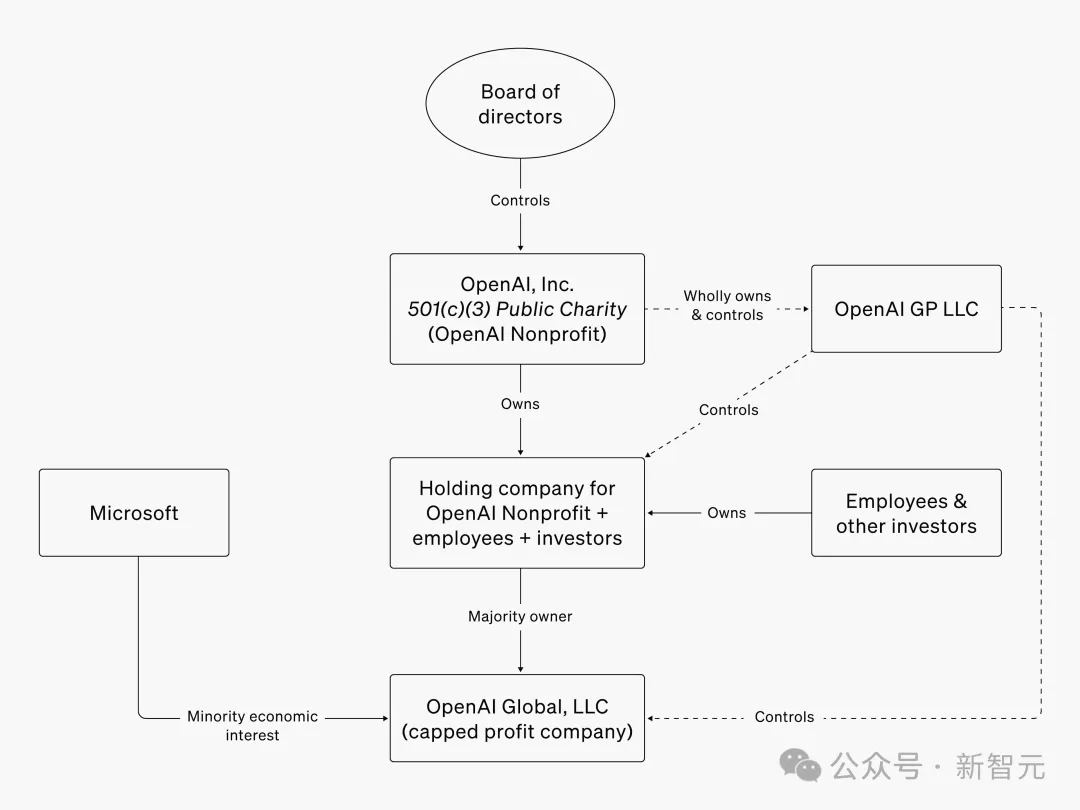

Q: A for-profit company that has billions of dollars in outside investment but needs to report to a nonprofit board can be problematic. Can you remember a moment when you realized this?

Altman: There must have been a lot of moments like that. From November 2022 to November 2023, that whole year is a blur. It felt like we built an entire company from scratch in 12 months, and in public. Looking back, one lesson I've learned is that everyone says they won't let what's urgent crowd out what's important, and everyone ends up getting tricked by urgency anyway. So I'd say the moment I woke up to reality and realized this wasn't going to work was 12:05 p.m. on that Friday.

Q: When the news broke that the board had fired you as CEO, it came as quite a shock. But you seem like a very emotionally intelligent person. Did you notice any signs of tension beforehand? Did you know you were the source of that tension?

Altman: I don't think I'm an emotionally intelligent person at all, but even I could sense the tension. We had been having constant discussions about safety versus capability, the role of the board, and how to balance all of those issues. So I knew things were tense, but I'm not emotionally intelligent enough to have realized they were getting out of hand.

A lot of crazy stuff happened that weekend. My recollection of that time - and maybe I don't remember the details exactly right - was that they fired me at noon on Friday, and quite a few other colleagues decided to resign that evening. Late at night, I thought, "Why don't we start a new AGI project?" Later that evening, some of the executives said, "Well, we think things are better. Calm down and wait to hear from us."

On Saturday morning, two board members called to ask whether I'd be willing to come back. At first I was very angry and refused outright. Then I came around: "Okay, okay." I really care about OpenAI. But I said I would return only if the entire board stepped down. I now wish I had handled that differently, but at the time it felt like a reasonable demand. We then had a big disagreement over the board, so we started talking about a new board of directors. Each side found some of the other's ideas unreasonable, but we eventually reached an agreement.

Then came Sunday, my most frustrating day. Through Saturday and Sunday, they kept saying, "It's almost done. We're just getting legal advice, and the board consents are being drafted." I said again and again that I didn't want to break OpenAI apart and that I needed the other side to tell me the truth. They kept saying, "Yes, you're coming back. You're coming back."

On Sunday night, they suddenly announced that Emmett Shear was the new CEO. I thought, "That's it, I'm really done," because I had been completely misled. By Monday morning, a large number of employees were threatening to quit, and the board said, "Okay, we need to reverse our decision."

Q: The board said it had conducted an internal review and concluded that you were "not consistently candid" in your communications with it. That's a specific accusation: they think you lied or withheld information. But it's also vague: it doesn't say exactly where you weren't being honest. Do you now know what they were referring to?

Altman: I've heard different versions. One was, "Sam didn't even tell the board he was launching ChatGPT." That's not how I remember or understand it. But it's true that I certainly didn't tell them anything like, "We're about to release this, and it's going to be a huge hit." I don't think much of the board's characterization is fair. What I'm more sure of is that I had clashed with some board members. They weren't happy with how I tried to get them off the board. I learned from that.

Q: At some point you realized OpenAI's structure was going to kill the company, because a mission-driven nonprofit could never compete for enough computing resources or make the rapid transformation OpenAI needed to thrive. The board was made up of idealists who put purity above survival. So you started making decisions that let OpenAI compete, which may have required a bit of sleight of hand, and that was completely unacceptable to the board.

Altman: I don't think I was playing games. All I can say is that, in its rush to act, the board didn't fully understand the cause and effect of the issues. One claim was, "Sam owns the startup fund, and he didn't tell us." The truth is that OpenAI's structure is very complex, and neither OpenAI itself nor people who hold OpenAI equity could directly control the startup fund. I happened to be the one person with no equity in OpenAI, so I held it temporarily until we had a proper structure in place to transfer it. I didn't think that needed to be reported to the board. I'm open to people questioning that now, and I would explain it more clearly. But OpenAI was growing like a rocket, and I genuinely didn't have time to explain. If you get the chance, talk to the current board members and ask whether they think I've played games, because I've tried hard not to.

OpenAI's current architecture

The previous board genuinely believed AGI could go badly wrong, and I think their conviction and concern were sincere. That weekend, for example, one board member told the team here, "Destroying the company could also be consistent with the mission of a nonprofit board." People mocked that remark, but I think it shows the real power of conviction. I'm sure she meant it. Although I completely disagree with her specific conclusion, I respect the idealism and commitment behind it. I think the previous board acted out of a sincere but misplaced conviction that AGI was close and that we weren't being responsible enough about it. I respect where they were coming from, but I completely disagree with how they acted.

Q: Obviously, you won in the end. But don't you feel traumatized?

Altman: Of course I was. The hardest part wasn't living through it, because there was so much adrenaline during those four days that I could get a lot done. I was also deeply touched by the support of my colleagues and the broader community. That part was over quickly, but then every day got worse: another government investigation, another former board member leaking false stories to the press. The people who had screwed over me and the company were gone, but now I had to clean up their mess. It was December, it got dark early, around 4:45 p.m., it was wet, cold, and raining, and I was walking around the house by myself, exhausted and dejected. It felt so unfair, and I didn't think I deserved it. But I couldn't stop, because there were all kinds of fires to put out.

Q: When you came back to the company, did you worry about how people would look at you? Were you worried that some people thought you weren't a good leader at all and that you'd need to rebuild their trust?

Altman: It was worse than that. Once things were cleared up, everything was fine. But for the first few days, no one knew what was going on. When I walked through the office, people avoided eye contact. It was as if I'd been diagnosed with terminal cancer: there was sympathy and empathy, but no one knew what to say. It was really hard. But I thought, "We have a complicated job to do, and I'm going to get on with it."

Q: Can you talk about how you run your business? What's your day like? For example, do you talk to engineers one-on-one, or do you walk around the building?

Altman: Let me check my schedule. We have a three-hour executive team meeting every Monday. Yesterday and today, I had six one-on-ones with engineers, plus research meetings. Tomorrow there are several important partnership meetings and a lot of compute meetings: five on building out compute and three product brainstorms, followed by dinner with a major hardware partner. That's about it. There are a few recurring things each week, and the rest of the time goes to dealing with whatever comes up.

Q: How much time do you spend on internal and external communication?

Altman: Mostly internal communication. I don't write inspirational emails, but I do a lot of one-on-one or group discussions, and I communicate through Slack.

Q: Do you feel trapped?

Altman: I'm a heavy Slack user, and I'm comfortable digging through a big mess for data; there's a lot of information to be found in Slack. Conversations with small research teams give me depth, but broad conversations also turn up valuable information.

Q: You've been very clear in the past about ChatGPT's look and user experience. As CEO, where do you feel you have to be personally involved, rather than acting as a coach?

Altman: For a company the size of OpenAI, there are very few opportunities for direct participation. I had dinner with the Sora team last night and wrote several pages detailing my recommendations, but that doesn't happen very often. Sometimes I come out of a meeting with the research team with a very specific proposal that involves specific work details for the next three months, but that's very unusual.

Q: We've talked before about how scientific research can sometimes conflict with a company's operating structure. Is there any symbolism behind the fact that you've separated the research department from the rest of the company in a different building a few miles away?

Altman: No, that's just because of logistics and space planning. We will build a large campus in the future, and the research department will still have its own dedicated space. Protecting core research is very important to us.

Q: Protect the research department from what?

Altman: Silicon Valley companies typically start with a product, and as they grow, revenue growth tends to slow. Then one day the CEO may launch a new research lab with a set of new ideas to drive further growth. There are a few successful examples of this in history, such as Bell Labs and Xerox PARC. But usually it goes the other way: companies become very successful on the product side while their research gets weaker and weaker. We're lucky that OpenAI is growing very fast, probably the fastest-growing tech company of all time, but that also makes it easy to lose sight of the importance of research, and I'm not going to let that happen.

We came together to build AGI, superintelligence, and whatever lies beyond them. Many things along the way can distract us from that ultimate goal, and I think it's really important that we don't let ourselves get distracted.

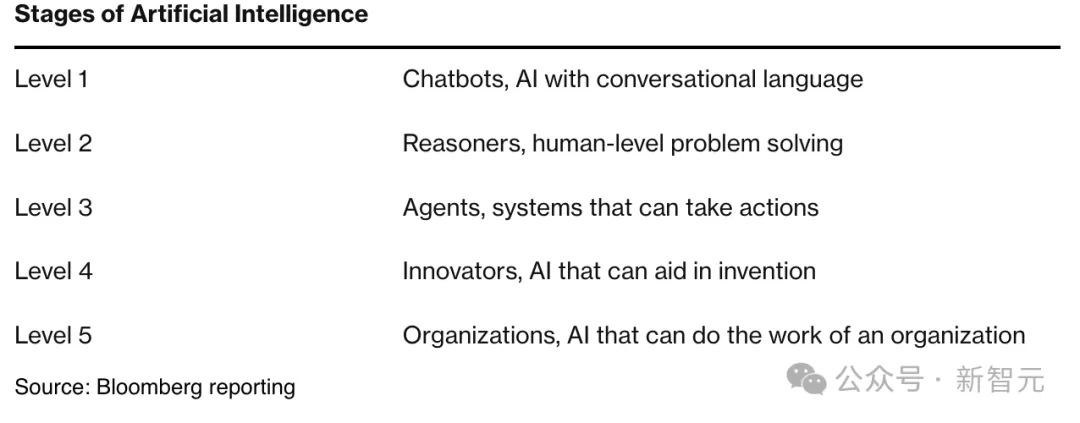

Q: As a company, OpenAI seems to have stopped talking publicly about AGI and now talks about different levels of AI instead, yet you personally are still keen to talk about AGI.

Altman: I think the term AGI has become very vague. If you look at our five levels, you'll find that each one has someone who thinks it's AGI. The point of the levels is to be more specific about where we are and where we're going, rather than arguing over whether something is AGI.

Q: What is the threshold for you to say, "OK, we have now achieved AGI"?

Altman: My rough definition is that when an AI system can replace skilled human professionals in important jobs, I'd call it AGI. Of course, that raises many follow-up questions: Does it have to replace all of them, or just some? Is it a computer program that decides on its own that it wants to be a doctor? Does it have to be the best in the field, or just in the top 2%? How autonomous is it? I don't have deep, precise answers to these questions yet.

But when AI can replace the excellent software engineers companies employ, I think a lot of people will say, well, this is the beginning of AGI. Of course, people will always keep moving the goalposts, which is why AGI is hard to define. And when I talk about superintelligence, the key question is whether it can rapidly accelerate the pace of scientific discovery.

Q: You have over 300 million users now. What have you learned about ChatGPT from user feedback?

Altman: Talking to users about what they do and don't use ChatGPT for has been very helpful for our product planning. A lot of people use ChatGPT for search, even though it wasn't originally designed for that, and at first it was really bad at it. But search then became an important feature. Honestly, I've barely used Google since we launched search in ChatGPT. When we first built the internal prototype, I had no idea ChatGPT would replace my use of Google.

Another thing we've learned from users is how much people rely on it for medical advice. Many people at OpenAI receive touching emails, such as: "I was sick for years, and no doctor could tell me what was wrong. I put all my symptoms and test results into ChatGPT, and it told me I had a rare disease. I went to a doctor, followed the recommended treatment, and am now fully recovered." That's an extreme example, but similar things happen all the time. It made us realize that people have this need and that we should invest more in healthcare.

Q: Your products are priced from free to $20, $200, and even $2,000. How do you price an unprecedented technology? Is it based on market research, or is it a guess?

Altman: When we first launched ChatGPT, it was free, but then usage exploded and we had to find a way to cover the operating costs. At that point we tested two prices, $20 and $42. It turned out $42 was a little too high; users didn't think it was worth it, but they were willing to pay $20. So we settled on $20. That was decided around late December 2022 or early January 2023, and we didn't do a very rigorous pricing study.

We're also considering other directions. A lot of customers tell us they want to pay for what they use, along the lines of, "Some months I may need to spend $1,000 on compute, and other months I want to spend very little." I remember that in the dial-up era, AOL gave you 10 or 5 hours a month. I hated being charged by the hour and didn't like feeling limited. So I'm also thinking about whether there are other, more suitable pricing models based on actual usage.

Q: What does your safety committee look like now? What has changed in the last year or year and a half?

Altman: The tricky part is that we have many different safety mechanisms. We have an internal Safety Advisory Group (SAG) that conducts systematic technical studies and gives advice. There is the Safety and Security Committee (SSC) under the board of directors. And there is the Deployment Safety Board (DSB), set up jointly with Microsoft. So we have an internal mechanism, a board-level mechanism, and a joint board with Microsoft. We're working to make these mechanisms more efficient.

Q: Are you involved in all three committees?

Altman: That's a good question. Reports from the SAG (Safety Advisory Group) are sent to me, but I'm not a full member. Here's how it works: they prepare a report and send it to me, and I review it and pass my opinion on to the board. I'm not involved in the SSC (Safety and Security Committee). I am a member of the DSB (Deployment Safety Board). Now that we have a clearer picture of our safety process, I hope we can make it more efficient.

Q: Have your views on potential risks changed?

Altman: I think there are some serious, or potentially serious, short-term problems in cybersecurity and biotechnology that need to be mitigated. In the long run, truly powerful systems will carry risks that are hard to imagine and model precisely. I believe those risks are real, and I also believe the only way to have a chance of addressing them is to ship products and learn from them.

Q: When it comes to the short-term future, the industry seems to be focused on three issues: model scaling, chip shortages, and energy shortages. I know these issues are related, can you rank them according to your level of concern?

Altman: We have a plan. On model scaling, we keep making progress in research, capabilities, and safety. I think 2025 will be an amazing year. Have you heard of the ARC-AGI challenge? It was created five years ago as a benchmark pointing toward AGI. It's a very hard benchmark, and our upcoming model passed it, after the challenge had gone unsolved for five years. A score of 85% counts as passing, and our system, without any custom work, scored 87.5%. Beyond that, we'll also be releasing very promising research results and better models.

On the chip side, we've been working hard with our partners to build out a complete chip supply chain. We have people building data centers and making chips for us, and we also have our own chip development program. We have a great relationship with NVIDIA, which is a truly amazing company. We'll announce more plans next year; this is a critical time for us to scale up our chip supply.

Q: So the energy issue...

Altman: Controlled fusion will work.

Q: What is the approximate time?

Altman: Soon. There will soon be a demonstration of net-gain fusion. The next steps are to build a system that doesn't fail, scale it up, and figure out how to build a factory that mass-produces it, all of which requires regulatory approval. That could take a few years, but I expect Helion to deliver a tangible controlled-fusion solution soon.

Q: In the short term, is there a way to maintain the pace of AI development without compromising climate goals?

Altman: Yes, but in my view, nothing would help more than approving controlled fusion reactors as soon as possible. I think one particular approach to fusion is fantastic, and we should go all out to make it happen.

Q: Many of the things you just mentioned involve the government. A new president is about to take office, and you have personally donated $1 million to his inauguration fund. Why?

Altman: Because he's the president of the United States. I support any president.

Q: I understand why it might make sense for OpenAI to stay on good terms with a president known for personal vendettas, but as an individual donor you have supported many things Trump opposes. Should I read this donation as an act of loyalty rather than a gesture of patriotism?

Altman: I don't support everything Trump does, says, or thinks, and I didn't support everything about Biden either. But I stand with America, and I'm willing to work with any president, to the extent I can, to serve the country's interests. Especially at this juncture, I think this transcends politics. I think artificial general intelligence (AGI) will probably be developed during this presidency, and it's important to get it right. Donating to the inauguration is a small thing; I don't think it was a big decision that needed much deliberation. But I do think all of us should want the president to succeed.

Q: He says he hates the CHIPS Act, and you supported the CHIPS Act.

Altman: Actually, I don't support it either. I think the CHIPS Act is better than doing nothing, but it's not the best course we could have taken, and I think there's an opportunity for a better follow-up. The CHIPS Act didn't achieve what we all hoped it would.

Q: Musk will clearly have some sort of role in the administration. He's suing you, and he's competing with you. I read your comment at DealBook that you don't think he'll use his position to engage in any funny business when it comes to AI.

Altman: Yes, that's really what I believe.

Q: But seriously, over the last few years he bought Twitter and then tried to sue his way out of the deal. He reinstated Alex Jones' account and challenged Mark Zuckerberg to a cage fight, and those are just the tip of the iceberg...

Altman: I think he'll keep doing all kinds of crazy things. He may keep suing us, drop the suit, file a new one, and so on. He challenged me to a cage fight, but he didn't seem serious about fighting Zuckerberg either. He'll say a lot, try a lot, and then back out; he'll sue people and get sued; he'll run afoul of the government and get investigated by it. That's his style. Will he abuse his political power against a business rival? I don't think he will. I really do believe that. Of course, I may turn out to be wrong.

Q: When you two worked best together, what roles did you each play?

Altman: We complemented each other. We weren't sure exactly what this would become, what we were going to do, or where it would go, but we shared the belief that it was important, that we needed to push in this general direction, and that we would adjust along the way.

Q: I'm curious what your actual partnership is like?

Altman: I don't remember having any particularly serious run-ins with Musk before he decided to quit. Despite all the rumours - people say he berates people and throws tantrums and stuff like that - I haven't experienced anything like that.

Q: Were you surprised that he raised so much money for xAI from the Middle East?

Altman: No surprise. They have a lot of money. It's the industry everyone wants to be in right now, and Elon is Elon.

Q: Assuming you're right, and both Musk and the administration have positive intentions, what would be the most helpful move by the Trump administration in the field of AI in 2025?

Altman: Build a lot of infrastructure in the United States. One of the things I really agree with the president on is that it's become incredibly difficult to build things in the United States right now. Whether it's power plants, data centers, or anything like that, it's hard to build. I understand how bureaucracy builds up, but this situation is not good for the country as a whole. This is especially true when you consider that the United States needs to lead in artificial intelligence. And the United States does need to take the lead in AI.

Source: https://www.bloomberg.com/features/2025-sam-altman-interview/
