
#News ·2025-01-07

"Old Huang" showed up in a new leather jacket and brought a new GPU.

The eyes of the world are on Las Vegas this morning.

At 10:30 a.m. Beijing time on January 7, Nvidia CEO Jensen Huang delivered a keynote at CES 2025 in Las Vegas, covering GPUs, AI, gaming, robotics, and more.

Huang opened by reviewing the history of Nvidia's GPUs: from 2D to 3D, and from the birth of CUDA to RTX. In the AI era, GPUs have driven AI's evolution from perception to generation. Agents come next, and soon after that AI will enter the physical world.

Machine learning has changed the way every application is built and the way it computes. So what would fully AI-oriented hardware look like? Nvidia gave us a demonstration.

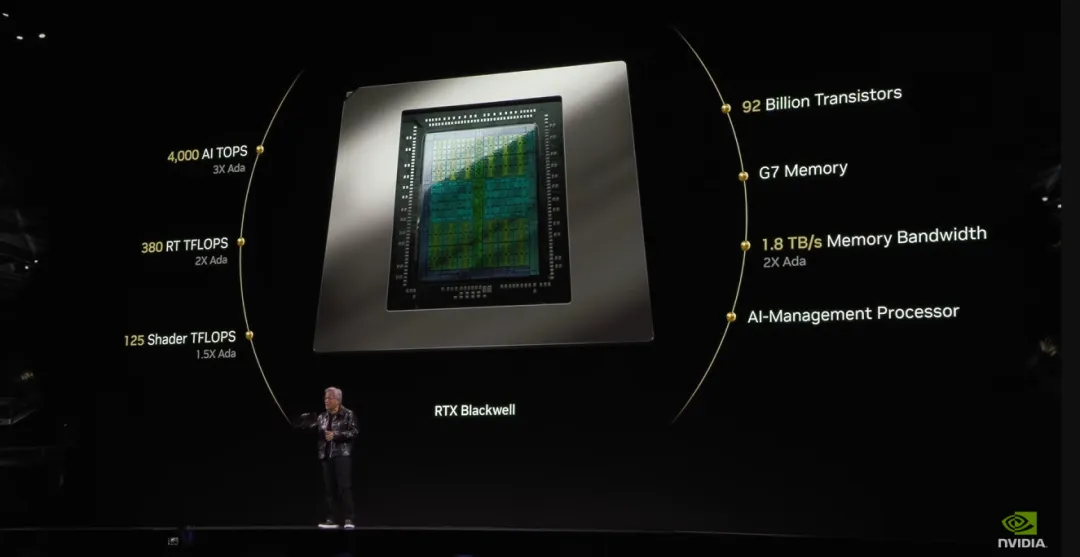

Nvidia's Blackwell-architecture AI accelerators have been out for some time, and people have been waiting for consumer GPUs on the new architecture. Today Nvidia released the whole lineup at once.

At CES, Huang proudly took the stage holding an RTX 5090 graphics card.

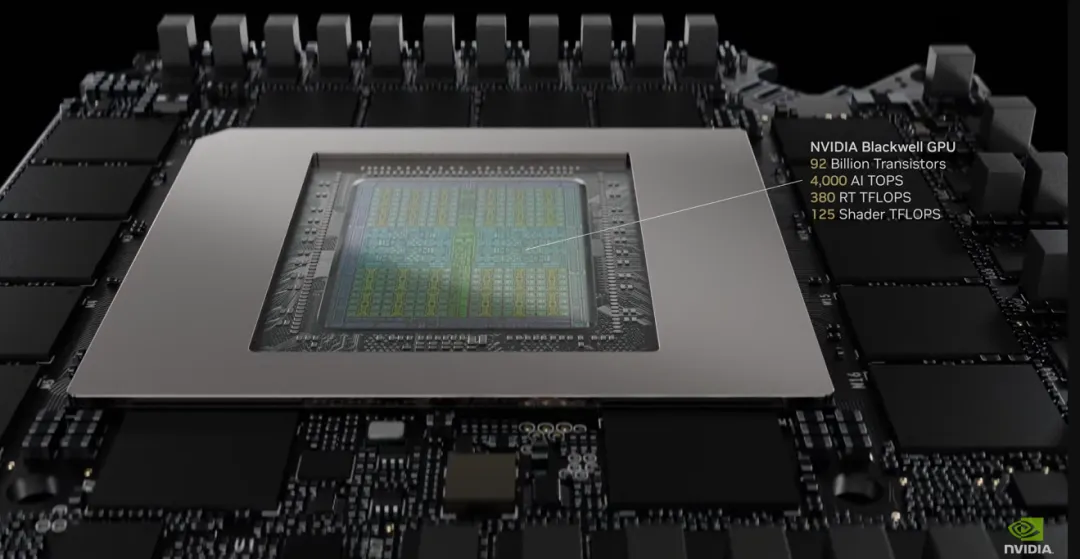

In terms of specifications, the Blackwell-based RTX 5090 has 92 billion transistors and delivers 3,352 AI TOPS (trillion operations per second), 380 RT TFLOPS (trillion floating-point operations per second for ray tracing), and 125 shader TFLOPS.

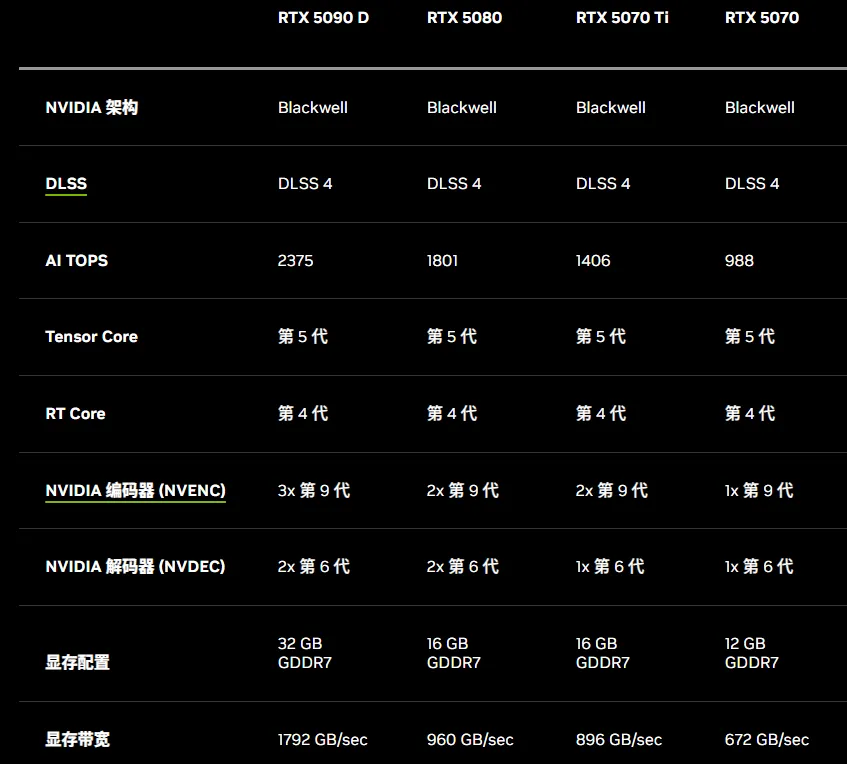

The RTX 5090 (and 5090D) has 32 GB of GDDR7 video memory on a 512-bit memory bus, 21,760 CUDA cores, and a power draw of 575 W. More detailed specifications are shown below:

The RTX 5090 is the fastest GeForce RTX GPU to date, delivering twice the performance of the RTX 4090 thanks to Blackwell architecture innovations and DLSS 4.

There is more new technology as well. For the first time, Nvidia has introduced multi-frame generation, which raises frame rates by using AI to generate up to three additional frames for each rendered frame. Working together with the rest of the DLSS technology suite, DLSS 4 improves performance by up to 8x over traditional rendering, while NVIDIA Reflex technology keeps the game responsive.
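The frame-rate arithmetic behind multi-frame generation can be sketched roughly as follows. This is an idealized illustration, not Nvidia's implementation: the function name `displayed_fps` and the 30 fps input are hypothetical, and the model assumes each rendered frame is followed by a fixed number of generated frames at no extra cost.

```python
def displayed_fps(rendered_fps: float, generated_per_rendered: int) -> float:
    """Idealized displayed frame rate when every rendered frame is followed
    by `generated_per_rendered` AI-generated frames (overhead is ignored)."""
    if rendered_fps < 0 or generated_per_rendered < 0:
        raise ValueError("inputs must be non-negative")
    return rendered_fps * (1 + generated_per_rendered)


# Hypothetical example: a game that renders 30 frames per second.
for generated in (0, 1, 3):
    print(generated, displayed_fps(30.0, generated))
```

Under this simple model, three generated frames per rendered frame account for at most a 4x multiplier on their own; the quoted figure of up to 8x also depends on the rest of the DLSS suite.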

DLSS 4 also brings the graphics industry's first real-time application of the Transformer model architecture. The Transformer-based DLSS ray reconstruction and super-resolution models use 2x more parameters and 4x more compute to deliver greater stability, less ghosting, finer detail, and better anti-aliasing in game scenes. On launch day, more than 75 games and applications will support DLSS 4 on RTX 50 series GPUs.

At the same time, NVIDIA Reflex 2 introduces Frame Warp, which reduces latency by updating the rendered frame with the latest input just before it is sent to the display. Reflex 2 cuts latency by up to 75%, giving players a competitive edge in multiplayer games and making single-player games more responsive.

Blackwell also brings AI into shaders. Twenty-five years ago, NVIDIA introduced GeForce 3 and programmable shaders, setting the stage for two decades of graphics innovation, including pixel shading, compute shading, and real-time ray tracing. This time, NVIDIA introduced RTX Neural Shaders, which bring small AI networks into programmable shaders to unlock film-quality materials, lighting, and more in real-time games.

Rendering game characters is one of the hardest tasks in real-time graphics. RTX Neural Faces takes a simple rasterized face and 3D pose data as input and uses generative AI to render a temporally stable, high-quality digital face in real time.

RTX Neural Faces is complemented by new RTX technologies for ray-traced hair and skin. Together with the new RTX Mega Geometry, which allows up to 100x more ray-traced triangles in a scene, they promise a huge leap in realism for game characters and environments.

Nvidia's official Chinese website also lists the specifications of the RTX 50 series.

When unveiling the prices, Huang played a trick: remember the price of the RTX 4090? Now you can buy an RTX 5070 for $549 (4,599 yuan for the official China version) and get RTX 4090 performance.

On the RTX 5090, however, the price has gone up again this generation, to $1,999 (the RTX 4090 was $1,599). The price of the RTX 5090D has also been announced: it starts at 16,499 yuan, and the RTX 5080 at 8,299 yuan.

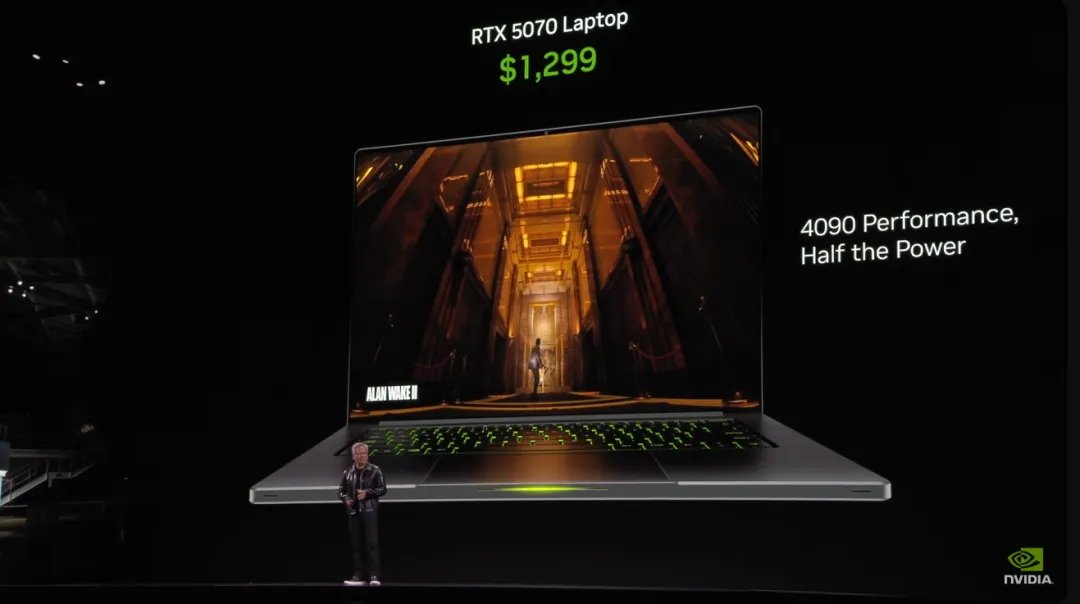

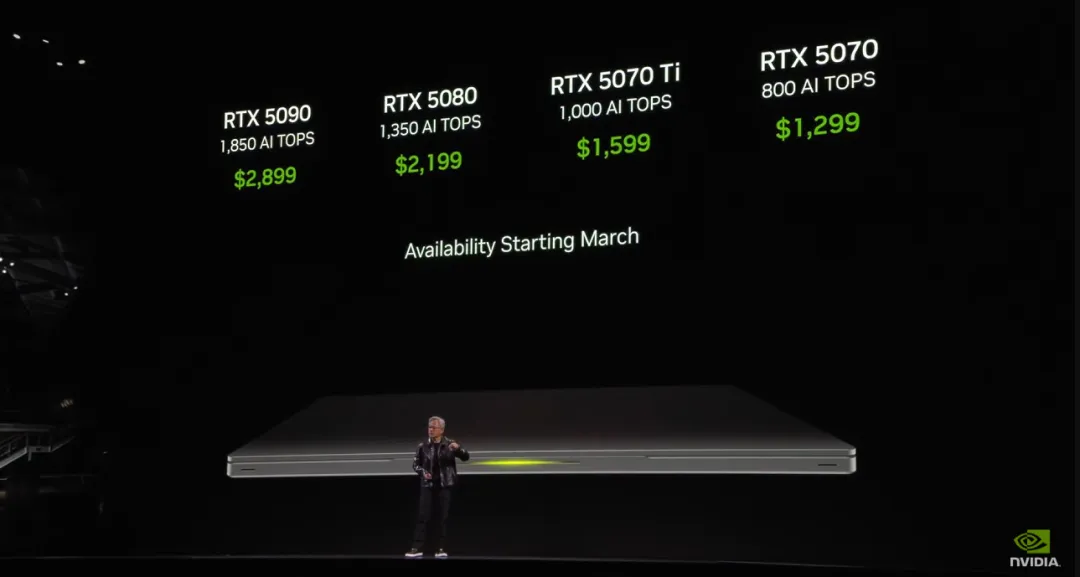

On the mobile side, the RTX 50 series also brings considerable performance gains, and Huang made a point of pulling out an RTX 5070 laptop. Mobile GPUs will also arrive soon this year.

Prices for more mobile models (complete laptops) can be seen in the following figure:

However, Huang did not go through the baseline performance of each model in detail, so we will have to wait for hands-on testing. Devices with RTX 50 series GPUs are expected to be available as early as March.

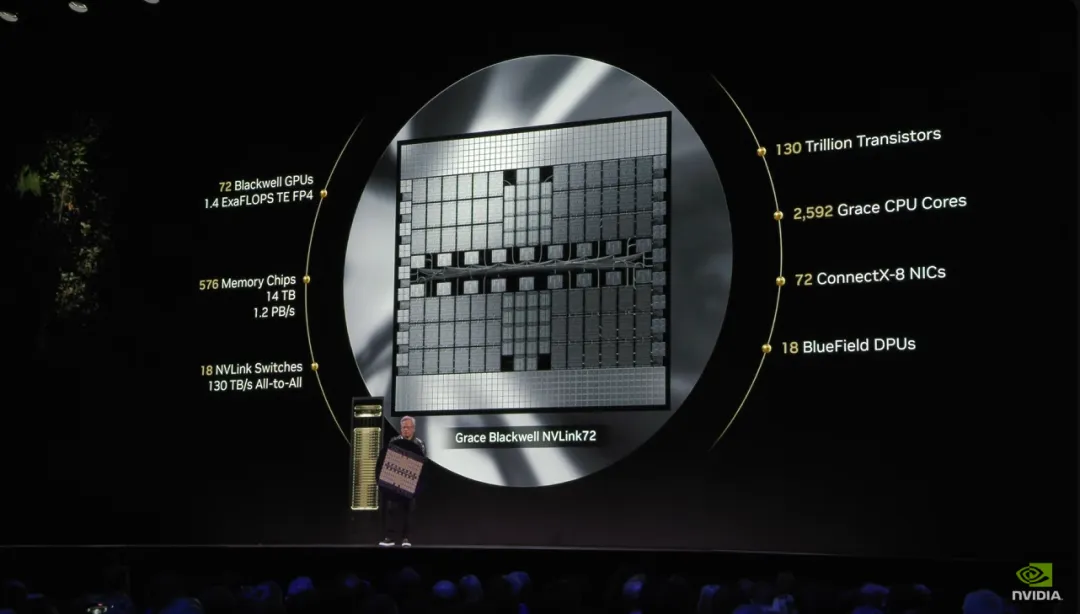

Before continuing, Huang struck a pose: "All the world's Internet traffic can be processed through these chips."

He was holding a large wafer carrying 72 Blackwell GPUs with 1.4 exaFLOPS of AI floating-point performance: the Grace Blackwell NVLink72.

Blackwell delivers four times the performance per watt of its predecessor.
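For a sense of scale, the quoted totals can be turned into a rough per-GPU figure. The sketch below is only back-of-the-envelope arithmetic: it assumes the 1.4 exaFLOPS is split evenly across the 72 GPUs, and the function name `per_gpu_pflops` is hypothetical. The article does not state the numeric precision behind the figure.

```python
EXA = 10**18   # FLOPS in one exaFLOPS
PETA = 10**15  # FLOPS in one petaFLOPS


def per_gpu_pflops(total_exaflops: float, gpu_count: int) -> float:
    """Average throughput per GPU in petaFLOPS, assuming an even split."""
    if gpu_count <= 0:
        raise ValueError("gpu_count must be positive")
    return total_exaflops * EXA / gpu_count / PETA


# Figures quoted in the keynote: 1.4 exaFLOPS across 72 GPUs.
print(round(per_gpu_pflops(1.4, 72), 1))  # about 19.4 PFLOPS per GPU
```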

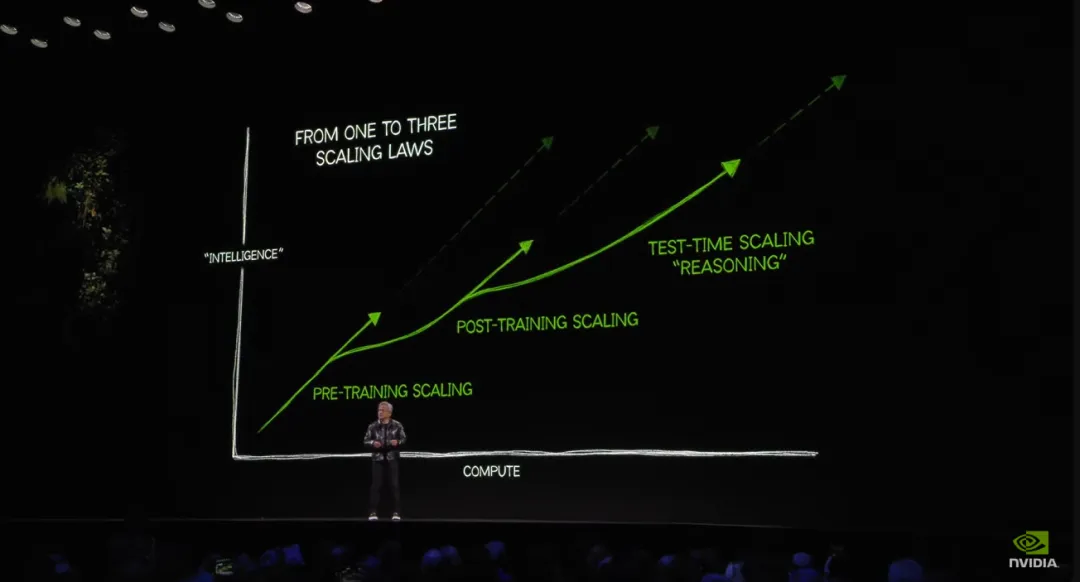

We know that large models follow scaling laws, and there has recently been lively debate in the AI field about whether scaling has run its course.

In Nvidia's view, scaling laws still hold and now extend along three dimensions: pre-training, post-training, and test-time (inference) scaling. The new RTX graphics cards benefit from the same trend, delivering better real-time visuals.

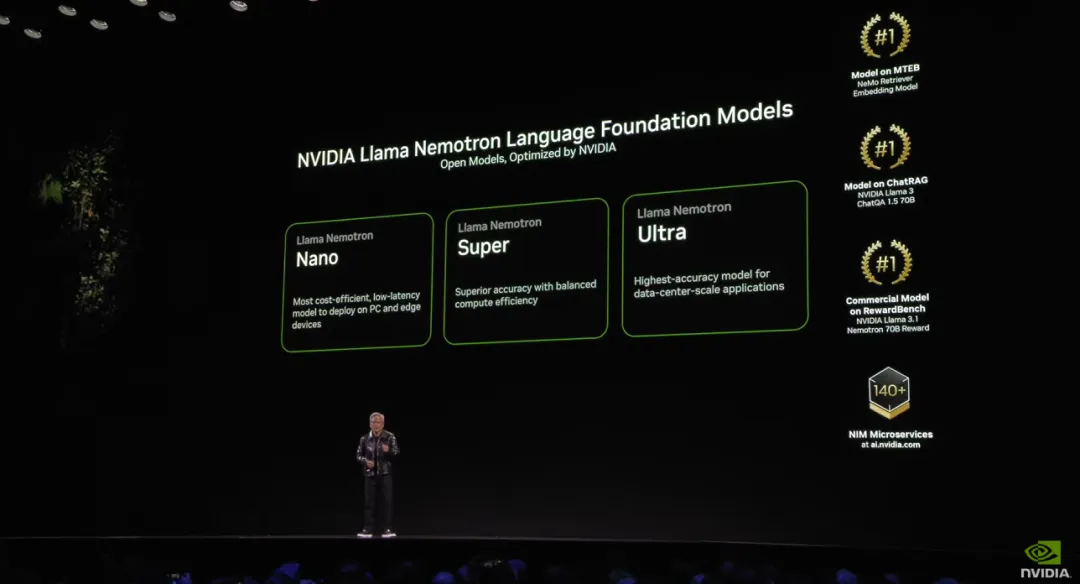

Nvidia announced a family of Llama-based models, the Llama Nemotron Nano, Super, and Ultra, covering everything from PCs and edge devices to large data centers.

Nvidia also released foundation models that run on RTX AI PCs and support tasks such as digital humans, content creation, productivity, and development.

These models are provided in the form of NIM microservices. Nvidia AI Blueprints, built on NIM microservices, provide easy-to-use, pre-configured reference workflows.

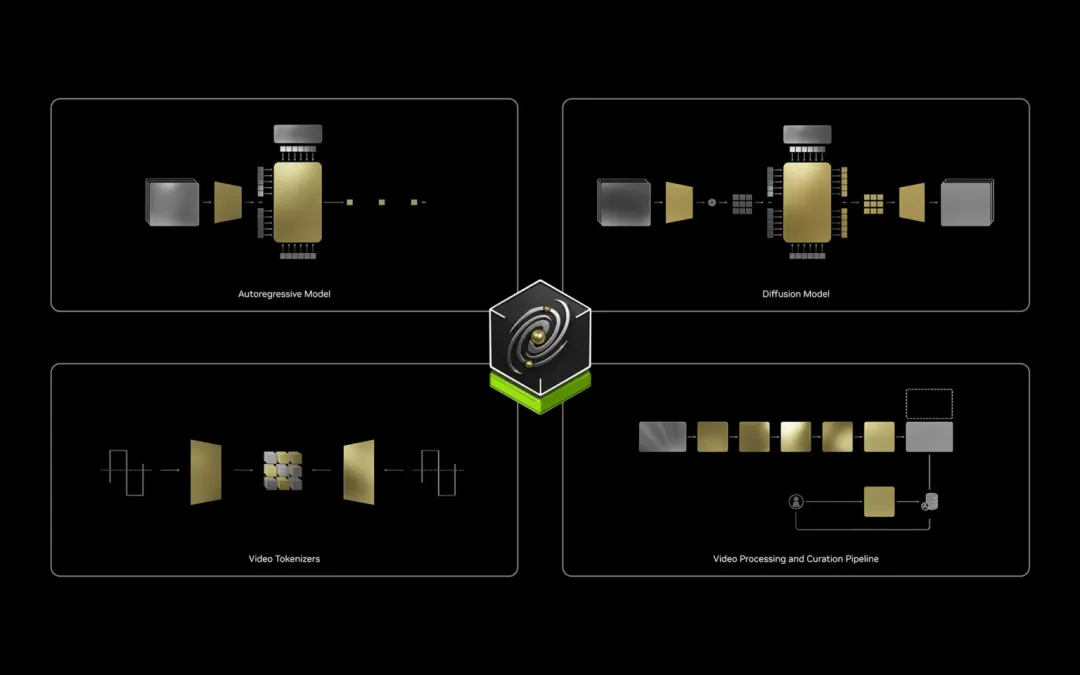

The next frontier of AI is physical AI, and new concepts such as embodied intelligence and spatial intelligence have emerged. At CES, Nvidia announced the Cosmos platform, which comprises state-of-the-art generative world foundation models, advanced tokenizers, guardrails, and an accelerated video processing pipeline. Cosmos aims to advance the development of physical AI systems such as autonomous vehicles (AVs) and robots.

Nvidia says physical AI models are expensive to develop and require large amounts of real-world data and testing. The Cosmos World Foundation Models (WFMs) give developers an easy way to generate large amounts of photorealistic, physics-based synthetic data to train and evaluate their existing models. Developers can also build custom models by fine-tuning the Cosmos WFMs.

The Cosmos models are openly available, and here are the relevant addresses:

Nvidia says a number of leading robotics and automotive companies have become the first users of Cosmos, including 1X, Agile Robots, Agility, Uber, and others.

Huang said: "The ChatGPT moment for robotics is upon us. As with large language models, world foundation models are critical to driving robotics and self-driving car development, but not all developers have the expertise and resources to train their own world models. We created Cosmos to make physical AI broadly accessible and to put general-purpose robotics within reach of every developer."

In his talk, Huang also demonstrated several ways to use the Cosmos models, including video search and understanding, physics-based photorealistic synthetic data generation, physical AI model development and evaluation, and using Cosmos together with Omniverse to generate possible futures.

Advanced world model development tools

Building physical AI models requires petabytes of video data and tens of thousands of hours of compute time to process, curate, and label that data. To help cut the enormous cost of data curation, training, and model customization, Cosmos offers the following features:

Cosmos is already being used by pioneers across the physical AI industry. AI and humanoid robotics company 1X launched its 1X World Model Challenge dataset using the Cosmos Tokenizer. Waabi, a pioneer in generative AI for the physical world starting with autonomous vehicles, is evaluating Cosmos for data curation in autonomous driving software development and simulation.

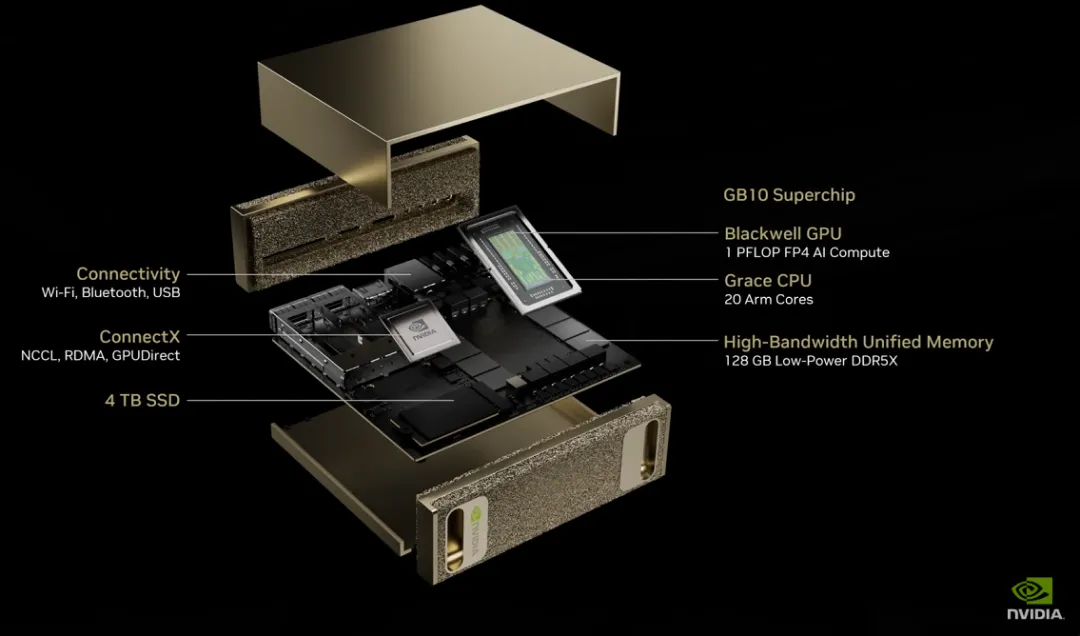

Nvidia also introduced Project DIGITS, positioned as the follow-up to its earlier AI supercomputer, the DGX-1. In short, it is smaller and more powerful. NVIDIA describes it as "a personal AI supercomputer for AI researchers, data scientists, and students around the world, giving them access to the power of the NVIDIA Grace Blackwell platform."

Project DIGITS is powered by the new Nvidia GB10 Grace Blackwell Superchip, which delivers a petaflop of AI computing performance for prototyping, fine-tuning, and running large AI models. With Project DIGITS, users can develop models and run inference on their own desktop systems, then seamlessly deploy them on accelerated cloud or data center infrastructure.

The GB10 Superchip delivers petaflop-scale, energy-efficient AI performance

The GB10 Superchip is an SoC based on the Grace Blackwell architecture that delivers up to 1 PFLOPS of AI performance at FP4 precision.

The GB10 features a Blackwell GPU with the latest-generation CUDA cores and fifth-generation Tensor Cores, connected to a high-performance Grace CPU via the NVLink-C2C chip-to-chip interconnect. The CPU includes 20 power-efficient cores built on the Arm architecture. Nvidia said MediaTek was also involved in the design of the GB10.

The GB10 Superchip lets Project DIGITS deliver powerful performance from a standard power outlet. Each Project DIGITS has 128 GB of RAM and up to 4 TB of NVMe storage. With this supercomputer, developers can run large language models with up to 200 billion parameters, accelerating AI innovation. In addition, using NVIDIA ConnectX networking, two Project DIGITS AI supercomputers can be linked to run models with up to 405 billion parameters.
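A rough way to see why these parameter counts line up with the memory sizes is to estimate weight storage at 4 bits per parameter. The sketch below is a simplification under stated assumptions, not Nvidia's sizing method: it counts only the weights (no KV cache, activations, or operating system overhead), treats 1 GB as 10^9 bytes, and uses hypothetical helper names.

```python
def weight_footprint_gb(params_billion: float, bits_per_param: int = 4) -> float:
    """Approximate storage for model weights alone, in GB (10**9 bytes)."""
    total_bytes = params_billion * 1e9 * bits_per_param / 8
    return total_bytes / 1e9


def weights_fit(params_billion: float, units: int,
                ram_per_unit_gb: float = 128.0, bits_per_param: int = 4) -> bool:
    """True if the weights alone fit in the combined RAM of `units` machines.
    Assumption: memory across linked units can be pooled for one model."""
    needed = weight_footprint_gb(params_billion, bits_per_param)
    return needed <= units * ram_per_unit_gb


print(weight_footprint_gb(200))   # 100.0 GB of weights at 4 bits each
print(weight_footprint_gb(405))   # 202.5 GB of weights at 4 bits each
print(weights_fit(200, units=1))  # True: 100 GB fits in 128 GB
print(weights_fit(405, units=1))  # False: 202.5 GB exceeds 128 GB
print(weights_fit(405, units=2))  # True: 202.5 GB fits in 256 GB
```

Under these assumptions, a 200-billion-parameter model leaves some headroom on a single unit, while a 405-billion-parameter model needs the combined memory of two.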

Put AI supercomputing at your fingertips

With the Grace Blackwell architecture, enterprises and researchers can prototype, fine-tune, and test models on a local Project DIGITS system running the Linux-based NVIDIA DGX OS, then seamlessly deploy them to NVIDIA DGX Cloud, accelerated cloud instances, or data center infrastructure.

This lets developers prototype AI on Project DIGITS and then scale out on cloud or data center infrastructure using the same Grace Blackwell architecture and the NVIDIA AI Enterprise software platform.

In addition, Project DIGITS users have access to an extensive library of NVIDIA AI software for experimentation and prototyping, including software development kits, orchestration tools, frameworks, and models available in the NVIDIA NGC catalog and on the NVIDIA Developer Portal. Developers can fine-tune models with the NVIDIA NeMo framework, accelerate data science with the NVIDIA RAPIDS libraries, and run common tools such as PyTorch, Python, and Jupyter notebooks.

Nvidia says it and its top partners will launch Project DIGITS in May, starting at $3,000.

Those are the key announcements from Jensen Huang today. What do you think?
