
#News ·2025-01-07

Breaking: Tim Brooks, a core author of Sora, will lead Google's world model team!

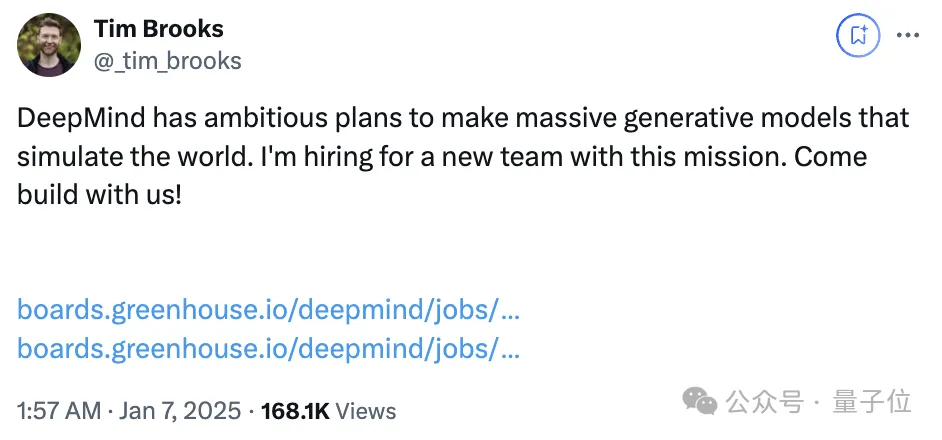

Tim Brooks, one of the two co-leads of Sora and one of the authors of DALL-E 3, just posted an enthusiastic tweet inviting top talent from around the world to join him:

DeepMind has ambitious plans to build massive world models.

I'm recruiting for a new team with that mission. Come join us!

Brooks is a rising star in the AI community who only received his PhD from UC Berkeley in 2023.

In January 2023, Brooks began to lead the Sora research team; Sora debuted in February 2024.

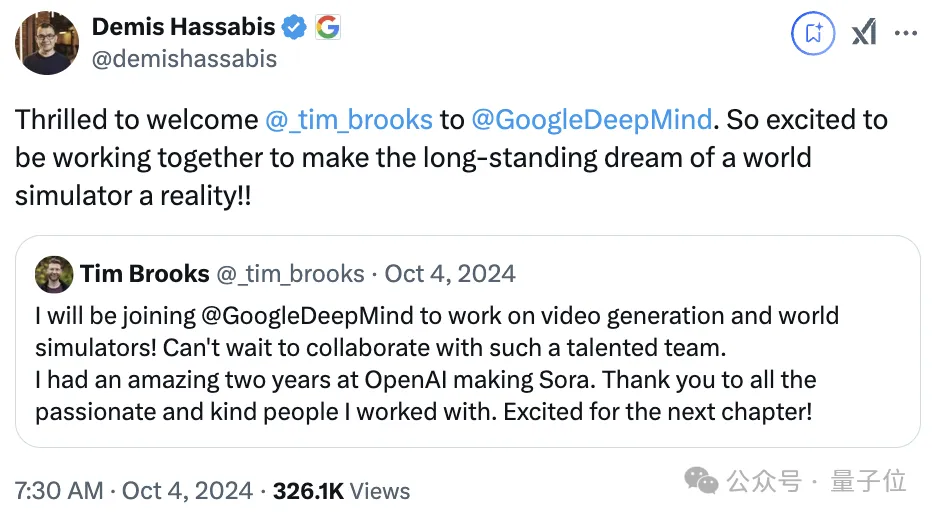

Then, in October last year, Brooks unexpectedly announced that he was leaving OpenAI to join Google DeepMind.

Responding to Brooks' announcement at the time, Demis Hassabis, CEO of Google DeepMind, said Brooks would help "bring the long-held dream of a world simulator to life."

Now Brooks is set to lead Google's world model team, recruiting for it and building it from scratch.

Some readers also spotted a telling detail in the official tweet:

"Models" is plural, which means Google is going to build not one world model, but many!

The replies under the official tweet are almost all congratulations from netizens.

Tim Brooks' LinkedIn and Twitter profiles currently read:

- video gen + world sim at DeepMind

- ex-OpenAI Sora Lead

As for "video gen": Brooks joined Google DeepMind shortly before the release of the critically acclaimed Veo 2.

Investors at a16z marveled at Veo 2, saying they could hardly believe how far video models had progressed in less than a year.

Veo 2 is currently available on VideoFX, and it's expected to make its way to platforms like YouTube Shorts next year, opening up entirely new possibilities for content creators.

"World sim", in turn, is presumably the responsibility of the new team that is now being recruited.

According to the job listing Brooks linked, the new team will work with Google's Gemini, Veo, and Genie teams to tackle critical new problems and scale world models to the highest levels of compute.

The team will build tools for "real-time interactive generation" on top of its world models, and will also explore how to integrate world models with existing multimodal models such as Gemini.

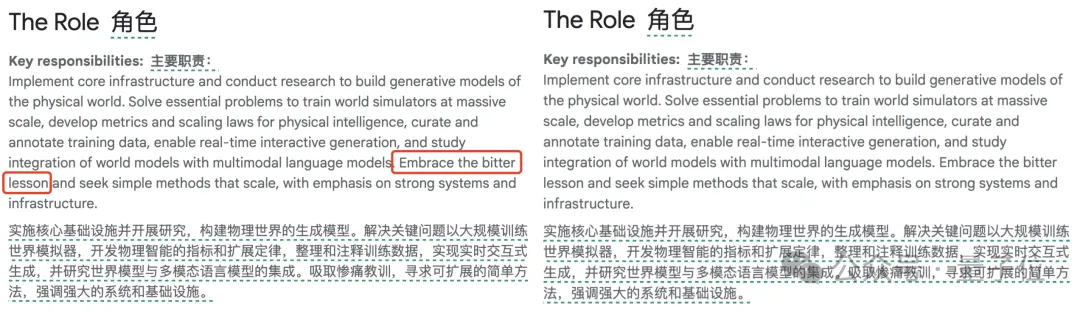

According to the official listing, the Google world model team is mainly hiring for two kinds of roles, research scientists and engineers, both of which require a master's or doctoral degree:

(Note: the specific salary range for the applicant's preferred location can be shared during the application process.)

△ Requirements of scientists (left) and engineers (right)

Google also stated that it believes scaling pre-training on video and multimodal data is on the critical path to AGI.

World models will power many areas, such as visual reasoning and simulation, planning for embodied agents, and real-time interactive entertainment.

What is Google's (current) understanding of the world model?

Genie 2, released late last year, offers a glimpse.

On December 5, 2024, Google released Genie 2, an autoregressive latent diffusion model trained on a large video dataset.

From a single image, Genie 2 can generate an endless variety of 3D game worlds, and the generated worlds respond to keyboard and mouse input so they can be played and controlled.

Compared with earlier work, Genie 2 has long-horizon memory: parts of the world that leave the player's view are rendered accurately again when they come back into view. The generated worlds can also contain other AI-controlled NPCs that interact with the player's character in complex ways.

But play is not the most important goal.

Genie 2 can be used to train and evaluate embodied agents: by creating rich and diverse environments, it can generate evaluation tasks that the agent has never seen during training.

While this research is still at an early stage and both agent capabilities and environment generation leave plenty of room for improvement, Google says:

We believe Genie 2 is the path to solving a structural problem of training embodied agents safely while achieving the breadth and generality required to progress towards AGI.

△ Genie 2 can be used to train an agent to open the correct door when prompted

In 2024, AI kept breaking new ground in multiple directions, and technologies such as video generation, world models, embodied intelligence, and spatial intelligence pushed forward humanity's exploration of AGI.

World models in particular are being chased by many startups and large tech companies.

No wonder that, after seeing Tim Brooks' rousing recruitment post, a netizen on Reddit remarked:

It's amazing: if this news had come out five years ago, our jaws would have dropped.

But for now, we're treating it like a normal Tuesday.

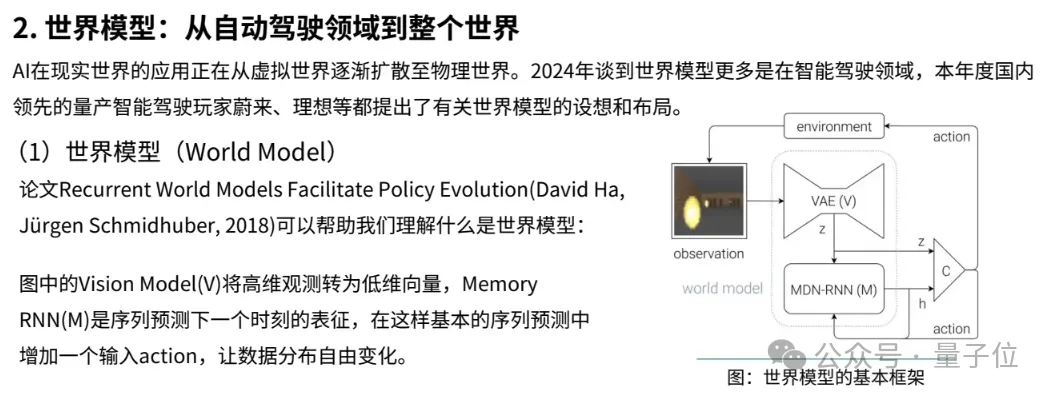

In fact, world models embody both the frustration and the hope that many AI researchers have long felt about model-based RL:

If the model is not accurate enough, a policy trained entirely inside the model will not work well in reality.

But with an accurate world model, an agent can do its trial and error inside the model and still find the optimal decisions for the real world.
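To make this trade-off concrete, here is a toy sketch in Python, assuming a hypothetical one-dimensional world whose true dynamics are x' = x + a, with the goal of reaching x = 5. It is not Google's method, and it swaps in planning (model-predictive control by exhaustive search over short action sequences) rather than reinforcement learning proper; all names (`true_step`, `make_model`, `plan`, `run`) are illustrative. The agent scores candidate plans only inside its world model, then executes just the first action in the real environment and re-plans.

```python
import itertools
from typing import Callable, Sequence, Tuple

GOAL = 5.0                  # hypothetical target position
ACTIONS = (-1.0, 0.0, 1.0)  # hypothetical discrete action set

Step = Callable[[float, float], float]


def true_step(x: float, a: float) -> float:
    """Real environment dynamics: the agent acts here but never plans here."""
    return x + a


def make_model(gain: float) -> Step:
    """Hypothetical learned world model; gain != 1.0 means the model is wrong."""
    def model_step(x: float, a: float) -> float:
        return x + gain * a
    return model_step


def rollout_cost(step: Step, x: float, actions: Sequence[float]) -> float:
    """Imagined trial and error: summed squared distance to GOAL along a rollout."""
    cost = 0.0
    for a in actions:
        x = step(x, a)
        cost += (x - GOAL) ** 2
    return cost


def plan(model: Step, x: float, horizon: int = 3) -> Tuple[float, ...]:
    """Score every action sequence inside the model only; ties go to the first found."""
    return min(itertools.product(ACTIONS, repeat=horizon),
               key=lambda seq: rollout_cost(model, x, seq))


def run(model: Step, x0: float = 0.0, steps: int = 8, horizon: int = 3) -> float:
    """Re-plan every step, but execute only the first planned action for real."""
    x = x0
    for _ in range(steps):
        x = true_step(x, plan(model, x, horizon)[0])
    return x


if __name__ == "__main__":
    print(run(make_model(1.0)))  # accurate model: 5.0
    print(run(make_model(2.0)))  # biased model: 4.0
```

With the accurate model (`gain=1.0`) the agent reaches the goal. With a biased model that believes each action moves twice as far (`gain=2.0`), the agent stalls one unit short at x = 4: the model predicts that stepping forward would overshoot to 6, which looks no better than staying put, so the plan found "optimal" inside the model is suboptimal in reality.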

According to the QbitAI Think Tank's "Top Ten AI Trends for 2024" report, researchers in world modeling are working to develop models that can simulate and understand the real world; the core idea is that, by learning from large amounts of data, the model naturally develops new behaviors and decision-making capabilities.

TechCrunch notes that, besides tech giants such as Google, the many players chasing world models include a number of high-profile startups.

Examples include Fei-Fei Li's World Labs (though it currently focuses more on spatial intelligence), as well as Decart and Odyssey.

There is broad agreement in the field that world models could be used to create interactive media such as video games and films, as well as to run realistic simulations, for example training environments for robots and other embodied intelligence.

For now, beyond the fact that the technology has not yet reached the level researchers hope for, world models face several obstacles.

One is copyright: some world models appear to have been trained on gameplay footage.

This brings us to the biggest advantage of Google's new world model team: Google owns YouTube, with its hundreds of millions of hours of gameplay video.

Another obstacle is opposition from the content creators involved.

It's worth noting, though, that some startups, such as Odyssey, promise to work with creatives in the 3D content space rather than replace them.

What Google will do remains to be seen.

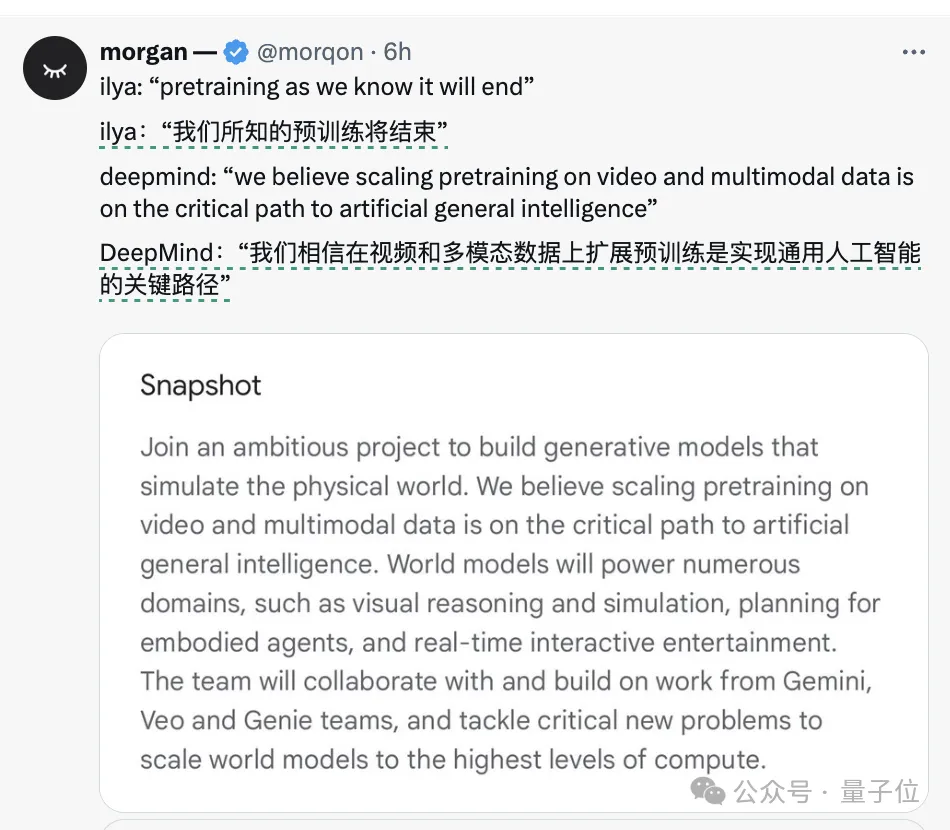

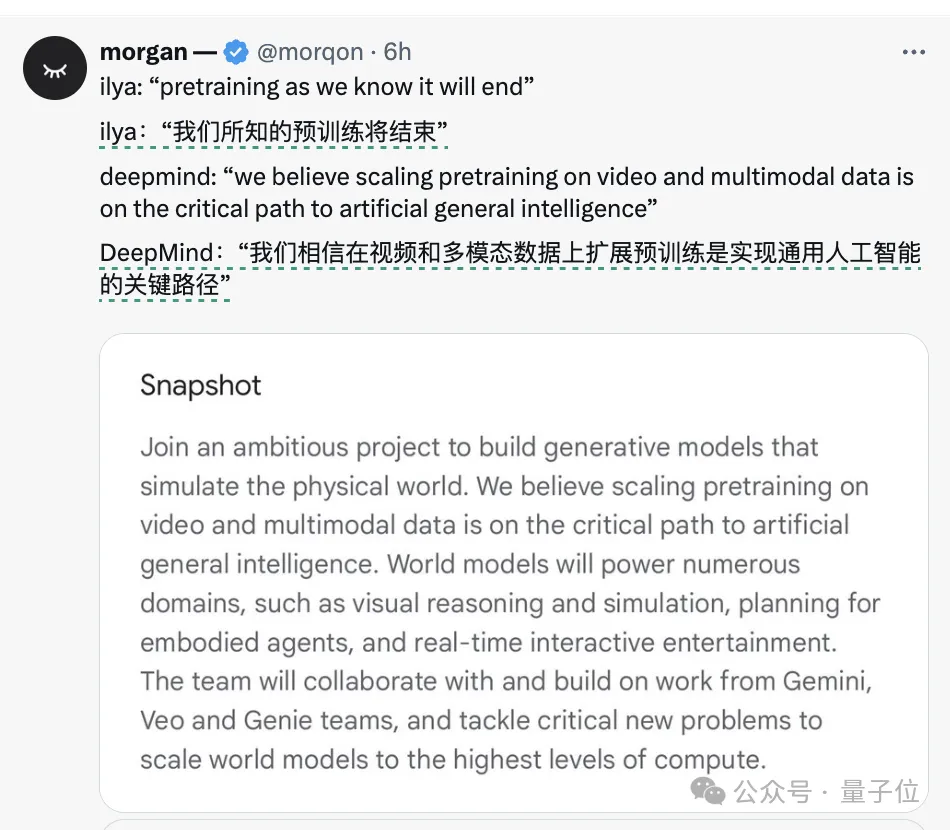

Finally, one more highlight that people spotted in the job description for Google's new world model team.

Google DeepMind wrote:

We believe scaling pretraining on video and multimodal data is on the critical path to artificial general intelligence.

Focus and scale pretraining.

Yet not long before, at NeurIPS, Ilya Sutskever publicly declared:

The era of pre-training is coming to an end!

Of course, one could read this as Ilya referring specifically to the pre-training era of large language models, while Google DeepMind is talking specifically about pre-training world models.

But who knows? Maybe not (tongue firmly in cheek).

Reference links:

[1] https://techcrunch.com/2025/01/06/google-is-forming-a-new-team-to-build-ai-that-can-simulate-the-physical-world/

[2] https://techcrunch.com/2024/10/03/a-co-lead-on-sora-openais-video-generator-has-left-for-google/

[3] https://www.linkedin.com/in/timothyebrooks/

[4] https://x.com/_tim_brooks/status/1876327325916447140

[5] https://www.reddit.com/r/singularity/comments/1hvbzyp/google_is_forming_a_new_team_to_build_ai_that_can/
