# News · 2025-01-07

This article is reprinted with authorization from the AIGC Studio public account; please contact the original source before reprinting.

Beihang University has presented MV-Adapter, the first versatile plug-and-play adapter for multi-view image generation. It enhances text-to-image (T2I) models and their derivatives without changing the original network structure or feature space. MV-Adapter achieves multi-view image generation at 768 resolution on SDXL and shows strong adaptability and versatility. It can also be extended to arbitrary-view generation, opening the door to a wider range of applications.

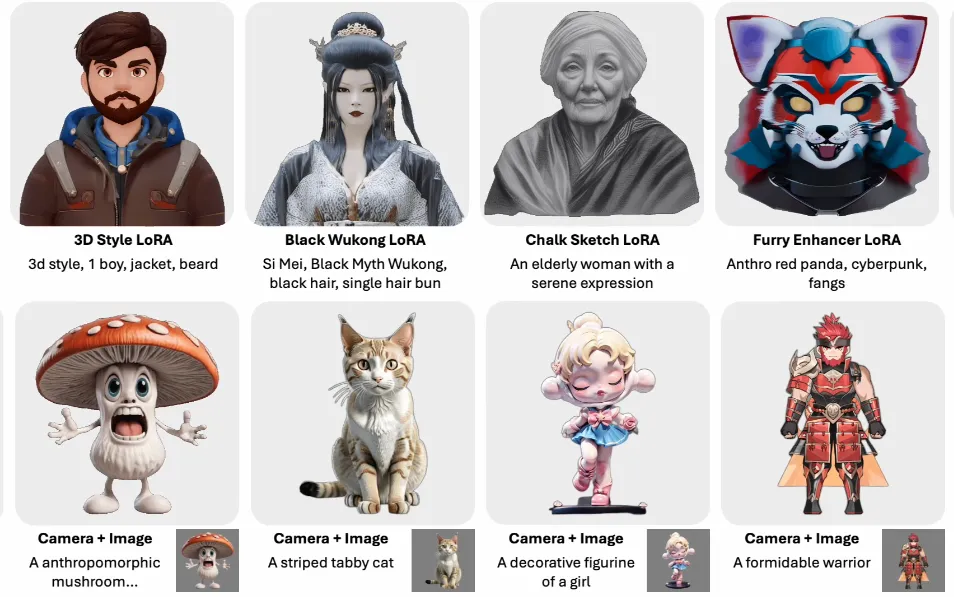

The first row of the figure above shows results of combining MV-Adapter with personalized T2I models, distilled few-step T2I models, and ControlNet, demonstrating its adaptability. The second row shows results under various control signals, including view-guided and geometry-guided generation from text or image input, demonstrating its versatility.

MV-Adapter: Easily generate consistent images from multiple views

Existing multi-view image generation methods often make invasive modifications to pre-trained text-to-image (T2I) models and require full fine-tuning, which leads to the following issues:

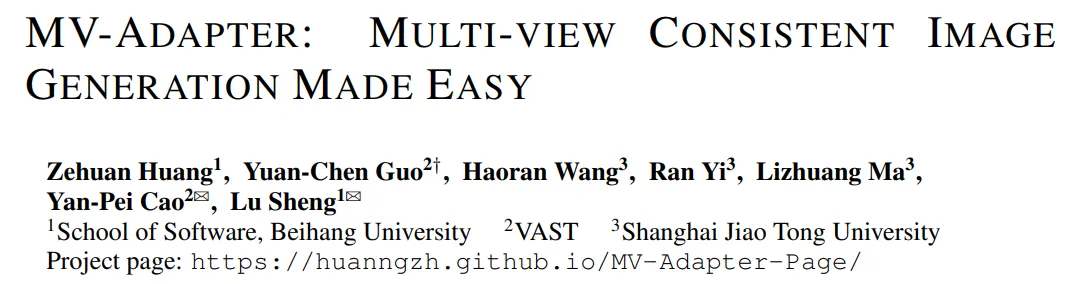

The paper presents the first adapter-based solution for multi-view image generation and introduces MV-Adapter, a versatile plug-and-play adapter that enhances T2I models and their derivatives without changing the original network structure or feature space. Because it updates fewer parameters than full fine-tuning, MV-Adapter trains efficiently and preserves the prior knowledge embedded in the pre-trained model, reducing the risk of overfitting.
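The efficiency argument rests on a simple idea: the pre-trained weights stay frozen and only a small, separate module is trained. The sketch below illustrates that idea with a generic bottleneck adapter (a swapped-in textbook technique, not MV-Adapter's actual attention-based layers) on a toy linear layer. All class and function names are hypothetical. Because the adapter's output projection starts at zero, the adapted layer initially reproduces the base layer exactly, one common way to keep a pre-trained model's behavior intact when an adapter is first attached.

```python
import random


def matvec(W, x):
    """Multiply matrix W (a list of rows) by vector x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def rand_matrix(rows, cols, rng):
    return [[rng.uniform(-1, 1) for _ in range(cols)] for _ in range(rows)]


class FrozenBaseLayer:
    """Hypothetical stand-in for one pre-trained T2I layer; never updated."""

    def __init__(self, dim, rng):
        self.W = rand_matrix(dim, dim, rng)

    def __call__(self, x):
        return matvec(self.W, x)


class BottleneckAdapter:
    """Generic trainable down/up projection; `up` starts at zero (a no-op)."""

    def __init__(self, dim, bottleneck, rng):
        self.down = rand_matrix(bottleneck, dim, rng)
        self.up = [[0.0] * bottleneck for _ in range(dim)]

    def __call__(self, x):
        return matvec(self.up, matvec(self.down, x))

    def num_params(self):
        # Count every entry in both projection matrices.
        return sum(len(row) for row in self.down + self.up)


def adapted_forward(base, adapter, x):
    # The base output is left untouched; the adapter only adds a residual term.
    return [b + a for b, a in zip(base(x), adapter(x))]


rng = random.Random(0)
dim, bottleneck = 64, 8
base = FrozenBaseLayer(dim, rng)
adapter = BottleneckAdapter(dim, bottleneck, rng)
x = [rng.uniform(-1, 1) for _ in range(dim)]

assert adapted_forward(base, adapter, x) == base(x)  # identical at initialization
print(adapter.num_params(), dim * dim)  # prints: 1024 4096
```

In this toy the adapter holds a quarter as many parameters as the single frozen layer it wraps; the article does not state the real ratio for MV-Adapter on SDXL, and these numbers do not imply one.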

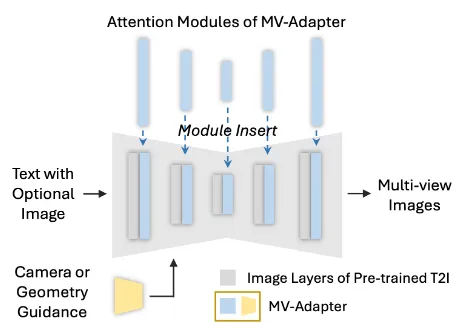

To model 3D geometric knowledge effectively within the adapter, the paper introduces two designs, duplicated self-attention layers and a parallel attention architecture, which let the adapter inherit the strong priors of the pre-trained model while learning new 3D knowledge. In addition, it proposes a unified condition encoder that seamlessly integrates camera parameters and geometric information, supporting both text- and image-based 3D generation and texturing.
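The repeated (duplicated) self-attention and parallel architecture can be illustrated with a minimal pure-Python sketch. It assumes, as an illustration rather than a reproduction of the paper's code, that the new multi-view attention branch is (1) initialized as a copy of a pre-trained self-attention layer, so it inherits that layer's prior, (2) fed the same input as that layer rather than its output, and (3) given a zero-initialized output projection, so the block's output is unchanged until training moves it. For simplicity the branch attends jointly over every token of every view; practical implementations may restrict which tokens attend to each other to save compute. `Attention` and `attention_block` are hypothetical names.

```python
import copy
import math
import random


def matvec(W, x):
    """Multiply matrix W (a list of rows) by vector x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def softmax(scores):
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


class Attention:
    """Single-head self-attention over a list of token vectors."""

    def __init__(self, dim, rng):
        def rand():
            return [[rng.uniform(-0.5, 0.5) for _ in range(dim)] for _ in range(dim)]

        self.Wq, self.Wk, self.Wv, self.Wo = rand(), rand(), rand(), rand()
        self.scale = 1.0 / math.sqrt(dim)

    def __call__(self, tokens):
        q = [matvec(self.Wq, t) for t in tokens]
        k = [matvec(self.Wk, t) for t in tokens]
        v = [matvec(self.Wv, t) for t in tokens]
        out = []
        for qi in q:
            w = softmax([self.scale * sum(a * b for a, b in zip(qi, kj)) for kj in k])
            mixed = [sum(wj * vj[d] for wj, vj in zip(w, v)) for d in range(len(qi))]
            out.append(matvec(self.Wo, mixed))
        return out


def attention_block(views, spatial_attn, mv_attn=None):
    """views: list of views, each a list of token vectors of equal length."""
    n_tok = len(views[0])
    # Original T2I path: self-attention inside each view independently.
    spatial = [spatial_attn(view) for view in views]
    if mv_attn is None:
        mv = [[[0.0] * len(t) for t in view] for view in views]
    else:
        # Parallel branch: attends over all views as one sequence and receives
        # the same input as the spatial branch (not the spatial branch's output).
        joint = mv_attn([t for view in views for t in view])
        mv = [joint[i * n_tok:(i + 1) * n_tok] for i in range(len(views))]
    # Residual sum of the input and both branch outputs.
    return [
        [[x + s + m for x, s, m in zip(xt, st, mt)] for xt, st, mt in zip(xv, sv, mvv)]
        for xv, sv, mvv in zip(views, spatial, mv)
    ]


rng = random.Random(0)
dim = 4
spatial_attn = Attention(dim, rng)  # stands in for a pre-trained layer

# Duplicate the pre-trained layer so the new branch starts from its weights,
# then zero its output projection so it initially contributes nothing.
mv_attn = copy.deepcopy(spatial_attn)
mv_attn.Wo = [[0.0] * dim for _ in range(dim)]

views = [[[rng.uniform(-1, 1) for _ in range(dim)] for _ in range(3)] for _ in range(2)]
assert attention_block(views, spatial_attn, mv_attn) == attention_block(views, spatial_attn)
```

The final assertion checks property (3): with the zero output projection, adding the multi-view branch leaves the block's output exactly as the original T2I layer would produce it.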

MV-Adapter achieves multi-view generation at 768 resolution on Stable Diffusion XL (SDXL) and demonstrates both adaptability and versatility. It can also be extended to arbitrary-view generation, enabling a wider range of applications. With its efficiency, adaptability, and versatility, MV-Adapter sets a new quality standard for multi-view image generation and opens up new possibilities.
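Arbitrary-view generation requires telling the network which camera each output view corresponds to. The article does not describe how camera parameters are encoded, so the sketch below assumes one common option, a per-pixel ray map: for every pixel, the world-space origin and unit direction of the camera ray passing through it. The pinhole model, the axis convention, and the helpers `orbit_camera` and `ray_map` are assumptions for illustration.

```python
import math


def normalize(v):
    n = math.sqrt(sum(c * c for c in v))
    return [c / n for c in v]


def orbit_camera(azimuth_deg, radius):
    """Hypothetical helper: a 3x4 camera-to-world matrix [R | t] for a camera on a
    horizontal circle of the given radius, looking at the world origin."""
    a = math.radians(azimuth_deg)
    c, s = math.cos(a), math.sin(a)
    R = [[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]]  # rotation about the y-axis
    center = [-radius * s, 0.0, -radius * c]  # camera sits opposite its forward axis
    return [R[i] + [center[i]] for i in range(3)]


def ray_map(width, height, focal, cam_to_world):
    """Per-pixel (origin, direction) pairs in world space for a pinhole camera.

    Assumed convention: camera +x right, +y down, +z forward; the principal point
    is the image center and `focal` is measured in pixels.
    """
    R = [row[:3] for row in cam_to_world]
    origin = [row[3] for row in cam_to_world]
    cx, cy = width / 2.0, height / 2.0
    grid = []
    for v in range(height):
        row = []
        for u in range(width):
            # Ray through the pixel center, first in camera coordinates...
            d_cam = [(u + 0.5 - cx) / focal, (v + 0.5 - cy) / focal, 1.0]
            # ...then rotated into world coordinates.
            d_world = [sum(R[i][j] * d_cam[j] for j in range(3)) for i in range(3)]
            row.append((origin, normalize(d_world)))
        grid.append(row)
    return grid


# Four views spaced 90 degrees apart, each a tiny 3x3 ray map for illustration.
maps = [ray_map(3, 3, 1.5, orbit_camera(az, 2.0)) for az in (0, 90, 180, 270)]
```

A network could consume such a map as six extra input channels per pixel (three for the origin, three for the direction); because the viewpoint is just another input, new camera positions can be requested at inference time without architectural changes.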

MV-Adapter is a plug-and-play adapter that learns multi-view priors, transfers to derivatives of T2I models without special tuning, and enables T2I models to generate multi-view consistent images under a variety of conditions. At inference time, MV-Adapter, which contains a condition guider (yellow) and decoupled attention layers (blue), can be inserted directly into a personalized or distilled T2I model to form a multi-view generator.
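Because the adapter never modifies the base model's weights, inserting it into a personalized or distilled T2I model can amount to combining two sets of weights whose names do not overlap. The helper below is a hypothetical sketch of that bookkeeping, with strings standing in for tensors and made-up layer names; it is not the MV-Adapter or Diffusers loading API.

```python
def attach_adapter(base_state, adapter_state, prefix="mv_adapter."):
    """Hypothetical helper: combine any T2I derivative's weights with a separately
    stored adapter. Base weights are copied, never modified, so one adapter can be
    reused across many derivatives."""
    for key in adapter_state:
        if not key.startswith(prefix):
            raise ValueError(f"adapter key outside its namespace: {key}")
        if key in base_state:
            raise ValueError(f"adapter would overwrite a base weight: {key}")
        # The layer the adapter hooks into, e.g. "unet.attn1" for
        # "mv_adapter.unet.attn1.mv_to_q".
        host_layer = key[len(prefix):].rsplit(".", 1)[0]
        if not any(k.startswith(host_layer + ".") for k in base_state):
            raise ValueError(f"no host layer '{host_layer}' in base model")
    merged = dict(base_state)
    merged.update(adapter_state)
    return merged


# Strings stand in for weight tensors; all layer names are made up for illustration.
base_model = {"unet.attn1.to_q": "W_q", "unet.attn1.to_out": "W_out"}
personalized = {"unet.attn1.to_q": "W_q_finetuned", "unet.attn1.to_out": "W_out_finetuned"}
adapter = {"mv_adapter.unet.attn1.mv_to_q": "A_q", "mv_adapter.unet.attn1.mv_to_out": "A_out"}

generator_a = attach_adapter(base_model, adapter)
generator_b = attach_adapter(personalized, adapter)
print(sorted(generator_b))
```

The host-layer check reflects the one requirement this sketch assumes: the derivative must keep the layer layout the adapter was trained against, which is why a fine-tuned variant of the same base model is accepted while an unrelated model would be rejected.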

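The MV-Adapter consists of two parts:

1. A condition guider that encodes camera parameters or geometric information.
2. Decoupled attention layers that learn multi-view consistency and optionally support image-conditioned generation. For image conditioning, a pre-trained U-Net encodes the reference image to extract fine-grained information.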
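For image-conditioned generation, the reference features still have to reach every generated view. A minimal sketch, assuming the image branch is a cross-attention layer whose queries come from the views' tokens and whose keys and values come from the reference-image features. Learned projections are omitted, the reference features are hand-written stand-ins for the U-Net output, and `gate` is a hypothetical scalar weight.

```python
import math


def softmax(scores):
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(queries, ref_tokens):
    """Each query token attends over the reference-image tokens.

    Learned query/key/value/output projections are omitted (identity) for brevity,
    so keys and values are the reference tokens themselves.
    """
    scale = 1.0 / math.sqrt(len(queries[0]))
    out = []
    for q in queries:
        w = softmax([scale * sum(a * b for a, b in zip(q, k)) for k in ref_tokens])
        out.append([sum(wj * r[d] for wj, r in zip(w, ref_tokens)) for d in range(len(q))])
    return out


def add_image_branch(views, ref_tokens, gate=1.0):
    """Add the cross-attention result residually to every generated view.

    `ref_tokens` stands in for reference-image features (per the article, these
    come from a pre-trained U-Net); `gate` is a hypothetical scalar weight.
    """
    result = []
    for view in views:
        attended = cross_attention(view, ref_tokens)
        result.append([[h + gate * c for h, c in zip(ht, ct)] for ht, ct in zip(view, attended)])
    return result


views = [[[0.1, 0.2], [0.3, -0.4]], [[-0.5, 0.6], [0.7, 0.8]]]  # 2 views x 2 tokens
ref_tokens = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]               # 3 reference tokens
conditioned = add_image_branch(views, ref_tokens)
```

Every view queries the same reference tokens, so detail from the single input image is shared across all generated viewpoints; with `gate=0.0` the branch is switched off and the views pass through unchanged.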

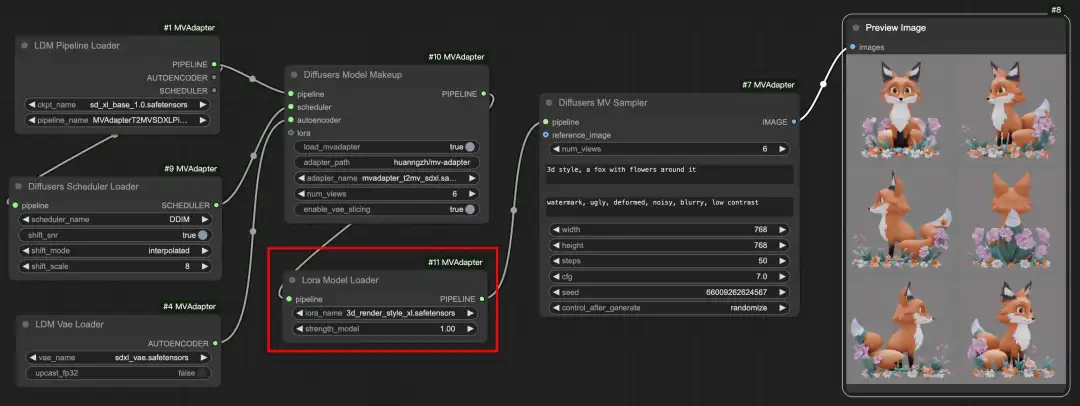

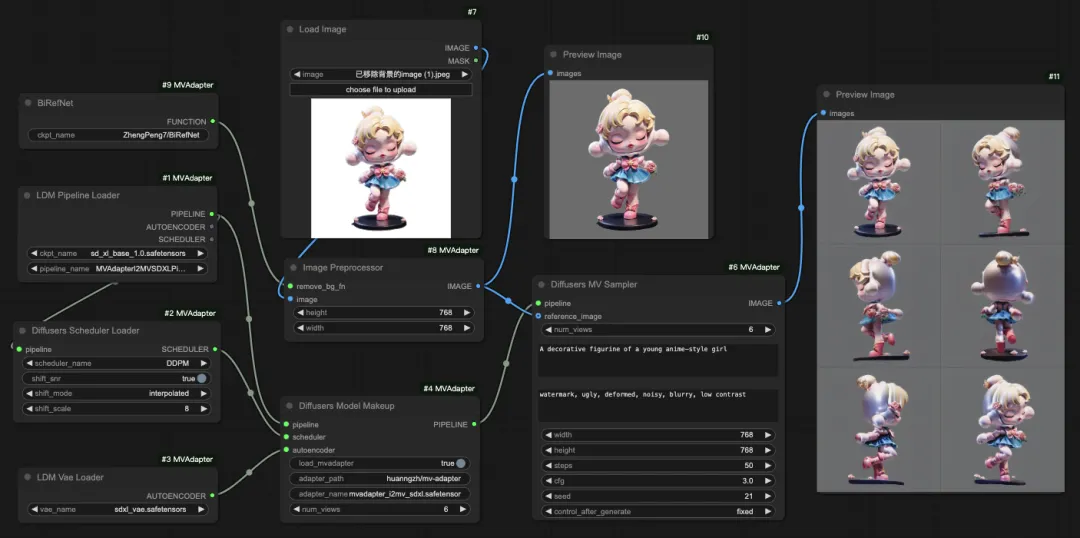

Integrating the MV-Adapter into ComfyUI allows users to generate multi-view consistent images from text prompts or a single image directly within the ComfyUI interface. See the link above for details.
