
#News ·2025-01-07

Title: PanSplat: 4K Panorama Synthesis with Feed-Forward Gaussian Splatting

Authors: Cheng Zhang, Haofei Xu, Qianyi Wu, Camilo Cruz Gambardella, Dinh Phung, Jianfei Cai

Institutions: Monash University, Building 4.0 CRC, Caulfield East, Victoria, Australia, ETH Zurich

Paper link: https://arxiv.org/abs/2412.12096

Code link: https://github.com/chengzhag/PanSplat

With the advent of portable 360° cameras, panoramas have gained significant traction in applications such as virtual reality (VR), virtual tours, robotics, and autonomous driving. As a result, wide-baseline panorama view synthesis has become a crucial task, where high resolution, fast inference, and memory efficiency are essential. However, existing methods are generally limited to lower resolutions (512×1024) because of demanding memory and computational requirements. In this paper, the authors introduce PanSplat, a generalizable feed-forward method that efficiently supports resolutions up to 4K (2048×4096). The approach features a tailored spherical 3D Gaussian pyramid with a Fibonacci lattice arrangement that enhances image quality while reducing information redundancy. To meet the demands of high resolution, they propose a pipeline that integrates a hierarchical spherical cost volume and Gaussian heads with local operations, enabling two-step deferred backpropagation for memory-efficient training on a single A100 GPU. Experiments show that PanSplat achieves state-of-the-art results on both synthetic and real-world datasets, with superior efficiency and image quality.

With the rise of 360° cameras and immersive technologies, demand for rich visual content in virtual reality (VR) and virtual tours has grown dramatically. Panoramic light field systems offer a compelling solution for realistic, immersive experiences by letting users explore an environment from arbitrary viewpoints within a specified virtual space. Recent advances in 360° cameras have simplified the creation of immersive content, driving applications such as street view (Google Maps, Apple Maps) and virtual tours (Matterport, Theasys), where synthesizing novel views from wide-baseline panoramas is essential for smooth transitions between locations.

Although existing methods have extensively explored wide-baseline panorama view synthesis, they often struggle to balance computational efficiency, memory consumption, image quality, and resolution. Traditional methods rely on explicit 3D scene representations, such as multiplane images (MPI) or meshes, which can scale to high resolutions but often yield lower image quality because of their limited expressive power. In contrast, NeRF-based methods deliver high-quality results but are computationally heavy and memory intensive, which makes them less suitable for high-resolution panoramas. Most existing methods are limited to a resolution of 512×1024, well below the 4K resolution (2048×4096) typically required for a truly immersive VR experience.

The recent trend of 3D Gaussian Splatting (3DGS) has yielded remarkable results in novel view synthesis, marking a major advance in both image quality and computational efficiency. By representing the scene as a set of Gaussian primitives, 3DGS uses rasterization instead of NeRF's volume sampling for high-quality, efficient rendering, while still supporting differentiable rendering for training. Subsequent work has pushed 3DGS further by introducing feed-forward networks that predict Gaussian primitives directly from input images, extending the approach to sparse-view inputs. Despite these advances, existing 3DGS methods are not directly applicable to panoramas because of two major challenges: 1) the unique spherical geometry of panoramas conflicts with pixel-aligned Gaussian primitives, producing overlapping and redundant primitives near the poles; 2) the high resolution required by VR applications makes current methods (such as MVSplat) difficult to scale efficiently because of memory limitations.
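The redundancy near the poles can be made concrete with a little arithmetic. The sketch below is only an illustration and is not taken from the paper: it assumes a standard equirectangular panorama in which every row spans the same latitude step, so a row's share of the sphere is proportional to the cosine of its latitude. The function name `polar_band_stats` is hypothetical.

```python
import math
from typing import Tuple

def polar_band_stats(height: int, min_abs_lat_deg: float) -> Tuple[float, float]:
    """Return (fraction of pixel rows, fraction of sphere area) for the rows of
    an equirectangular image whose centre latitude lies at or beyond
    min_abs_lat_deg from the equator."""
    rows_in_band = 0
    area_in_band = 0.0
    total_area = 0.0
    for row in range(height):
        # Latitude of the row centre, from +pi/2 (top) down to -pi/2 (bottom).
        lat = math.pi / 2 - (row + 0.5) * math.pi / height
        # The solid angle covered by a row is proportional to cos(latitude).
        row_area = math.cos(lat)
        total_area += row_area
        if abs(math.degrees(lat)) >= min_abs_lat_deg:
            rows_in_band += 1
            area_in_band += row_area
    return rows_in_band / height, area_in_band / total_area

# Assumed example: a 2048-row panorama and the two caps beyond 60 degrees latitude.
pixel_frac, area_frac = polar_band_stats(height=2048, min_abs_lat_deg=60.0)
print(f"pixels in polar caps: {pixel_frac:.1%}, sphere area covered: {area_frac:.1%}")
```

Under these assumptions, roughly one third of the pixels (and therefore of any pixel-aligned Gaussians) land on about 13% of the sphere, which is the kind of imbalance the first challenge describes.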

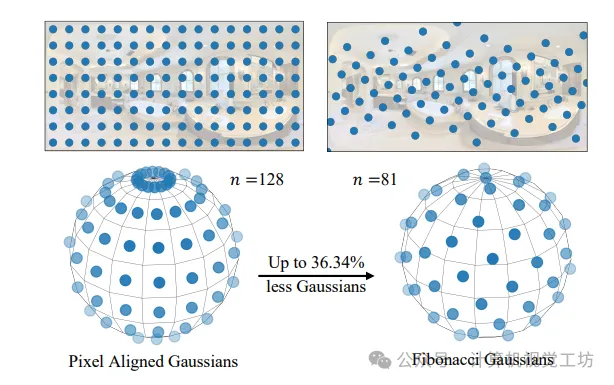

In this work, the authors present PanSplat, a feed-forward approach optimized for 4K-resolution inputs that generates 3D Gaussian representations tailored to the panoramic format and synthesizes novel 4K views from wide-baseline panoramas (see Figure 1 for an example). To address the first challenge, they introduce a Fibonacci lattice arrangement of 3D Gaussian primitives (see Figure 2), which significantly reduces the number of primitives required by distributing them evenly over the sphere. To further improve rendering quality, they implement a 3D Gaussian pyramid that represents the scene at multiple scales, capturing different levels of detail. To address the second challenge, they use a hierarchical spherical cost volume built on a Transformer-based network to estimate high-resolution 3D geometry efficiently. They then design Gaussian heads with local operations to predict the Gaussian parameters, which enables two-step deferred backpropagation for memory-efficient training at 4K resolution. In addition, they introduce a deferred blending technique that reduces artifacts from misaligned Gaussian primitives caused by moving objects and depth inconsistencies, improving rendering quality in real-world scenes.
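To illustrate why a Fibonacci lattice spreads primitives evenly over the sphere, here is a minimal sketch of the standard golden-ratio construction. It is not PanSplat's own code, and the exact parameterization used in the paper may differ; the function name `fibonacci_lattice` and the point count are assumptions chosen for the example.

```python
import math
from typing import List, Tuple

GOLDEN_RATIO = (1 + math.sqrt(5)) / 2

def fibonacci_lattice(n: int) -> List[Tuple[float, float, float]]:
    """Return n approximately evenly spaced unit vectors on the sphere."""
    points = []
    for i in range(n):
        # z is spaced uniformly in (-1, 1), which yields equal-area bands.
        z = 1 - (2 * i + 1) / n
        radius = math.sqrt(1 - z * z)
        # Each point advances by a golden-ratio fraction of a full turn.
        theta = 2 * math.pi * i / GOLDEN_RATIO
        points.append((radius * math.cos(theta), radius * math.sin(theta), z))
    return points

points = fibonacci_lattice(10_000)
# Share of points in the two caps beyond 60 degrees latitude (|z| > sin 60 deg).
cap_fraction = sum(1 for _, _, z in points if abs(z) > math.sin(math.radians(60))) / len(points)
print(f"fraction of points in the polar caps: {cap_fraction:.3f}")
```

Those two caps cover about 13.4% of the sphere's area, and the lattice places about the same share of its points there, so no region is oversampled the way polar rows are in a pixel-aligned layout.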

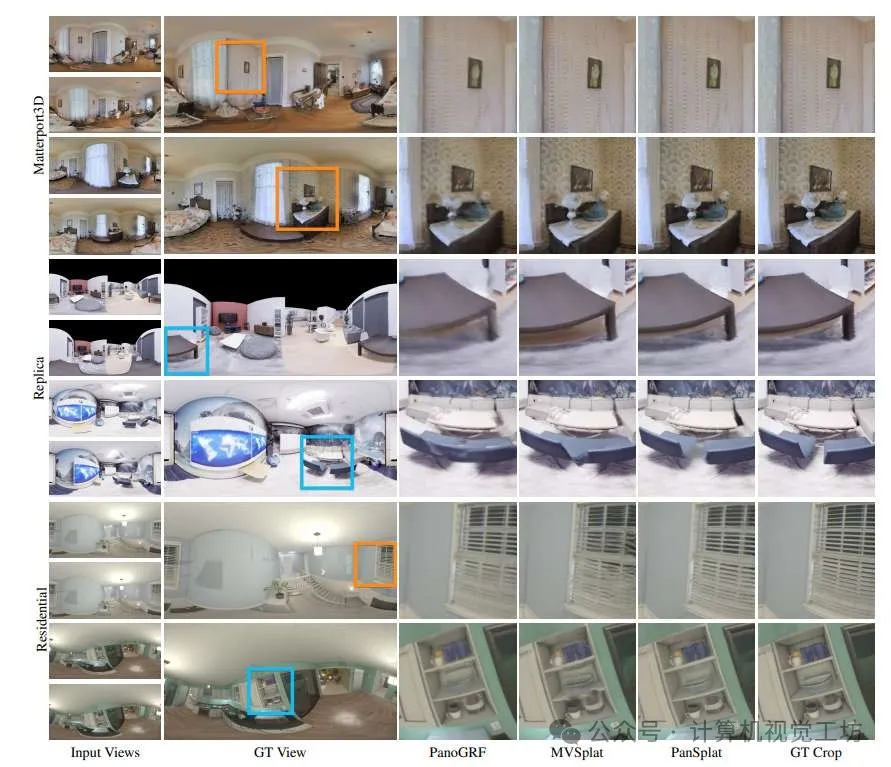

Figure 1: PanSplat can generate novel views from two 4K (2048×4096) panoramas. The model is trained on rendered Matterport3D data at 4K resolution (left) and generalizes to 4K real-world data with only a small amount of fine-tuning on 360Loc (right).

The main contributions can be summarized as follows:

• The authors propose PanSplat, a generalizable feed-forward method that efficiently generates high-quality novel views using a spherical 3D Gaussian pyramid tailored to the panoramic format.

• They design a pipeline with a hierarchical spherical cost volume and Gaussian heads with local operations, enabling two-step deferred backpropagation that scales efficiently to higher resolutions.

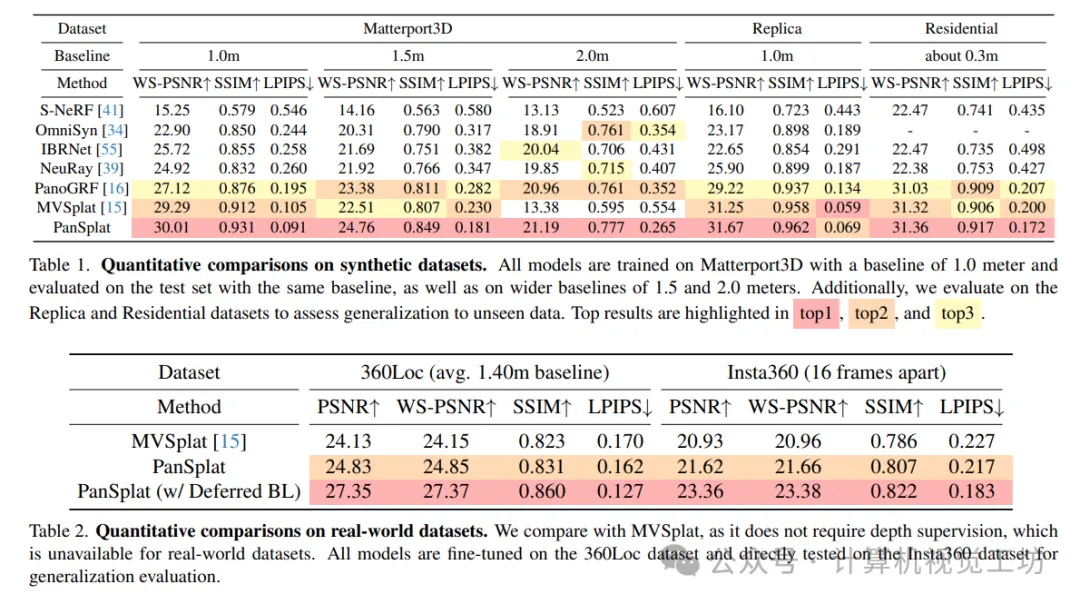

• They demonstrate that PanSplat achieves state-of-the-art results on both synthetic and real-world datasets, with excellent image quality and up to 70 times faster inference compared to the best methods. By supporting 4K resolution, PanSplat is a promising solution for immersive VR applications.
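The general idea behind deferred backpropagation can be sketched with a toy example. The code below is not PanSplat's two-step pipeline: it assumes a hypothetical per-pixel "renderer" (`render_pixel`) with purely local dependence on its inputs and a mean squared error loss, and all names are made up for illustration. It renders the full image while keeping only the output, caches the loss gradient at each pixel, then recomputes and backpropagates one patch at a time, so intermediates are only ever needed for a single patch.

```python
import math
from typing import List

def render_pixel(weights: List[float], features: List[float]) -> float:
    """Toy renderer: each pixel depends only on its own feature vector."""
    return math.tanh(sum(w * f for w, f in zip(weights, features)))

def mse_loss(weights, feats, targets) -> float:
    n = len(feats)
    return sum((render_pixel(weights, f) - t) ** 2 for f, t in zip(feats, targets)) / n

def deferred_gradient(weights, feats, targets, patch_size: int) -> List[float]:
    n = len(feats)
    # Step 1: forward pass over the whole image, keeping only the output,
    # then cache the loss gradient with respect to every pixel.
    image = [render_pixel(weights, f) for f in feats]
    image_grad = [2.0 * (p - t) / n for p, t in zip(image, targets)]
    # Step 2: recompute one patch at a time and push the cached gradient
    # through it, accumulating the gradient of the shared weights.
    grad = [0.0] * len(weights)
    for start in range(0, n, patch_size):
        for idx in range(start, min(start + patch_size, n)):
            pixel = render_pixel(weights, feats[idx])  # recomputed locally
            local = (1.0 - pixel * pixel) * image_grad[idx]  # tanh derivative
            for k, f in enumerate(feats[idx]):
                grad[k] += local * f
    return grad

def numeric_gradient(weights, feats, targets, eps: float = 1e-6) -> List[float]:
    """Central finite differences on the full loss, used only as a check."""
    grad = []
    for k in range(len(weights)):
        up, down = list(weights), list(weights)
        up[k] += eps
        down[k] -= eps
        grad.append((mse_loss(up, feats, targets) - mse_loss(down, feats, targets)) / (2 * eps))
    return grad

# Assumed toy data: 64 "pixels" with 3 features each.
feats = [[math.sin(0.1 * i + k) for k in range(3)] for i in range(64)]
targets = [math.cos(0.05 * i) for i in range(64)]
weights = [0.3, -0.2, 0.5]
grad_deferred = deferred_gradient(weights, feats, targets, patch_size=16)
grad_numeric = numeric_gradient(weights, feats, targets)
print(max(abs(a - b) for a, b in zip(grad_deferred, grad_numeric)))
```

The patch-wise gradient matches the gradient of the full loss up to finite-difference error, which is why this kind of scheme only works when the operations between the cached gradient and the parameters are local, as the Gaussian heads are described to be.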

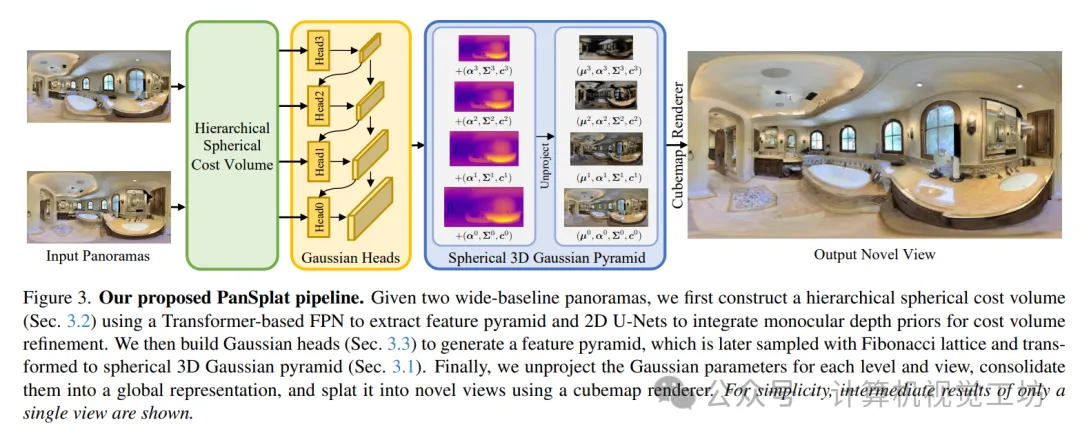

PanSplat is a feed-forward model, shown in Figure 3, that efficiently synthesizes high-quality novel views from two given wide-baseline panoramas. It introduces a spherical 3D Gaussian pyramid designed specifically for panoramic images and combines it with a hierarchical spherical cost volume and Gaussian heads, producing high-resolution output that scales up to 4K for real-world applications.

In summary, the paper presents PanSplat, a novel generalizable feed-forward method for synthesizing novel views from wide-baseline panoramas. To efficiently support 4K resolution (2048×4096) for immersive VR applications, the authors introduce a pipeline that enables two-step deferred backpropagation. In addition, they propose a spherical 3D Gaussian pyramid with a Fibonacci lattice arrangement, tailored to the panoramic format, to improve rendering quality and efficiency. Extensive experiments demonstrate that PanSplat outperforms existing techniques in both image quality and resolution.

Readers interested in more experimental results and details can refer to the original paper.
