# News · 2025-01-06

This article is reprinted with the authorization of the AIGC Studio public account. Please contact the original source for permission to reprint.

In artificial intelligence, human-like perception, understanding, editing, and generation of text in images has long been a research hotspot. Current large-model research on visual text focuses mainly on single-modality generation tasks. Although some of these models unify a subset of tasks, they still fall short of fully integrating most tasks in the OCR domain.

TextHarmony: The ability to proficiently understand and generate visual text

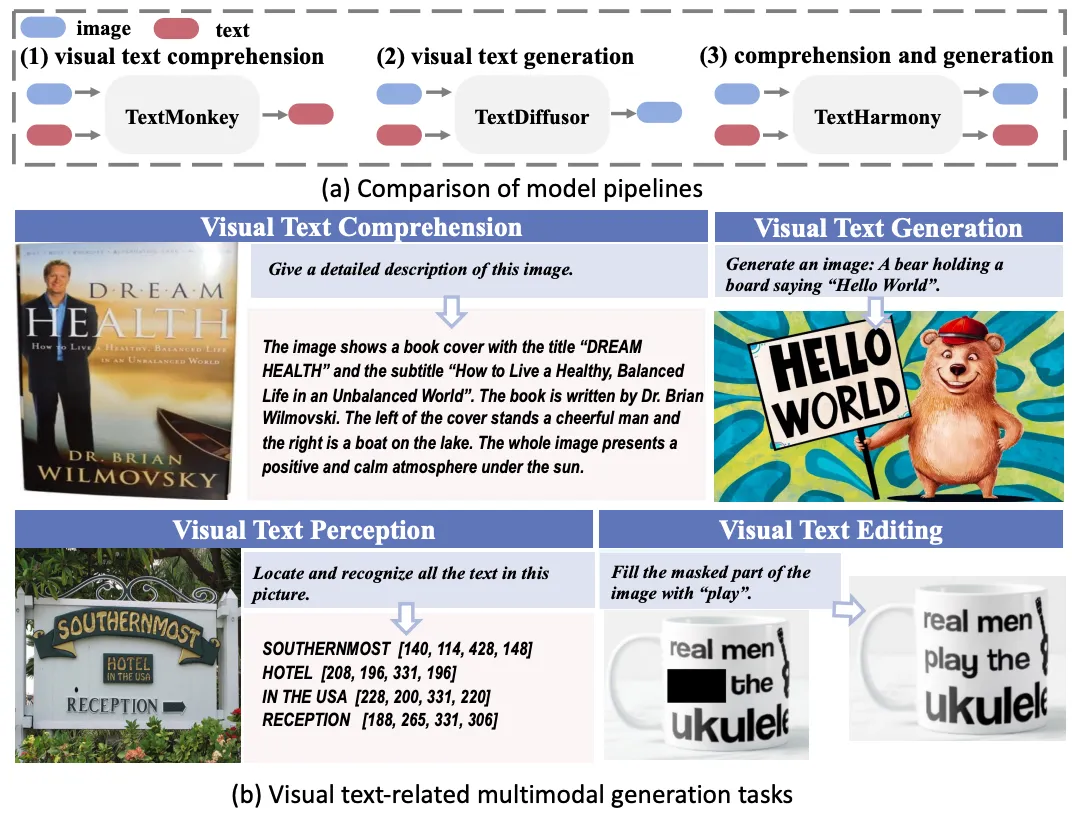

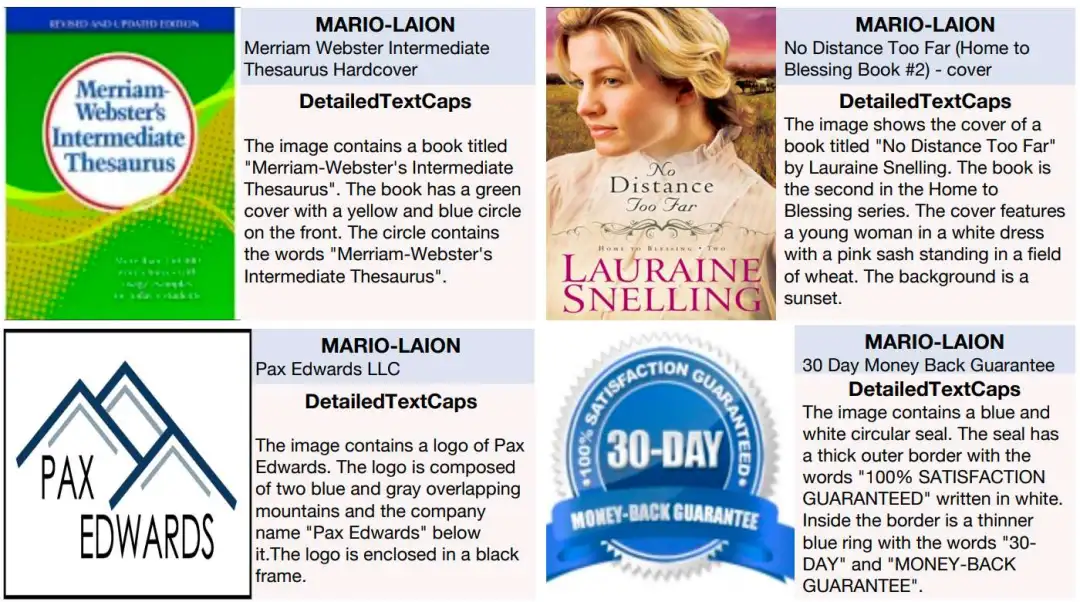

Figure (a) compares different types of visual text models: visual text understanding models can only generate text, visual text generation models can only generate images, and TextHarmony can generate both text and images. Figure (b) shows TextHarmony's versatility in producing outputs of different modalities across a variety of text-centric tasks.

Paper: https://arxiv.org/abs/2407.16364

Open source: https://github.com/bytedance/TextHarmony

In this work, we propose TextHarmony, a unified and versatile multimodal generation model that is proficient at both understanding and generating visual text. Because of the inherent inconsistency between the visual and linguistic modalities, generating images and text with the same model often degrades performance.

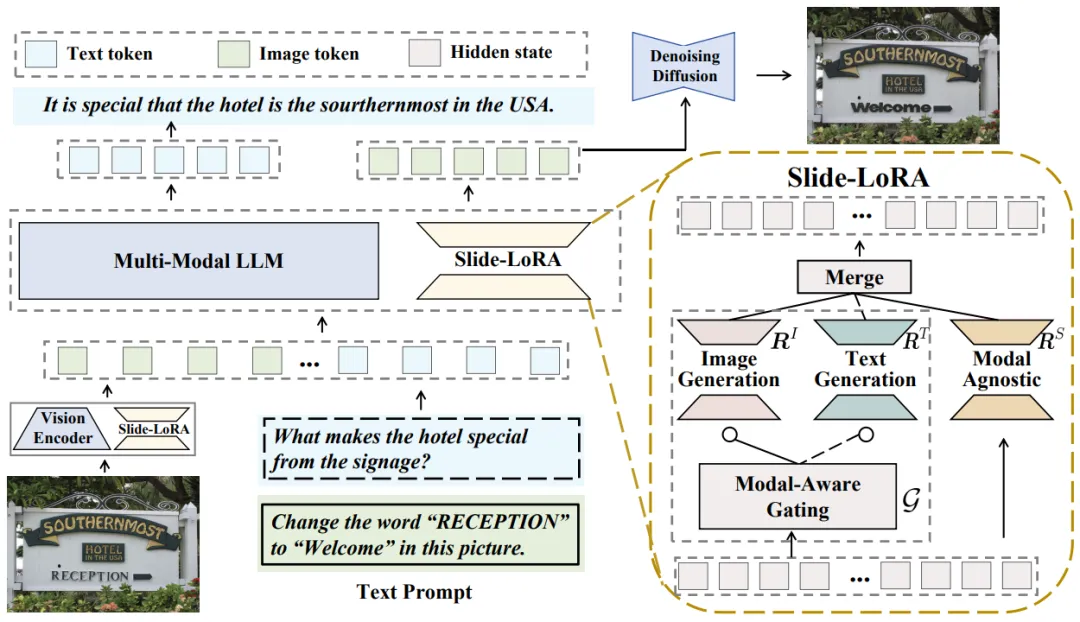

To overcome this challenge, existing methods rely on modality-specific data for supervised fine-tuning, which requires separate model instances. We propose Slide-LoRA, which dynamically aggregates modality-specific and modality-agnostic LoRA experts to partially decouple the multimodal generation space. Slide-LoRA harmonizes visual and linguistic generation within a single model instance, enabling a more unified generation process. In addition, we built a high-quality image caption dataset, DetailedTextCaps-100K, synthesized with an advanced closed-source MLLM, to further strengthen visual text generation. Comprehensive experiments on a range of benchmarks demonstrate the effectiveness of the proposed approach.
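The Slide-LoRA idea described above can be illustrated with a minimal sketch of a single linear layer. This is not TextHarmony's implementation: it assumes one always-on modality-agnostic LoRA expert plus one text-specific and one image-specific expert, and a hypothetical sigmoid gate that blends the two modality-specific experts. The class names, gate form, rank, and scaling are all illustrative assumptions; see the paper and repository for the actual formulation.

```python
import math
import random
from typing import List, Optional

Vector = List[float]
Matrix = List[List[float]]


def matvec(m: Matrix, v: Vector) -> Vector:
    """Multiply a (rows x cols) matrix by a length-cols vector."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def rand_matrix(rows: int, cols: int, std: float, rng: random.Random) -> Matrix:
    return [[rng.gauss(0.0, std) for _ in range(cols)] for _ in range(rows)]


class LoRAExpert:
    """Low-rank update delta(x) = (alpha / rank) * B @ (A @ x)."""

    def __init__(self, d_in: int, d_out: int, rank: int, alpha: float, rng: random.Random):
        self.a = rand_matrix(rank, d_in, 0.1, rng)   # down-projection (rank x d_in)
        # Standard LoRA initializes B to zero; random values are used here only
        # so that the three experts produce visibly different outputs.
        self.b = rand_matrix(d_out, rank, 0.1, rng)  # up-projection (d_out x rank)
        self.scale = alpha / rank

    def __call__(self, x: Vector) -> Vector:
        return [self.scale * y for y in matvec(self.b, matvec(self.a, x))]


class SlideLoRALayer:
    """Hypothetical Slide-LoRA-style linear layer (illustrative, not official code)."""

    def __init__(self, d_in: int, d_out: int, rank: int = 2, alpha: float = 4.0, seed: int = 0):
        rng = random.Random(seed)
        self.w0 = rand_matrix(d_out, d_in, 0.5, rng)             # frozen pretrained weight
        self.shared = LoRAExpert(d_in, d_out, rank, alpha, rng)  # modality-agnostic expert
        self.text = LoRAExpert(d_in, d_out, rank, alpha, rng)    # text-generation expert
        self.image = LoRAExpert(d_in, d_out, rank, alpha, rng)   # image-generation expert
        self.gate_w = [rng.gauss(0.0, 0.5) for _ in range(d_in)]  # hypothetical gate weights

    def gate(self, x: Vector) -> float:
        """Sigmoid of a linear score: weight given to the text-specific expert."""
        z = sum(w * xi for w, xi in zip(self.gate_w, x))
        return 1.0 / (1.0 + math.exp(-z))

    def forward(self, x: Vector, gate_value: Optional[float] = None) -> Vector:
        g = self.gate(x) if gate_value is None else gate_value
        base = matvec(self.w0, x)
        s, t, i = self.shared(x), self.text(x), self.image(x)
        # The shared expert is always applied; the gate shifts weight between
        # the text-specific and image-specific experts.
        return [b + sv + g * tv + (1.0 - g) * iv for b, sv, tv, iv in zip(base, s, t, i)]


if __name__ == "__main__":
    layer = SlideLoRALayer(d_in=4, d_out=3)
    x = [1.0, -0.5, 0.25, 2.0]
    print("gate:", layer.gate(x))
    print("output:", layer.forward(x))
```

Keeping the shared expert outside the gate is what lets part of the parameter space stay common to both modalities while the gated experts hold the modality-specific part; a gate value of 1.0 or 0.0 reduces the layer to "base + shared + text expert" or "base + shared + image expert".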

With Slide-LoRA, TextHarmony achieves performance comparable to modality-specific fine-tuning, with an average increase of 2.5% on visual text understanding tasks and 4.0% on visual text generation tasks. Our work demonstrates the feasibility of an integrated approach to multimodal generation in the visual text domain and lays a foundation for further research.

TextHarmony architecture. TextHarmony generates text and visual content by connecting a visual encoder, an LLM, and an image decoder. The proposed Slide-LoRA module mitigates the inconsistency problem in multimodal generation by partially separating the parameter space.
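The encoder-LLM-decoder wiring in the caption above can be pictured as a simple dataflow in which one model instance returns either text or an image. The sketch below is a toy illustration, not TextHarmony's code: every component (`toy_encoder`, `toy_llm`, `toy_decoder`, `UnifiedGenerator`) is hypothetical, and the prompt-based rule that routes a request to the image branch is an assumption made only to show the control flow.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple, Union

Features = List[float]


@dataclass
class TextOutput:
    text: str


@dataclass
class ImageOutput:
    latent: Features  # toy stand-in for the image produced by the decoder


@dataclass
class UnifiedGenerator:
    """Toy wiring: visual encoder -> LLM -> (text output | image decoder)."""
    encode_image: Callable[[Features], Features]
    llm: Callable[[Features, str], Tuple[str, Features, str]]
    decode_image: Callable[[Features], Features]

    def generate(self, pixels: Features, prompt: str) -> Union[TextOutput, ImageOutput]:
        visual_tokens = self.encode_image(pixels)
        modality, hidden, text = self.llm(visual_tokens, prompt)
        if modality == "image":
            # The LLM's hidden states condition the image decoder.
            return ImageOutput(self.decode_image(hidden))
        return TextOutput(text)


# --- Hypothetical toy components, for illustration only ---
def toy_encoder(pixels: Features) -> Features:
    return [sum(pixels) / len(pixels), max(pixels)]


def toy_llm(feats: Features, prompt: str) -> Tuple[str, Features, str]:
    # Crude rule standing in for the LLM's decision to produce an image.
    wants_image = prompt.lower().startswith(("draw", "edit"))
    hidden = [2.0 * f for f in feats]
    if wants_image:
        return "image", hidden, ""
    return "text", hidden, f"mean intensity {feats[0]:.2f}"


def toy_decoder(hidden: Features) -> Features:
    return [h + 1.0 for h in hidden]


if __name__ == "__main__":
    gen = UnifiedGenerator(toy_encoder, toy_llm, toy_decoder)
    print(gen.generate([0.0, 0.5, 1.0], "Draw a sign that says OPEN"))
    print(gen.generate([0.0, 0.5, 1.0], "What does the sign say?"))
```

Passing the components in as callables keeps the routing logic independent of any particular encoder, LLM, or decoder, which mirrors the caption's point that a single instance serves both output modalities.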

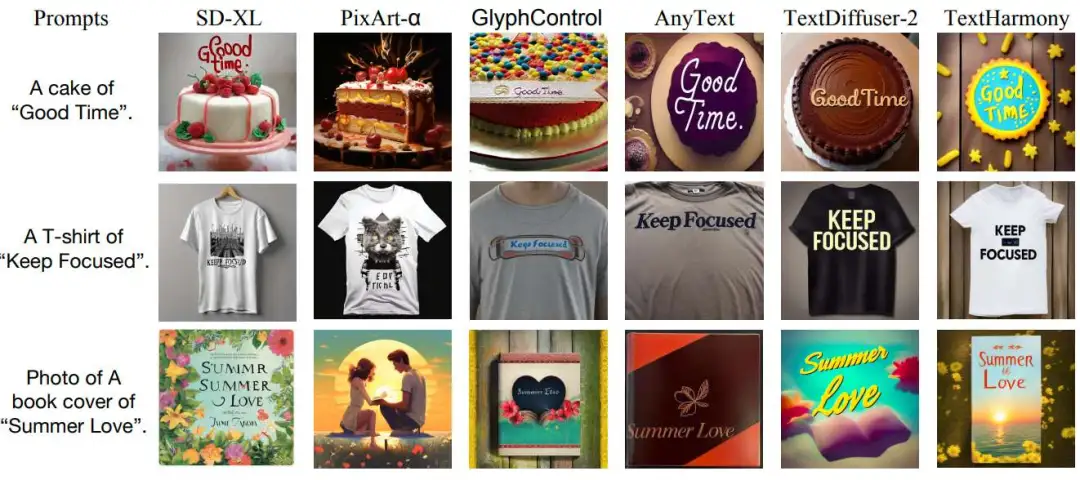

Visual text generation results.

Visual text editing results.

More examples of DetailedTextCaps-100K.

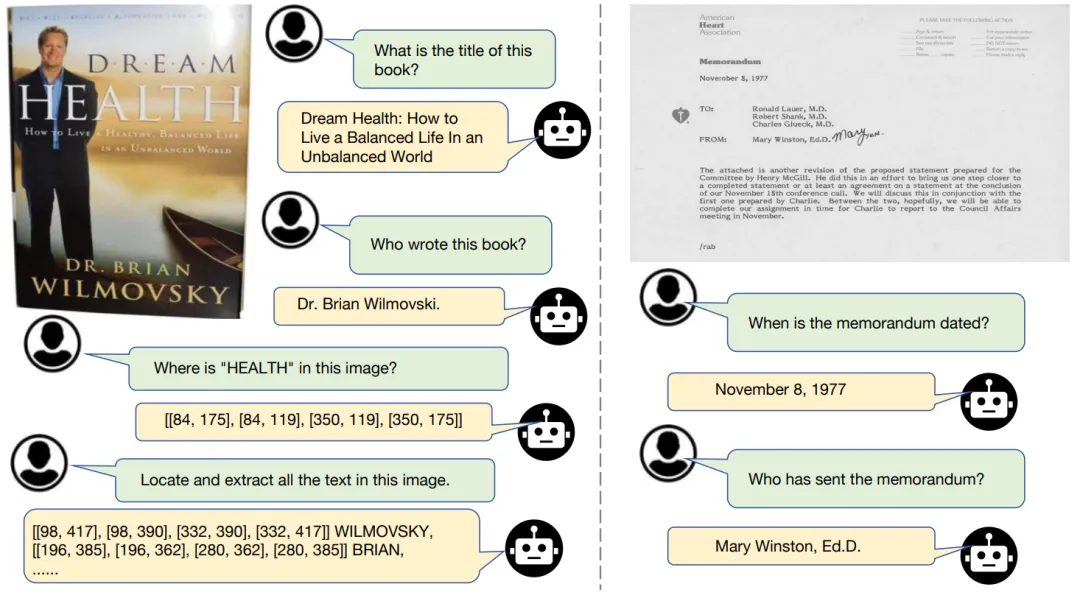

Visualizations of TextHarmony's visual text understanding and perception.

TextHarmony is a versatile multimodal generation model that coordinates the distinct tasks of visual text understanding and generation. With the proposed Slide-LoRA mechanism, TextHarmony harmonizes the generation of the visual and linguistic modalities within a single model instance, effectively addressing the inherent inconsistency between them. The architecture handles tasks that involve processing and generating images, masks, text, and layouts, particularly in optical character recognition (OCR) and document analysis. TextHarmony's results point to the potential of integrated multimodal generation models in the visual text domain, and its adaptability suggests that models of this kind could be applied to a wide range of applications, including industries that depend on the complex interplay between understanding and generating visual text.
