
News · 2025-01-06

The name Jason Wei will be familiar to anyone who follows AI. He is a senior research scientist at OpenAI and often appears at OpenAI launch events. More importantly, he is the first author of "Chain-of-Thought Prompting Elicits Reasoning in Large Language Models."


He joined Google straight out of college. There, he popularized chain-of-thought prompting, co-led early work on instruction fine-tuning, and co-authored a paper on the emergent abilities of large language models with Yi Tay, Jeff Dean, and others. In early 2023 he joined OpenAI, where he worked on ChatGPT and on major projects such as o1. His work made chain-of-thought prompting, instruction fine-tuning, and emergent abilities widely known.

On November 20 last year, Jason Wei gave a roughly 40-minute guest lecture titled "Scaling Paradigms for Large Language Models" in CIS 7000: Large Language Models (Fall 2024), a course taught by Professor Mayur Naik in the Department of Computer and Information Science at the University of Pennsylvania. Starting from a definition of scaling, Wei described how the scaling paradigm for LLMs has shifted from scaling next-word prediction to scaling reasoning through chain of thought and reinforcement learning. The talk is packed with substance.

Prof. Naik recently posted the video and slides of the talk on his YouTube channel, and Synced (Jiqizhixin) has compiled the main content.

Here is an outline of the talk:

1. The definition and importance of scaling

2. Scaling paradigm 1: next-word prediction (2018-present)

3. Scaling paradigm 2: scaling reinforcement learning on chain of thought

4. How AI culture is changing

5. Outlook

Wei stressed that although AI has made great progress over the past five years, continued scaling leaves even more room for progress over the next five. He closed with "just keep scaling," expressing his confidence in scaling as a strategy.

What follows is an illustrated transcript of the talk.

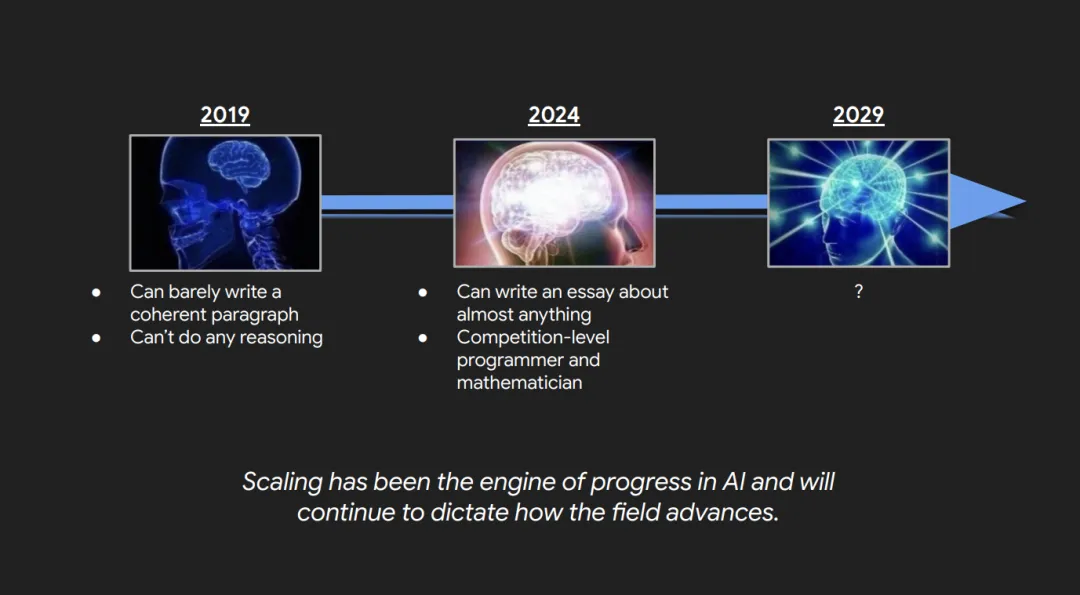

Today's topic is scaling paradigms for large language models. Let me start by saying that AI has made amazing progress in the last five years. Just five years ago, in 2019, AI could barely write a coherent paragraph or do any kind of reasoning. Today, AI can write articles on almost any topic and has become a competition-level programmer and mathematician. So how did we get here so quickly?

The point I want to make today is that scaling has been the engine of AI progress and will continue to set the direction of the field.

Here's a short outline. I'll speak for about 40 minutes and am happy to take questions afterwards. I'll start with what scaling is and why we do it. Then I'll talk about the first scaling paradigm, next-word prediction, and the challenges it faces. After that I'll talk about the second paradigm, which we've moved into recently: scaling reinforcement learning on chain of thought. I'll end with how scaling is changing AI culture and what I expect to come next.

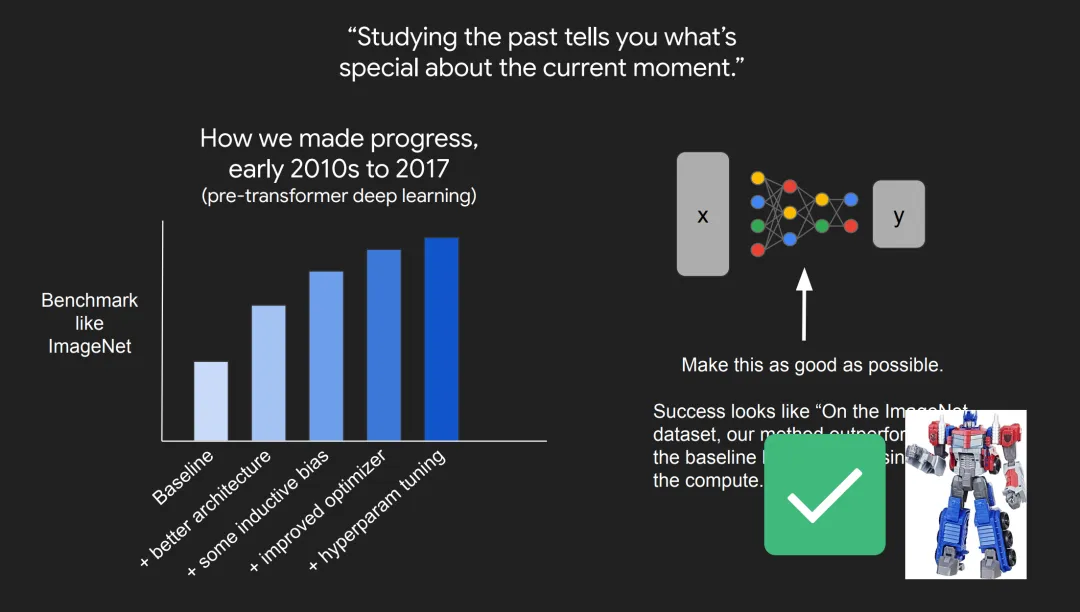

I want to spend a few minutes on what we were doing before the first scaling paradigm. Unless you know this history, you might not realize there is anything special about the current moment.

Let's say you have some XY relationship to learn, and the goal is to learn it as well as possible. You might design a better architecture, add some inductive bias, build a better optimizer, or tune hyperparameters. All of these add up to better performance on benchmarks.

Before 2017, a successful piece of research might be summarized like this: "On ImageNet, our approach improves on the baseline by 5% while using half the compute."

With the Transformer, however, we got a very good general way to learn many different kinds of XY relationships. So what should we do once learning itself is no longer the bottleneck?

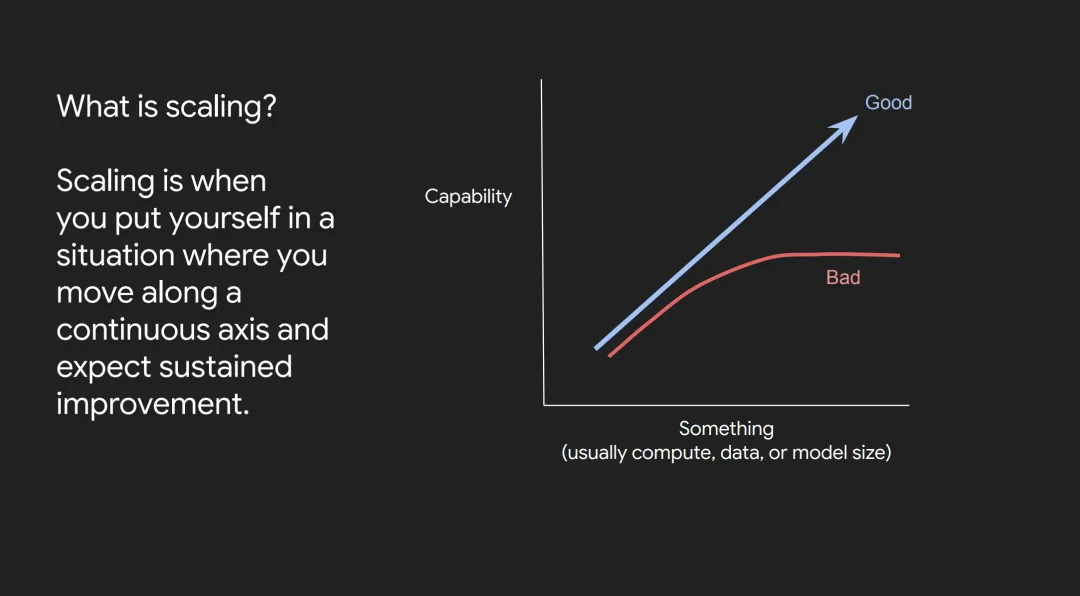

The answer, it turns out, is scaling. Loosely, scaling just means training a bigger model on more data with more GPUs. But I'll use a more specific definition here: scaling means putting yourself in a situation where you can move along a continuous axis and expect continuous improvement.

An important part of this definition is that scaling is an active process. You have to put yourself in this situation, which usually means removing some bottleneck or getting some detail of your setup right before scaling actually works.

This is a typical scaling plot. The x-axis is usually compute, data, or model size, and the y-axis is some capability you're trying to improve. What you want to see is the blue line, where performance keeps improving as you move along the x-axis. What you want to avoid is the red line, where performance saturates past some threshold on the x-axis and stops improving.

In a nutshell, this is it:

If you read papers on large language models, you'll see scaling plots everywhere. Here are some papers from OpenAI, Google Brain, and DeepMind, and each contains plots like these. Sometimes you have to flip a plot upside down to see it, because it shows loss rather than performance. These plots are the signature of scaling, and they can be very persuasive. At OpenAI at least, if you walk into a meeting with a plot like this, you'll leave with what you asked for.

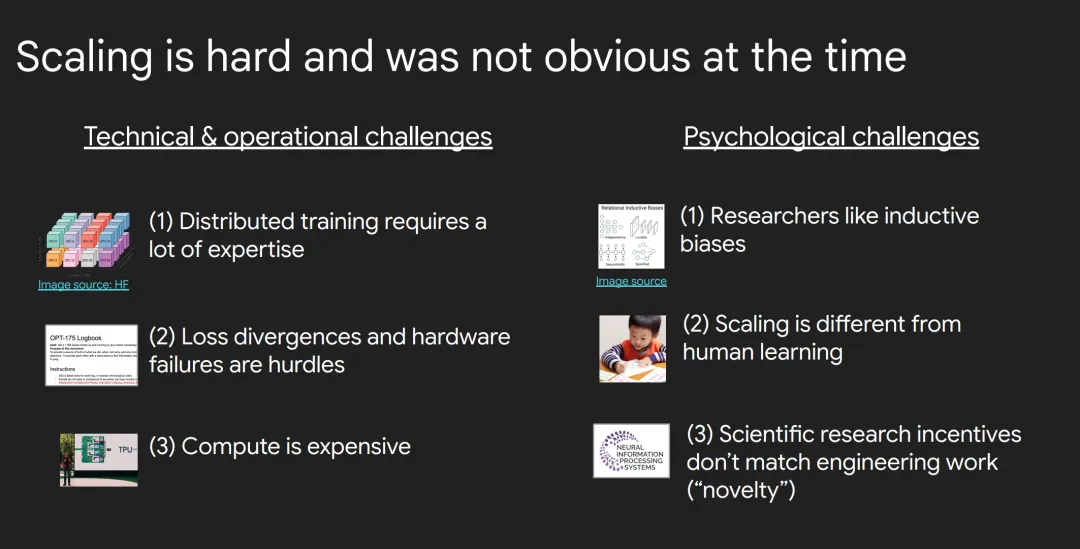

It's important to note that we take the scaling paradigm for granted now, but at the time it was far from obvious, for several reasons.

The first set of reasons is the technical and operational challenges that come with scale. First, distributed training requires a lot of expertise, and you need to hire many infrastructure engineers to build the training system. Second, you need machine learning researchers to deal with loss divergence and hardware failures. Third, compute is very expensive.

In addition to the technical challenges, there were some psychological challenges that made scaling quite difficult at the time. One psychological challenge is that researchers like inductive bias. There's an inherent joy in having a hypothesis about how to improve the algorithm and then actually seeing task performance improve. So researchers like to do the kind of work that changes algorithms.

Second, there has long been an argument that humans learn far more efficiently than scaled-up models do. A person can learn to write an English paragraph without reading anywhere near as much text as GPT-3 saw during training. So the question was: if humans can do it, why should machines need so much data?

Third, for a long time the incentives of scientific research did not line up with the engineering work that scaling requires. When you submit a paper to a conference, reviewers want to see some "innovation," not just that you made the dataset bigger or used more GPUs.

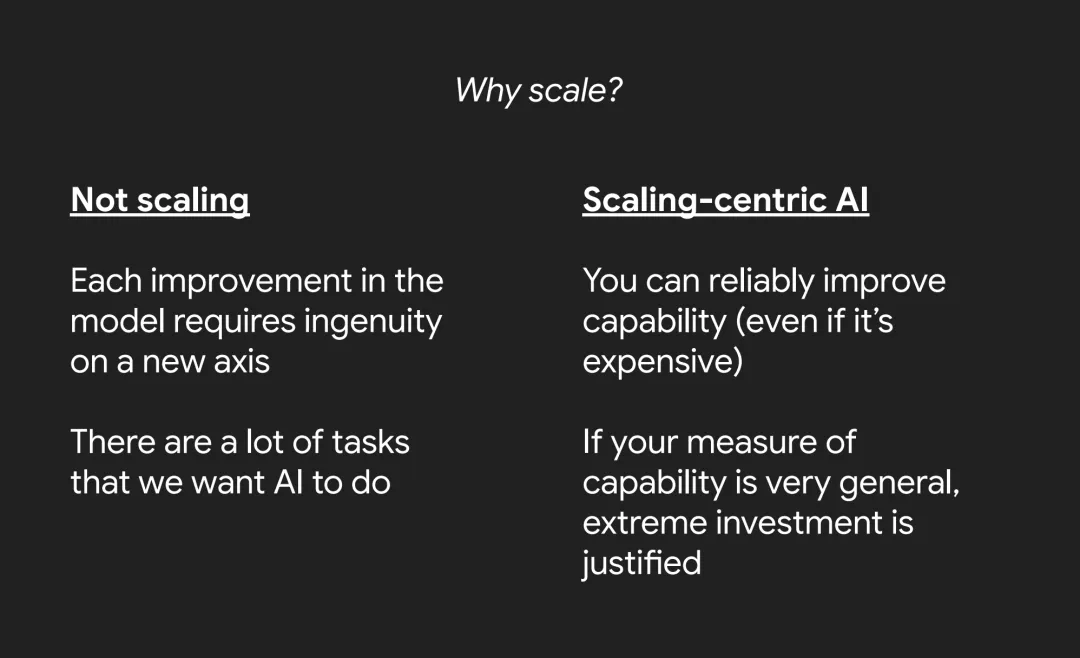

So, if scaling is so challenging, why do we do it?

I would say that without a scaling paradigm, almost every improvement requires new ideas. You have to invest researcher time and creativity to advance the model, and there is no guarantee it will work.

The second problem is that we want AI to do many tasks, and training a separate model for each task would be a huge undertaking.

In scaling-centric AI, by definition, you have a reliable way to improve the model's capabilities. This is usually very expensive: many scaling plots have a logarithmic x-axis, so each further step of improvement costs a multiple of the compute that the previous step did. The good news is that if your capability measure (the y-axis) is very general, this enormous investment can often be justified.
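To make the cost of a logarithmic x-axis concrete, here is a small sketch under an assumed, purely illustrative relationship: the capability score rises by a fixed number of points for every 10x increase in compute. The function name `compute_needed`, the log-linear form, and the constant `points_per_decade = 5.0` are hypothetical, not numbers from the talk.

```python
def compute_needed(score_gain: float, points_per_decade: float, base_compute: float = 1.0) -> float:
    """Compute required to raise the score by `score_gain` points.

    Assumes a hypothetical log-linear scaling curve,
    score = s0 + points_per_decade * log10(compute / base_compute),
    so every 10x increase in compute adds `points_per_decade` points.
    """
    decades = score_gain / points_per_decade
    return base_compute * 10 ** decades


# Equal steps on the y-axis cost ten times more compute each time.
for gain in (5, 10, 15):
    print(f"+{gain} points needs {compute_needed(gain, points_per_decade=5.0):,.0f}x the base compute")
```

Under this assumption the first five points cost 9 extra units of compute, while going from +10 to +15 costs 900, which is why a very general y-axis is needed to justify the spend.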

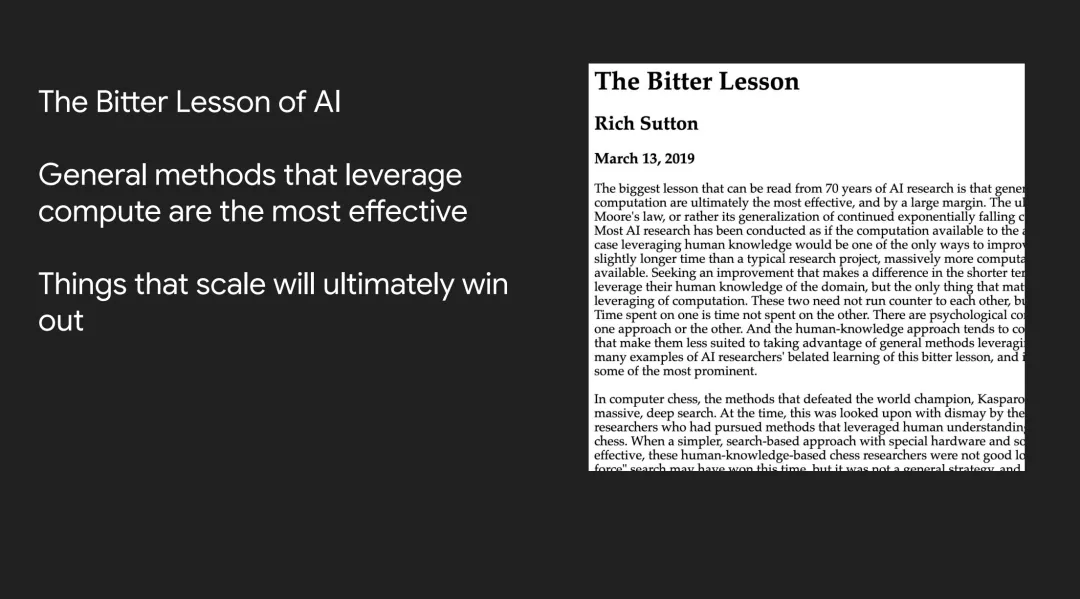

Of course, you can't talk about scaling without mentioning Rich Sutton's essay "The Bitter Lesson." If you haven't read it, I recommend it; it's very well written. Its main point is that general methods that leverage computation are ultimately the most effective, and that methods which scale win out in the end.

Now I want to talk about the first scaling paradigm: next-word prediction. This paradigm started in 2018 and continues today, and it is very simple: get really, really good at predicting the next word.

This is harder to appreciate than it sounds. Why do you get so much just by predicting the next word? My answer is that next-word prediction is actually massive multi-task learning.

Let's quickly review how next-word prediction works. Take a sentence like "On weekends, Dartmouth students like to ___". The language model assigns a probability to every word in its vocabulary, from "a" and "aardvark" all the way to "zucchini." The model is good to the extent that it assigns a probability close to 1.0 to the actual next word.

In this example, assume that "drink" is the actual next word. When the language model learns from it, it tries to increase the probability of "drink" and decrease the probability of all other words.
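A minimal sketch of that training signal, assuming a toy four-word vocabulary and made-up logits (neither comes from the talk). The model turns scores into probabilities with a softmax, the loss is the negative log-probability of the actual next word (cross-entropy), and the gradient of that loss with respect to the logits is `p - one_hot(target)`. One gradient step therefore raises the score of "drink" and lowers every other word's.

```python
import math


def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def next_word_loss_and_grad(logits, target_index):
    probs = softmax(logits)
    loss = -math.log(probs[target_index])  # cross-entropy for a single position
    # d(loss)/d(logit_i) = p_i - 1 if i is the target, else p_i
    grad = [p - (1.0 if i == target_index else 0.0) for i, p in enumerate(probs)]
    return probs, loss, grad


vocab = ["aardvark", "drink", "study", "zucchini"]  # hypothetical toy vocabulary
logits = [0.1, 1.0, 1.5, -0.5]  # made-up scores for "On weekends, Dartmouth students like to ___"
target = vocab.index("drink")

probs, loss, grad = next_word_loss_and_grad(logits, target)
# One plain gradient-descent step; learning rate 1.0 is chosen only for illustration.
new_logits = [z - 1.0 * g for z, g in zip(logits, grad)]
new_probs = softmax(new_logits)
print(f"loss={loss:.3f}, p(drink): {probs[target]:.3f} -> {new_probs[target]:.3f}")
```

A perfect prediction would put probability 1.0 on "drink" and give a loss of 0; real models do the same thing over vocabularies of tens of thousands of tokens.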

Here are some examples of what can be learned just by doing next-word prediction on a large enough corpus.

First, the model will certainly learn grammar. For example, the pre-training data might contain the sentence "In my free time, I like to {code, banana}", where the next word is "code" rather than "banana," so the model learns that a verb should get a higher probability than a noun here.

Models learn about the world. For example, the Internet might contain the sentence "The capital of Azerbaijan is {Baku, London}", and by learning to give Baku a higher probability than London, the model learns something about the world.

Models can learn classic natural language processing tasks, such as sentiment analysis. Somewhere on the Internet there is probably a sentence like "I was engaged and on the edge of my seat the whole time. The movie was {good, bad}", and by learning to give "good" a higher probability than "bad," the model learns something about sentiment analysis.

Models can learn to translate. The pre-training data might contain a sentence like "The word for 'neural network' in Russian is ___", and by giving the correct Russian words a higher probability than the alternatives, the model learns something about Russian.

Models can learn spatial reasoning. The Internet might contain a passage like "Iroh went into the kitchen to make tea. Standing next to Iroh, Zuko pondered his destiny. Zuko left the {kitchen, store}", and by giving "kitchen" a higher probability than "store," the model learns some spatial reasoning about where Zuko was.

Finally, you can even expect the model to learn some math. The training data might contain "Arithmetic exam answer key: 3 + 8 + 4 = {15, 11}", and by learning to predict 15, the model learns some math.

As you can imagine, there are millions more examples like these. By training only on next-word prediction over a huge corpus, the model is actually doing massive multi-task learning.

In 2020, Kaplan et al. published a paper that popularized this scaling paradigm. It popularized the concept of scaling laws, which say, roughly, that a language model's next-word prediction performance improves smoothly as you increase model size, dataset size, and training compute.

Here the x-axis is training compute, which is roughly proportional to the amount of training data times the model size, and you can see the model's next-word prediction steadily improving.

It's called a scaling law because the trend holds across seven orders of magnitude: they trained language models over seven orders of magnitude of compute, and the trend persisted throughout.

The most important thing here is that it doesn't saturate. This is important because if you scale up the computation, you can expect to get a better language model. That sort of gave researchers the confidence to continue to scale up.
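Scaling laws like Kaplan et al.'s are power laws, so they appear as straight lines on log-log axes. The sketch below assumes the form `loss = a * compute ** (-alpha)` and generates synthetic, noise-free data with hypothetical constants (`a = 10`, `alpha = 0.05`), not the paper's fitted values; it then recovers `alpha` with an ordinary least-squares fit in log space. It also uses the common rule of thumb that training compute is about 6 x parameters x tokens FLOPs, which matches the "data times model size" description above.

```python
import math


def training_flops(n_params: float, n_tokens: float) -> float:
    """Rule-of-thumb training compute: about 6 FLOPs per parameter per token."""
    return 6.0 * n_params * n_tokens


def fit_power_law(compute, loss):
    """Least-squares fit of log(loss) = log(a) - alpha * log(compute)."""
    xs = [math.log(c) for c in compute]
    ys = [math.log(v) for v in loss]
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    slope = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum((x - mx) ** 2 for x in xs)
    intercept = my - slope * mx
    return math.exp(intercept), -slope  # (a, alpha)


# Synthetic data spanning seven orders of magnitude of compute (1e15 to 1e22 FLOPs).
A_TRUE, ALPHA_TRUE = 10.0, 0.05  # hypothetical constants
compute = [10.0 ** k for k in range(15, 23)]
loss = [A_TRUE * c ** (-ALPHA_TRUE) for c in compute]

a, alpha = fit_power_law(compute, loss)
print(f"fitted a={a:.3f}, alpha={alpha:.4f}")
print(f"6ND for a 1e9-parameter model on 2e10 tokens: {training_flops(1e9, 2e10):.2e} FLOPs")
```

Because the fitted line does not bend, extending it one more order of magnitude gives a prediction for a bigger model before it is trained, which is the confidence the speaker describes.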

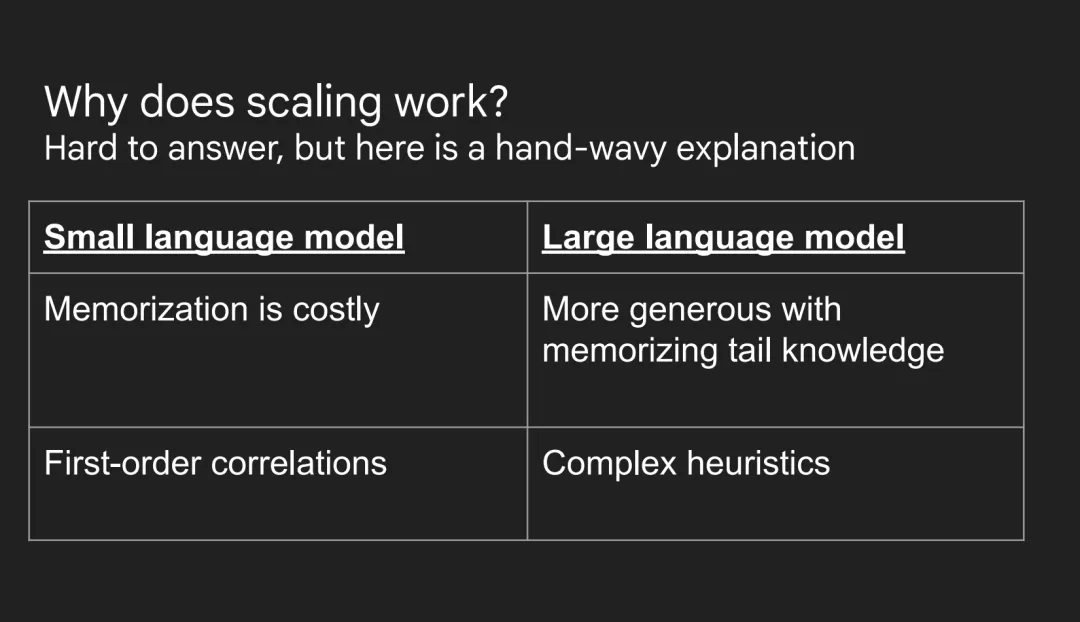

Here's a natural question: Why does scaling work so well? As a field, I don't think we have a good answer yet, but I can give a general explanation of two advantages that scaling can bring.

First, memorization is very costly for a small language model. With so few parameters, it has to be very selective about what knowledge it encodes in them. A large language model has many more parameters, so it can afford to learn the long tail and memorize large numbers of facts.

Second, a small language model can do much less computation in a single forward pass, so it probably learns mostly first-order correlations. A large language model gets more computation in each forward pass, and with that extra computation it is much easier to learn complex patterns.

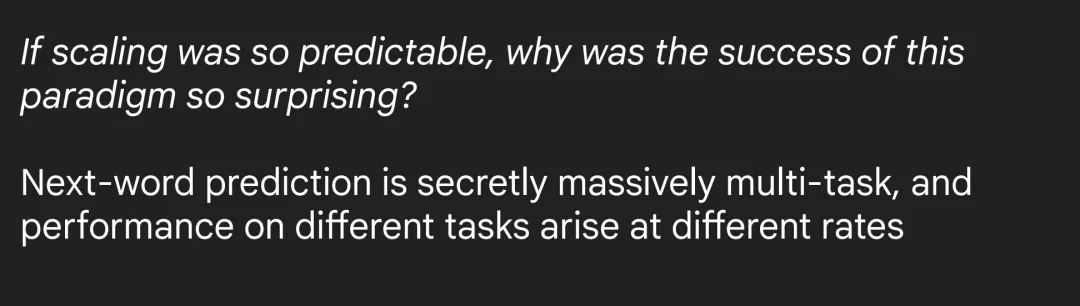

Now you might ask: if scaling laws are so predictable, why were so many people surprised by ChatGPT's success under the scaling paradigm? My answer is that next-word prediction is actually massive multi-task learning, and different tasks improve at different rates, so some capabilities can emerge unexpectedly.

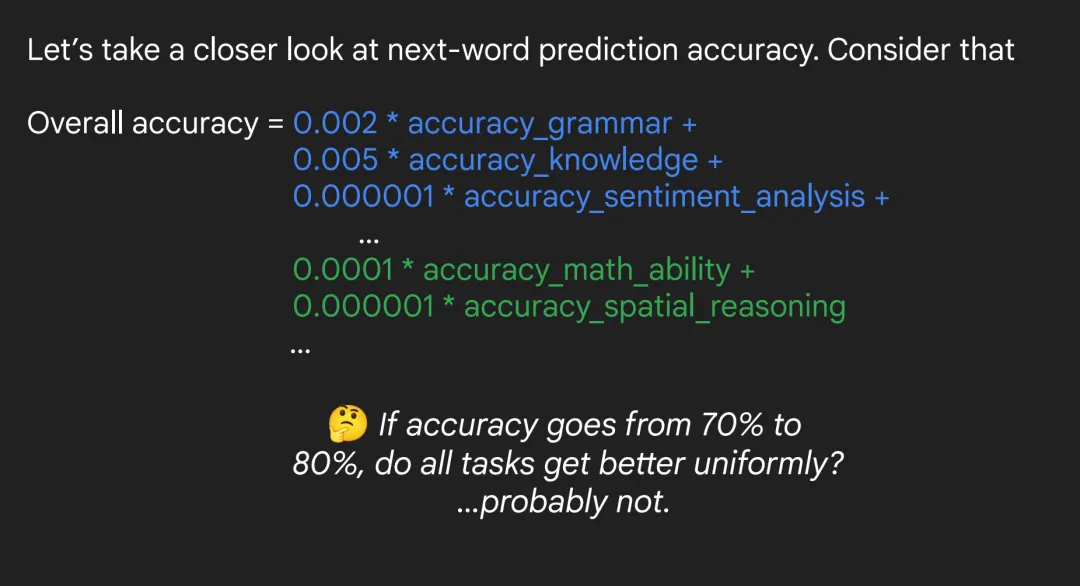

I suggest viewing next-word prediction accuracy this way: it's a weighted sum of many individual subtasks. I made these numbers up, but you can write overall accuracy as some small coefficient times grammar accuracy, plus some small coefficient times world-knowledge accuracy, plus terms for sentiment analysis, math, reasoning, and so on.

Seen this way, you can ask: if overall accuracy goes from 70% to, say, 80%, do all tasks improve equally? Does grammar go from 70 to 80 and math also go from 70 to 80?

I don't think so. Overall capability improves smoothly, but for some easy tasks performance stops improving after a certain point. For example, GPT-3.5 already has essentially perfect grammar, so when you train GPT-4, you may not gain anything on grammar.

On the other hand, some tasks may improve dramatically. For example, GPT-2 and GPT-3 were both poor at math and couldn't even do arithmetic, while GPT-4 is really good at math. So math ability might jump sharply in this way.

The terms "emergent abilities" and "phase transitions" are often used to describe this phenomenon.
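The weighted-sum view can be sketched with invented numbers, just as the speaker says his are made up. The subtask weights and per-model accuracies below are hypothetical; the point is only that a modest, smooth rise in overall accuracy can hide a saturated subtask (grammar) and a sharply improving one (math).

```python
# Hypothetical weights for how much each subtask contributes to next-word accuracy.
WEIGHTS = {"grammar": 0.4, "world_knowledge": 0.3, "sentiment": 0.2, "math": 0.1}

# Hypothetical per-subtask accuracies for a smaller and a larger model.
small = {"grammar": 0.98, "world_knowledge": 0.60, "sentiment": 0.80, "math": 0.10}
large = {"grammar": 0.99, "world_knowledge": 0.70, "sentiment": 0.85, "math": 0.60}


def overall_accuracy(acc: dict) -> float:
    """Overall next-word accuracy as a weighted sum of subtask accuracies."""
    return sum(WEIGHTS[task] * acc[task] for task in WEIGHTS)


print(f"overall: {overall_accuracy(small):.3f} -> {overall_accuracy(large):.3f}")
for task in WEIGHTS:
    print(f"{task:>16}: {small[task]:.2f} -> {large[task]:.2f}")
```

Here the overall score moves by about 9 points, grammar by 1 point, and math by 50 points, because math carries a small weight in the sum.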

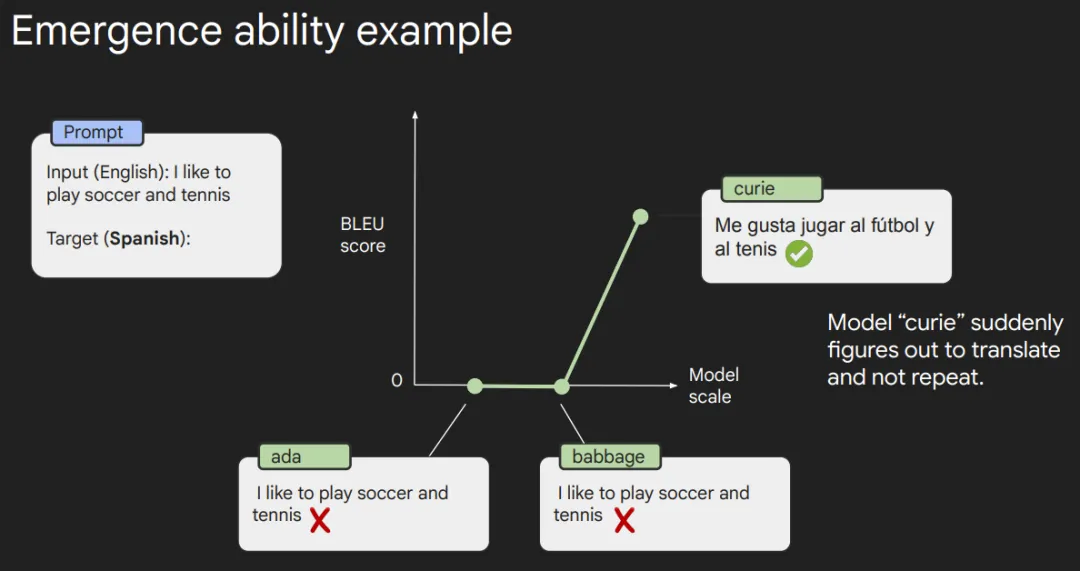

As you can see, there are two phases: below a threshold, the model performs poorly or improves only slowly; once the threshold is crossed, performance improves rapidly. Here is a simple example.

The prompt here asks the model to translate a sentence into Spanish. The figure shows the outputs of three models. ada and babbage simply repeat the input, because they don't really understand that they should translate. The largest model, curie, suddenly performs the task perfectly.

The point is that if you had trained only ada and babbage and tried to predict whether curie could do the task, you would probably conclude that it couldn't either. But it can.
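Why extrapolating from small models fails can be sketched with a hypothetical sigmoid-shaped curve over log model size. The curve shape, its threshold and sharpness, and the three model sizes are all invented for illustration; they are not the real sizes or scores of ada, babbage, or curie. A straight line through the two smaller models predicts low accuracy for the largest one, while the assumed true curve has already jumped.

```python
import math


def task_accuracy(log10_params: float, threshold: float = 9.5, sharpness: float = 6.0) -> float:
    """Hypothetical emergent curve: near 0 below the threshold, near 1 above it."""
    return 1.0 / (1.0 + math.exp(-sharpness * (log10_params - threshold)))


# Hypothetical model sizes, as log10 of parameter count.
small_size, mid_size, large_size = 8.5, 9.0, 10.0

# Linear extrapolation using only the two smaller models.
slope = (task_accuracy(mid_size) - task_accuracy(small_size)) / (mid_size - small_size)
predicted_large = task_accuracy(mid_size) + slope * (large_size - mid_size)

print(f"small={task_accuracy(small_size):.3f}, mid={task_accuracy(mid_size):.3f}")
print(f"extrapolated large={predicted_large:.3f}, actual large={task_accuracy(large_size):.3f}")
```

With these assumed numbers the extrapolation gives roughly 0.14 while the curve itself gives roughly 0.95 for the largest model.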

So we can map out the tasks we want AI to accomplish, from the most basic (recalling simple facts or writing grammatically), through moderately difficult ones such as translation, coding, and writing poetry, up to the hardest, such as writing a novel or doing research.

As the models grew, they were able to do more and more tasks; GPT-2 could only do a fraction of these tasks, GPT-3 could do more, and GPT-4 gained more capabilities, such as debugging code and writing poetry.

The question then arises: if next-word prediction works so well, can you reach AGI just by scaling it up?

My answer: maybe, but it will be very difficult, and we would need to scale up a great deal more.

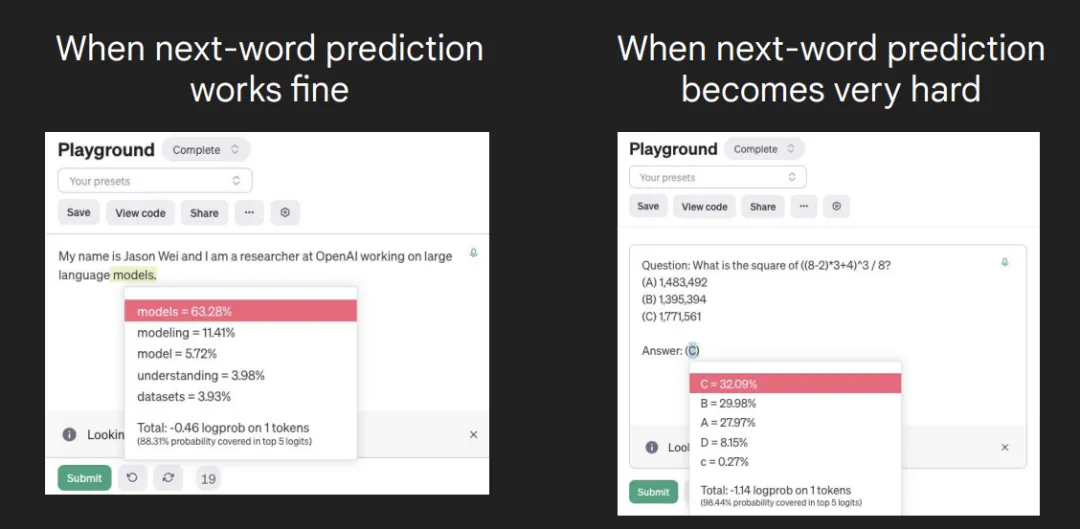

There is a fundamental bottleneck to just predicting the next word: some words are very hard to predict and require a lot of work.

For example, the left side of the figure shows an easy case for next-word prediction: it's easy to predict that the last word is "models." For the math problem on the right, it's very hard to tell whether A, B, or C is the correct answer in a single step of next-word prediction.

The key point is that tasks vary in difficulty. With plain next-word prediction, you spend the same amount of compute on very easy tasks and very hard ones.

What we really want is a small amount of computation for easy problems and a large amount of computation for difficult problems (such as getting a multiple-choice answer to a competition math question).
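A back-of-the-envelope sketch of this point, using the common approximation that generating one token costs about 2 FLOPs per parameter (attention over the context adds a length-dependent term that is ignored here). The 100B-parameter model size and the 2,000-token reasoning length are hypothetical. Answering directly, the easy and hard questions each get one answer token's worth of compute; writing out reasoning first is one way to spend far more on the hard one.

```python
def inference_flops(n_params: float, generated_tokens: int) -> float:
    """Approximate decoding compute: ~2 FLOPs per parameter per generated token.

    Ignores attention over the context, which adds a term that grows with length.
    """
    return 2.0 * n_params * generated_tokens


N_PARAMS = 1e11  # hypothetical 100B-parameter model

easy_direct = inference_flops(N_PARAMS, generated_tokens=1)  # e.g. the word "models"
hard_direct = inference_flops(N_PARAMS, generated_tokens=1)  # a single letter choice
hard_with_reasoning = inference_flops(N_PARAMS, generated_tokens=2000 + 1)  # reasoning, then the letter

print(f"easy, direct:         {easy_direct:.1e} FLOPs")
print(f"hard, direct:         {hard_direct:.1e} FLOPs")
print(f"hard, with reasoning: {hard_with_reasoning:.1e} FLOPs "
      f"({hard_with_reasoning / hard_direct:.0f}x)")
```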

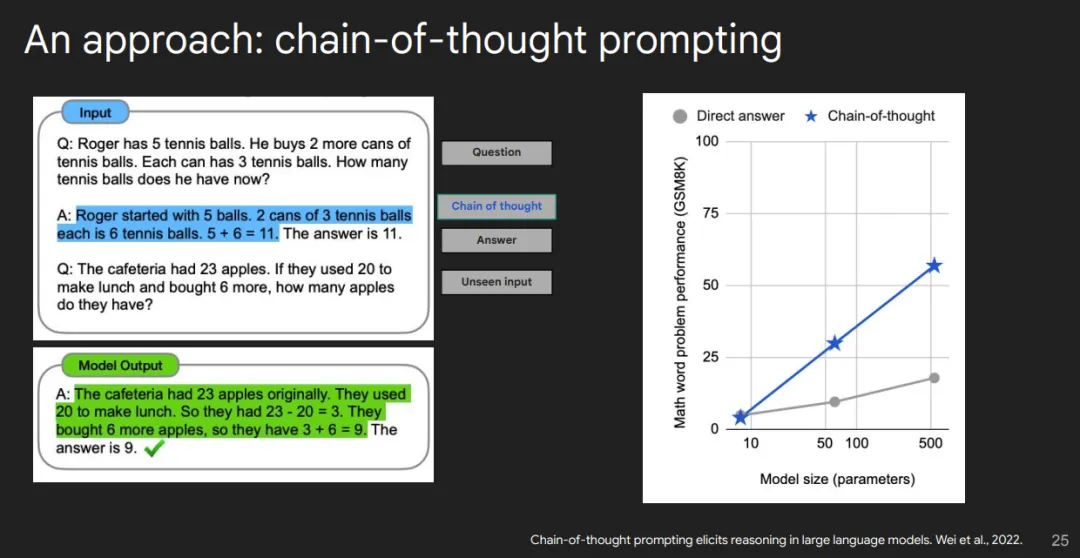

One way to address this is chain-of-thought prompting, which we have been doing for the past few years. The method is simple: prompt the language model to write out its reasoning, just as you would show your teacher how you solved a problem. The model then actually produces this chain of reasoning before giving a final answer.

The method proved quite effective. On a math word-problem benchmark, using chain of thought instead of answering directly brings large performance gains, and the gains grow as the model gets larger.
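A minimal sketch of few-shot chain-of-thought prompting. The exemplar and the test question are the tennis-ball and cafeteria problems from the chain-of-thought paper; the helper names `build_prompt` and `extract_answer`, and the convention of parsing "The answer is N", are assumptions for illustration. No model is called: the completion is hand-written to stand in for a model's output.

```python
import re

# Exemplar whose answer shows its work, so the model imitates the reasoning format.
COT_EXEMPLAR = (
    "Q: Roger has 5 tennis balls. He buys 2 more cans of tennis balls. "
    "Each can has 3 tennis balls. How many tennis balls does he have now?\n"
    "A: Roger started with 5 balls. 2 cans of 3 tennis balls each is 6 tennis balls. "
    "5 + 6 = 11. The answer is 11.\n\n"
)

# Same exemplar with the reasoning removed (standard, direct-answer prompting).
DIRECT_EXEMPLAR = (
    "Q: Roger has 5 tennis balls. He buys 2 more cans of tennis balls. "
    "Each can has 3 tennis balls. How many tennis balls does he have now?\n"
    "A: The answer is 11.\n\n"
)


def build_prompt(question: str, chain_of_thought: bool = True) -> str:
    """Prepend one exemplar so the model copies its answer format."""
    exemplar = COT_EXEMPLAR if chain_of_thought else DIRECT_EXEMPLAR
    return f"{exemplar}Q: {question}\nA:"


def extract_answer(completion: str):
    """Return the integer after 'The answer is', or None if it is missing."""
    match = re.search(r"The answer is (-?\d+)", completion)
    return int(match.group(1)) if match else None


question = ("The cafeteria had 23 apples. If they used 20 to make lunch "
            "and bought 6 more, how many apples do they have?")
prompt = build_prompt(question)

# Hand-written completion standing in for a model's output.
completion = ("The cafeteria had 23 apples originally. They used 20 to make lunch. "
              "So they had 23 - 20 = 3. They bought 6 more apples, so they have 3 + 6 = 9. "
              "The answer is 9.")
print(prompt)
print(extract_answer(completion))
```

The only difference between the two prompt styles is whether the exemplar's answer contains intermediate steps; the model is then free to spend more tokens on harder questions.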

Another way to see it comes from Daniel Kahneman's book Thinking, Fast and Slow, which distinguishes System 1 and System 2 thinking.

Next-word prediction is like System 1 thinking: automatic, effortless, and intuitive, like recalling a basic fact or recognizing a face.

Chain of thought is like System 2 thinking: conscious, effortful, and controlled.

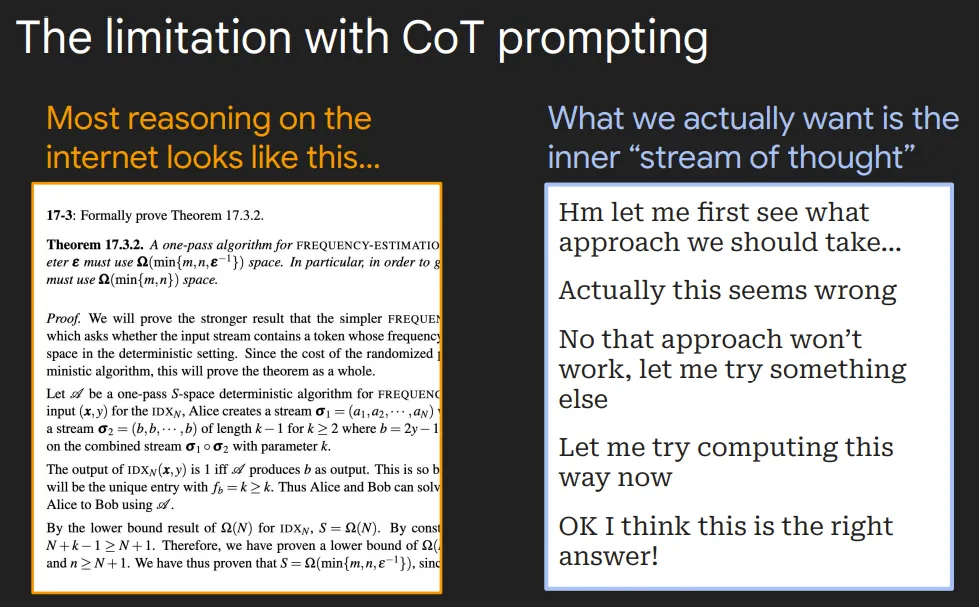

However, chain-of-thought prompting has a key problem. When you train a model mostly on Internet data, the reasoning it sees mostly looks like the example on the left of the figure. This is a solution from one of my college math assignments. If you think about where it came from, I probably spent an hour working on the problem on paper and then 10 minutes transcribing it into LaTeX. You can see that the answer is stated at the beginning, followed by the proof. So it is really an after-the-fact summary of the actual internal reasoning process.

What we really want from chain of thought is for the model to reason like an inner monologue. As shown on the right, we want the model to say things like, "Let's see which approach to try first; I'll try this; actually, that's not right, let me try something else..."

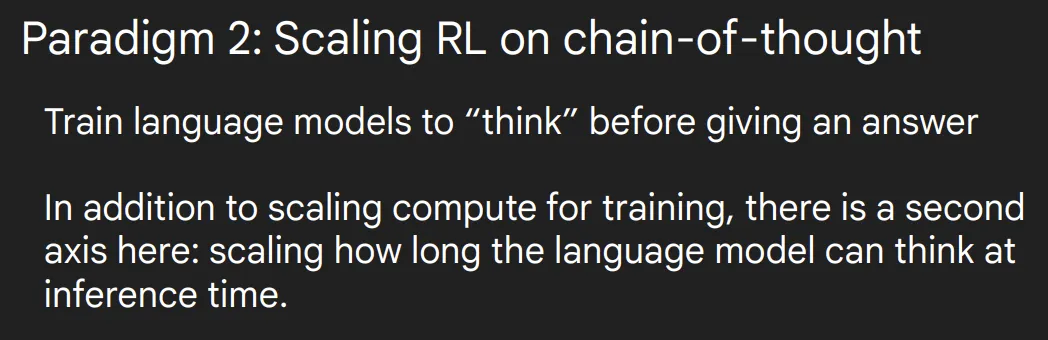

This brings us to the second paradigm: scaling reinforcement learning on chain of thought. The idea is to train the language model to think before it answers.

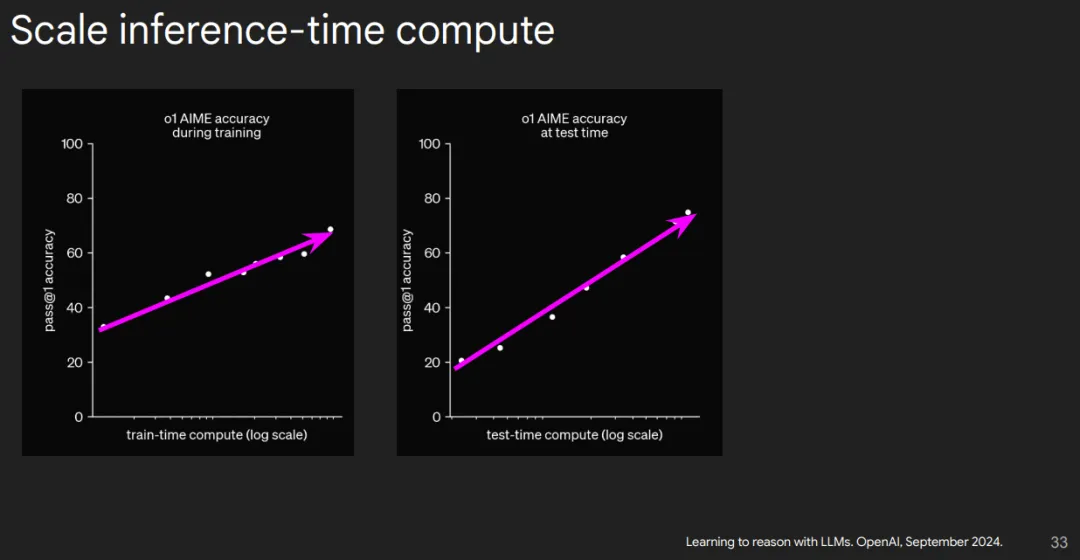

Researchers found that besides scaling training compute, which has long been the dominant practice, there is another axis: scaling the amount of time a language model spends thinking at inference time.

With this in mind, OpenAI built o1. The accompanying blog post describes the approach and is worth reading; here are a few key points.

This blog post shows some of the chains of thought given by o1, from which we can learn a lot.

As shown above, o1 is solving a chemistry problem. First it says, "Let's understand what the problem is," making sure it reads the problem correctly. Then it works out which ions are present and which of them affect the pH. It finds that both weak acids and weak bases are involved, so it switches strategy and uses Ka and Kb values to calculate the pH. It then backtracks and decides it is better to use Kb. Next it considers which formula is correct and how to carry out the calculation. Finally, it arrives at the answer.

Another class of problems where chain of thought helps a lot is those with an asymmetry of verification, where it is much easier to verify a solution than to generate one: crossword puzzles, sudoku, or writing a poem that satisfies certain constraints. The figure below shows a crossword example.

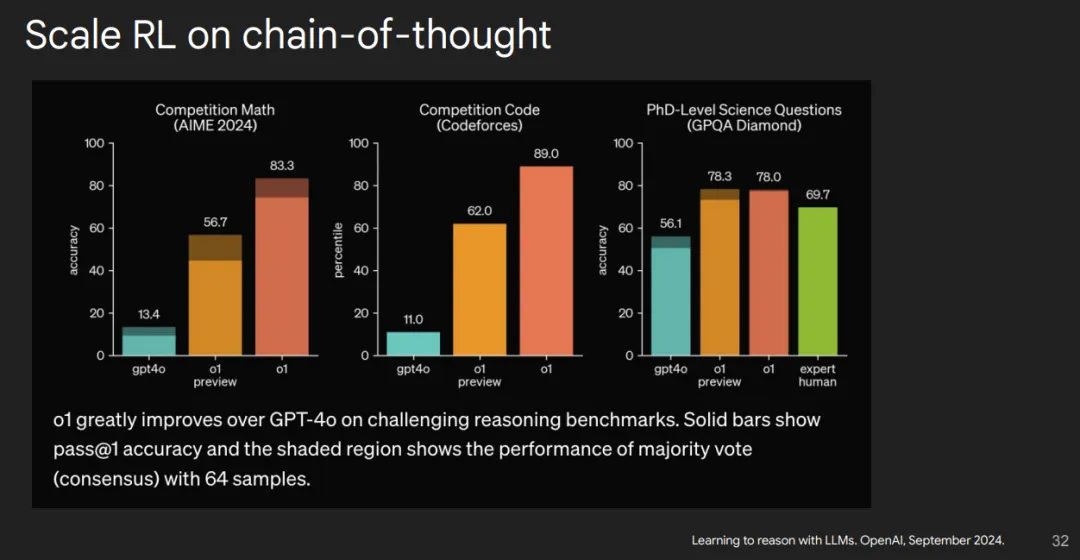
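Sudoku makes the asymmetry of verification easy to see: checking a filled grid takes a few passes over rows, columns, and boxes, while producing a solution generally requires search. The checker below is a minimal sketch for any n x n grid whose side is a perfect square; the function name and the 4x4 example grid are made up for illustration.

```python
import math


def is_valid_sudoku_solution(grid: list[list[int]]) -> bool:
    """Return True if `grid` is a completely and correctly filled Sudoku.

    Works for n x n grids where n is a perfect square (4x4, 9x9, ...).
    """
    n = len(grid)
    box = math.isqrt(n)
    if box * box != n or any(len(row) != n for row in grid):
        return False
    expected = set(range(1, n + 1))

    rows = [set(row) for row in grid]
    cols = [{grid[r][c] for r in range(n)} for c in range(n)]
    boxes = [
        {grid[r][c] for r in range(br, br + box) for c in range(bc, bc + box)}
        for br in range(0, n, box)
        for bc in range(0, n, box)
    ]
    # Each group has n cells, so matching {1..n} means every digit appears exactly once.
    return all(group == expected for group in rows + cols + boxes)


solved = [
    [1, 2, 3, 4],
    [3, 4, 1, 2],
    [2, 1, 4, 3],
    [4, 3, 2, 1],
]
broken = [row[:] for row in solved]
broken[0][0], broken[0][1] = broken[0][1], broken[0][0]  # swap two cells in the first row

print(is_valid_sudoku_solution(solved))
print(is_valid_sudoku_solution(broken))
```

The check runs in time proportional to the number of cells, whereas a solver for a partially filled grid has to explore candidate placements and backtrack.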

Chain of thought is also useful for problems that simply require a lot of thinking, such as competition math and competitive programming. The effect is dramatic: GPT-4o reaches only around the ten-percent level on competition math and competitive programming, while o1-preview and o1 can solve most of the problems. That is the difference between "basically can't do the task" and "can solve most of it."

The o1 blog post also plots pass@1 accuracy on a competition math dataset. As training compute increases, pass@1 accuracy improves. The second plot shows that giving the model more time to think at test time also yields steady gains on the math benchmark.
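For reference, pass@1 is the fraction of problems solved on the first attempt. When several samples are drawn per problem, a common way to estimate pass@1 (and pass@k in general) is the unbiased estimator introduced in OpenAI's Codex paper (Chen et al., 2021), sketched below. This is a general-purpose sketch, not a claim about how the o1 figures were computed, and the per-problem sample counts are hypothetical.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k estimate from n samples, of which c are correct.

    Equals 1 - C(n - c, k) / C(n, k): the probability that a random
    subset of k samples contains at least one correct one.
    """
    if n - c < k:
        return 1.0  # every size-k subset contains a correct sample
    return 1.0 - comb(n - c, k) / comb(n, k)


# Hypothetical results: (samples drawn, samples correct) for three problems.
results = [(10, 3), (10, 0), (10, 10)]
for k in (1, 5):
    score = sum(pass_at_k(n, c, k) for n, c in results) / len(results)
    print(f"pass@{k}: {score:.3f}")
```

For k = 1 the formula reduces to c / n, so pass@1 is just the average per-problem success rate.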

Now I want to talk about why this paradigm is so special. I think for many people, the reason we're so excited about AI is that we hope that one day AI will help us solve the most challenging problems facing humanity, like healthcare, disease, the environment, and so on.

So ideally, the way forward looks like this: you pose a very challenging problem (like writing a research paper on how to make AI), and the language model spends a large amount of inference compute trying to solve it. Maybe you ask a question, thousands of GPUs run for a month, and eventually it returns a complete answer, for example a whole body of research results on how to make AI.

Extrapolating forward: today AI can think for seconds or minutes, but eventually we want it to think for hours, days, weeks, or even months to help us solve our most challenging problems.

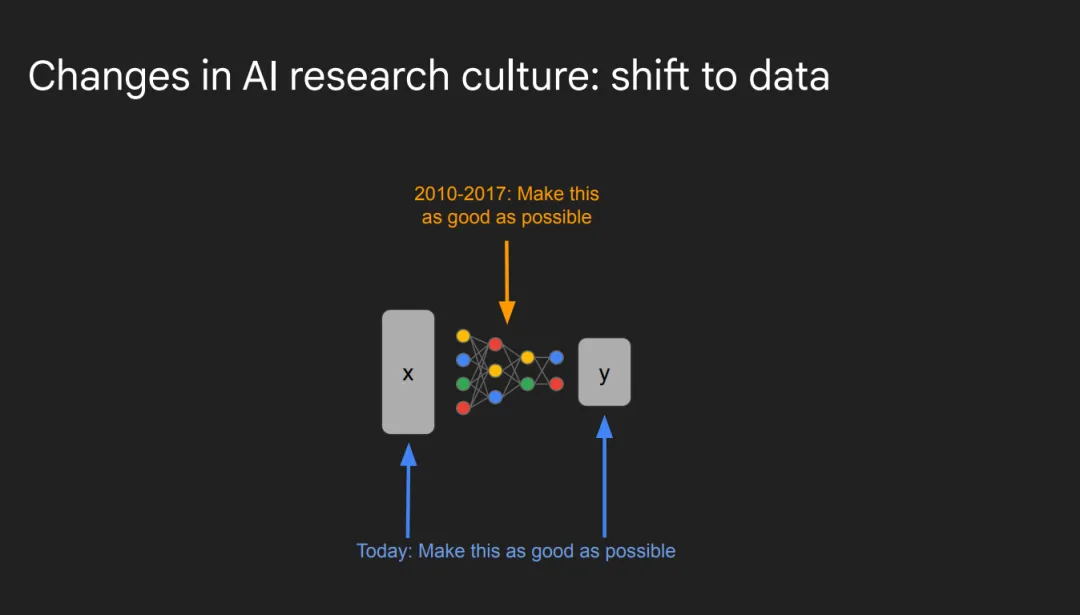

Next, I want to talk about how scaling has changed the culture of AI research. One important shift is the emphasis on data.

In the past, from 2010 to 2017 or even earlier, the goal was to get a neural network to learn a given relationship between X and Y as well as possible, that is, to push for the best performance on fixed data. Now the goal is to make X and Y themselves as good as possible, because we already have an effective way to learn.

In short, AI's potential lies in using vast amounts of compute and time to help solve complex problems, and research culture has shifted from optimizing models alone to optimizing the data itself. This shift is changing how progress in AI gets made.

This example illustrates the shift in focus of modern machine learning research. In the past, researchers typically tried to train the best possible model on an existing dataset such as ImageNet, chasing higher scores such as accuracy. The goal was to optimize the model itself, not to improve the dataset, for example by making ImageNet ten times larger and retraining.

Today, by contrast, model performance is often improved significantly by improving the quality or domain relevance of the data (X and Y). A case in point is Google's Minerva, released two years ago. Instead of designing a new model from scratch, the Minerva team started from an existing language model and substantially improved its math performance by continuing to train it on a large amount of math-related data, such as papers from arXiv.

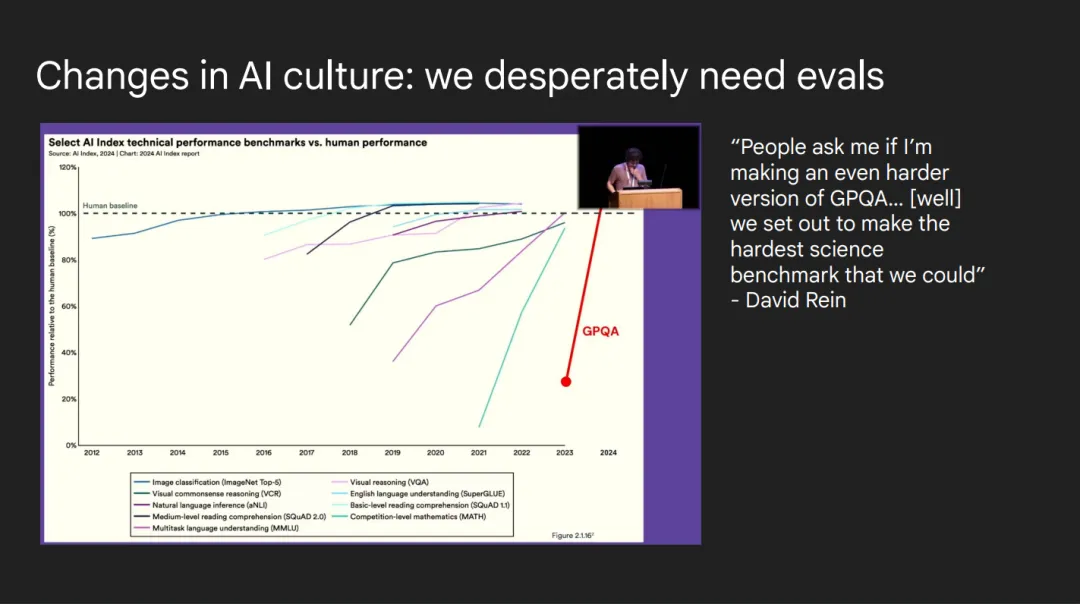

Another shift in AI culture is that evaluation is lagging: we don't really have benchmarks that capture the limits of language models' capabilities. This is a chart I took from a talk by David Ryan. It shows how quickly benchmarks become "saturated." About eight years ago, a benchmark might take several years to saturate. Some recent challenging benchmarks, such as question answering (QA), may saturate in about 0.1 years (roughly a month). When David was asked whether he would design a harder benchmark, he said he was already working on one. That sounds very interesting.

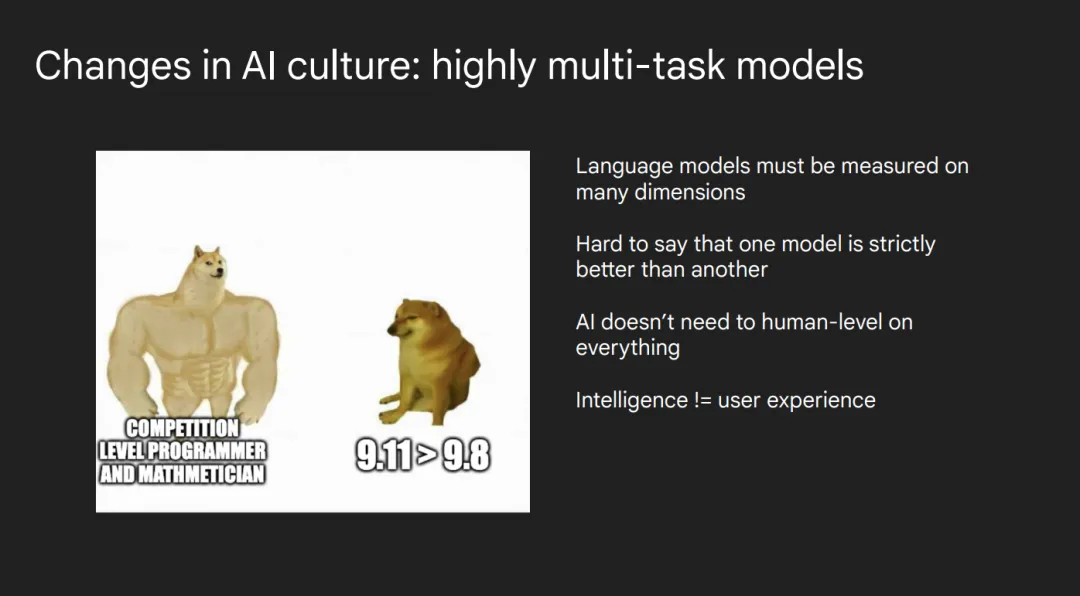

Another change in AI culture is the move toward highly multi-task models. In the past, each NLP task required its own model. Now a single model tries to do many different tasks. This leads to some strange phenomena: a model may be a competition-level programmer and mathematician, yet when you ask whether 9.11 or 9.8 is larger, it says 9.11.

The challenge is that we need to evaluate language models along many dimensions. With so many benchmarks and evaluation methods, and such a broad range of applications, it is hard to say that one model is strictly better than another; usually no model beats the others on every dimension.

One argument I sometimes hear is that AI can't do some particular thing, so it isn't useful. My view is that AI doesn't need to match humans at everything. It only needs to do well on a few use cases to be very useful.

Finally, I would like to say that intelligence and user experience are two dimensions that can be improved separately. Often, people will try to improve the language model by making it better at things like math and coding, but that doesn't mean you'll get a model with a better user experience.

Perhaps the last cultural shift is toward larger teams. In 2015, for example, two people could write a groundbreaking paper that might even become one of the most cited. Today, that takes a team. The contributor list for Google's Gemini, for example, fills an entire page.

Now let me talk about the direction that artificial intelligence will continue to develop in the future.

One of the directions that I'm really excited about is the use of AI in science and medicine. I think, as humans, we're pretty good at research, but we have a lot of limitations.

For example, we can't remember all the information on the Internet, we get tired, we get distracted and so on. I think AI does have a lot of potential for scientific and medical innovation because it can learn almost anything and it doesn't get tired and it can work long hours, right?

Another direction is more factual AI. Today, models like ChatGPT still hallucinate (generate inaccurate or made-up content) more than we'd like. Ultimately, I think it's possible to build a model that almost never hallucinates, is very good at citing sources, and is very precise.

Overall, the potential for AI in science, medicine, and improving factual accuracy is huge, and these areas will continue to drive the development of AI technology.

I think we're also moving toward multimodal AI. Text is a very good medium for learning because it is a highly compressed representation of our world, but we will move toward AI that is more integrated into the world, such as Sora and Advanced Voice Mode.

I think tool use will also become another important direction.

At the moment, AI is more like a chat assistant, you can ask it questions and get answers. But I think ultimately we want to get to a state where AI is able to perform actions on behalf of the user and is able to proactively provide services to the user.

Finally, I think we'll see much more real-world deployment of AI. There is always a lag between a technology's research phase and its actual deployment. Waymo, for example, already works well in complex driving environments like San Francisco, yet it has not been rolled out to most of the world. Similarly, I think AI is already good enough for things like ordering food at a restaurant, but it isn't widely adopted there yet.

OK, let me go back to the first chart, comparing 2019 and 2024, and add a forecast for five years from now. Five years ago AI was very limited; today it is very powerful. I'm really excited about the next five years of AI and encourage everyone to think about them.

Finally, I want to end with this: in Finding Nemo, when the characters get stuck and don't know what to do next, Dory says, "Just keep swimming." My message is: just keep scaling.
