
#News ·2025-01-06

Pre-trained language models encode a large amount of information in their parameters, and as they grow in size they recall and use that information more accurately. For dense deep neural networks, which encode information mainly in the weights of linear matrix transforms, growing the parameter count directly increases compute and energy requirements. An important subset of what language models need to learn is simple associations. While a feedforward network can in principle learn any function (given sufficient scale), an associative memory is a more efficient way to store such associations.

Memory layers use a trainable key-value lookup mechanism to add extra parameters to a model without increasing FLOPs. Conceptually, sparsely activated memory layers complement compute-heavy dense feedforward layers, providing dedicated capacity to store and retrieve information cheaply.

A recent study from Meta takes memory layers beyond proof of concept, demonstrating their utility when scaling large language models (LLMs).

On downstream tasks, language models augmented with the improved memory layer outperform dense models with more than twice the compute budget, as well as mixture-of-experts (MoE) models matched for both compute and parameters.

This work shows that a sufficiently improved and scaled memory layer can augment dense neural networks and deliver large performance gains. This is done by replacing the feedforward network (FFN) of one or more transformer layers with a memory layer, leaving the other layers unchanged. The gains are consistent across base model sizes (from 134 million to 8 billion parameters) and memory capacities (up to 128 billion parameters), which represents a leap of two orders of magnitude in memory capacity.

The trainable memory layer is similar to the attention mechanism. Given a query $q$, a set of keys $K$, and values $V$, the output is a soft combination of the values, weighted according to the similarity between $q$ and the corresponding keys.

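In practice, a memory layer differs from an attention layer in two ways. First, its keys and values are trainable parameters rather than activations. Second, it typically holds far more keys and values, which makes sparse lookup a necessity.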

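The study scales the number of key-value pairs into the millions. At that scale, only the top-k most similar keys and their corresponding values contribute to the output. A simple memory layer can be described by the following equation:

$$I = \mathrm{SelectTopkIndices}(Kq), \qquad s = \mathrm{Softmax}(K_I q), \qquad y = s V_I \tag{1}$$

where $I$ is a set of indices, $s$ holds the $k$ softmax weights, $K_I$ and $V_I$ are the selected rows of the key matrix $K$ and the value matrix $V$, and $y$ is the output.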
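The top-k lookup described above can be sketched in a few lines of plain Python. This is a minimal illustration rather than the study's implementation: the function names (`memory_lookup`, `softmax`, `dot`) and the toy keys and values are assumptions made for the example, and dot-product similarity is assumed as the scoring function.

```python
import math

def softmax(scores):
    # Subtract the max for numerical stability before exponentiating.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def memory_lookup(q, keys, values, k):
    """Sparse key-value lookup: softmax over the k best-matching keys only."""
    scores = [dot(key, q) for key in keys]
    # Indices of the k highest-scoring keys (the set I).
    top = sorted(range(len(keys)), key=lambda i: scores[i], reverse=True)[:k]
    weights = softmax([scores[i] for i in top])
    dim = len(values[0])
    # Weighted sum of the selected value rows.
    y = [sum(w * values[i][d] for w, i in zip(weights, top)) for d in range(dim)]
    return top, weights, y

# Toy memory with 4 key-value pairs (hypothetical numbers).
keys = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [0.7, 0.7]]
values = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0], [1.0, 1.0, 1.0]]
top, weights, y = memory_lookup([1.0, 0.2], keys, values, k=2)
```

Only the two selected value rows contribute to `y`; the remaining rows are never read, which is what keeps the cost independent of the total number of values once the indices are known.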

One bottleneck in scaling memory layers is the query-key retrieval mechanism. A naive nearest-neighbor search compares the query against every key, which quickly becomes infeasible for large memories. Approximate vector similarity techniques could be used, but they are hard to integrate when the keys are continuously trained and must be re-indexed. Instead, the paper uses trainable product-quantized keys.
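To make the idea of product keys concrete, here is a minimal sketch assuming the usual product-key construction: the full key set is the Cartesian product of two much smaller sub-key sets, the query is split into two halves, and each half is scored against its own sub-keys. The function names and the random toy data are assumptions for illustration, and the brute-force function exists only to check the result.

```python
import itertools
import random

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def top_k(scores, k):
    """Return (index, score) pairs for the k largest scores."""
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    return [(i, scores[i]) for i in order[:k]]

def product_key_top_k(q, sub_keys_1, sub_keys_2, k):
    half = len(q) // 2
    q1, q2 = q[:half], q[half:]
    best_1 = top_k([dot(c, q1) for c in sub_keys_1], k)
    best_2 = top_k([dot(c, q2) for c in sub_keys_2], k)
    # Only k*k candidate pairs are scored, not every full key.
    candidates = [
        (i * len(sub_keys_2) + j, s1 + s2)
        for (i, s1), (j, s2) in itertools.product(best_1, best_2)
    ]
    candidates.sort(key=lambda pair: pair[1], reverse=True)
    return candidates[:k]

def brute_force_top_k(q, sub_keys_1, sub_keys_2, k):
    # Materialize all full keys (concatenated halves) and score each one.
    full_keys = [a + b for a in sub_keys_1 for b in sub_keys_2]
    return top_k([dot(key, q) for key in full_keys], k)

rng = random.Random(0)
sub_keys_1 = [[rng.gauss(0, 1) for _ in range(4)] for _ in range(16)]
sub_keys_2 = [[rng.gauss(0, 1) for _ in range(4)] for _ in range(16)]
query = [rng.gauss(0, 1) for _ in range(8)]

fast = product_key_top_k(query, sub_keys_1, sub_keys_2, k=4)
slow = brute_force_top_k(query, sub_keys_1, sub_keys_2, k=4)
```

With 16 sub-keys per half, the sketch addresses 256 full keys while scoring only 32 sub-keys plus 16 candidate pairs. Because a full key's score is the sum of its two half scores, any key in the overall top-k must have each half in the top-k of its own sub-key set, so the shortcut returns the same keys as the exhaustive search.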

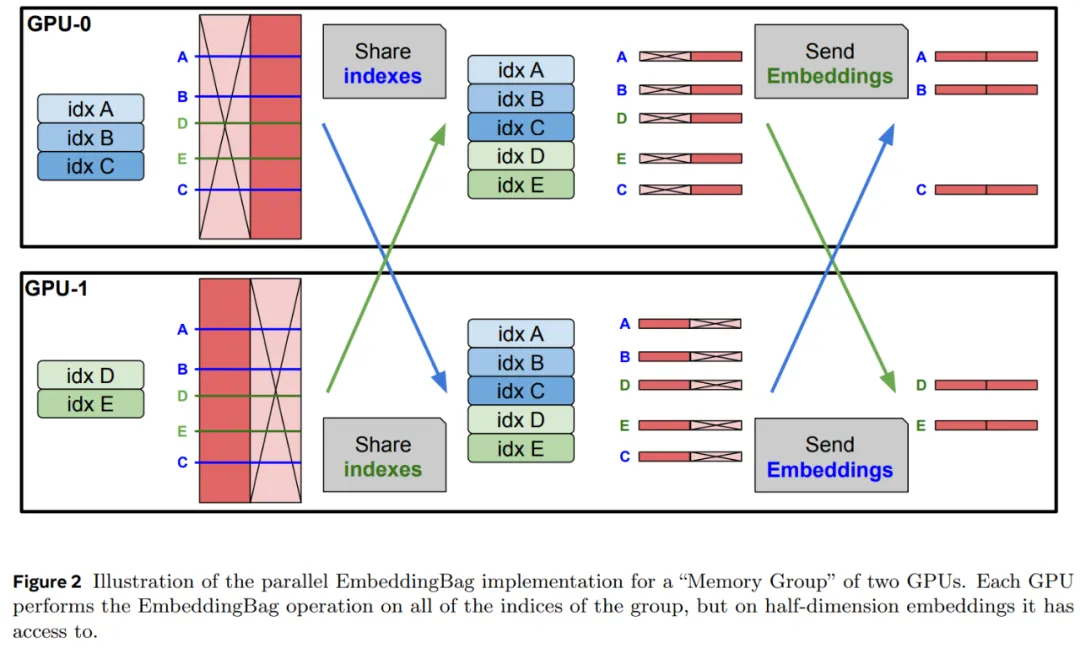

Parallel memory. Memory layers are memory-intensive, mainly because of the large number of trainable parameters and the associated optimizer states. The study parallelizes the embedding lookup and aggregation across multiple GPUs, with the memory values sharded along the embedding dimension. At each step, the indices are gathered from the process group, and each worker performs a lookup and aggregates the portion of the embeddings held in its own shard. Each worker then gathers the partial embeddings corresponding to its own portion of the indices. This process is shown in Figure 2.
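The effect of sharding the values along the embedding dimension can be illustrated with a single-process simulation. This sketch involves no real GPUs or communication, and the helper names (`shard_columns`, `partial_output`) and the toy numbers are assumptions. It shows only that each worker can aggregate its own column slice independently and that concatenating the slices reproduces the unsharded result.

```python
def shard_columns(values, num_workers):
    """Split each value row into num_workers contiguous column slices."""
    dim = len(values[0])
    width = dim // num_workers  # assumes dim is divisible by num_workers
    return [
        [row[w * width:(w + 1) * width] for row in values]
        for w in range(num_workers)
    ]

def partial_output(shard, indices, weights):
    """What one worker computes: a weighted sum over its own column slice."""
    width = len(shard[0])
    return [
        sum(wt * shard[i][d] for wt, i in zip(weights, indices))
        for d in range(width)
    ]

# Toy value table: 6 rows, embedding dimension 4 (hypothetical numbers).
values = [[float(r * 10 + c) for c in range(4)] for r in range(6)]
indices, weights = [1, 4], [0.25, 0.75]

shards = shard_columns(values, num_workers=2)
sharded = [x for shard in shards for x in partial_output(shard, indices, weights)]
unsharded = partial_output(values, indices, weights)
```

In a real multi-GPU setup the final concatenation would be replaced by a collective exchange of partial embeddings between workers; that step is omitted here.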

Shared memory. Deep networks encode information at different levels of abstraction in different layers. Adding memory to multiple layers may help the model use its memory in more general ways. In contrast to previous work, the study uses a single pool of memory parameters shared across all memory layers, which keeps the parameter count the same while maximizing parameter sharing.

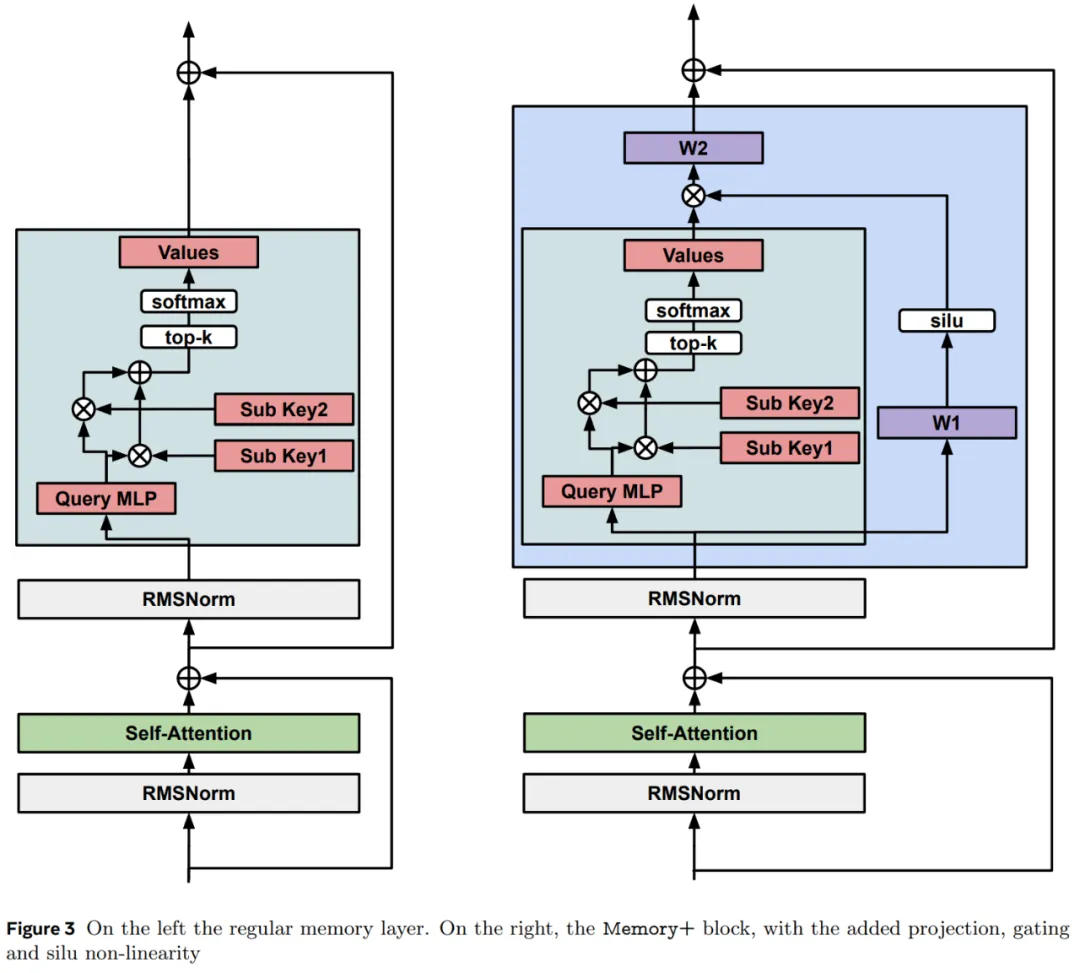

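The study improves the training performance of the memory layer by introducing input-dependent gating with a SiLU nonlinearity. The output in equation (1) becomes:

$$\text{output} = \left(y \odot \mathrm{silu}(x^\top W_1)\right)^\top W_2$$

where $\mathrm{silu}(x) = x \, \mathrm{sigmoid}(x)$, $\odot$ denotes element-wise multiplication, $x$ is the input to the memory layer, and $W_1$, $W_2$ are trainable weight matrices (see Figure 3).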
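The gating step can be sketched as follows, assuming the gate is computed as silu(x^T W1), multiplied element-wise into the memory output y, and then projected by W2. The matrix names `w1` and `w2`, the helper `matvec_t`, and the identity matrices used in the example are illustrative assumptions.

```python
import math

def silu(v):
    # silu(x) = x * sigmoid(x) = x / (1 + exp(-x))
    return v / (1.0 + math.exp(-v))

def matvec_t(x, w):
    """Compute x^T W for a vector x and a matrix W given as a list of rows."""
    return [sum(x[i] * w[i][j] for i in range(len(x))) for j in range(len(w[0]))]

def gated_memory_output(y, x, w1, w2):
    # The gate depends on the layer input x, not on the retrieved values.
    gate = [silu(g) for g in matvec_t(x, w1)]
    gated = [a * b for a, b in zip(y, gate)]
    return matvec_t(gated, w2)

identity = [[1.0, 0.0], [0.0, 1.0]]
x = [1.0, -1.0]   # layer input
y = [2.0, 3.0]    # output of the memory lookup
out = gated_memory_output(y, x, identity, identity)
```

With identity projections the gate reduces to `silu` applied to each input coordinate, so the first memory output is scaled by about 0.73 and the second by about -0.27.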

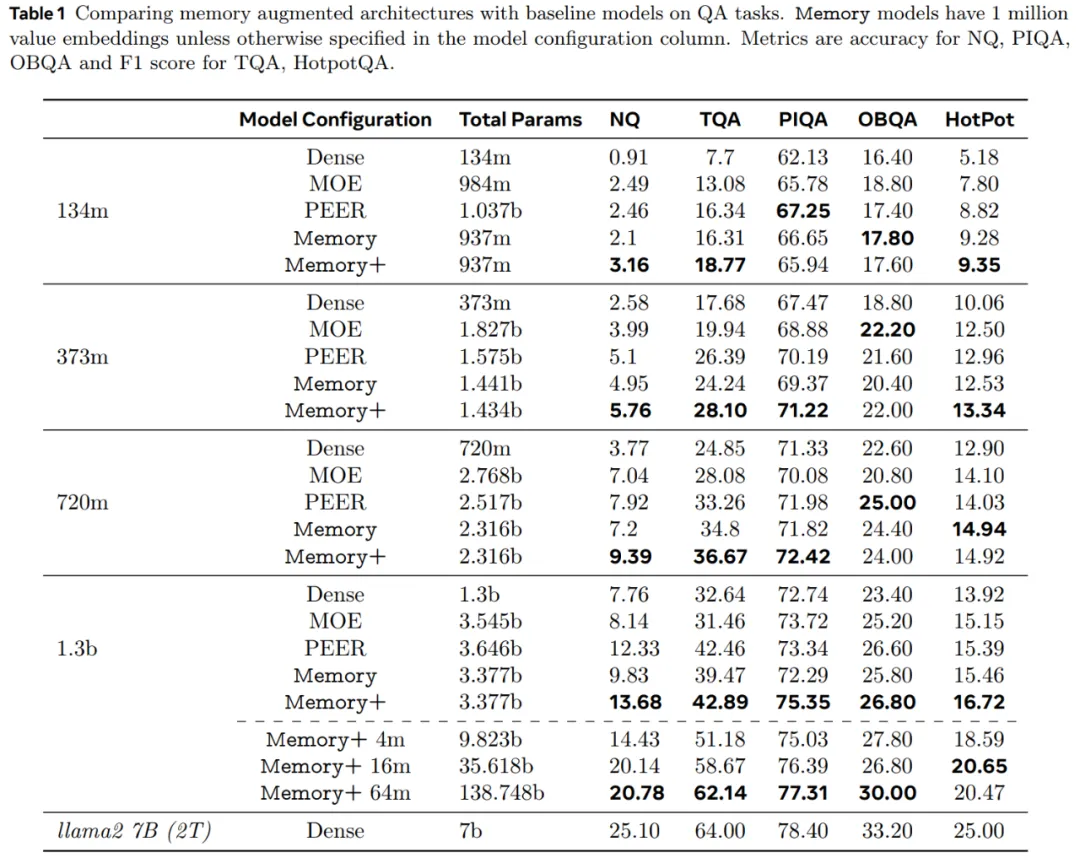

First, the study fixes the memory size and compares the memory models against dense baselines and against MoE and PEER models with roughly matched parameter counts.

Table 1 shows that the Memory model improves substantially over the dense baseline, generally matching dense models with twice as many parameters on QA tasks.

Memory+ (which has 3 memory layers) improves further on Memory, with performance typically falling between that of dense models using 2 and 4 times more compute.

For the same number of parameters, the PEER architecture performs similarly to the Memory model but lags behind Memory+. The MoE models fall well short of the Memory variants.

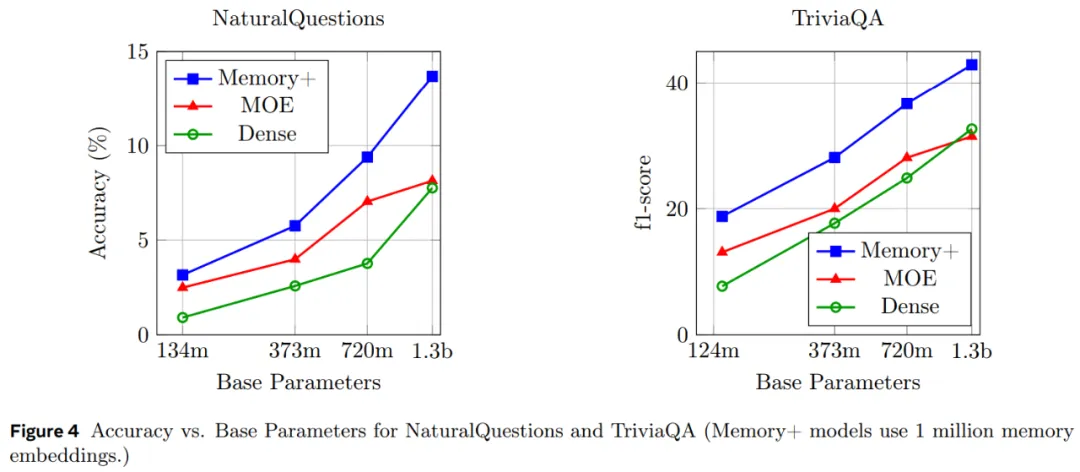

Figure 4 shows how the performance of Memory, MoE, and dense models on QA tasks scales across model sizes.

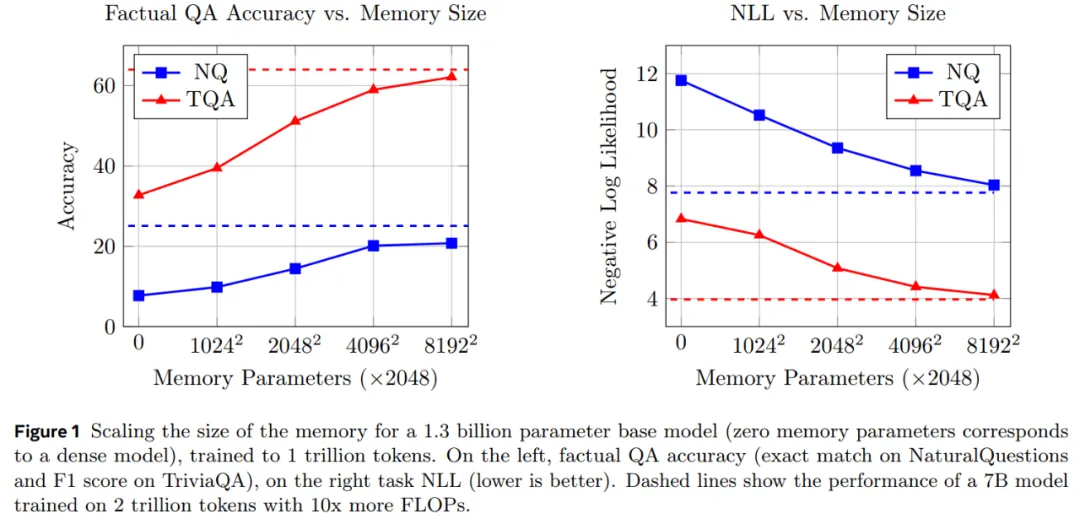

Figure 1 shows that the factual QA performance of the Memory+ model increases as the memory size grows.

At 64 million keys (128 billion memory parameters), the 1.3B Memory model approaches the performance of the Llama 2 7B model, which uses more than 10 times the FLOPs (see Table 2).

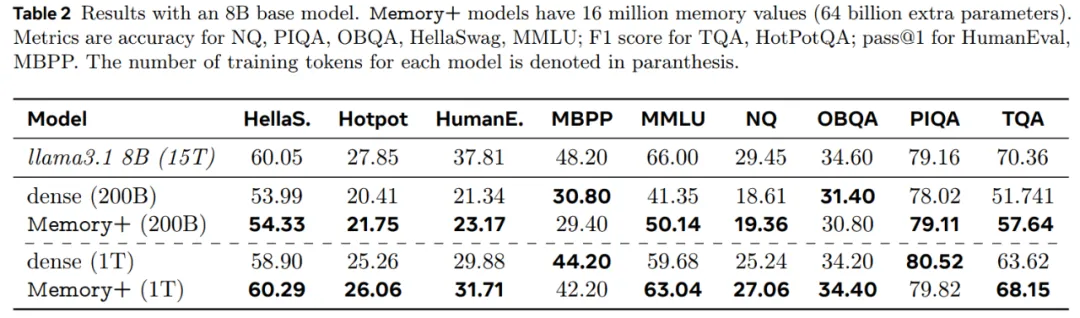

Finally, the study scales the Memory+ model to an 8B base model with 4096^2 memory values (64B memory parameters). Table 2 reports the results: the memory-augmented model significantly outperforms the dense baseline.
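The memory parameter counts quoted above can be sanity-checked with simple arithmetic. The value dimensions used here (2048 for the 1.3B model, 4096 for the 8B model) and the reading of "64 million keys" as 8192^2 are assumptions, chosen because they reproduce the reported figures when counted in binary units (2^30).

```python
def memory_parameters(num_values, value_dim):
    """Number of parameters in the value table: one vector per key."""
    return num_values * value_dim

GI = 2 ** 30  # binary "billion"

# Assumed: 8192^2 keys (about 64 million) with value dimension 2048.
small_model_memory = memory_parameters(8192 ** 2, 2048)

# 4096^2 values as stated in the text; value dimension 4096 is assumed.
large_model_memory = memory_parameters(4096 ** 2, 4096)

print(small_model_memory // GI)  # 128
print(large_model_memory // GI)  # 64
```

Under these assumptions, nearly all of the memory parameters sit in the value table, which is why the count scales with the number of keys times the value dimension.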
