#News ·2025-01-08

Edit | Yi Feng

Just now, Microsoft CEO Nadella gave his first speech of the New Year in India!

As a big kickoff to the year, the speech was packed.

Nadella said, "In a way, for me, Microsoft has always been focused on two things: We're a platform company and we're a partner company. Even in the age of AI, that's not going to change."

The presentation, given in Bangalore, India, focused on Microsoft's platform strategy for the AI era.

Talking about the great changes brought about by AI, Nadella also recalled with emotion the influence of Bill Gates on Microsoft. "Whenever we talk about platform change, we have to understand the fundamental forces driving it. Looking back over my 35 years in technology, that foundational force has always been Moore's Law. I often recall that Bill would convene us every year to look at Moore's Law trends and storage technology developments and simply say, 'Fill it with software.' That was the only instruction for the entire company. And that is still true today."

Without further ado, here is an edited transcript of the speech. Enjoy:

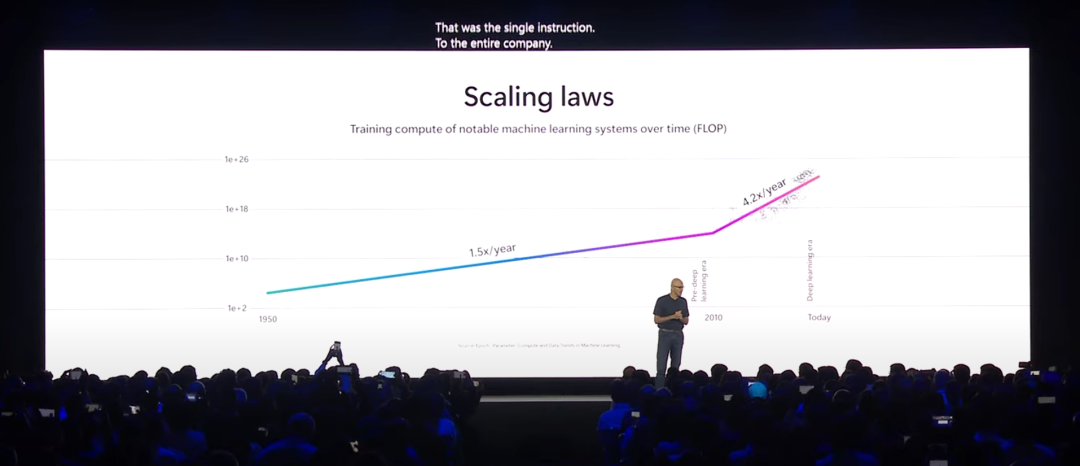

When we talk about the scaling laws that underpin the development of AI, Moore's Law is once again in play.

This started with deep neural networks (DNNs) in 2010. Later, GPUs drove the trend again, especially with transformer models, as the efficiency of data-parallel processing increased. Data capacity has gone from doubling every 18 months to doubling every 6 months; such is the power of scaling laws.
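To make the difference between the two doubling periods concrete, here is a small arithmetic sketch. The three-year horizon and the function name `growth_factor` are assumptions chosen only for illustration; they are not figures from the speech.

```python
def growth_factor(months: float, doubling_period_months: float) -> float:
    """Return how many times a quantity multiplies over `months`
    if it doubles once every `doubling_period_months`."""
    return 2 ** (months / doubling_period_months)


horizon_months = 36  # illustrative horizon (three years), an assumption

slow = growth_factor(horizon_months, 18)  # two doublings
fast = growth_factor(horizon_months, 6)   # six doublings

print(f"Doubling every 18 months: {slow:.0f}x over {horizon_months} months")
print(f"Doubling every 6 months:  {fast:.0f}x over {horizon_months} months")
print(f"Ratio between the two:    {fast / slow:.0f}x")
```

Over the same three years, the 18-month cadence gives two doublings and the 6-month cadence gives six, so the gap between them compounds rather than staying fixed.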

Now, of course, there is an ongoing debate about whether the scaling laws for pre-training can continue. We firmly believe that these scaling laws are still valid, but as the size of the data, the number of parameters, and the systems problems grow, so do the challenges.

More interestingly, the scaling laws for inference-time, or test-time, compute are becoming increasingly important. To some extent, pre-training has a sampling step, and it's about how to use the sampling step more effectively. What we're really excited about right now is the opportunity to take this ability to the next level, especially in reasoning.

Now, there are three key capabilities that are changing software interfaces:

The first is multimodal capability. I recently set the "action button" on my iPhone to call Copilot, and now I can confidently speak to it in Hyderabadi dialect or Urdu and it understands me, like I'm talking to my high school friends. This simple and familiar interface will change all software categories.

The second is planning and reasoning. For example, GitHub Copilot Workspace can plan and execute multi-step processes.

The third is extended memory, tool usage, and permission management outside the model. The focus for developers over the next 12 months will be on how to make the model aware of the tools available, not just function calls, but making sure it understands permissions and has a long-term memory. This will help us create a rich ecosystem of intelligent Agents.

When we think about Agents, it involves bringing together multimodal capabilities, planning and reasoning, memory, and the use of tools, especially authorization. This way, we can start building individual Agents, team Agents, enterprise Agents, and cross-enterprise Agents. This "intelligent" world is the direction we look forward to building together.
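The combination described above (tools the model knows about, permissions, and memory) can be sketched in a few lines. This is a minimal illustration under stated assumptions, not how Copilot or any Microsoft product implements it: the `Tool` and `Agent` classes, the permission strings, and the stub tools are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Set


@dataclass
class Tool:
    """A hypothetical tool description an agent could expose to a model."""
    name: str
    description: str           # what the model is told the tool does
    required_permission: str   # permission the caller must hold
    run: Callable[[str], str]  # stub implementation


@dataclass
class Agent:
    """Hypothetical agent: tool registry + granted permissions + memory."""
    tools: Dict[str, Tool]
    granted: Set[str]
    memory: List[str] = field(default_factory=list)

    def visible_tools(self) -> List[str]:
        # Only advertise tools the current user is allowed to use.
        return sorted(
            t.name for t in self.tools.values()
            if t.required_permission in self.granted
        )

    def call(self, tool_name: str, argument: str) -> str:
        tool = self.tools[tool_name]
        if tool.required_permission not in self.granted:
            raise PermissionError(f"{tool_name} requires {tool.required_permission}")
        result = tool.run(argument)
        # Record the call so later steps can refer back to it.
        self.memory.append(f"{tool_name}({argument!r}) -> {result}")
        return result


tools = {
    "read_calendar": Tool("read_calendar", "List meetings for a day",
                          "calendar.read", lambda day: f"2 meetings on {day}"),
    "send_mail": Tool("send_mail", "Send an email",
                      "mail.send", lambda body: "sent"),
}
agent = Agent(tools=tools, granted={"calendar.read"})

print(agent.visible_tools())
print(agent.call("read_calendar", "Monday"))
print(agent.memory)
```

The design choice worth noting is that permissions are checked twice: once when deciding which tools to advertise, and again at call time, so a tool that was never offered still cannot be invoked.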

Of course, at Microsoft, we never focus on a single technology. We're focused on how we can empower every person and every organization on the planet to achieve more through these technologies. The sense of empowerment that this platform can bring will reach a whole new level.

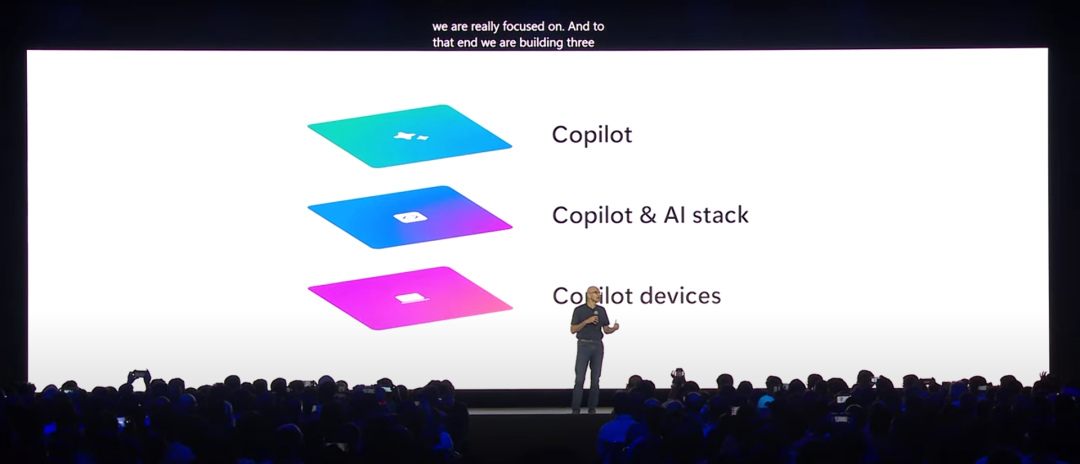

That's where we're really focused. To that end, we're building three platforms: Copilot, the Copilot AI stack, and Copilot devices. Next, I want to outline the main content of these platforms.

Think of Copilot as the user interface (UI) for AI. Even in an agent-rich world, AI still needs to interact with us, and that interaction requires a UI layer. As a result, Copilot as an organizational layer becomes critical in a world full of autonomous Agents.

Our strategy is to build Copilot into existing workflows.

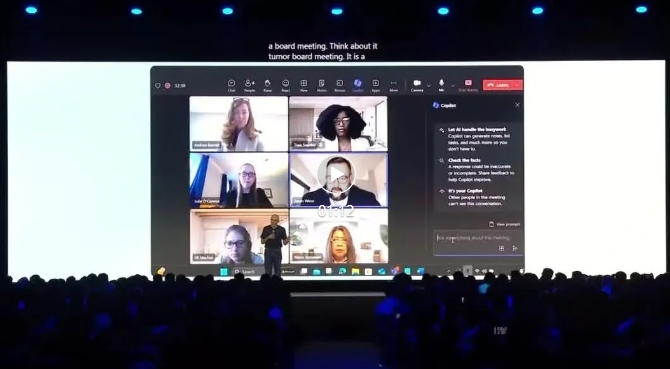

One of the best examples I've seen is about one of the most important scenarios in knowledge work.

For example, a doctor is preparing for an oncology board meeting. Such meetings are critical, so she needs to read all the reports and decide exactly how much time to allocate to each case. Creating the agenda is therefore itself a reasoning task, and the AI generates an agenda indicating which cases are more complex and require more time.

The doctors then discuss the cases in a Teams meeting, where they can focus on the discussion without taking notes, as the AI captures all the details. Afterwards, because the doctor is also a teacher, she wants to use the discussion for teaching purposes. She can turn the notes into a Word document and then into a PowerPoint deck for use in class. This simple but high-impact workflow is made possible by embedding AI into the process.

This shows an example of how AI can be integrated into existing workflows.

Next, we'll see new forms of workflows through Pages and Chat, Web and Work Scope.

Now, I can access information from the Web or the Microsoft 365 graph with a single query and elevate that information into an interactive, AI-first canvas called Pages. In Pages, I can make changes directly using Copilot. It's a new way to think with AI and collaborate with colleagues. Chat and Pages will be the new AI hubs, just as Word, Excel, and PowerPoint changed the way we work in the past.

But we didn't stop there. Next, we want to focus on extensibility.

Extensibility begins with Copilot Actions. Just as you could create rules in Outlook in the past, you can now create rules for AI, and these rules apply not to a single application but to the entire Microsoft 365 system. This is the power of Actions. Many of our workflows involve collecting and distributing information and connecting people with information, all of which can be easily achieved through Copilot Actions.

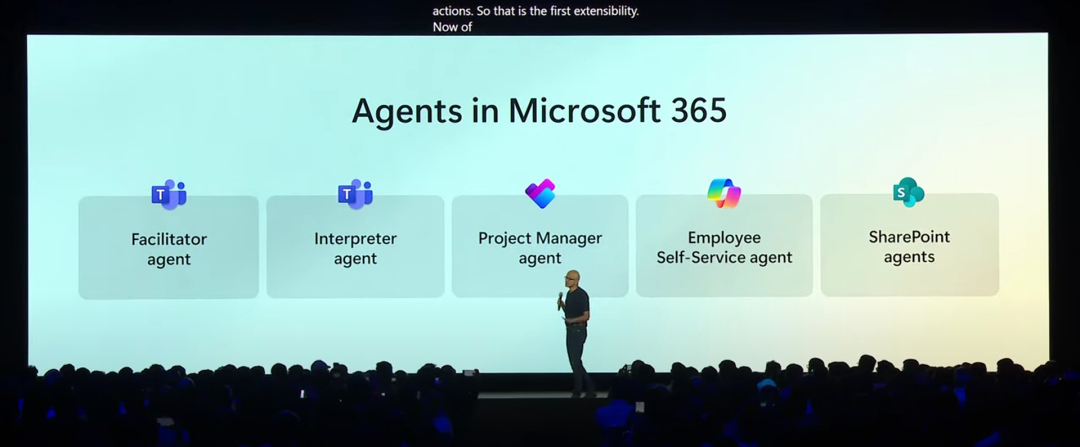

Of course, we can also build complete Agents. We have also developed many Agents with different scopes, such as team-level and process-level Agents.

A typical example is an agent in a team that can take on a translator or coordinator role, like a new member of the team, helping with tasks. SharePoint also now has built-in Agents that intelligently analyze documents to enhance knowledge sharing. We can customize these Agents with simple actions or Copilot Studio and share them in Teams chat.

Next up are "coordinator Agents," which manage agendas in meetings and capture notes and tasks in real time, allowing teams to focus on the discussion. They also simplify communication in chats by summarizing and replying to questions in real time. "Translation Agents," on the other hand, eliminate language barriers with real-time speech translation, enabling everyone to communicate freely.

Project management Agents are able to create project plans, assign tasks, and even complete tasks on behalf of the team, ensuring that everyone is informed and collaborating effectively. Finally, for specialized business processes such as HR and IT, new employee self-service Agents are available in Copilot Business Chat, allowing employees to instantly get answers and perform actions such as submitting a help desk ticket. These Agents can be customized through Copilot Studio, taking advantage of pre-built workflows and more. New AI and Microsoft 365 are boosting productivity and completely redefining the way work is done.

These are examples of Agents that we built into the system. But what's really exciting is that you can also build Agents. This is where Copilot Studio comes in.

Our vision for Copilot Studio is simple: it's a low-code/no-code tool designed for building Agents. The analogy is Excel: people can easily make spreadsheets, and building Agents should be just as simple. Copilot Studio is designed to help everyone take control of their own workflow and support the practical needs of knowledge work. It lets a "swarm of Agents" help with our daily work, reducing repetitive tasks and improving workflow. For example, for a field service scenario, you simply enter prompts and instructions to define an agent's task, then connect it to a knowledge source in SharePoint, and the agent is generated automatically.

This ability to easily create Agents without coding is at the heart of Copilot Studio. Now you have the AI's user interface, extensibility capabilities, built-in Agents and the ability to build custom Agents, which form a complete system.

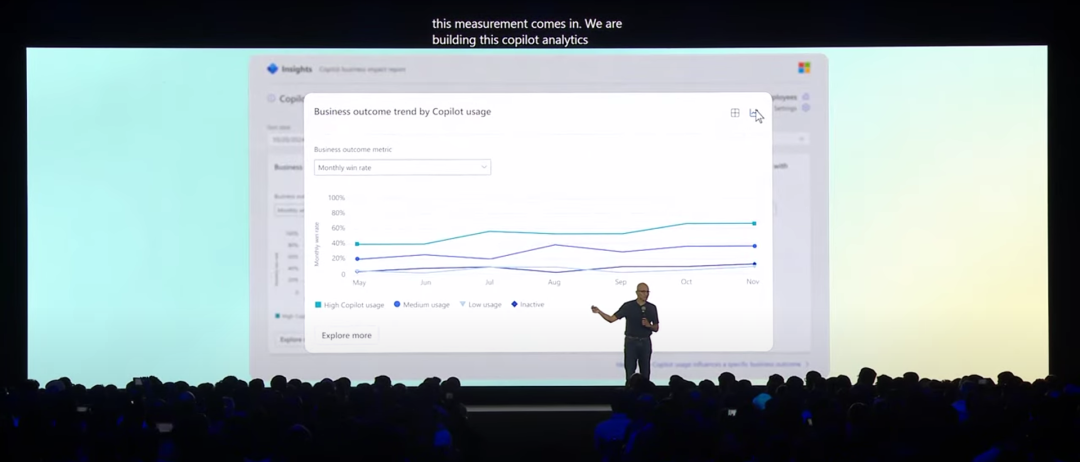

Then there is the question of return on investment (ROI) and measurement. This is another key question: how do we ensure that these technologies lead to substantive change? Ultimately, these tools should not only increase individual productivity, but also improve the business outcomes of the organization. We are developing Copilot Analytics so that users such as sales managers can correlate metrics such as sales growth and improved output with the use of Copilot features, get real-time feedback, and optimize business results.

Presentation graph: Trends in business outcomes from using Copilot

This approach accelerates the adoption cycle of AI applications, and you don't need to wait years to see results. Copilot, the UI for AI with extensibility and measurement capabilities, is the first platform we built.

We have seen significant results within Microsoft, with double-digit productivity gains across business processes such as customer service, HR self-service, IT operations, finance, supply chain and marketing. In marketing, for example, there are many inefficiencies, from purchasing decisions to content creation, and AI brings enormous operational leverage.

The speed of this diffusion has surpassed past patterns that took years to spread globally. In India, I have witnessed many instances of large-scale deployment. For example, this morning I spoke with the team at Cognizant, who have rolled out AI technology across the board to their employees.

Andy Grove, former CEO of Intel, introduced the concept of "knowledge turns" in the 1990s, which refers to the importance of rapidly creating and disseminating knowledge. This is similar to supply chain turns in retail, only applied to knowledge industries. Persistent is another example: it has developed a contract management agent that can be used in Copilot and handles major changes throughout the contract life cycle. This is another example of Copilot being deployed at scale.

Next, I want to talk about the next platform: the Copilot stack and the AI platform. For us, Azure has always been conceived and built as the "world's computer." We remain committed to that because we realize that AI does not stand alone; it needs the support of the entire computing stack, so we are building this system globally.

We have more than 300 data centers in more than 60 regions around the world. In India, we are very excited about expanding our existing regions, including Central India, South India and West India. At the same time, we are also working with Jio to add capacity. Today, I'm excited to announce the largest expansion in our history in India, where we're investing $3 billion to increase Azure capacity.

I had the opportunity to meet Prime Minister Modi yesterday and it was a great experience. He shared many examples and visions, especially his strategy for advancing the AI mission. This vision combines the "India Stack," the country's entrepreneurial dynamism, and the demographic dividend on the consumer and corporate side to create a virtuous circle. Therefore, we are very optimistic about introducing the next generation of AI core computing capabilities.

When it comes to infrastructure, we introduce a new formula. Simply put, the growth efficiency of any country or business will depend on "the number of tokens per watt, per dollar." Over the next two, five or even ten years, we will see this formula correlate directly with GDP growth. Therefore, infrastructure must be the highest priority.
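The speech names the metric but does not define how to compute it. One possible reading, sketched below, divides tokens produced by the product of power drawn and money spent. The function name, the choice of units, and the sample numbers for the two sites are all assumptions made up for illustration.

```python
def tokens_per_watt_per_dollar(tokens: float, watts: float, dollars: float) -> float:
    """One possible reading of the metric: tokens / (watts * dollars).

    Higher is better: more output for the same power and spend.
    """
    if watts <= 0 or dollars <= 0:
        raise ValueError("watts and dollars must be positive")
    return tokens / (watts * dollars)


# Hypothetical deployments, not real measurements.
site_a = tokens_per_watt_per_dollar(tokens=1_000_000, watts=500, dollars=2)
site_b = tokens_per_watt_per_dollar(tokens=1_000_000, watts=400, dollars=2)

print(f"Site A: {site_a:.0f} tokens per watt per dollar")
print(f"Site B: {site_b:.0f} tokens per watt per dollar")
```

Under this reading, the second site scores higher simply because it produces the same tokens at the same cost with less power, which is why efficiency work at every layer of the stack feeds the same number.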

We innovate at every level: from optimizing the construction of liquid-cooled AI accelerators, to cooperating on renewable energy infrastructure to achieve zero waste and zero water use, to building the entire system collaboratively.

Then come silicon innovations. Working with Nvidia, we have the first GB200 cluster online today. At the same time, we are also working with AMD and developing our own Maia chip, which already handles a lot of customer service traffic at Microsoft.com. These investments have enabled us to build a world-class AI accelerator infrastructure that optimizes training and inference and drives system-wide innovation.

With the infrastructure in place, the next big issue is data governance. I spoke with many customers and partners today, and their first concern is how to organize their data.

Data is the foundation for building AI, not only for pre-training, but also for RAG (retrieval-augmented generation), post-training, sampling, and inference-time compute. Therefore, the first priority is to connect data to the cloud. We're building out the data estate so that, whether your data is in Snowflake, Databricks, Oracle or SQL, it can migrate to the cloud and fully support AI computing.

We have great operational data storage systems designed for AI applications, whether it's Cosmos DB or SQL Hyperscale or Fabric for analytical workloads, and they're all ready to support AI. In fact, some of the largest users of ChatGPT rely on Cosmos DB because it is the database for stateful applications that store ChatGPT user state.

So I think the data layer is very important, and we're doing everything we can to help you organize your data so that you can use it in conjunction with these models. This includes training models on the data, but also using the data for operations such as retrieval-augmented generation (RAG). Data gravity matters: keeping data close to compute is crucial, and so is processing data locally.

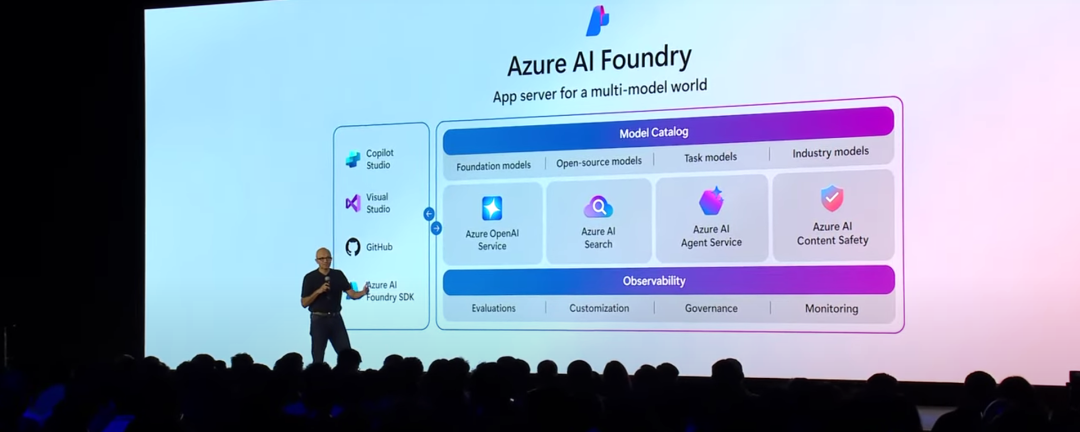

With the infrastructure and data in place, the third step is to create an AI application server. Looking back, when the web was emerging, we built the IIS application server; when cloud computing rose, we developed cloud-native application servers; and the same was true in the mobile era. Every generation of technology needs an application server, and we're building Foundry now.

At the heart of Foundry is the model, and in particular the innovation of OpenAI. We are excited about GPT-4 and upcoming releases, as well as integrating models from the open source community such as Llama and Mistral. In addition, there are models developed for specific industries and local language needs in India. We want to have the richest catalog of models, and some of the popular models will be available as services via APIs.

Once we have the model, we need to deploy, fine-tune, distil, and evaluate it, and test it for reliability and safety. To simplify these operations, we integrated them into the application server. Model evaluation will become critical: my team keeps an eye on cutting-edge models and ensures that the application server layer has the flexibility to adapt quickly to new ones. This process includes sample testing, cost and latency optimization, and fine-tuning for specific use cases. That is Foundry's goal: to streamline the entire process and drive great progress.
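To illustrate the kind of evaluation step described above, the sketch below picks the cheapest candidate model that clears an accuracy bar and a latency budget. It is an assumption-laden toy, not Foundry's API: the `EvalResult` type, the `pick_model` function, the model names, and every number are invented for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class EvalResult:
    """Hypothetical summary of one model's run over a sample test set."""
    model: str
    accuracy: float      # fraction of sample tests passed
    cost_per_1k: float   # dollars per 1,000 requests
    latency_ms: float    # typical response latency


def pick_model(results: List[EvalResult],
               min_accuracy: float,
               max_latency_ms: float) -> Optional[EvalResult]:
    """Return the cheapest model meeting both thresholds, or None."""
    eligible = [
        r for r in results
        if r.accuracy >= min_accuracy and r.latency_ms <= max_latency_ms
    ]
    return min(eligible, key=lambda r: r.cost_per_1k, default=None)


# Made-up candidates and scores.
candidates = [
    EvalResult("model-a", accuracy=0.92, cost_per_1k=4.0, latency_ms=800),
    EvalResult("model-b", accuracy=0.90, cost_per_1k=1.5, latency_ms=600),
    EvalResult("model-c", accuracy=0.70, cost_per_1k=0.5, latency_ms=200),
]

choice = pick_model(candidates, min_accuracy=0.85, max_latency_ms=1000)
print(choice.model if choice else "no model qualifies")
```

Because the selection depends only on evaluation results, swapping in a newly released model means rerunning the sample tests and calling the same function again, which is the flexibility the paragraph above is asking for.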

In India, I have seen many customers who have already started to deploy AI technology and have provided valuable feedback. For example, we learned a lot from experimenting with multi-agent deployments. Looking ahead, I think the industry's focus will shift from "models" to "model orchestration" and "model evaluation," and how to deploy "future-proof applications" will be an important trend.

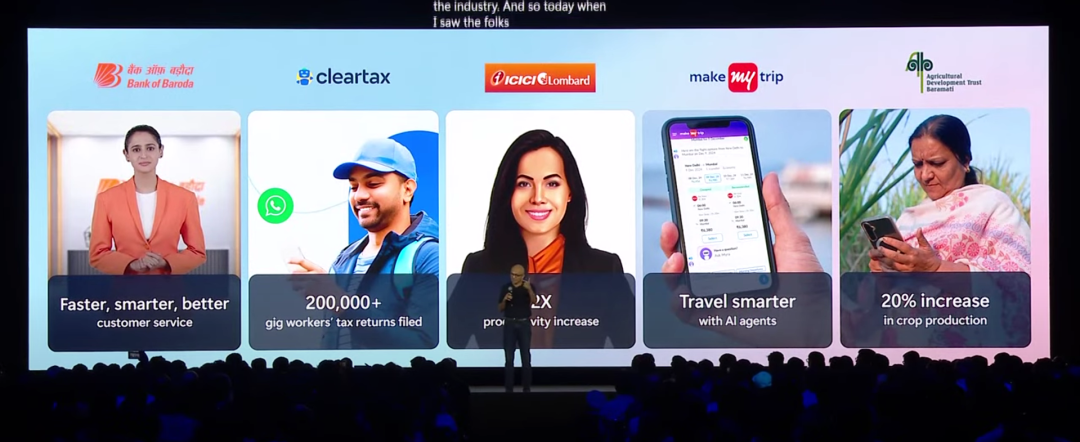

Today, I met the team from Bank of Baroda, who showed off three Agents: a self-service agent, a relationship manager agent for new customers, and an agent for employees. I also visited a startup called ClearTax, whose tax process is as simple as submitting a receipt over WhatsApp and getting a tax refund. I particularly like that. I also talked with the ICICI Lombard team and learned that medical claim forms in India are not standardized and need to be read manually. Using AI to read them is an efficiency gain that will directly contribute to economic growth, because smooth claim settlement is what makes insurance dependable.

I also met the MakeMyTrip team, whose ambitious goal is to automate complex processes in the travel industry, including hotels, flights, and other transport, through a multi-agent framework. It is exciting not only that large companies and start-ups are pushing the technology, but also how quickly it is being adopted in India. Finally, I would like to invite you to watch a video presentation from our partners.

In India, we produce a large number of staple crops such as sugar cane, wheat, rice, pulses and cotton. But compared to other developed countries, our production is still very low. Soil erosion is currently a major problem in India, mainly due to the use of pesticides.

The Balamati Cooperative has been serving the farming community for more than 50 years. Their efforts have helped resource-poor farmers improve their lives. AgriPinado AI helps farmers avoid guesswork and get real data in a scientific way so they can make informed decisions and succeed. For this project, we selected 1,000 advanced farmers from Maharashtra and equipped them with weather stations, soil sensors, and satellite support. Every day we collect real-time data from the soil. AgriPinado AI uses Microsoft FarmBeats to manage agricultural data, running more than 20 algorithms to deliver accurate results based on historical patterns.

Azure OpenAI enables farmers to ask questions on WhatsApp in their native language, enabling precise use of irrigation, fertilizers and pesticides in the field.

To me, this really brings together the various technologies. From Azure IoT connectivity, to data plane processing, to using Azure AI, the ultimate goal is to enable farmers to increase their yields. The integration of these technologies demonstrates the power of technology and what we can achieve.

Now, the last layer is the tools. If you have infrastructure, data, and AI, tools become critical. Microsoft started as a tools company, and we've always been passionate about tools, especially through GitHub.

In India, there are now 17 million GitHub users, the second largest community after the United States. In fact, by 2028, India will have more developers than the United States, which is exciting. In addition, India's developer contribution to AI projects is second only to that of the United States, and the community's activity and talent is exciting. Our progress on GitHub Copilot is equally exciting.

We have now implemented multi-file editing capabilities. From continuous code completion to chat capabilities to multi-file editing, this allows modifications to be made to the entire code base. We also launched a free GitHub Copilot tier, a feature that is growing rapidly in India. Back in 2020, when I first saw GitHub Copilot, I was convinced of the LLM's potential. Then I saw the GitHub Copilot Workspace, a step beyond chat to real Agents that allow developers to generate specifications, edit plans, and execute entire codebase operations from GitHub issues.

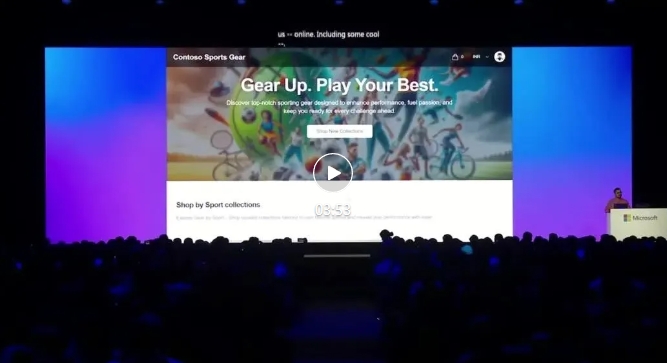

Today, I invited my colleague Karen Napa to demonstrate these features. Copilot Workspace is an AI-native development platform that turns your ideas directly into code through natural language. Next, we'll show you how to use Copilot Workspace to add admin page functionality to an application that sells sports equipment online.

You know, it's really exciting to see the development of the toolchain. In fact, as of today, Copilot Workspace no longer has a waiting list, which we're very excited about. For me personally, perhaps the biggest change was Windows 365, where I get my desktop, plus GitHub Copilot, Copilot Workspace, and Codespaces. Combine these tools and I'm a happy person no matter where I am in the world. This is really a wonderful step forward that changes development productivity.

So the last thing I want to talk about is the Copilot device. We talked about so much innovation and infrastructure, starting with silicon in the cloud, and now it's going to the edge.

We are very excited to work with Qualcomm, AMD and Intel on NPUs. In fact, today, Jensen even talked about the next generation of GPUs coming to regular PCs that will be able to run the entire Nvidia technology stack locally. So we're really excited about what's happening with these Copilot devices and with Copilot PCs more broadly, and even traditional PCs with GPUs. But we're also looking forward to the groundwork, right? When I use my Copilot PC, it has all-day battery life and new AI features built in, and third-party developers are starting to build for it, whether it's Adobe, CapCut, or others. So this is really the beginning of a new platform that's going to be just as exciting at the edge as it is in the cloud.

In fact, we don't think of it as the old client-server model. It's not just about offline native models, it's about hybrid AI.

The idea now is that you can build applications that offload some of their work to a local NPU as a secondary, sorting process, while calling LLMs in the cloud. Any application will truly be a hybrid application; it won't run only locally, or entirely in the cloud. I think this is what we've been waiting for. Let's play a video to show you what's on your Copilot device.
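The hybrid pattern described above can be sketched as a router that sends small requests to a local model and everything else to the cloud. This is only an illustration under stated assumptions: `make_hybrid_router`, the word-count threshold, and both stub models are hypothetical, and a real application would call an on-device runtime and a cloud endpoint instead.

```python
from typing import Callable, Tuple

Model = Callable[[str], str]


def make_hybrid_router(local_model: Model,
                       cloud_model: Model,
                       max_local_words: int = 20) -> Callable[[str], Tuple[str, str]]:
    """Build a router that keeps short prompts on-device.

    The word-count rule is a stand-in for whatever policy an app
    would really use (task type, privacy, battery, connectivity).
    """
    def route(prompt: str) -> Tuple[str, str]:
        if len(prompt.split()) <= max_local_words:
            return "local", local_model(prompt)
        return "cloud", cloud_model(prompt)

    return route


# Stub models standing in for an NPU-hosted model and a cloud LLM.
def local_stub(prompt: str) -> str:
    return f"[local] handled: {prompt[:30]}"


def cloud_stub(prompt: str) -> str:
    return f"[cloud] handled {len(prompt.split())} words"


route = make_hybrid_router(local_stub, cloud_stub, max_local_words=5)

print(route("classify this email"))
print(route("write a detailed project plan covering budget, staffing, and risks"))
```

Keeping the routing policy in one function means the application code calls `route` the same way regardless of where the work runs, which is what makes the application hybrid rather than strictly local or strictly cloud.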

To truly ensure that all three platforms can be widely distributed and used, the key consideration is trust.

That means trust in security, privacy, and AI safety. We have a set of principles, but more importantly, those principles and initiatives are backed by real engineering progress, ensuring that we can effectively build trust throughout the process. For example, in security, how do we protect against adversarial attacks like prompt injection? That is a key piece of what we're building. How do you think about confidential computing, not just for CPUs, but for GPUs? This is something that we're working with everybody on right now, whether it's Nvidia or Intel. On AI safety, one of the big issues that a lot of people are talking about is hallucination.

How do we ensure groundedness? Groundedness services with evaluation support are one way we can make real progress in AI safety. So we really took trust as a first-class engineering consideration: we set a set of principles, but more importantly translated those principles into toolchains and runtimes that allow us as developers to build more trustworthy AI.

Now, I want to end where I started, which is that our mission is to empower everyone. But before I go there, what I want to talk about is that it's all about doing AI business transformation, right? Ultimately, it's about changing customer service, or changing your marketing, sales, or internal operations. At the end of the day, it's about business outcomes.

The three considerations that I would like to submit are: Copilot as the UI for AI; the application server as the platform for building AI applications, which in our view is Foundry; and your data, combined with Fabric. Those are probably the three key design decisions that need to be made, rather than the choice of any given model, because models change every year, and every month you have new models. Those three fundamental design choices are really the key: what the UI layer is and how your Agents interface with it, how you think about your data, and how you think about application servers. Getting them right is what gives you agility across models.

These three are what I hope you take away from the work we do on the platform, and behind them is what drives us: our mission to empower every person and every organization in India. Ultimately, it is about ensuring that the human capital of this country can continue to expand to take advantage of the enormous opportunities and potential that this technology has to offer. So I am very excited to announce today that our ongoing commitment is now to train 10 million Indians in AI skills by 2030. The most important thing for me is not to think of skills training as abstract, but to see how those skills translate into real impact, one community at a time, one industry at a time. So I will leave you with a video showing the impact that skills training has already had in India. Thank you very much, and thank you for the great work you've done on the platform. Thank you.
