
#News ·2025-01-08

Recently, the latest research from China Telecom's Wing Pay on accelerating large-model inference, "Falcon: Faster and Parallel Inference of Large Language Models through Enhanced Semi-Autoregressive Drafting and Custom-Designed Decoding Tree", was accepted by AAAI 2025.

The Falcon method proposed in the paper is an enhanced semi-autoregressive speculative decoding framework that improves the parallelism and output quality of the draft model in order to speed up large-model inference. Falcon achieves a speedup of about 2.91x to 3.51x, performs well on multiple datasets, and has been deployed in several of Wing Pay's production services.

Paper: https://arxiv.org/pdf/2412.12639

Large language models (LLMs) perform excellently on a wide range of benchmarks. However, because they decode autoregressively (AR), they also incur significant computational overhead and latency bottlenecks during inference.

To address this, researchers proposed speculative decoding (also called speculative sampling). Speculative decoding uses a model lighter than the target LLM as the draft model, which generates several candidate tokens in succession during the draft phase. In the verify phase, the candidate token sequence is fed to the original LLM, which verifies it and generates the next token, so that multiple tokens are decoded in parallel. By spending compute on validating pre-generated tokens, speculative decoding greatly reduces the memory operations needed to access the LLM's parameters, thereby improving overall inference efficiency.
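The draft-then-verify loop described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the paper's implementation: both "models" are toy greedy next-token functions (`draft_next` and `target_next` are hypothetical names), and verification is written as a loop for readability even though a real system scores all candidate positions in one parallel forward pass of the target model.

```python
from typing import Callable, List

Token = int
# Hypothetical interface: a greedy model maps a token sequence to its next token.
NextToken = Callable[[List[Token]], Token]


def speculative_step(prefix: List[Token], draft_next: NextToken,
                     target_next: NextToken, num_draft: int) -> List[Token]:
    """Run one draft/verify cycle and return the tokens accepted in this cycle."""
    # Draft phase: the light model proposes num_draft candidate tokens.
    draft: List[Token] = []
    for _ in range(num_draft):
        draft.append(draft_next(prefix + draft))

    # Verify phase: keep the longest prefix of the draft that the target agrees with.
    accepted: List[Token] = []
    for tok in draft:
        expected = target_next(prefix + accepted)
        if tok != expected:
            accepted.append(expected)  # the target's own token replaces the first mismatch
            return accepted
        accepted.append(tok)
    # Every draft token was accepted, so the target contributes one extra token.
    accepted.append(target_next(prefix + accepted))
    return accepted


# Toy models (assumptions for illustration): the target counts upward modulo 10,
# and the draft agrees with it except right after the token 3.
def target_next(seq: List[Token]) -> Token:
    return (seq[-1] + 1) % 10


def draft_next(seq: List[Token]) -> Token:
    return 0 if seq[-1] == 3 else (seq[-1] + 1) % 10


print(speculative_step([0], draft_next, target_next, num_draft=4))  # [1, 2, 3, 4]
```

In this toy run the first three draft tokens are accepted and the fourth is corrected by the target, so one cycle yields four tokens that match what greedy decoding with the target alone would have produced.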

Existing speculative sampling methods mainly use one of two draft strategies: autoregressive (AR) drafting or semi-autoregressive (SAR) drafting. AR drafting generates tokens sequentially, with each token depending on the previous ones. This sequential dependence limits the parallelism of the draft model and causes significant time overhead. SAR drafting, in contrast, generates multiple tokens at once, which makes the draft process more parallel. However, an important limitation of SAR drafting is that it cannot fully capture the interdependencies between draft tokens within the same block, which can lead to a low acceptance rate for the generated tokens.
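The latency difference between the two strategies comes down to how many forward passes the draft model needs. A minimal sketch, assuming every forward pass emits a fixed block of tokens (`draft_forward_passes` is a hypothetical helper, with `block_size=1` standing in for AR drafting):

```python
import math


def draft_forward_passes(num_tokens: int, block_size: int = 1) -> int:
    """Forward passes needed to draft num_tokens when each pass emits block_size tokens."""
    return math.ceil(num_tokens / block_size)


print(draft_forward_passes(6))                # AR drafting: 6 passes
print(draft_forward_passes(6, block_size=2))  # SAR drafting with k = 2: 3 passes
```

Fewer passes only pay off if the tokens within each block are still accepted by the target model, which is the acceptance-rate problem described above.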

Therefore, a major challenge in speculative sampling is balancing low draft latency with high speculation accuracy in order to accelerate LLM inference.

To this end, Wing Pay proposes Falcon, an enhanced semi-autoregressive (SAR) speculative decoding framework designed to improve the parallelism and output quality of the draft model, thereby improving the inference efficiency of LLMs. Falcon integrates the Coupled Sequential Glancing Distillation (CSGD) method to raise the token acceptance rate of SAR draft models.

In addition, Falcon uses a custom-designed decoding tree to support SAR sampling, allowing the draft model to generate multiple tokens in a single forward pass and to run multiple forward passes per draft step. This design raises the rate at which the LLM accepts draft tokens, further speeding up inference.

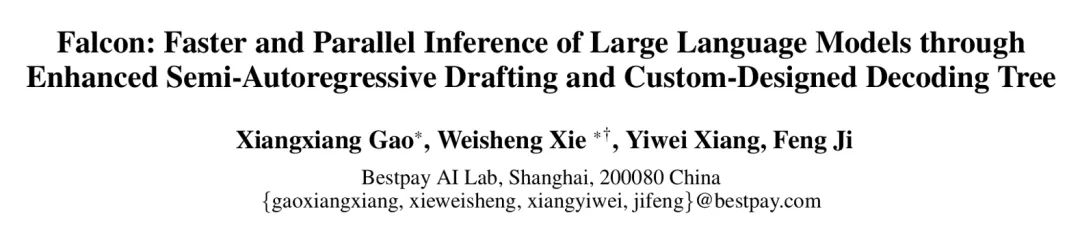

The architecture of Falcon is shown in Figure 1. The semi-autoregressive decoding framework consists of three main components: the embedding layer, the LM head, and the semi-autoregressive decoding head.

Figure 1. Falcon framework diagram

Specifically, Falcon concatenates a consecutive feature sequence with the token sequence shifted one time step ahead to predict the next k tokens simultaneously. For example, when k = 2, Falcon uses the initial feature sequence (f1, f2) and the token sequence one time step ahead (t2, t3) to predict the feature sequence (f3, f4). The predicted features (f3, f4) are then concatenated with the next token sequence (t4, t5) to form a new input sequence, which is used to predict the subsequent feature sequence (f5, f6) and token sequence (t6, t7), continuing the draft process. After the draft model has run several forward passes, the generated tokens are organized into a tree structure and fed to the LLM for verification. The LLM accepts the tokens that pass verification, and the next cycle starts from there.
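The index pattern in the k = 2 example can be generalized with a small sketch. It only enumerates which features and tokens enter and leave each draft forward pass; no model is involved, and `sar_schedule` and its dictionary keys are hypothetical names chosen for illustration.

```python
from typing import Dict, List


def sar_schedule(k: int, num_passes: int) -> List[Dict[str, List[str]]]:
    """List the inputs and outputs of each semi-autoregressive draft pass."""
    steps = []
    for p in range(num_passes):
        start = p * k + 1  # index of the first input feature for this pass
        steps.append({
            # k consecutive features, paired with tokens one time step ahead
            "features_in": [f"f{i}" for i in range(start, start + k)],
            "tokens_in": [f"t{i + 1}" for i in range(start, start + k)],
            # the pass predicts the next k features and their tokens in one shot
            "features_out": [f"f{i}" for i in range(start + k, start + 2 * k)],
            "tokens_out": [f"t{i + 1}" for i in range(start + k, start + 2 * k)],
        })
    return steps


for step in sar_schedule(k=2, num_passes=2):
    print(step)
```

With k = 2, the first pass maps (f1, f2) and (t2, t3) to (f3, f4) and (t4, t5), and the second pass maps those outputs to (f5, f6) and (t6, t7), matching the walk-through above.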

2.1 Coupled Sequential Glancing Distillation

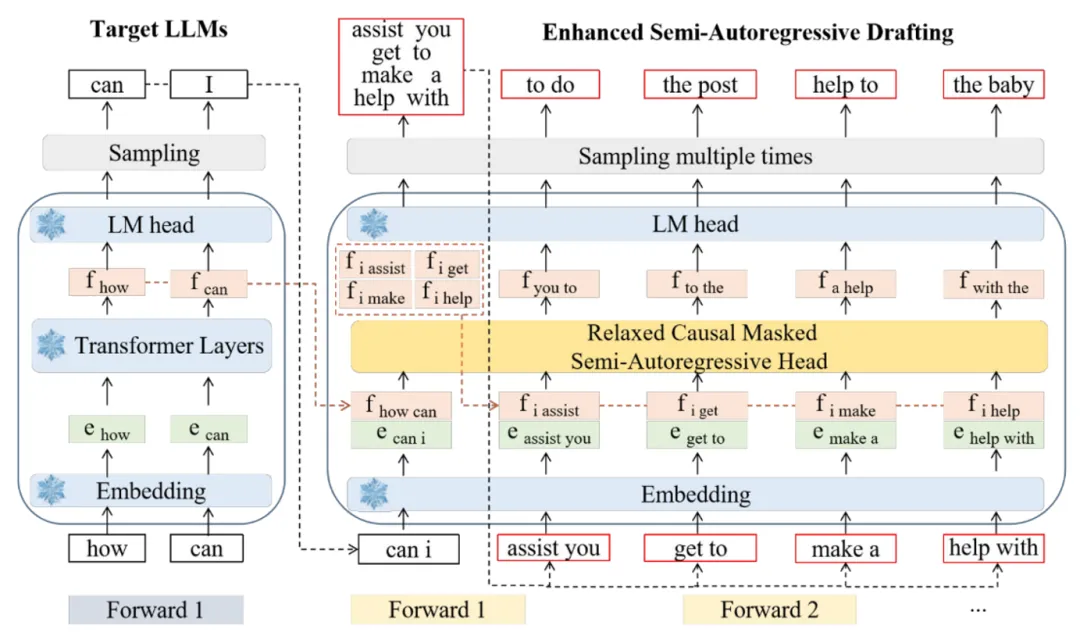

Current speculative decoding methods have relatively low accuracy, mainly because of insufficient contextual information between tokens. CSGD addresses this by replacing some of the initial predictions with ground-truth tokens and hidden states, injecting correct information back into the decoding process and thereby improving the accuracy and consistency of subsequent predictions. The model structure and training process are as follows:

Figure 2. Schematic diagram of the CSGD method

During training, a consecutive feature sequence is concatenated with the token sequence one time step ahead and fed into the draft model, forming a fused sequence of shape (bs, seq_len, 2 * hidden_dim).

The draft model is a hybrid Transformer network made up of a two-layer LSTM, a relaxed causal-masked multi-head attention mechanism, and an MLP network. The LSTM reduces the fused sequence to shape (bs, seq_len, hidden_dim) and retains information about past tokens, improving the model's accuracy. The relaxed causal-masked multi-head attention focuses on the relevant parts of the input sequence while maintaining causality. The MLP layer then processes this information to make the final prediction.
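The shape of the fused sequence follows from concatenating two hidden_dim-wide vectors at every position. A minimal sketch in plain Python lists, assuming the token sequence has already been embedded to the same width as the features (the `fuse` and `shape` helpers and the placeholder values are illustrative, not from the paper):

```python
from typing import List

Batch = List[List[List[float]]]  # nested lists shaped (bs, seq_len, dim)


def fuse(features: Batch, token_embeddings: Batch) -> Batch:
    """Concatenate the two inputs position by position along the last dimension."""
    return [
        [feat + emb for feat, emb in zip(feat_seq, emb_seq)]  # list + list concatenates
        for feat_seq, emb_seq in zip(features, token_embeddings)
    ]


def shape(x) -> tuple:
    """Return the nested-list dimensions, assuming a regular (non-ragged) layout."""
    dims = []
    while isinstance(x, list):
        dims.append(len(x))
        x = x[0]
    return tuple(dims)


bs, seq_len, hidden_dim = 2, 3, 4
features = [[[0.0] * hidden_dim for _ in range(seq_len)] for _ in range(bs)]
token_embeddings = [[[1.0] * hidden_dim for _ in range(seq_len)] for _ in range(bs)]

fused = fuse(features, token_embeddings)
print(shape(fused))  # (2, 3, 8), i.e. (bs, seq_len, 2 * hidden_dim)
```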

When the sequence passes through the draft model for the first time, an initial token prediction is generated. We then compute the Hamming distance between the draft model's prediction and the ground-truth tokens Y to measure the accuracy of the prediction. Next, a certain number of consecutive predicted token sequences and feature sequences are replaced with the correct token sequences and feature sequences from the LLM.

CSGD differs from the traditional glancing method, which only replaces tokens at random. Instead, CSGD selectively replaces the consecutive token and feature sequences that precede the prediction at the same time, as shown by the dashed boxes labeled choice 1, choice 2, and choice 3 in Figure 2. This strengthens the draft model's grasp of the relationships between tokens and ensures that it can make effective use of token sequences from the leading time step, which is particularly important in SAR decoding. The corrected token and feature sequences are then fed back into the draft model to compute the training loss.
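The glancing step can be illustrated with a short sketch. The source does not say how many positions are replaced, so two things here are assumptions: the replacement count is taken to be proportional to the Hamming distance (as in standard glancing training), and the `ratio` and `start` parameters are hypothetical. What the sketch does preserve from the description is that tokens and features are replaced together over the same consecutive span.

```python
from typing import List, Sequence, Tuple


def hamming_distance(pred: Sequence[int], target: Sequence[int]) -> int:
    """Number of positions where the predicted token differs from the ground truth."""
    return sum(p != t for p, t in zip(pred, target))


def coupled_glance(pred_tokens: List[int], true_tokens: List[int],
                   pred_feats: List[str], true_feats: List[str],
                   ratio: float = 0.5, start: int = 0) -> Tuple[List[int], List[str]]:
    """Replace one consecutive span of predictions with ground truth, coupling
    tokens and features so both are corrected over the same positions."""
    distance = hamming_distance(pred_tokens, true_tokens)
    # Assumption: a worse first-pass prediction leads to a longer corrected span.
    span = max(0, min(int(ratio * distance), len(true_tokens) - start))
    end = start + span
    new_tokens = pred_tokens[:start] + true_tokens[start:end] + pred_tokens[end:]
    new_feats = pred_feats[:start] + true_feats[start:end] + pred_feats[end:]
    return new_tokens, new_feats


tokens, feats = coupled_glance(
    pred_tokens=[5, 9, 9, 8], true_tokens=[5, 6, 7, 8],
    pred_feats=["p1", "p2", "p3", "p4"], true_feats=["g1", "g2", "g3", "g4"],
    ratio=1.0, start=1,
)
print(tokens)  # [5, 6, 7, 8]
print(feats)   # ['p1', 'g2', 'g3', 'p4']
```

Features are shown as string labels only to make the replaced span visible; in training they would be hidden-state vectors.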

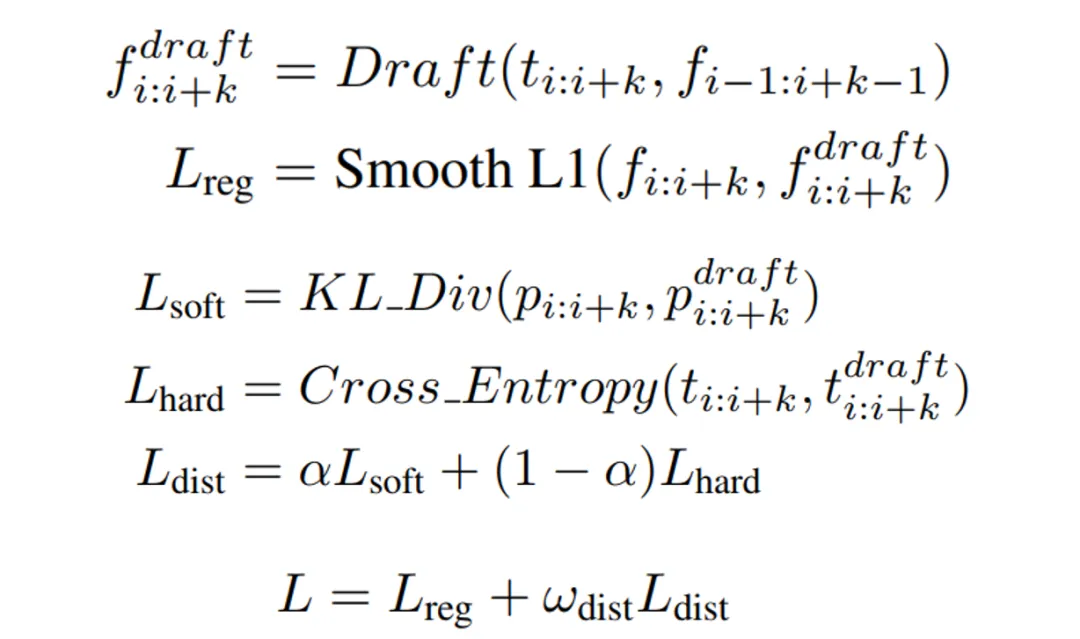

Training uses knowledge distillation. The loss function combines a regression loss between the draft model's output features and the ground-truth features with a distillation loss. The specific loss function is as follows:

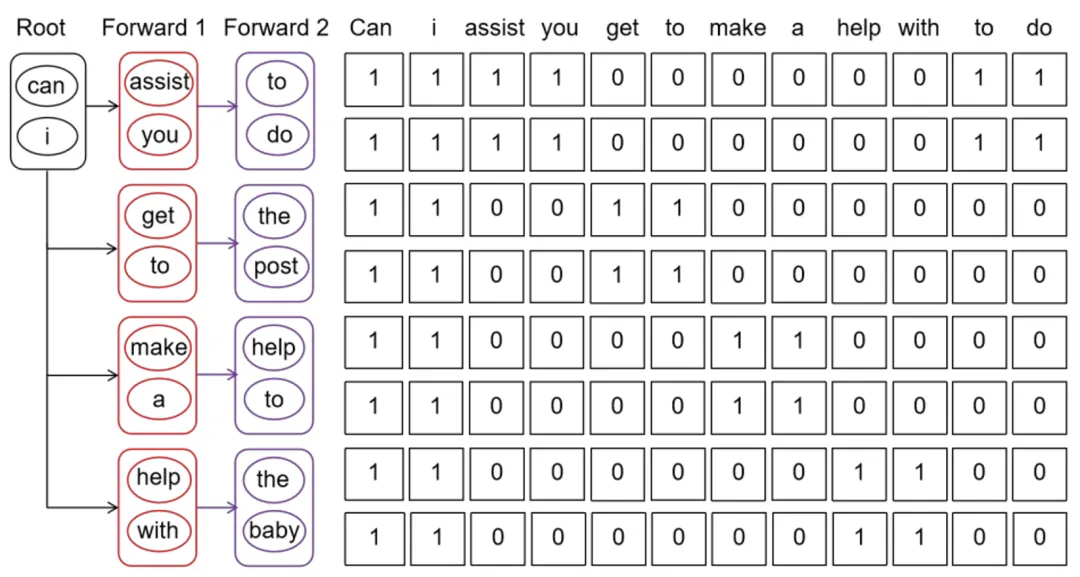

2.2 Custom-Designed Decoding Tree

Current tree-based speculative decoding methods improve inference efficiency by generating multiple draft tokens at each drafting step. However, they still require the draft model to generate tokens sequentially, which limits further gains in speculation efficiency. To address this limitation, the Custom-Designed Decoding Tree (CDT) lets the draft model generate multiple tokens (k) in a single forward pass and run multiple forward passes in each draft step. As a result, CDT produces k times as many draft tokens as existing methods.

After the draft model has run several forward passes, the generated tokens are organized into a tree structure and fed to the LLM for verification. The LLM uses a tree-based parallel decoding mechanism to check the candidate token sequences, and the accepted tokens and their corresponding feature sequences are carried forward to the next step. Traditional autoregressive (AR) decoding uses a causal mask in the form of a lower triangular matrix, which ensures that earlier tokens cannot access later information.

In contrast, Falcon employs a modified causal mask (shown in Figure 3) that lets the model access tokens within the same k*k block as well as the corresponding preceding consecutive tokens. This significantly improves the efficiency of the drafter's token generation and lets the LLM verify more tokens at once, speeding up overall LLM inference.

Figure 3. Schematic diagram of Custom-Designed Decoding Tree method
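The difference between the two masks can be made concrete with a small sketch. The lower triangular mask is the standard one. The block version is only one plausible reading of the description, in which positions are grouped into blocks of size k, every position sees its whole block, and it also sees all earlier blocks. The actual mask in Figure 3 also encodes the tree branches, which this sketch leaves out, and the function names are hypothetical.

```python
from typing import List

Mask = List[List[int]]  # mask[i][j] == 1 means position i may attend to position j


def causal_mask(n: int) -> Mask:
    """Standard lower triangular mask: position i sees positions 0..i."""
    return [[1 if j <= i else 0 for j in range(n)] for i in range(n)]


def block_causal_mask(n: int, k: int) -> Mask:
    """Assumed block-relaxed mask: position i sees every position whose block
    index is not later than its own, which includes the rest of its k*k block."""
    return [[1 if j // k <= i // k else 0 for j in range(n)] for i in range(n)]


for row in causal_mask(4):
    print(row)
print()
for row in block_causal_mask(4, k=2):
    print(row)
```

For n = 4 and k = 2, the standard mask has rows `[1,0,0,0]`, `[1,1,0,0]`, `[1,1,1,0]`, `[1,1,1,1]`, while the block version has rows `[1,1,0,0]`, `[1,1,0,0]`, `[1,1,1,1]`, `[1,1,1,1]`: each pair of positions drafted in the same forward pass can see each other.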

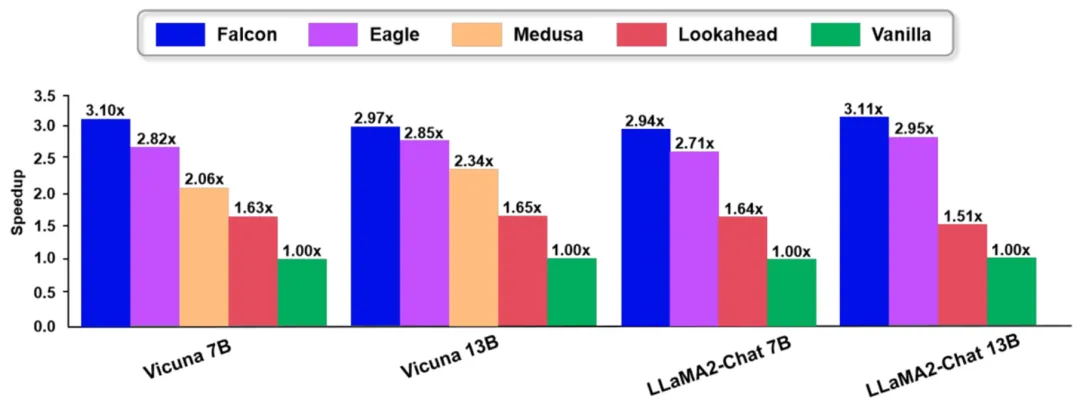

We ran extensive experiments on multiple datasets and models to verify the effectiveness of the proposed method. Falcon outperforms existing methods, as shown below:

Figure 4. Falcon experimental results

Falcon achieves a speedup of about 2.91x to 3.51x, which means that under the same conditions inference cost drops to roughly one third of the original, greatly reducing the cost of large-model inference.
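The "one third" figure follows directly from the speedup range, assuming that inference cost scales with wall-clock time on the same hardware (the symbols $C$ and $s$ are introduced here for illustration):

```latex
\frac{C_{\text{Falcon}}}{C_{\text{AR}}} = \frac{1}{s},
\qquad
s = 2.91 \;\Rightarrow\; \frac{1}{2.91} \approx 0.34,
\qquad
s = 3.51 \;\Rightarrow\; \frac{1}{3.51} \approx 0.28
```

So the relative cost lies between roughly 28% and 34% of the autoregressive baseline.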

Falcon has now been integrated into InsightAI, Wing Pay's large-model product platform, and serves a number of business applications, including Wing Pay's digital-human customer service, Wing Small Orange (lending), Wing Dot (human resources), and Wing Small Financial (finance).

Speculative sampling is one of the core methods for accelerating large-model inference. The main challenges today are improving the accuracy and sampling efficiency of the draft model and improving the verification efficiency of the large model. The paper proposes Falcon, an enhanced semi-autoregressive speculative decoding framework. Falcon significantly improves the draft model's prediction accuracy and sampling efficiency through the CSGD training method and its semi-autoregressive model design. In addition, so that the large model can verify more tokens at once, the paper designs a custom decoding tree, which improves the efficiency of the draft model and, in turn, verification efficiency. Falcon achieves a speedup of about 2.91x to 3.51x across a variety of datasets and has been applied, with good results, in many of Wing Pay's businesses.
