
#News ·2025-01-08

The co-corresponding authors of this paper are Tu Zhaopeng and Wang Rui. Tu Zhaopeng is an expert researcher at Tencent whose research focuses on deep learning and large models. He has published more than 100 papers in top international journals and conferences, with more than 9,000 citations. He is an associate editor of the SCI-indexed journal Neurocomputing and has served multiple times as an area chair for top international conferences such as ACL, EMNLP and ICLR. Wang Rui is an associate professor at Shanghai Jiao Tong University whose research focuses on computational linguistics. The co-first authors are Xingyu Chen and Zhiwei He, PhD students at Shanghai Jiao Tong University, and Jiahao Xu and Tian Liang, senior researchers at Tencent AI Lab.

This article introduces the first study of the overthinking phenomenon in o1-like long chain-of-thought models. The work was done jointly by Tencent AI Lab and a team from Shanghai Jiao Tong University.

Since OpenAI released the o1 model, its strong logical reasoning and problem-solving abilities have attracted wide attention. The o1 model demonstrates powerful inference-time scaling by simulating the deep thinking process of human beings and by using reasoning strategies such as self-reflection, error correction and exploring multiple solutions within its chain of thought. With this mechanism, the o1 model can continuously improve the quality of its answers. However, under the halo of o1's success, an underlying problem has gradually been magnified: overthinking.

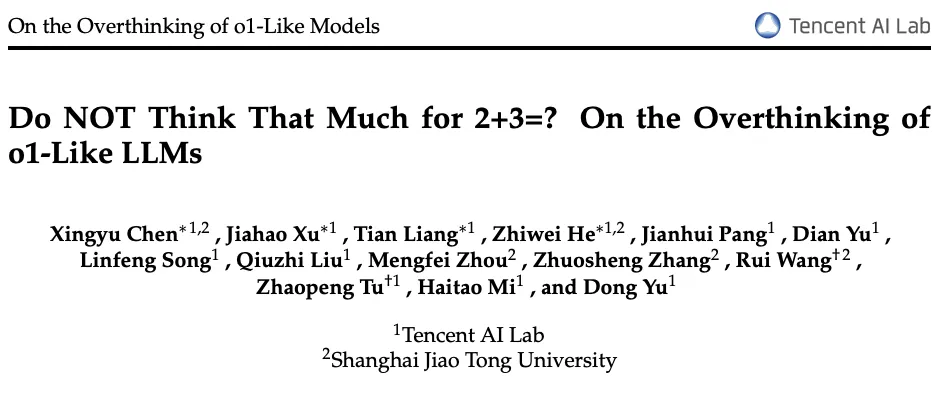

Since the advent of the o1 model, many similar models have appeared, such as QwQ-32B-Preview [1] and DeepSeek-R1-Preview [2]. These models share the same "deep thinking" behavior during reasoning, but they also expose a similar problem: they generate excessively long chains of thought when none is needed, which wastes computing resources. As a simple example, for the question "2+3=?", the response lengths of different models are shown in the figure below:

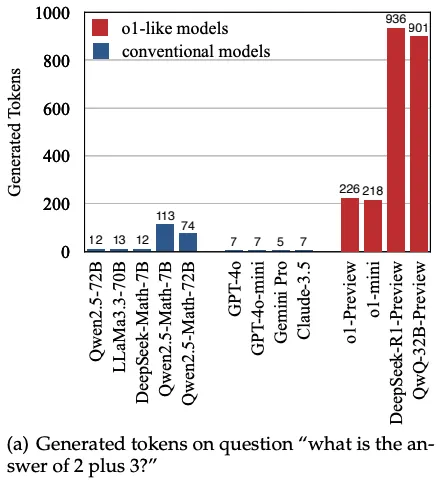

A traditional model usually needs only a few tokens to give the answer, whereas the o1 model consumes more than 200 inference tokens. More extreme still, the token consumption of DeepSeek-R1-Preview and QwQ-32B-Preview reaches 900. Why does the QwQ model produce such a long output? The research team further analyzed QwQ's answer to this question, as shown in the right column below:

The QwQ-32B-Preview model tries a variety of problem-solving strategies during inference. For this simple addition problem, the model explored direct arithmetic, simulated movement along a number line, and an analogy of counting apples, and it went through many rounds of reasoning before settling on the result. While this chain-of-thought strategy is well suited to complex problems, repeatedly revisiting an existing answer and exploring too broadly on a simple problem is clearly a waste of computing resources. To investigate this issue, the research team gave a more precise definition of this overthinking phenomenon in o1-like models and analyzed it in depth.

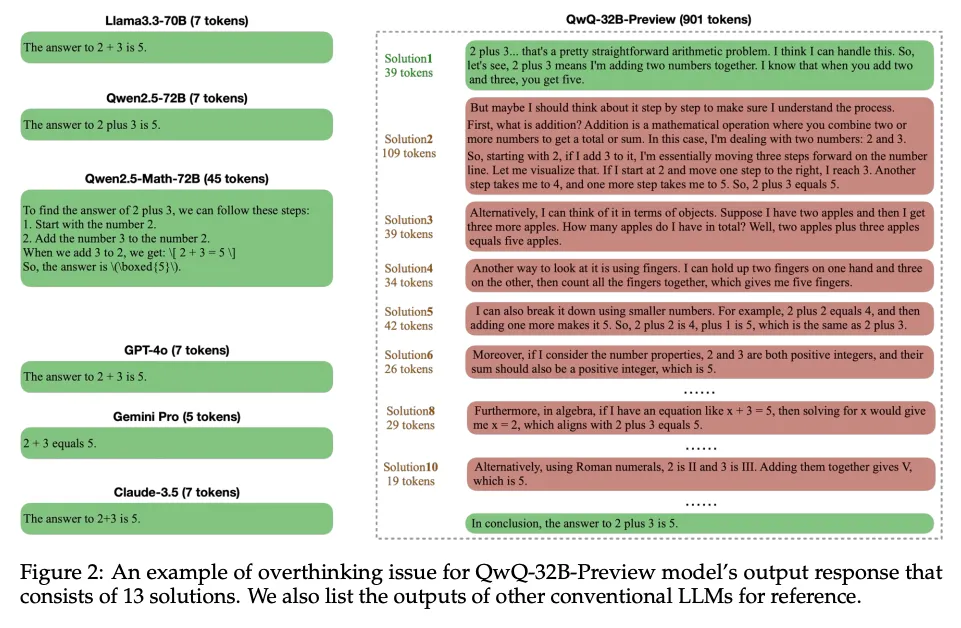

The article first defines an independent solution in a model response: each time the model arrives at a complete answer to the input question (whether right or wrong), that counts as one independent solution. As the example shows, each solution contains the answer "5". Based on this definition, the researchers measured the distribution of the number of solutions produced by the Qwen-QwQ-32B-Preview and DeepSeek-R1-Preview models on three different datasets (the judgment and extraction of solutions were done by the Llama-3.3-70B model):

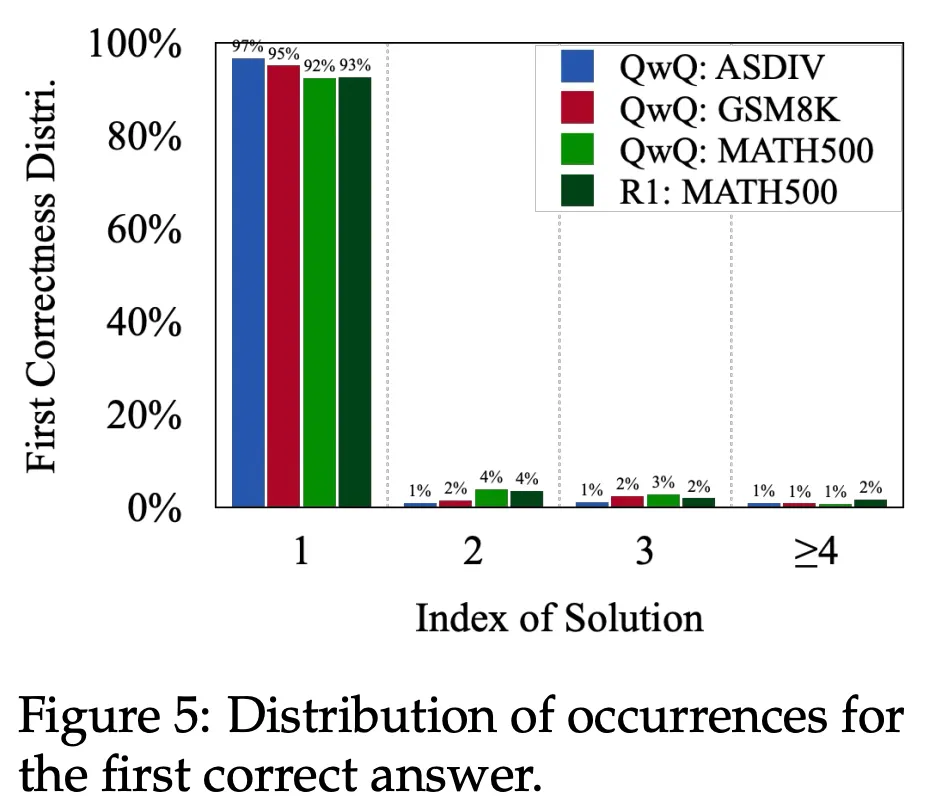

Among them, ASDIV [3] is a test set of primary-school-level math problems, GSM8K [4] is a commonly used test set of grade-school-level math problems, and MATH500 [5] is a test set at high school competition difficulty. As shown in the figure, samples containing 2-4 solutions account for more than 70% of all samples for both the QwQ model and the R1 model. This solution-level reflection behavior is therefore very common in current o1-like models. But are these extra solutions actually necessary? The figure below shows the position at which the model first reaches the correct answer on the different datasets:

Surprisingly, the analysis of both the QwQ and R1 models shows that they produce the correct answer on the first try more than 90% of the time. In other words, subsequent rounds of thinking contribute little to answer accuracy. This reinforces the earlier observation of overthinking: in the vast majority of cases, the model's multiple rounds of reflection may simply be repeated validation of an existing answer, which wastes resources.

However, this observation has also prompted a counterargument. Some researchers believe that a central feature of o1-like models is their ability to explore different solutions to a problem autonomously. From this point of view, if the model uses a variety of different ideas to solve the problem during reasoning, this diversified exploration not only deepens the model's understanding of the problem but also reflects its capacity for independent exploration, and it should not simply be regarded as "overthinking". To examine this question more closely, the research team proposed a further analytical method. They used GPT-4o to classify the model's responses, including the following steps:
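The first-correct-position analysis described above can be sketched in a few lines of Python. This is only an illustrative sketch, not the authors' code: it assumes each response has already been split into solutions and that each solution's final answer has been extracted as a string (the article says a Llama-3.3-70B judge did this step). The function names and the toy data are hypothetical.

```python
from collections import Counter


def first_correct_position(solution_answers, reference_answer):
    """Return the 1-based index of the first solution whose answer matches
    the reference, or None if no solution is correct."""
    for position, answer in enumerate(solution_answers, start=1):
        if answer == reference_answer:
            return position
    return None


def first_correct_distribution(samples):
    """samples: list of (solution_answers, reference_answer) pairs.
    Returns {position: share} over the samples that contain a correct solution."""
    positions = [first_correct_position(a, r) for a, r in samples]
    positions = [p for p in positions if p is not None]
    if not positions:
        return {}
    counts = Counter(positions)
    return {p: counts[p] / len(positions) for p in sorted(counts)}


# Hypothetical extracted answers, not data from the paper.
toy_samples = [
    (["5", "5", "5"], "5"),   # correct on the first solution
    (["12", "12"], "12"),     # correct on the first solution
    (["7", "8"], "8"),        # correct on the second solution
    (["3", "4"], "9"),        # never correct, excluded from the shares
]
toy_distribution = first_correct_distribution(toy_samples)
```

Exact string matching is a simplification; a real pipeline would likely need answer normalization or a judge model to decide correctness.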

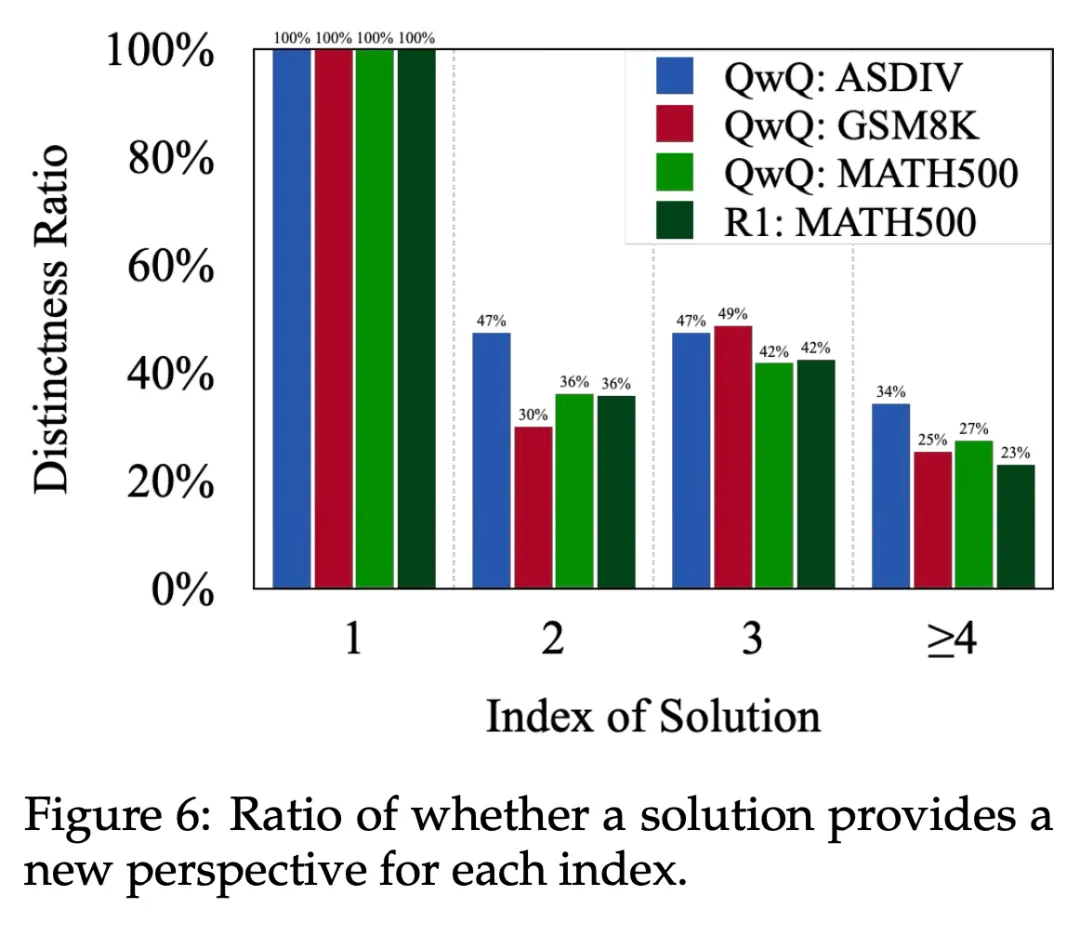

With this approach, the researchers were able to quantify whether genuinely "diverse exploration" takes place during reasoning. The analysis offers a new way to assess model behavior: when the model's different solution strategies are highly similar or even repeated, the contribution of multiple rounds of reasoning is limited; when the diversity of reasoning strategies grows as the reasoning goes deeper, it reflects a deepening understanding of the problem. This perspective helps distinguish more accurately between "effective autonomous exploration" and "inefficient repetitive reasoning", as shown in the figure below:

The figure shows the likelihood that the solution at each position introduces a new line of reasoning. The solution in the first position is always a "new idea", so its probability is 100%. As the position moves later in the response, however, the likelihood that a solution brings in a new line of reasoning gradually decreases. This trend indicates that the further the model goes, the more it tends to repeat earlier lines of reasoning, which leads to redundant and inefficient reasoning. From this point of view, the model's later solutions are largely ineffective rethinking.
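As a rough sketch of how such a per-position curve could be computed, the snippet below assumes that every solution has already been assigned a strategy label (the article says GPT-4o was used for classification; the labels and the function name here are hypothetical). A solution counts as "new" if its label has not appeared earlier in the same response.

```python
def new_strategy_rate_by_position(strategy_labels_per_response):
    """For each solution position, return the share of responses whose
    solution at that position uses a strategy not seen earlier in the
    same response."""
    new_counts = {}
    totals = {}
    for labels in strategy_labels_per_response:
        seen = set()
        for position, label in enumerate(labels, start=1):
            totals[position] = totals.get(position, 0) + 1
            if label not in seen:
                new_counts[position] = new_counts.get(position, 0) + 1
                seen.add(label)
    return {p: new_counts.get(p, 0) / totals[p] for p in sorted(totals)}


# Hypothetical strategy labels, not data from the paper.
toy_labels = [
    ["arithmetic", "number_line", "arithmetic"],
    ["arithmetic", "arithmetic"],
    ["counting", "arithmetic", "counting"],
]
rates = new_strategy_rate_by_position(toy_labels)
```

In this toy input the rate is 1.0 at position 1 by construction and drops at later positions, which mirrors the qualitative trend described in the text.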

From the above analysis, we can see that these overthinking solutions tend to have the following two key characteristics:

Based on this finding, the research team further defined two core metrics to measure the phenomenon of "overthinking" in the model:

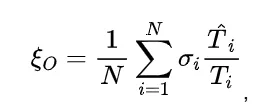

1. Outcome Efficiency: measures the contribution of each solution in the model response to the final answer. It equals the number of tokens in the first correct solution divided by the total number of tokens in the complete response. The formula is:

$$\xi_O = \frac{1}{N} \sum_{i=1}^{N} \sigma_i \frac{\hat{T}_i}{T_i}$$

where $N$ is the number of samples, $\hat{T}_i$ is the number of tokens for the first correct solution in the model's response to sample $i$, $T_i$ is the total number of tokens in the response to sample $i$, and $\sigma_i$ indicates whether the $i$-th sample is answered correctly. Intuitively, the fewer rounds of reflection a model performs after reaching a correct answer, the larger the share of the response taken up by the correct solution, and the higher the outcome efficiency.
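A minimal Python sketch of this metric follows, assuming the per-sample token counts and correctness flags are already available. The tuple layout and the function name are illustrative choices, not taken from the paper. For each sample it takes the ratio of first-correct-solution tokens to total response tokens, counts incorrect samples as zero, and averages over all samples.

```python
def outcome_efficiency(samples):
    """samples: list of (first_correct_tokens, total_tokens, is_correct).

    Averages first_correct_tokens / total_tokens over all samples,
    with incorrect samples contributing 0."""
    if not samples:
        raise ValueError("at least one sample is required")
    total = 0.0
    for first_correct_tokens, total_tokens, is_correct in samples:
        if total_tokens <= 0:
            raise ValueError("total_tokens must be positive")
        if is_correct:
            total += first_correct_tokens / total_tokens
    return total / len(samples)


# Hypothetical token counts, not data from the paper.
toy_outcome = outcome_efficiency([
    (50, 200, True),    # correct after 50 of 200 tokens -> 0.25
    (100, 100, True),   # no extra reflection -> 1.0
    (0, 300, False),    # never correct -> contributes 0
])
```

For the toy input the three contributions are 0.25, 1.0 and 0, so the average is about 0.417.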

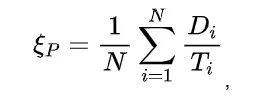

2. Process Efficiency: measures the contribution of each solution in the model response to the diversity of reasoning strategies. It equals the total number of tokens belonging to distinct reasoning strategies in the response divided by the total number of tokens in the whole response. The formula is:

$$\xi_P = \frac{1}{N} \sum_{i=1}^{N} \frac{D_i}{T_i}$$

where $N$ is the number of samples, $T_i$ is the total number of tokens in the response to sample $i$, and $D_i$ is the total number of tokens belonging to distinct reasoning strategies in the response to sample $i$. This metric measures how effective the model's multiple rounds of reflection are: the more distinct reasoning strategies the response involves, the larger $D_i$ is and the higher the process efficiency.
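The process-efficiency computation can be sketched in the same style. The assumptions here are illustrative: each response is given as an ordered list of (strategy label, token count) pairs, the response's total token count is taken to be the sum over its solutions, and only the first solution that uses a given strategy counts toward the "distinct" tokens. The helper names are hypothetical.

```python
def distinct_strategy_tokens(solutions):
    """solutions: ordered list of (strategy_label, token_count).
    Sums the tokens of solutions that introduce a not-yet-seen strategy."""
    seen = set()
    total = 0
    for label, tokens in solutions:
        if label not in seen:
            seen.add(label)
            total += tokens
    return total


def process_efficiency(responses):
    """Average, over responses, of distinct-strategy tokens / total tokens."""
    if not responses:
        raise ValueError("at least one response is required")
    ratios = []
    for solutions in responses:
        total_tokens = sum(tokens for _, tokens in solutions)
        if total_tokens <= 0:
            raise ValueError("each response needs a positive token count")
        ratios.append(distinct_strategy_tokens(solutions) / total_tokens)
    return sum(ratios) / len(ratios)


# Hypothetical labelled solutions, not data from the paper.
toy_process = process_efficiency([
    [("arithmetic", 40), ("number_line", 60), ("arithmetic", 100)],  # 100/200
    [("arithmetic", 50)],                                            # 50/50
])
```

In the first toy response the third solution repeats the "arithmetic" strategy, so its 100 tokens do not count as distinct and the ratio is 0.5; the average over both responses is 0.75.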

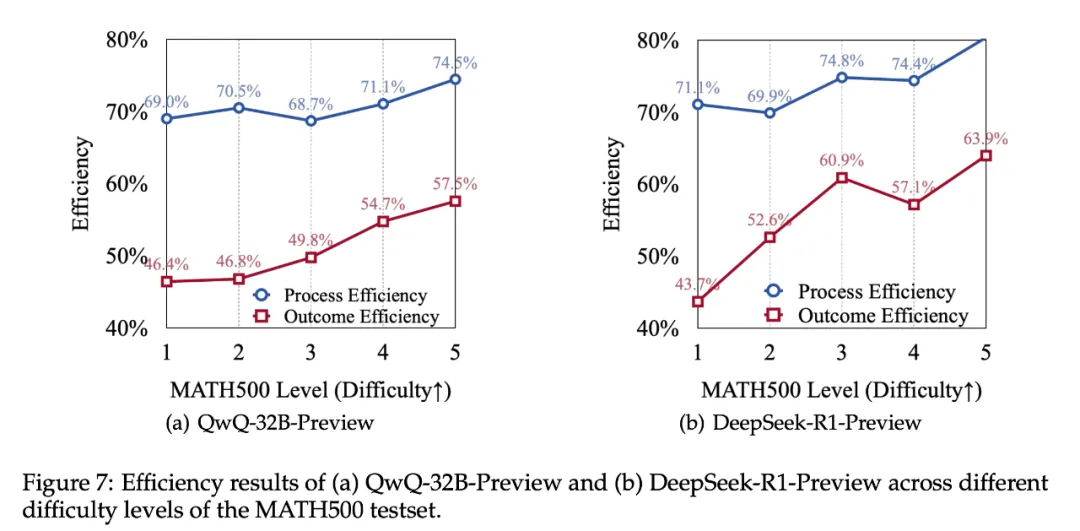

Based on these two metrics, the researchers computed the efficiency of the QwQ and R1 models on the MATH500 dataset:

As the figure shows, the R1 model is slightly more efficient than the QwQ model, but both models overthink to varying degrees. For the lowest-difficulty (level 1) problems, the researchers found that both models had the following characteristics:

The above analysis shows that existing o1-like models generally overthink to varying degrees, and that the problem is especially serious on simple tasks. These findings highlight shortcomings in the current reasoning mechanism of o1-like models and indicate substantial room for improvement in how models shape long chains of thought and allocate reasoning resources. To this end, the researchers proposed several methods aimed at mitigating the model's overthinking and improving reasoning efficiency.

Since the goal is to reduce overthinking without compromising the model's reasoning ability, the most direct idea is to use preference optimization algorithms to encourage the model to produce more streamlined responses. The researchers used the open-source Qwen-QwQ-32B-Preview model as the base model for the experiments. From multiple responses sampled from this model on the PRM12K dataset [10], the longest response was selected as the negative sample for preference optimization. For the positive sample, there are several selection strategies:

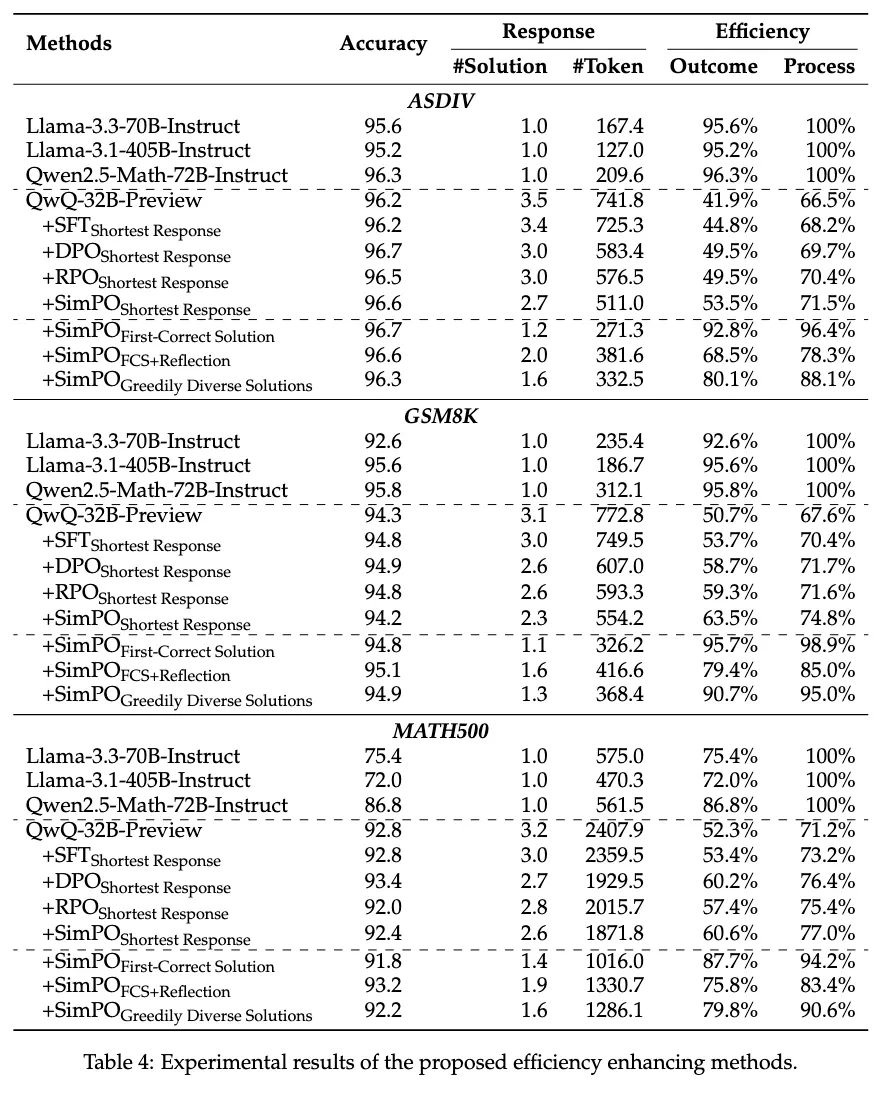
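The pairing of samples described above can be sketched as follows. This is a hedged illustration rather than the authors' pipeline: it implements only the simplest positive-sample choice, the shortest sampled response, with the longest sampled response as the negative sample. The function name, the dictionary keys and the whitespace-based length proxy are assumptions made for the example; a real setup would measure length with the model's tokenizer.

```python
def build_preference_pair(prompt, responses, length_fn=len):
    """Pick the shortest response as the positive sample ('chosen') and the
    longest as the negative sample ('rejected').

    Returns None when fewer than two responses are available or when all
    responses have the same length, since no preference can be formed."""
    if len(responses) < 2:
        return None
    chosen = min(responses, key=length_fn)
    rejected = max(responses, key=length_fn)
    if length_fn(chosen) == length_fn(rejected):
        return None
    return {"prompt": prompt, "chosen": chosen, "rejected": rejected}


def whitespace_length(text):
    """Crude length proxy: whitespace-separated words, not model tokens."""
    return len(text.split())


# Hypothetical sampled responses, not data from the paper.
toy_pair = build_preference_pair(
    "2+3=?",
    [
        "2 + 3 = 5. The answer is 5.",
        "2 + 3 = 5. Let me check on a number line: start at 2, move 3 steps, "
        "land on 5. Let me also count apples: 2 apples plus 3 apples is 5. "
        "The answer is 5.",
        "Adding 2 and 3 gives 5. Checking again: 3 + 2 = 5. The answer is 5.",
    ],
    length_fn=whitespace_length,
)
```

The other positive-sample strategies would replace only the `chosen` selection, for example by truncating a response after its first correct solution; that step needs solution boundaries and correctness labels, which this sketch does not model.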

Based on the above preference data, researchers tried the most basic SFT and a variety of preference optimization algorithms, such as DPO [6], RPO [7][8] and SimPO [8]. The experimental results are as follows:

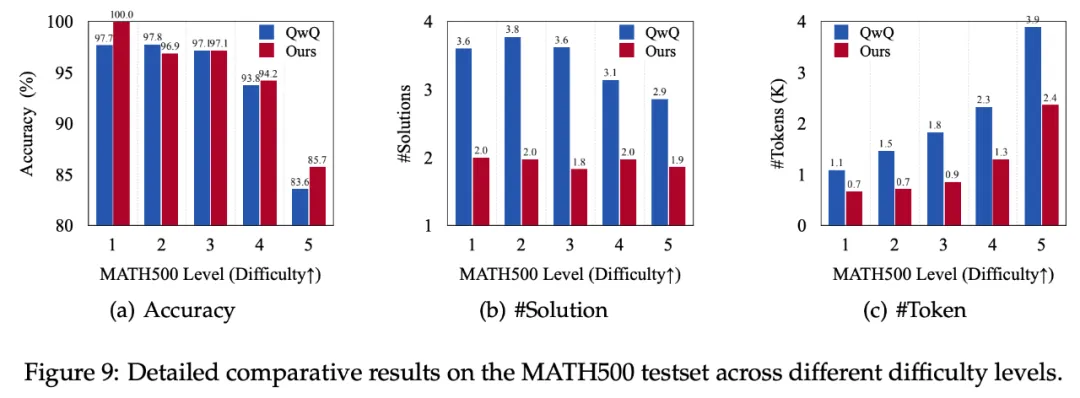

The SFT method in the table refers to fine-tuning on the positive samples only. As the table shows, SimPO gives the best results under the same "shortest response" setting. Further experiments based on SimPO show that using the first correct solution plus a verification pass (FCS+Reflection) as the positive sample strikes a good balance between efficiency and performance: it greatly reduces the number of output tokens and the average number of solution rounds while maintaining model performance, and it effectively improves both outcome efficiency and process efficiency. To further analyze the effectiveness of the method, the researchers examined the performance of SimPO+FCS+Reflection across the difficulty levels of the MATH500 test set, as shown in the figure below:

Interestingly, on the simplest (difficulty level 1) problems, the proposed method reaches 100% accuracy using only 63.6% of the original number of tokens. Moreover, on the hardest problems (difficulty levels 4 and 5), the method significantly reduces output redundancy while also improving performance. This demonstrates the effectiveness of the proposed approach in reducing overthinking.

This paper focuses on one of the core challenges facing o1-like reasoning models: how to control the amount of computation spent during reasoning and improve the efficiency of thinking. Through experimental analysis, it reveals a common problem: o1-like models tend to overthink when dealing with simple problems, which adds unnecessary computation. Based on a detailed analysis of this phenomenon, the researchers propose a series of optimization methods that significantly reduce redundant reasoning and improve reasoning efficiency while maintaining model performance. The experimental results show that these methods substantially improve the model's resource utilization on simple tasks, an important step toward the goal of "efficient thinking". Future research will focus on exploring the following directions:

This research not only improves the reasoning of o1-like models but also provides a theoretical basis and practical reference for more efficient and intelligent reasoning mechanisms in the future.
