
#News ·2025-01-08

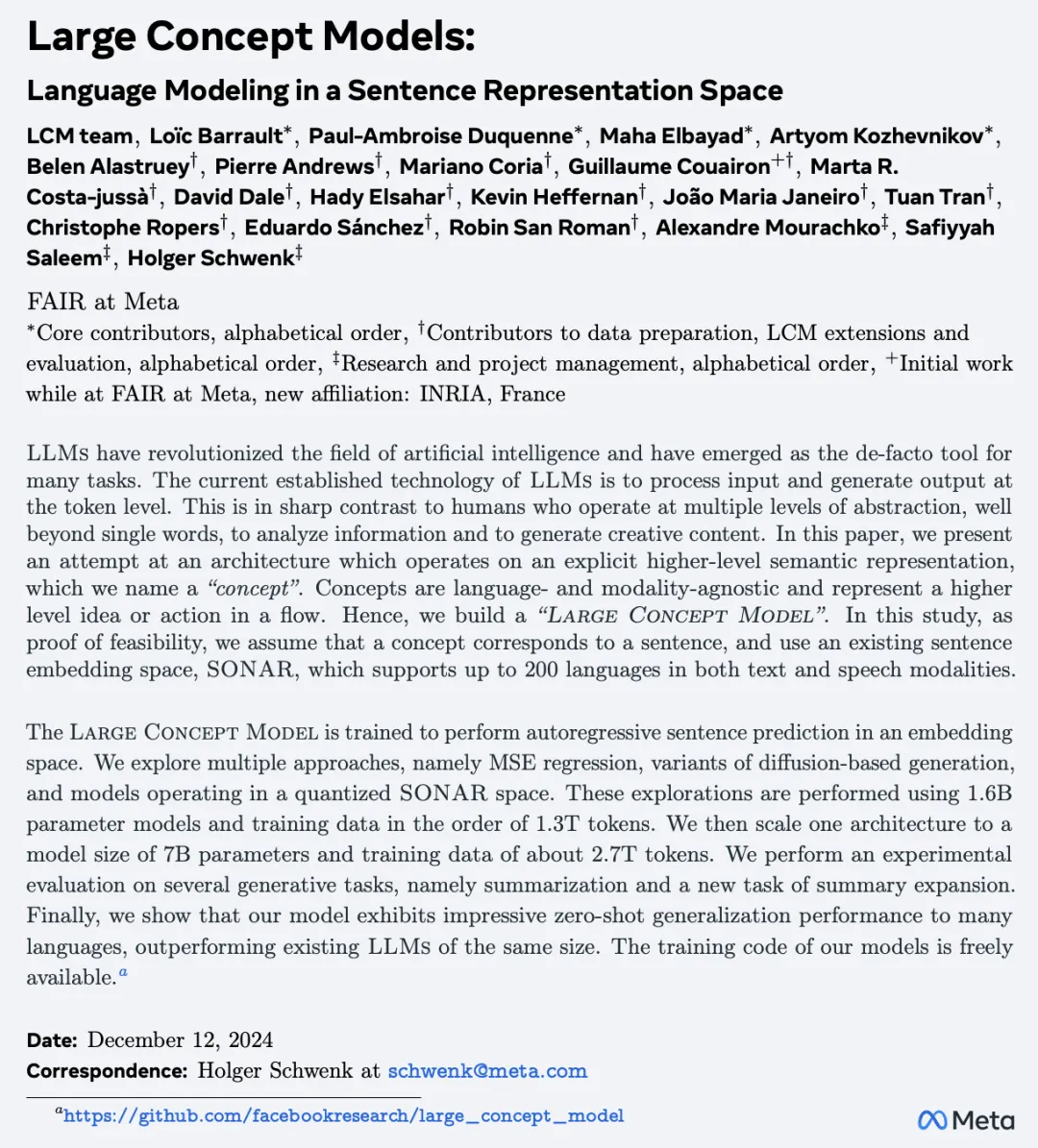

Recently, inspired by the way humans communicate and reason at the level of concepts, Meta AI researchers proposed a new language modeling paradigm, the "Large Concept Model" (LCM), which decouples language representation from reasoning.

"If Meta's Large Concept Model really works, then models of equal or better quality will be much smaller. For example, a 1B model could be comparable to a 70B Llama 4. What a big improvement!"

In a recent interview, Yann LeCun, Meta's chief scientist, spoke about the next generation of AI systems, LCM (Large Concept Model). The new system will no longer be based solely on the next token prediction, but will make sense of the world through observation and interaction like babies and small animals.

Yuchen Jin, a PhD student in computer science and engineering at the University of Washington, welcomed Meta's new paper, saying that it increases his confidence that tokenization will be gone for good, and that large language models will need to think more like humans to achieve AGI.

Some even speculate that Meta is the dark horse of the AI race and will surprise everyone with its models.

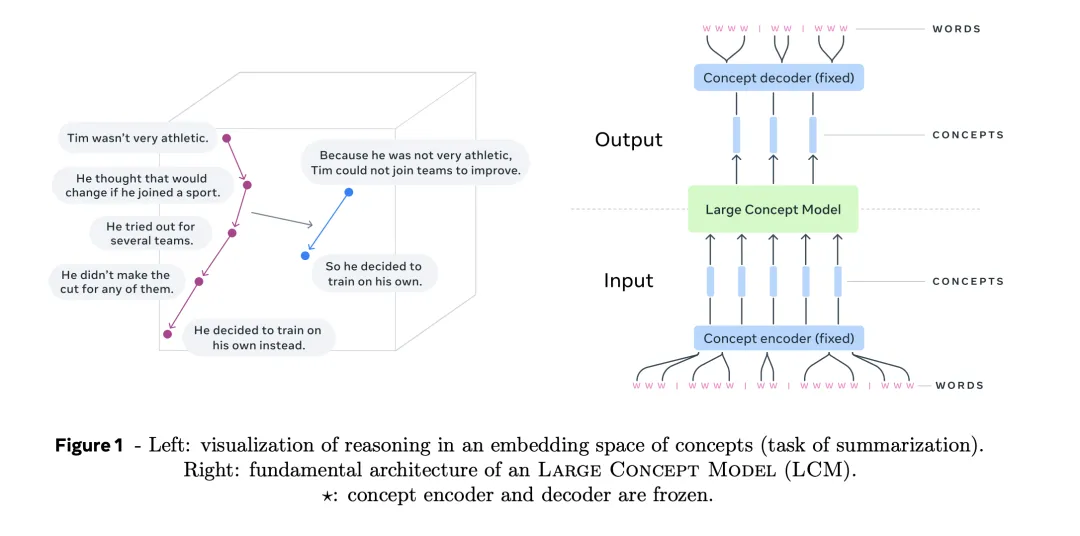

In short, the Large Concept Model (LCM) models reasoning in a sentence representation space: it abandons tokens, operates directly on explicit high-level semantic representations, and frees reasoning from the constraints of any particular language or modality.

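Specifically, an LCM can be built on top of an encoder and a decoder for a fixed-size sentence embedding space, and the pipeline is simple: the input is split into sentences, each sentence is encoded into a concept embedding, the LCM predicts new concept embeddings, and these are decoded back into text.

Paper link: https://arxiv.org/pdf/2412.08821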

Code link: https://github.com/facebookresearch/large_concept_model

The paper's analysis of inference efficiency is quite interesting: up to about 1,000 tokens of context, the new model theoretically requires more compute than Llama2-7b; beyond that, the longer the context, the greater the new model's advantage. The detailed results are shown in Figure 13 of the paper, where blue represents Llama2-7b and red and green represent the new model, with 7B and 1.6B parameters respectively. The plot on the right is a zoomed-in view of the plot on the left for 0 to 3,000 tokens.

Other highlights of the new model are as follows:

Despite the unquestionable success and continued progress of large language models, existing LLMs lack an important feature of human intelligence: explicit reasoning and planning at multiple levels of abstraction.

The human brain doesn't operate at the word level.

When solving a complex task or writing a long document, for example, humans typically use a top-down process: first plan the overall structure at a high level, then gradually add details at a lower level of abstraction.

One could argue that LLMs implicitly learn a hierarchical representation, but a model with an explicit hierarchical structure is better suited to producing long-form output.

The new approach will be significantly different from token-level processing, moving closer to (hierarchical) reasoning in the abstract space.

Context is represented in the abstract space on which the LCM operates, and this space is independent of language and modality.

That is, the underlying reasoning process is modeled at a purely semantic level, rather than as its instantiation in a particular language.

In order to validate the new method, the abstraction levels are limited to two types: subword tokens and concepts.

A "concept" is defined as an abstract, atomic idea that cannot be divided further.

In practice, a concept often corresponds to a sentence in a text document, or to an equivalent speech utterance.

The authors argue that sentences, rather than words, are the appropriate unit for achieving language independence.

This is in stark contrast to current token-based LLMs.

Training a Large Concept Model requires an encoder and a decoder for a sentence embedding space. A new embedding space, optimized for the reasoning architecture, could also be trained.

In this study, Meta's open-source SONAR is used as the encoder and decoder for sentence embeddings.

The SONAR decoder and encoder (shown in blue) are fixed and do not require training.

What's more, the concepts that the LCM (the green part in the figure) outputs can be decoded into other languages or modalities without having to perform the entire reasoning process from scratch.

Similarly, a particular reasoning operation, such as summarization, can be performed zero-shot on input in any language or modality, because the reasoning only needs to manipulate concepts.

In short, the LCM neither holds information about the input language or modality nor generates output in a particular language or modality.

In a way, the LCM architecture is similar to the JEPA approach (see the link below), which also aims to predict the representation of the next observation in an embedding space.

Paper link: https://openreview.net/pdf?id=BZ5a1r-kVsf

However, unlike JEPA, which places more emphasis on learning a representation space in a self-supervised manner, the LCM focuses on making accurate predictions within an existing embedding space.

The SONAR text embedding space was trained with an encoder/decoder architecture in which cross-attention is replaced by a fixed-size bottleneck, as shown in Figure 2 below.

SONAR is widely used for machine translation and supports text input and output in 200 languages, speech input in 76 languages, and speech output in English.

Because the LCM operates directly on SONAR concept embeddings, it can reason over all the languages and modalities that SONAR supports.

In order to train and evaluate LCM, the original text data set needs to be transformed into a SONAR embedding sequence, where each sentence corresponds to a point in the embedding space.

However, working with large text datasets raises several practical difficulties: sentences are hard to segment accurately, and some sentences are very long and complex, which can degrade the quality of their SONAR embeddings.

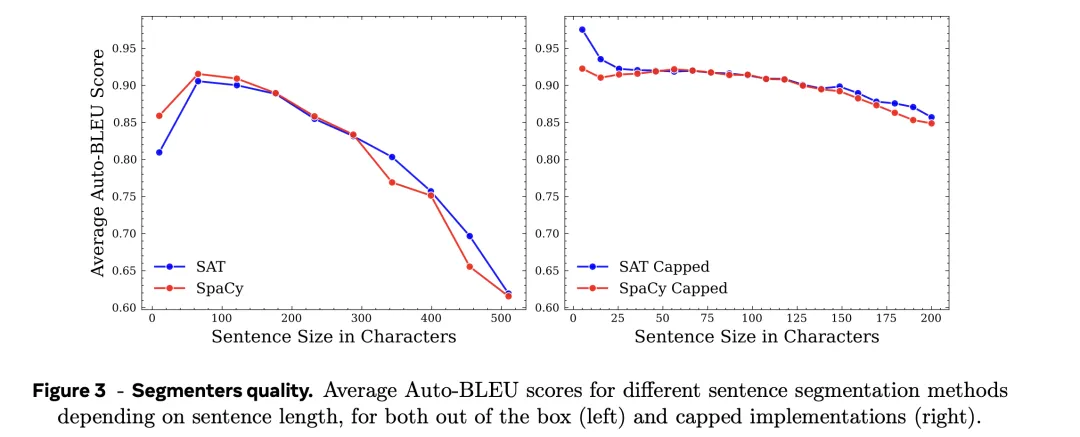

In this paper, the SpaCy splitter (denoted SpaCy) and Segment any Text (denoted SaT) are used.

SpaCy is a rule-based sentence splitter, while SaT segments text by predicting sentence boundaries at the token level.

New splitters SpaCy Capped and SaT Capped are also customized by limiting the length of sentences.
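
As a rough illustration of what capping a splitter involves, the sketch below post-processes one sentence so that no fragment exceeds a character limit. The function name `cap_sentence`, the default limit of 200 characters, and the fallback rules (break after punctuation first, then at whitespace, then a hard cut) are assumptions made for this example; they are not taken from the paper's implementation.

```python
import re


def cap_sentence(sentence: str, max_chars: int = 200) -> list[str]:
    """Split one over-long sentence into fragments of at most max_chars characters.

    Hypothetical helper: prefers a break after , ; or : then a break at
    whitespace, and only hard-cuts when neither exists inside the window.
    """
    chunks = []
    rest = sentence.strip()
    while len(rest) > max_chars:
        window = rest[:max_chars]
        # End offsets of punctuation marks followed by whitespace in the window.
        breaks = [m.end() for m in re.finditer(r"[,;:]\s", window)]
        if breaks:
            cut = breaks[-1]
        else:
            space = window.rfind(" ")
            cut = space if space > 0 else max_chars
        chunks.append(rest[:cut].strip())
        rest = rest[cut:].strip()
    if rest:
        chunks.append(rest)
    return chunks
```

In use, a splitter such as SpaCy or SaT would run first, and a helper like this would only be applied to the sentences that are still longer than the limit.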

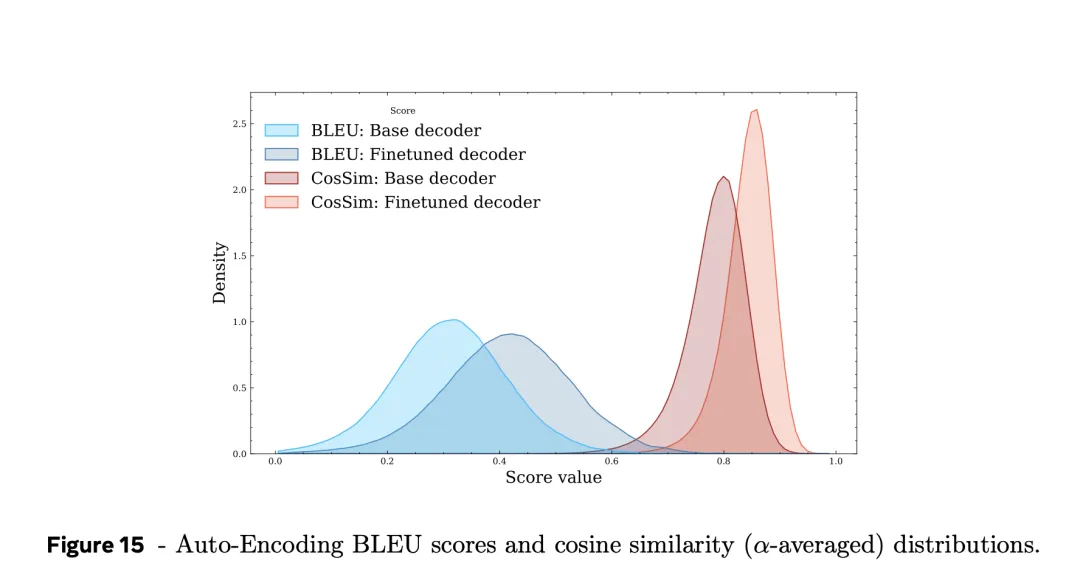

A good splitter produces segments that can be encoded and then decoded without loss of signal, and therefore obtains a higher AutoBLEU score.

To analyze the quality of the splitters, 10k documents representing about 500k sentences were sampled from the pre-training dataset.

In the test, each splitter is used to process the document, then the sentences are encoded and decoded, and the AutoBLEU score is calculated.

As Figure 3 shows, if the character limit is 200, the SaT Capped method is always slightly better than the SpaCy Capped method.

However, as sentence length increases, both splitters show a clear drop in performance.

This low performance is particularly pronounced when sentences are longer than 250 characters, highlighting the limitations of using a splitter without setting an upper limit.

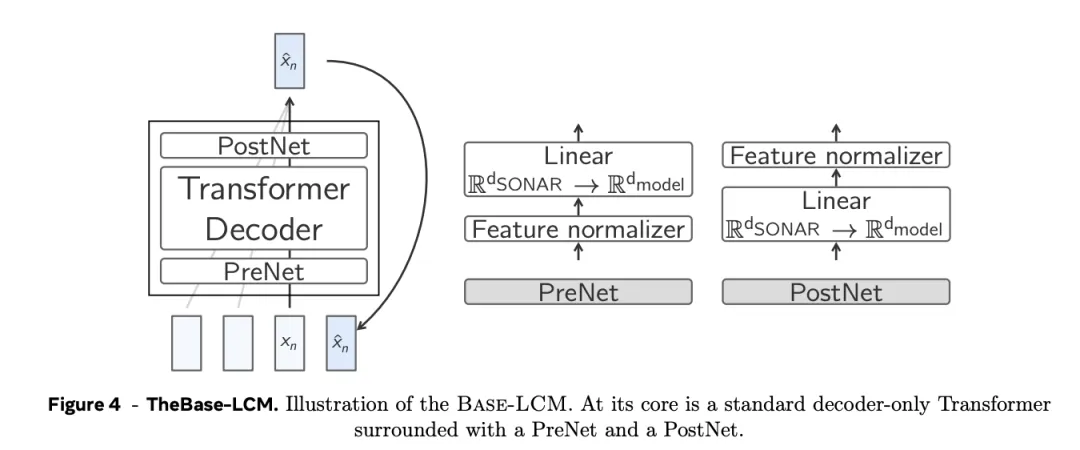

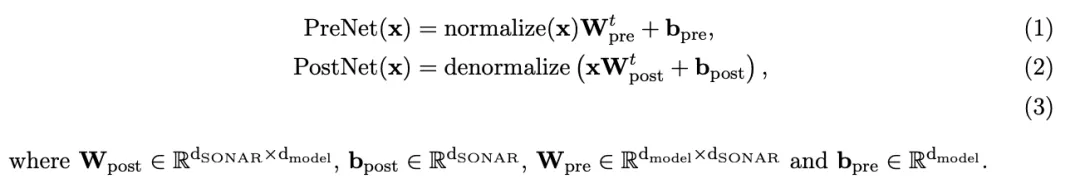

The baseline architecture for next-concept prediction is a standard decoder-only Transformer that maps a sequence of preceding concepts (i.e., sentence embeddings) to a sequence of future concepts.

As shown in Figure 4, the Base-LCM is equipped with a "PreNet" and a "PostNet". The PreNet normalizes the input SONAR embeddings and maps them to the model's hidden dimension.

The Base-LCM is trained on a semi-supervised task: the model predicts the next concept, and its parameters are optimized by minimizing the distance between the predicted next concept and the true next concept, that is, by MSE regression.
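
The objective described above can be sketched in a few lines. This is a minimal illustration in plain Python, assuming embeddings are given as lists of floats; the helper names `shift_targets` and `mse_next_concept_loss` are hypothetical, and the paper's exact normalization of the loss may differ.

```python
def shift_targets(concepts):
    """Pair each position with its successor: inputs are x_1..x_{N-1}, targets x_2..x_N."""
    return concepts[:-1], concepts[1:]


def mse_next_concept_loss(predicted, target):
    """Mean squared error between predicted and true next-concept embeddings.

    Both arguments are lists of equal-length vectors, one vector per position.
    """
    total, count = 0.0, 0
    for p_vec, t_vec in zip(predicted, target):
        for p, t in zip(p_vec, t_vec):
            total += (p - t) ** 2
            count += 1
    return total / count
```

For example, a trivial "model" that copies its input as the prediction for the next position can be scored by calling `shift_targets` on a document's embeddings and passing the two halves to `mse_next_concept_loss`.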

Diffusion-based LCM is a generative latent variable model that learns a model distribution pθ to approximate the data distribution q.

Like the Base-LCM, the diffusion LCM is modeled auto-regressively, generating one concept at a time in the document.

The "Large Concept Model" does not perform simple "next token prediction" but rather "next concept prediction"; that is, the next concept is generated conditioned on the preceding context.

Specifically, at position n of the sequence, the model predicts the probability of the concept at that position given all preceding concepts; in other words, it learns a conditional probability distribution over continuous embeddings.

To learn a conditional probability over continuous data, diffusion models from computer vision can be adapted to generate sentence embeddings.

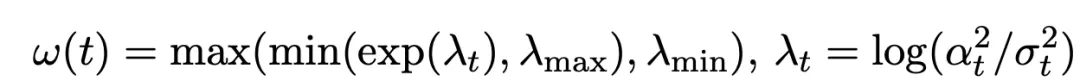

The paper discusses how to design different diffusion models for generating sentence embeddings, including different forward noising processes and reverse denoising processes.

Different variance schedules yield different noise schedules and hence different forward processes; the influence of different initial states on the model is reflected by different weighting strategies.

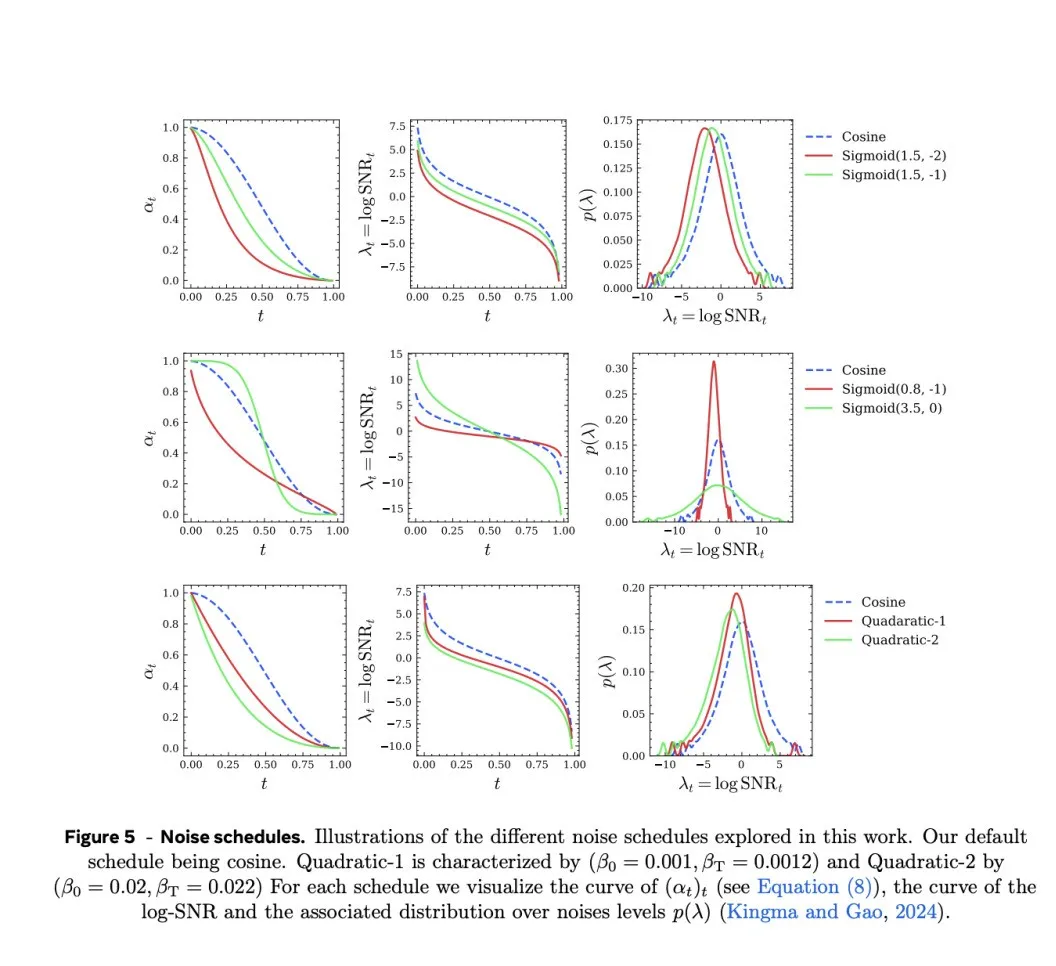

Three types of noise schedule are considered in the paper: cosine, quadratic, and sigmoid.
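
To make the idea of a noise schedule concrete, here is a minimal sketch of the cosine schedule as it is usually defined in the image diffusion literature (Nichol and Dhariwal, 2021), together with a variance-preserving forward noising step. The offset `s = 0.008` and the function names are assumptions for this example; the paper's exact parameterization, and its quadratic and sigmoid variants, are not reproduced here.

```python
import math
import random


def cosine_alpha_bar(t: int, T: int, s: float = 0.008) -> float:
    """Cumulative signal level at step t of T under the cosine schedule."""
    def f(u: float) -> float:
        return math.cos((u / T + s) / (1 + s) * math.pi / 2) ** 2

    return f(t) / f(0)


def forward_noise(x0, t: int, T: int, rng: random.Random):
    """Sample x_t = sqrt(a) * x0 + sqrt(1 - a) * eps, with a = cosine_alpha_bar(t, T)."""
    a = cosine_alpha_bar(t, T)
    return [math.sqrt(a) * v + math.sqrt(1 - a) * rng.gauss(0.0, 1.0) for v in x0]
```

At `t = 0` the sample equals the clean embedding, and as `t` approaches `T` it approaches pure Gaussian noise; other schedules only change how quickly the signal level falls in between.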

The authors also propose a weighting strategy for the reconstruction loss.

The paper analyzes in detail the effects of the different noise schedules and weighting strategies.

Acceleration techniques developed for diffusion models in the image domain can also be used to speed up LCM inference.

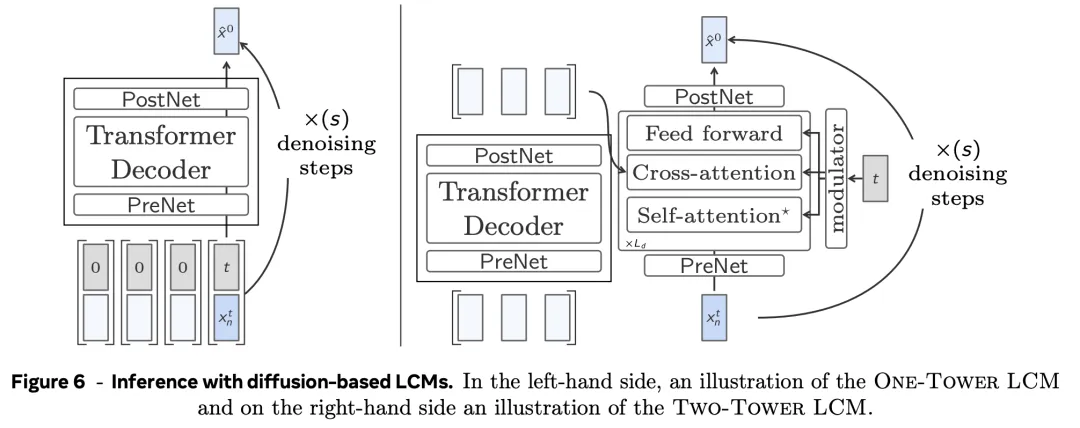

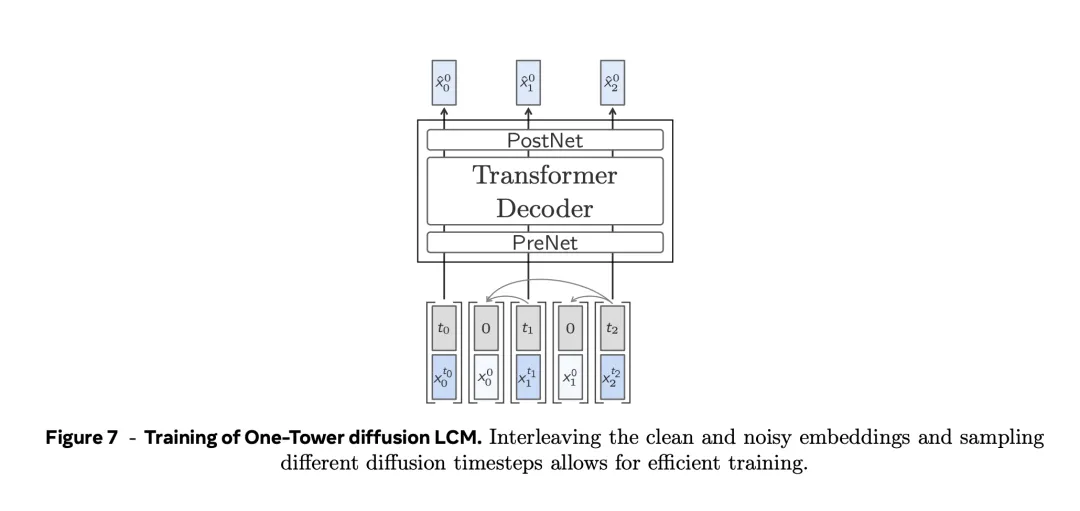

On the left of Figure 6, the One-Tower diffusion LCM consists of a single Transformer backbone whose task is to predict the clean next sentence embedding, given the preceding sentence embeddings and a noisy input.

On the right of Figure 6, the Two-Tower diffusion LCM separates the encoding of the preceding context from the diffusion of the next embedding.

The first model, the contextualizer, takes the context vectors as input and encodes them causally.

That is, it is a decoder-only Transformer with causal self-attention.

The output of the contextualizer is then fed into a second model, the denoiser.

The denoiser predicts the clean next sentence embedding by iteratively denoising a latent Gaussian variable.

The denoiser consists of a stack of Transformer blocks with cross-attention blocks that attend to the encoded context.

The denoiser and the contextualizer share the same Transformer hidden dimension.

Adaptive layer normalization (AdaLN) is used in every block of every Transformer layer of the denoiser, including the cross-attention layers.

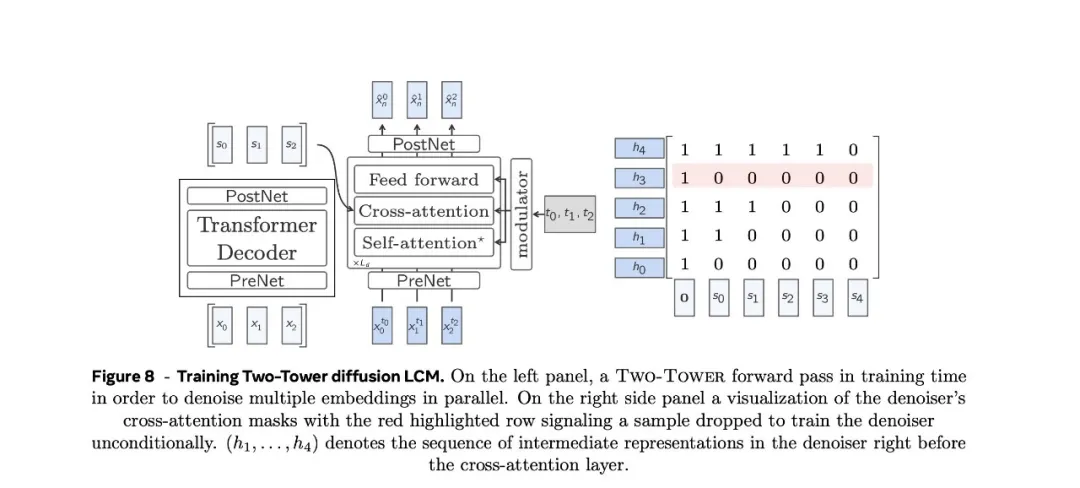

During training, the parameters of the Two-Tower model are optimized for next-sentence prediction on unsupervised sequences of embeddings.

The causal embeddings from the contextualizer are shifted by one position in the denoiser, and a causal mask is used in its cross-attention layers. A zero vector is prepended to the context vectors so that the first position in the sequence can be predicted (see Figure 8). To train the model both conditionally and unconditionally, in preparation for inference with classifier-free guidance scaling, random rows are dropped from the cross-attention mask at a certain rate, and the corresponding positions are denoised with only the zero vector as context.
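
The two ingredients of this training trick can be sketched as follows. This is a simplified illustration, not the paper's code: `drop_context_rows` stands in for removing rows from the cross-attention mask, `guided_prediction` uses the standard classifier-free guidance combination from the diffusion literature, and both names and the list-based representation are assumptions.

```python
import random


def drop_context_rows(num_positions: int, p_drop: float, rng: random.Random) -> list[bool]:
    """Return, per position, True if its context is kept and False if it is dropped.

    A dropped position would see only the zero vector as context, so the
    denoiser is trained unconditionally on it.
    """
    return [rng.random() >= p_drop for _ in range(num_positions)]


def guided_prediction(cond, uncond, scale: float):
    """Classifier-free guidance: move from the unconditional prediction toward
    (and, for scale > 1, past) the conditional prediction."""
    return [u + scale * (c - u) for c, u in zip(cond, uncond)]
```

With `scale = 1.0` the result is the ordinary conditional prediction, and `scale = 0.0` recovers the unconditional one; larger values push the sample further toward what the context implies.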

In image and speech generation, there are currently two main approaches to generating continuous data: diffusion modeling, or first learning to quantize the data and then modeling these discrete units.

Moreover, the text modality remains discrete: although the model works with continuous representations in SONAR space, all possible text sentences (below a given number of characters) form a cloud of points in SONAR space rather than a truly continuous distribution.

These considerations led the authors to explore quantizing SONAR representations and then modeling these discrete units to solve the next-sentence prediction task.

Finally, with this approach, it is natural to use temperature, top-p, or top-k sampling to control the randomness and diversity of sampling for the next sentence representation.
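
For readers unfamiliar with these sampling controls, the sketch below shows temperature scaling combined with top-k filtering over a list of logits for discrete units. It is a generic illustration under assumed names (`sample_unit`, plain Python lists); it is not how the paper's quantized LCM is implemented, and top-p is omitted for brevity.

```python
import math
import random


def sample_unit(logits, temperature: float = 1.0, top_k=None, rng=random):
    """Sample an index from logits after temperature scaling and optional top-k filtering.

    temperature must be positive; lower values make sampling more deterministic.
    """
    order = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)
    keep = order[:top_k] if top_k else order
    scaled = [logits[i] / temperature for i in keep]
    peak = max(scaled)  # subtract the maximum for numerical stability
    weights = [math.exp(s - peak) for s in scaled]
    return rng.choices(keep, weights=weights, k=1)[0]
```

Setting `top_k=1` reduces this to greedy decoding, while a higher temperature flattens the distribution and increases diversity.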

You can use residual vector quantization as a coarse-to-fine quantization technique to discretise SONAR representations.

Vector quantization maps continuous input embeddings to the nearest entry in a learned codebook.

Each iteration of RVQ uses an additional codebook to quantize the residual error left by the previous quantization step.

The RVQ codebook was trained on 15 million English sentences extracted from the Common Crawl in the experiment, using 64 quantizers, each using 8,192 units.

One property of RVQ is that the cumulative sum of the centroid embeddings from the first codebooks is an intermediate, coarse approximation of the input SONAR vector.
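
The coarse-to-fine behaviour of residual vector quantization can be sketched with tiny hand-written codebooks. This is a minimal illustration in plain Python; the function names are hypothetical, and real codebooks (64 quantizers with 8,192 units each in the experiment) would be learned from data rather than written by hand.

```python
def nearest(codebook, vec):
    """Index of the codebook entry closest to vec in squared L2 distance."""
    dists = [sum((c - v) ** 2 for c, v in zip(code, vec)) for code in codebook]
    return dists.index(min(dists))


def rvq_encode(vec, codebooks):
    """Quantize vec stage by stage; each stage codes the residual of the previous one."""
    residual = list(vec)
    indices = []
    for codebook in codebooks:
        idx = nearest(codebook, residual)
        indices.append(idx)
        residual = [r - c for r, c in zip(residual, codebook[idx])]
    return indices


def rvq_decode(indices, codebooks):
    """Reconstruct by summing the selected centroid from each codebook used."""
    out = [0.0] * len(codebooks[0][0])
    for idx, codebook in zip(indices, codebooks):
        out = [o + c for o, c in zip(out, codebook[idx])]
    return out
```

Decoding with only the first few indices gives the coarse approximation mentioned above, and each additional codebook refines it.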

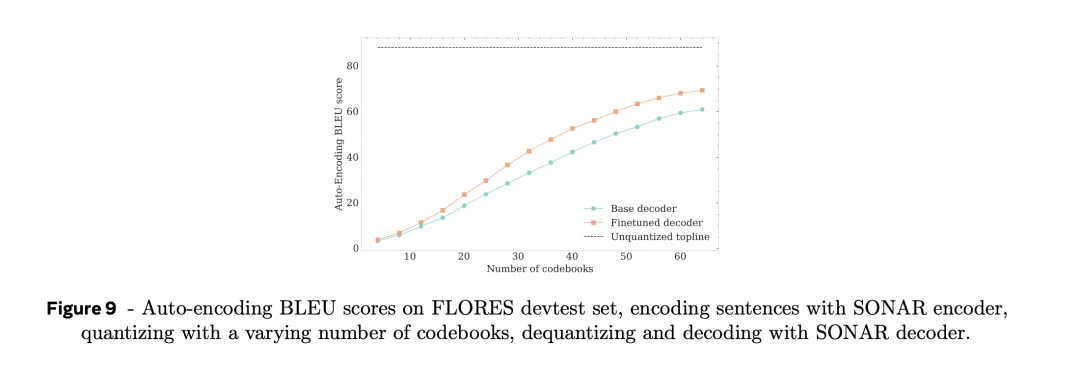

This makes it possible to study how the number of codebooks affects the auto-encoding BLEU score, by decoding the quantized embeddings with the SONAR text decoder.

As Figure 9 shows, the auto-encoding BLEU score increases with the number of codebooks.

When all 64 codebooks are used, the auto-encoding BLEU score is about 70% of the score obtained with continuous SONAR embeddings.

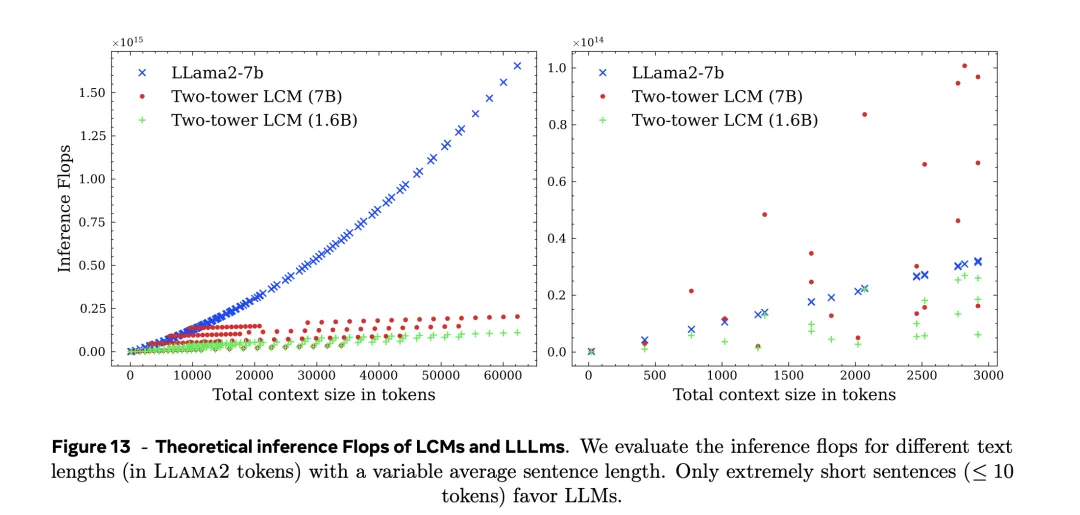

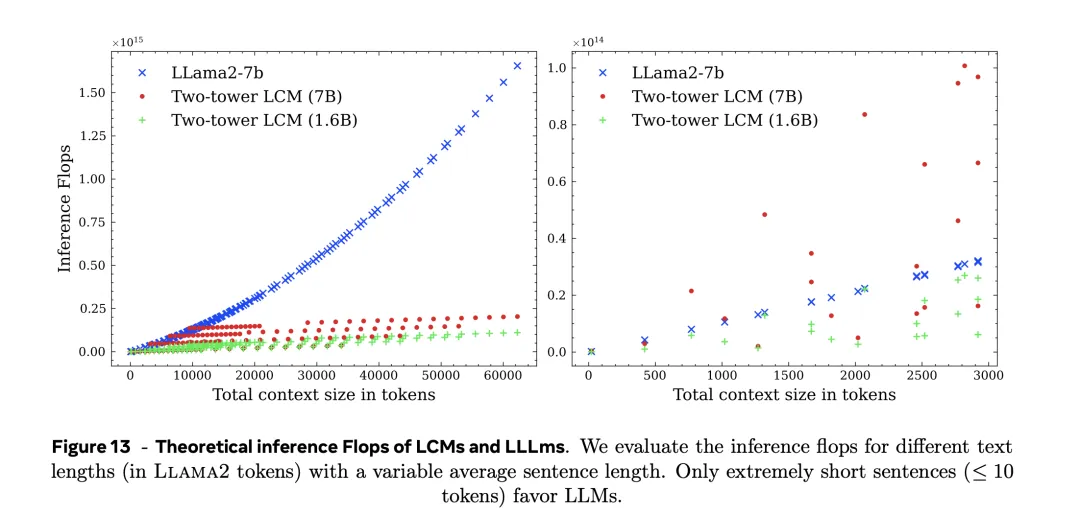

The authors directly compare the inference compute cost of the Two-Tower diffusion LCM with that of an LLM for different prompt lengths and total output lengths (measured in tokens).

Specifically, in Figure 13 of the paper, the authors analyze the theoretical number of floating-point operations (FLOPs) required for inference by a Large Concept Model (LCM) and by a large language model.

As shown on the left, LLMs have an advantage only for very short sentences (10 tokens or fewer).

Once the context exceeds about 10,000 tokens, the number of tokens has almost no further effect on the compute required for inference, for both the Two-Tower LCM (1.6B) and the Two-Tower LCM (7B).

When modeling in a latent space, one relies primarily on the induced geometry (L2 distance).

However, the homogeneous Euclidean geometry of any latent representation will not perfectly match the underlying text semantics.

This is evidenced by the fact that even a small perturbation in the embedding space can cause a drastic loss of semantic information after decoding.

This property of the embeddings is called "fragility".

Therefore, the fragility of the semantic embeddings (i.e., the SONAR codes) needs to be quantified in order to understand the quality of the LCM training data and how this fragility can hinder the LCM's training dynamics.

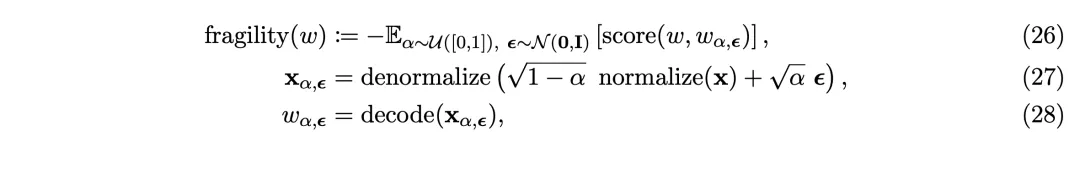

Given a text fragment w and its SONAR code x = encode(w), the fragility of w is defined in terms of how much the decoded text degrades when noise is added to x.

Fifty million text snippets were randomly sampled, and nine perturbations at different noise levels were generated for each sample. In the experiment, mGTE is used as the external encoder for the cosine similarity metric (CosSim).
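
The perturbation experiment can be sketched as a simple Monte Carlo estimate. Everything in this block is an assumption made for illustration: `encode`, `decode`, and `score` are caller-supplied placeholders (standing in for the SONAR encoder and decoder and for a metric such as BLEU or CosSim), the variance-preserving noise mix is one plausible choice, and reporting the negated average score (so that higher means more fragile) is a convention chosen here. The paper's exact definition, including any normalization of the embeddings, may differ.

```python
import math
import random


def perturb(x, alpha: float, rng: random.Random):
    """Mix an embedding with Gaussian noise: sqrt(1 - alpha) * x + sqrt(alpha) * eps."""
    return [math.sqrt(1 - alpha) * v + math.sqrt(alpha) * rng.gauss(0.0, 1.0) for v in x]


def fragility(text, encode, decode, score, noise_levels, samples_per_level, rng):
    """Negated average score between the text and its noisy round trips (hypothetical helper)."""
    x = encode(text)
    scores = []
    for alpha in noise_levels:
        for _ in range(samples_per_level):
            noisy_text = decode(perturb(x, alpha, rng))
            scores.append(score(text, noisy_text))
    return -sum(scores) / len(scores)
```

With a noise level of 0 the round trip is unperturbed, so a lossless encoder and decoder pair yields the minimum value of -1.0 for a score bounded by 1.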

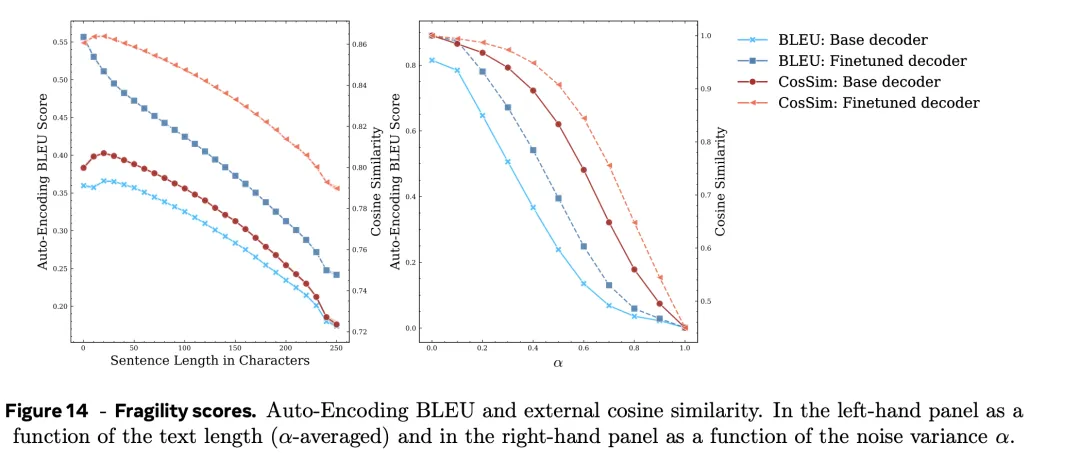

The fragility scores are shown in Figure 14.

The left and right plots in Figure 14 show how the BLEU and CosSim scores vary with text length and noise level, respectively.

It can be observed that the BLEU score declines faster than the cosine similarity.

Most importantly, the fragility score is sensitive to the choice of decoder. Specifically, as the amount of noise increases, the auto-encoding BLEU and cosine similarity scores of the fine-tuned decoder decline significantly more slowly than those of the base decoder.

Figure 15 shows the overall score distribution at the average perturbation level; fragility scores vary widely across SONAR samples.

Sentence length may explain this variation. Whereas the auto-encoding BLEU metric drops by only 1-2% for long sentences, fragility is more sensitive to sentence length, and both similarity measures fall faster.

This suggests that using SONAR and LCM models with a maximum sentence length above 250 characters can be very challenging. On the other hand, while short sentences are more robust on average, splitting a long sentence in the wrong place can produce a shorter but more fragile fragment.

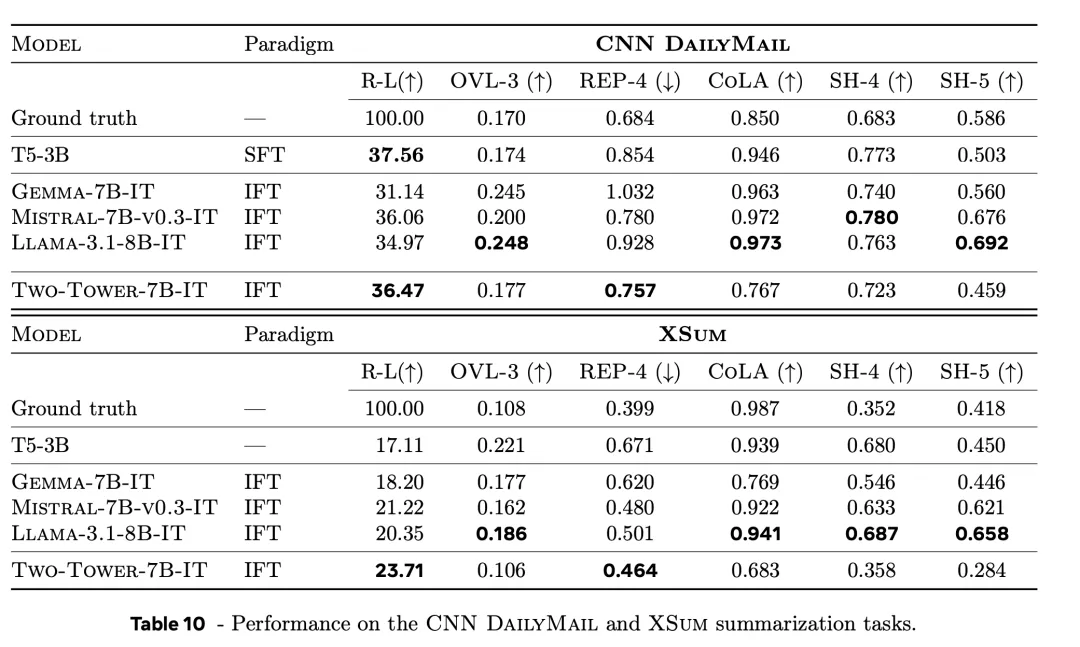

Table 10 lists the results of the different baselines and the LCM on the summarization task, on the CNN DailyMail and XSum datasets.

The LCM's Rouge-L scores (the R-L column in the table) are competitive even when compared with an LLM fine-tuned specifically for the task (T5-3B).
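
For reference, Rouge-L is based on the longest common subsequence (LCS) between a candidate and a reference summary. The sketch below computes a plain F1 variant over whitespace tokens; it is a simplified illustration, and the function names, the tokenization, and the equal weighting of precision and recall are assumptions rather than the exact evaluation setup used in the paper.

```python
def lcs_length(a, b) -> int:
    """Length of the longest common subsequence of two token lists."""
    prev = [0] * (len(b) + 1)
    for x in a:
        cur = [0]
        for j, y in enumerate(b, start=1):
            cur.append(prev[j - 1] + 1 if x == y else max(prev[j], cur[j - 1]))
        prev = cur
    return prev[-1]


def rouge_l_f1(candidate: str, reference: str) -> float:
    """LCS-based F1 between a candidate and a reference, using whitespace tokens."""
    cand, ref = candidate.split(), reference.split()
    lcs = lcs_length(cand, ref)
    if lcs == 0:
        return 0.0
    precision, recall = lcs / len(cand), lcs / len(ref)
    return 2 * precision * recall / (precision + recall)
```

Because the LCS only requires tokens to appear in the same order, not contiguously, the metric rewards summaries that preserve the reference's wording and ordering without demanding exact n-gram matches.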

A lower OVL-3 score indicates that the new model tends to produce abstractive rather than extractive summaries. The LCM produces fewer repetitions than the LLMs and, more importantly, its repetition rate is closer to that of the ground-truth summaries.

According to the CoLA classifier score, the summaries generated by the LCM are generally less fluent.

However, even the human-written ground-truth summaries score lower than the LLMs on this metric.

A similar phenomenon is observed for source attribution (SH-4) and semantic coverage (SH-5).

This may be because model-based metrics are biased towards LLM-generated text.

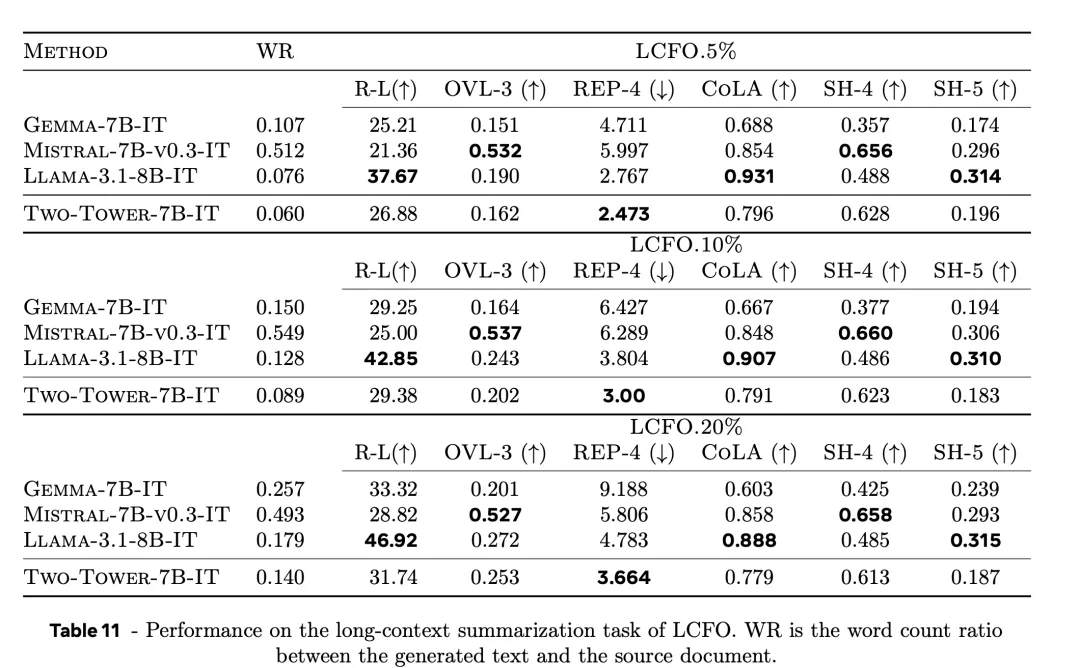

Table 11 shows the results for long-document summarization (LCFO.5%, LCFO.10%, and LCFO.20%).

In pre-training and fine-tuning data, LCM sees only a limited number of long documents.

However, it has performed well in this task.

Under the 5% and 10% conditions, the LCM outperforms Mistral-7B-v0.3-IT and Gemma-7B-IT on the Rouge-L metric, and it comes close to Gemma-7B-IT under the 20% condition.

It was also observed that LCM achieved a high SH-5 score in all conditions, that is, the summary could be attributed to the source.

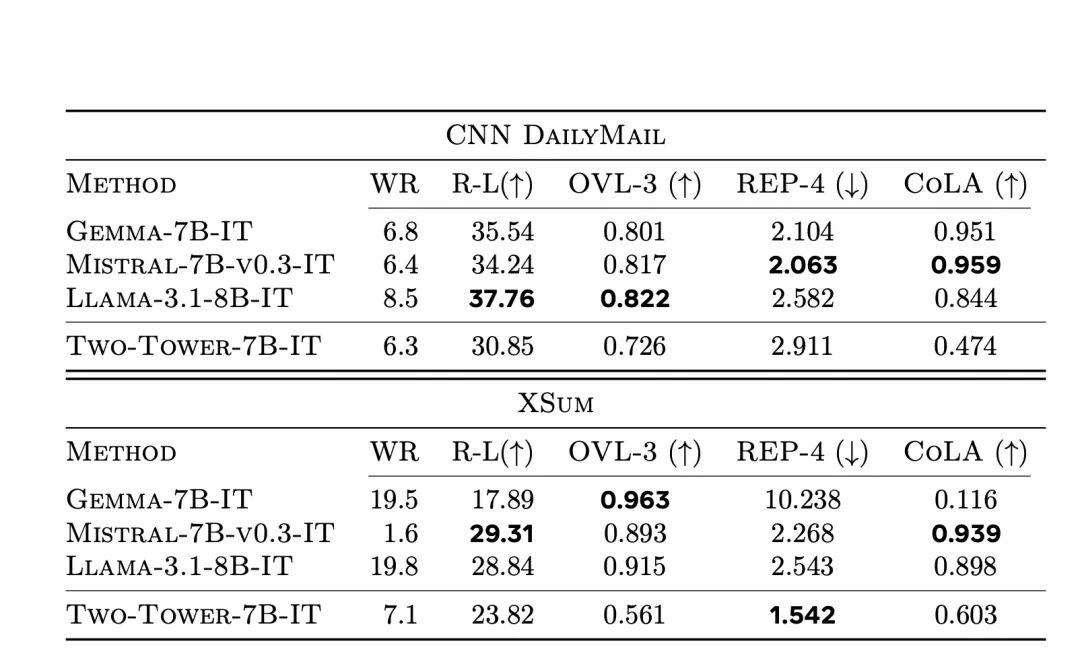

Summary expansion is the task of creating a longer text from a given summary. The goal is not to recreate the factual information of the original document, but to evaluate the model's ability to expand the input text in a meaningful and fluent way.

Considering that a short, concise document has summary-like properties (it is a standalone document that largely abstracts away from details), summary expansion can be described as generating a much longer document that preserves the essential elements of the short document and the logical structure connecting them.

Since this is a more free-form generation task, coherence requirements also need to be taken into account (for example, the detailed information contained in one generated sentence should not contradict information contained in another).

The summary expansion task described here takes summaries from CNN DailyMail and XSum as input and generates a long document.

Table 12 shows the summary expansion results for CNN DailyMail and XSum.

In the table, the best results are shown in bold.

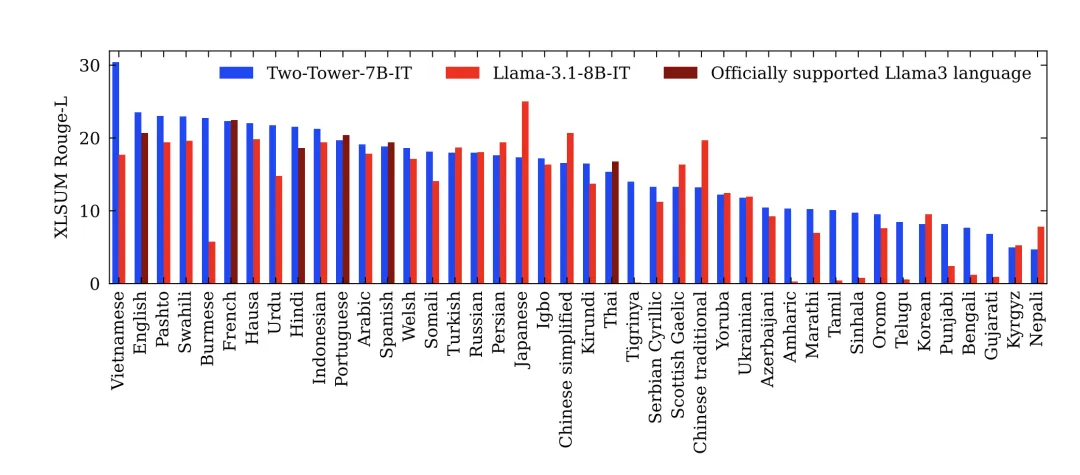

The generalization ability of the new model was tested using the XLSum corpus.

The XLSum corpus is a large-scale multilingual benchmark for abstractive news summarization covering 45 languages.

The performance of LCM was compared to that of Llama-3.1-8B-IT, which supports eight languages: English, German, French, Italian, Portuguese, Hindi, Spanish, and Thai.

The authors report Rouge-L scores for 42 languages in Figure 16. Three languages that SONAR does not currently support are excluded: Pidgin, Latin-alphabet Serbian, and Cyrillic Uzbek.

In English, the LCM substantially outperforms Llama-3.1-8B-IT.

LCM generalizes well to many other languages, especially low-resource languages like Southern Pashto, Burmese, Hausa, or Welsh, all of which have Rouge-L scores greater than 20.

Other low-resource languages on which the LCM performs well include Somali, Igbo, and Kirundi.

Finally, the LCM obtains a Rouge-L score of 30.4 on Vietnamese.

Together, these results highlight LCM's impressive zero-shot generalization performance for languages it has never seen before.

In addition, the paper covers explicit planning, methodology, related work, and the model's limitations.

The authors describe the models and results discussed in the paper as a step toward increasing scientific diversity and a move away from current best practice in large-scale language modeling.

They also acknowledge that there is still a long way to go to match the performance of today's strongest LLMs.
