
#News ·2025-01-07

Danqi Chen's team is back with another cost-cutting method

Cut the training data by a third, and the large model's performance does not drop at all.

They introduce metadata to speed up large-model pre-training without adding any extra computational overhead.

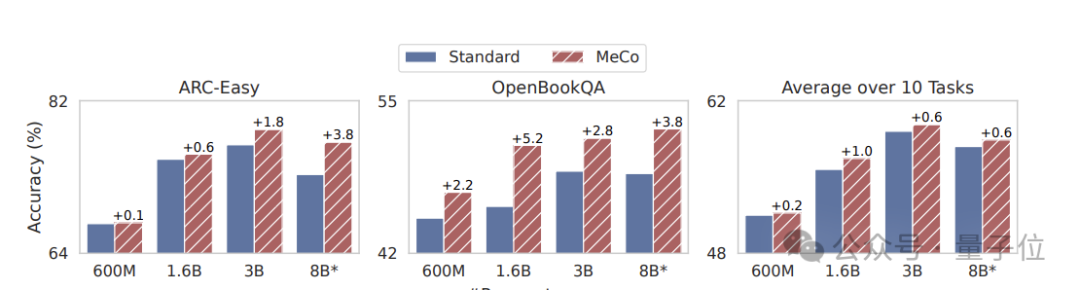

The gains hold across model sizes (600M to 8B) and training data sources.

While metadata has been discussed a lot before, first author Tianyu Gao says they are the first to show how it affects downstream performance and how to apply it in practice so that the model remains generally useful at inference time.

Let's see how they do it.

Language model pre-training corpora vary enormously in style, domain, and quality. This diversity is critical for developing general model capabilities, but efficiently learning and deploying the right behavior for each of these heterogeneous data sources is extremely challenging.

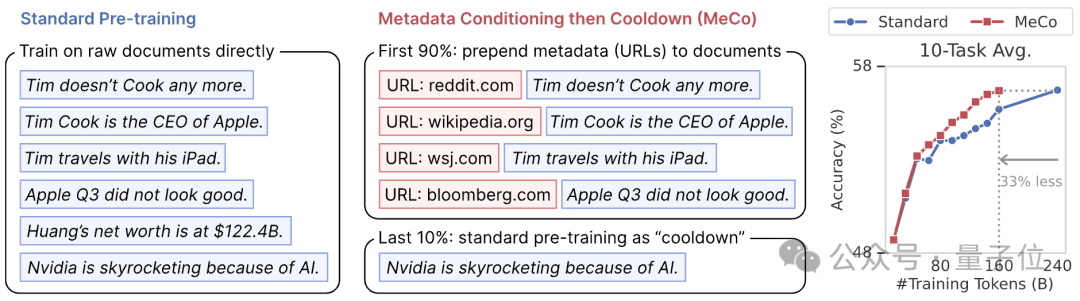

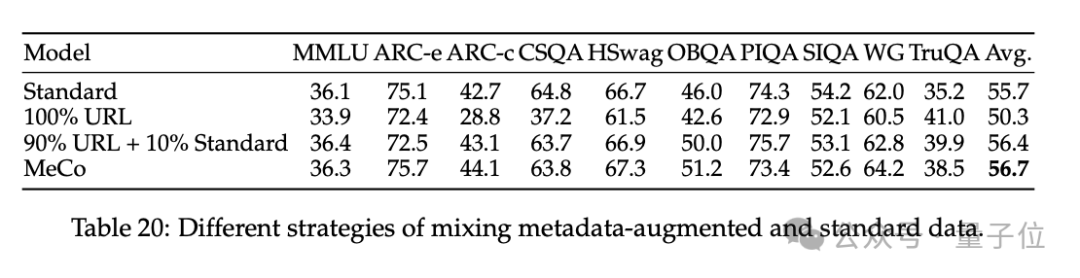

Against this backdrop, they propose a new pre-training method called Metadata Conditioning then Cooldown (MeCo).

Training has two stages.

The metadata-conditioning stage (the first 90% of training) trains on documents with metadata prepended, such as the absolute domain name c of the document's URL, for example "URL: en.wikipedia.org [document]".

For example, if the document's URL is https://en.wikipedia.org/wiki/Bill_Gates, then the absolute domain name c is en.wikipedia.org. This URL information is readily available in many pre-training corpora, most of which come from CommonCrawl (an open repository of web crawl data).
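As a minimal sketch of how the domain name could be pulled out of a document URL, the snippet below uses Python's standard `urllib.parse`. The helper name `url_to_domain` is hypothetical, and the article does not say how the authors actually extract the domain, so treat this only as one plausible way to do it.

```python
from urllib.parse import urlparse

def url_to_domain(url):
    """Return the lowercased host of a URL, without port or path."""
    # `hostname` strips any port and lowercases the host; it is None
    # when the string has no network location, so fall back to "".
    return urlparse(url).hostname or ""

print(url_to_domain("https://en.wikipedia.org/wiki/Bill_Gates"))
# prints: en.wikipedia.org
```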

When other types of metadata are used, "URL" in the template is replaced with the corresponding metadata name.

They compute the cross-entropy loss only on document tokens, not on the template or metadata tokens, because preliminary experiments found that training on those tokens slightly hurts downstream performance.
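The template and the loss masking described above can be illustrated with a small sketch. Everything here is an assumption made for illustration: `toy_tokenize` is a whitespace splitter standing in for a real tokenizer, and `build_example` and `masked_mean` are hypothetical helpers, not the authors' code.

```python
def toy_tokenize(text):
    # Stand-in for a real tokenizer (assumption): split on whitespace.
    return text.split()

def build_example(metadata, document, name="URL"):
    """Prepend '<name>: <metadata>' to a document and build a loss mask."""
    prefix_tokens = toy_tokenize(f"{name}: {metadata}")
    doc_tokens = toy_tokenize(document)
    tokens = prefix_tokens + doc_tokens
    # 0 = template/metadata token (excluded from the loss), 1 = document token.
    loss_mask = [0] * len(prefix_tokens) + [1] * len(doc_tokens)
    return tokens, loss_mask

def masked_mean(per_token_losses, loss_mask):
    """Average per-token losses over document tokens only."""
    kept = [loss for loss, keep in zip(per_token_losses, loss_mask) if keep]
    return sum(kept) / len(kept)

tokens, mask = build_example("en.wikipedia.org", "Bill Gates is an American businessman.")
print(tokens[:2], mask)
```

In a real training loop the same effect is usually obtained by setting the labels of the prefix tokens to the loss function's ignore value, so that only document tokens contribute gradients.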

The last 10% of training steps form the cooldown stage, which trains on standard data without metadata. It inherits its state from the metadata-conditioning stage: the learning rate, model parameters, and optimizer state are initialized from the last checkpoint of the previous stage, and the learning rate continues to decay according to the schedule. The team also applies two further techniques:

1) Disable cross-document attention, which both speeds up training (25% faster for the 1.6B model) and improves downstream performance.

2) When packing multiple documents into a sequence, make sure that each sequence starts with a new document rather than in the middle of one. This may discard some data when documents are packed into fixed-length sequences, but it turns out to be good for downstream performance.
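The two techniques above can be sketched in plain Python. This is an illustration under stated assumptions, not the authors' implementation: `pack_documents` and `document_causal_mask` are hypothetical names, the greedy packing strategy is just one simple way to guarantee that every sequence starts at a document boundary, and real training code would build the mask as a tensor or use a variable-length attention kernel.

```python
def pack_documents(docs, seq_len):
    """Greedily pack token lists into fixed-length sequences.

    Every sequence starts with a fresh document; the part of a document
    that does not fit in the current sequence is discarded.
    """
    sequences = []                 # list of (tokens, doc_ids) pairs
    tokens, doc_ids = [], []
    for doc_id, doc in enumerate(docs):
        room = seq_len - len(tokens)
        piece = doc[:room]         # overflow beyond `room` is dropped
        tokens += piece
        doc_ids += [doc_id] * len(piece)
        if len(tokens) == seq_len: # sequence is full: emit and start fresh
            sequences.append((tokens, doc_ids))
            tokens, doc_ids = [], []
    return sequences               # a trailing partial sequence is dropped

def document_causal_mask(doc_ids):
    """mask[i][j] is True when position i may attend to position j."""
    n = len(doc_ids)
    # Causal (j <= i) and restricted to tokens of the same document.
    return [[j <= i and doc_ids[i] == doc_ids[j] for j in range(n)]
            for i in range(n)]

docs = [[1, 2, 3], [4, 5, 6, 7], [8, 9], [10, 11, 12, 13, 14]]
for toks, ids in pack_documents(docs, seq_len=5):
    print(toks, ids)
```

With these toy inputs, tokens 6 and 7 of the second document and tokens 13 and 14 of the last one are discarded, which is the data loss the article mentions.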

The experiments use the Llama Transformer architecture and the Llama-3 tokenizer. They cover four model sizes (600M, 1.6B, 3B, and 8B), each with its corresponding optimization settings.

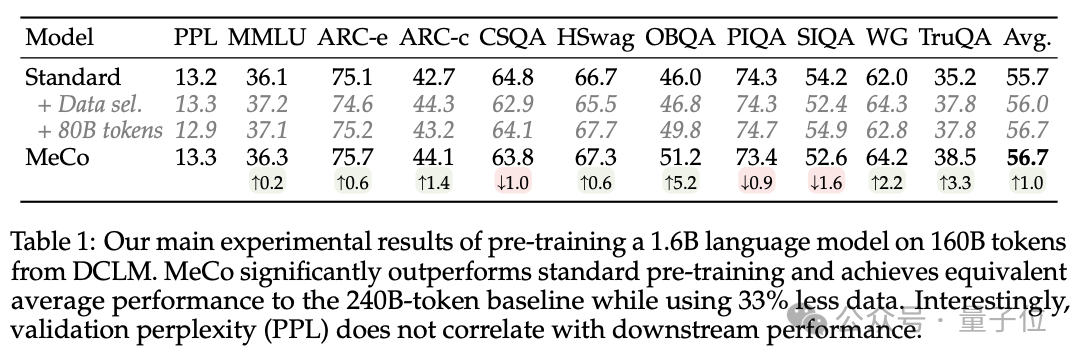

The results show that MeCo performs significantly better than standard pre-training, matching the average performance of the 240B-token baseline while using 33% less data.
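To make these numbers concrete, here is a rough back-of-the-envelope sketch that combines the 240B-token baseline, the "cut by a third" saving, and the 90%/10% split between metadata conditioning and cooldown described earlier. The helper names are hypothetical, and treating 33% as exactly one third is an assumption made to keep the arithmetic in integers.

```python
def stage_budgets(total, cooldown_percent=10):
    """Split a token (or step) budget into conditioning and cooldown parts."""
    cooldown = total * cooldown_percent // 100
    return total - cooldown, cooldown

def uses_metadata(step, total_steps, cooldown_percent=10):
    """True while a zero-based step is still in the metadata-conditioning stage."""
    conditioning_steps, _ = stage_budgets(total_steps, cooldown_percent)
    return step < conditioning_steps

baseline_tokens = 240_000_000_000
meco_tokens = baseline_tokens * 2 // 3        # one third less data (assumption)
conditioning, cooldown = stage_budgets(meco_tokens)
print(meco_tokens, conditioning, cooldown)
# prints: 160000000000 144000000000 16000000000
```

Under these assumptions, a data loader would prepend metadata whenever `uses_metadata(step, total_steps)` is true and feed plain documents afterwards.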

In summary, the work makes three main contributions.

1. MeCo significantly accelerates pre-training.

Experiments show that MeCo lets a 1.6B model reach the same average downstream performance as a standard pre-trained model with 33% less training data. MeCo shows consistent gains across model sizes (600M, 1.6B, 3B, and 8B) and data sources (C4, RefinedWeb, and DCLM).

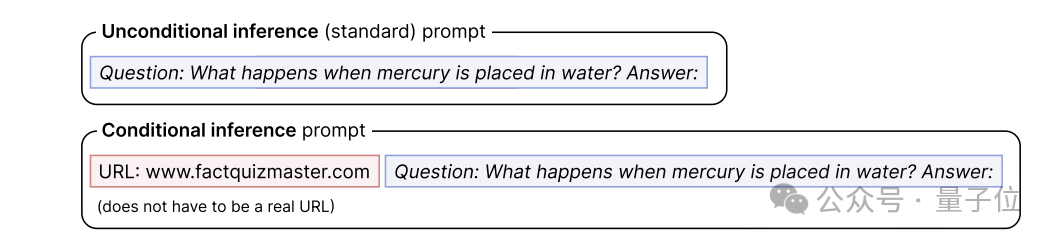

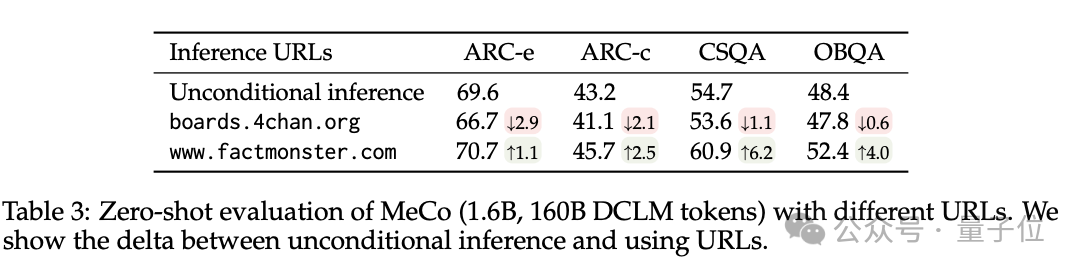

2. MeCo opens up a new way to steer language models.

For example, conditioning on factquizmaster.com (not a real URL) improves performance on commonsense tasks (for example, an absolute 6% improvement on zero-shot commonsense question answering), while conditioning on wikipedia.org makes toxic generations several times less likely than standard unconditional inference.
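Conditional inference of this kind amounts to putting a metadata line in front of the prompt. The sketch below shows the idea; `conditional_prompt` is a hypothetical helper, and the blank-line separator between the metadata line and the prompt is an assumption, since the article only shows the template as "URL: en.wikipedia.org [document]".

```python
def conditional_prompt(prompt, metadata=None, name="URL", separator="\n\n"):
    """Return the prompt, optionally prefixed with a '<name>: <metadata>' line."""
    if metadata is None:
        return prompt              # standard unconditional inference
    return f"{name}: {metadata}{separator}{prompt}"

question = "Which planet is closest to the sun?"
print(conditional_prompt(question))                         # unconditional
print(conditional_prompt(question, "factquizmaster.com"))   # conditioned
```

Because the cooldown stage trains without metadata, the unconditional call remains a valid way to use the model; the metadata prefix is an optional steering signal.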

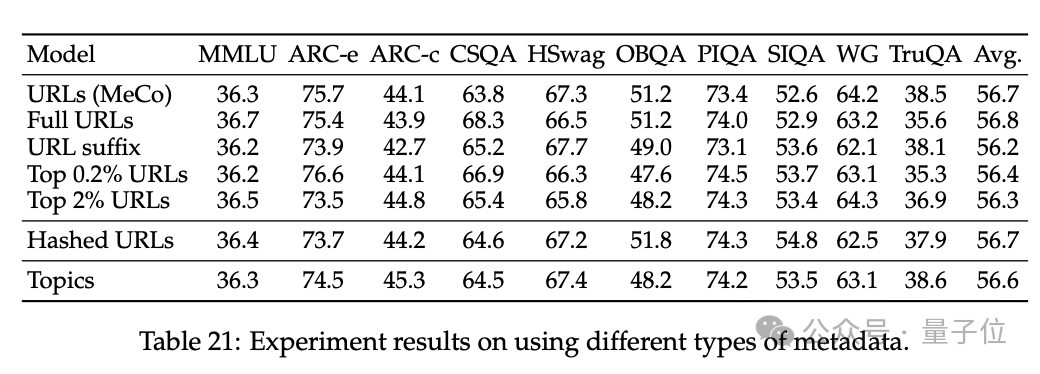

3. The paper ablates MeCo's design choices and shows that MeCo is compatible with different types of metadata.

Ablations using hashed URLs and model-generated topics show that the main role of metadata is to group documents by source. As a result, MeCo can effectively incorporate different types of metadata, including more fine-grained options, even when URLs are unavailable.
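The hashed-URL idea can be illustrated with a short sketch: a hash removes the readable meaning of a domain but still maps documents from the same source to the same identifier. The article does not describe the authors' hashing scheme, so the use of SHA-256, the truncation length, and the name `hash_domain` are all assumptions for illustration.

```python
import hashlib

def hash_domain(domain, length=8):
    """Map a domain to a short, opaque, deterministic identifier."""
    digest = hashlib.sha256(domain.encode("utf-8")).hexdigest()
    return digest[:length]

same_a = hash_domain("en.wikipedia.org")
same_b = hash_domain("en.wikipedia.org")
other = hash_domain("example.com")
# Same source, same identifier; the readable domain name is gone.
print(same_a == same_b, same_a != other)
```

The identifier could then be placed in the metadata slot of the training template in place of the readable domain.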

The authors are Tianyu Gao, Alexander Wettig, Luxi He, YiHe Dong, Sadhika Malladi, and Danqi Chen, from the Princeton NLP Group (part of Princeton Language and Intelligence, PLI).

Tianyu Gao did his undergraduate studies at Tsinghua University, where he won the 2019 Tsinghua Special Award. He is currently a fifth-year PhD student at Princeton, is expected to graduate this year, and continues to pursue research in academia. His work lies at the intersection of natural language processing and machine learning, with a particular focus on large language models (LLMs), including building applications and improving LLM capabilities and efficiency.

Luxi He is a second-year PhD student in computer science at Princeton whose research focuses on understanding language models and improving their alignment and safety, and who holds a master's degree from Harvard University.

YiHe Dong currently works on machine learning research and engineering at Google, focusing on representation learning for structured data, automated feature engineering, and multimodal representation learning, and holds an undergraduate degree from Princeton.
