
#News ·2025-01-07

SparseViT was developed primarily by Lu Jiancheng's team at Sichuan University, in collaboration with Professor Pan Zhiwen's team at the University of Macau.

With the rapid development of image editing tools and image generation technology, manipulating images has become very easy. However, manipulation inevitably leaves artifacts (operation traces) in the image, and these can be divided into semantic and non-semantic features. Therefore, almost all current image manipulation localization (IML) models pair a semantic segmentation backbone network with elaborate hand-crafted non-semantic feature extraction, a design that seriously limits their ability to extract artifacts in unseen scenarios.

Non-semantic information tends to stay consistent between local and global regions, while semantic information is more independent across different areas of the image. SparseViT therefore replaces the global self-attention mechanism of the traditional Vision Transformer (ViT) with a sparse self-attention architecture. This sparse computation pattern lets the model adaptively extract non-semantic features for image manipulation localization.

The research team systematically validated SparseViT's advantages by reproducing multiple existing state-of-the-art methods and comparing against them under a unified evaluation protocol. The framework also adopts a modular design: users can flexibly customize or extend the core modules of the model, and a learnable multi-scale supervision mechanism improves the model's generalization across a variety of scenarios.

In addition, SparseViT significantly reduces computation (up to 80% fewer FLOPs), balances parameter efficiency against performance, and performs strongly on multiple benchmark datasets. SparseViT is expected to offer a new perspective for theoretical and applied research on image manipulation localization and to lay a foundation for subsequent work.

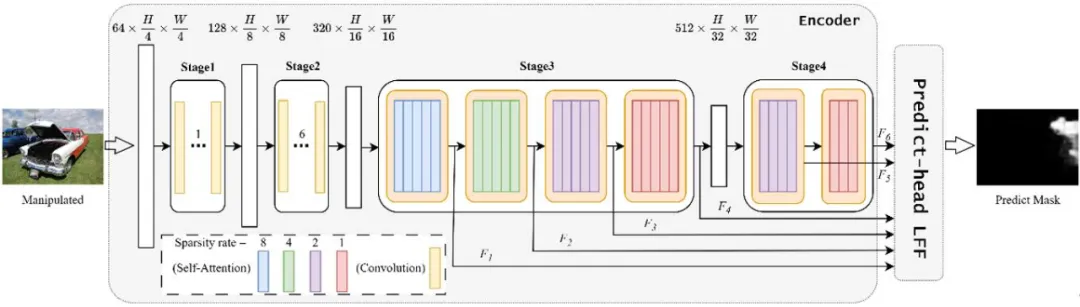

An overview of SparseViT's overall architecture is shown in Figure 1.

Figure 1: Overall architecture of SparseViT.

The main components include:

1. Sparse Self-Attention for efficient feature capture

Sparse Self-Attention is a core component of the SparseViT framework. It focuses on efficiently capturing the key features of tampered images, that is, the non-semantic features, while reducing computational complexity. Because traditional self-attention computes attention between every pair of patch tokens, it causes the model to overfit to semantic information, so the local inconsistencies that tampering introduces into non-semantic information are ignored.

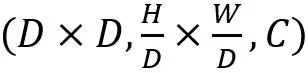

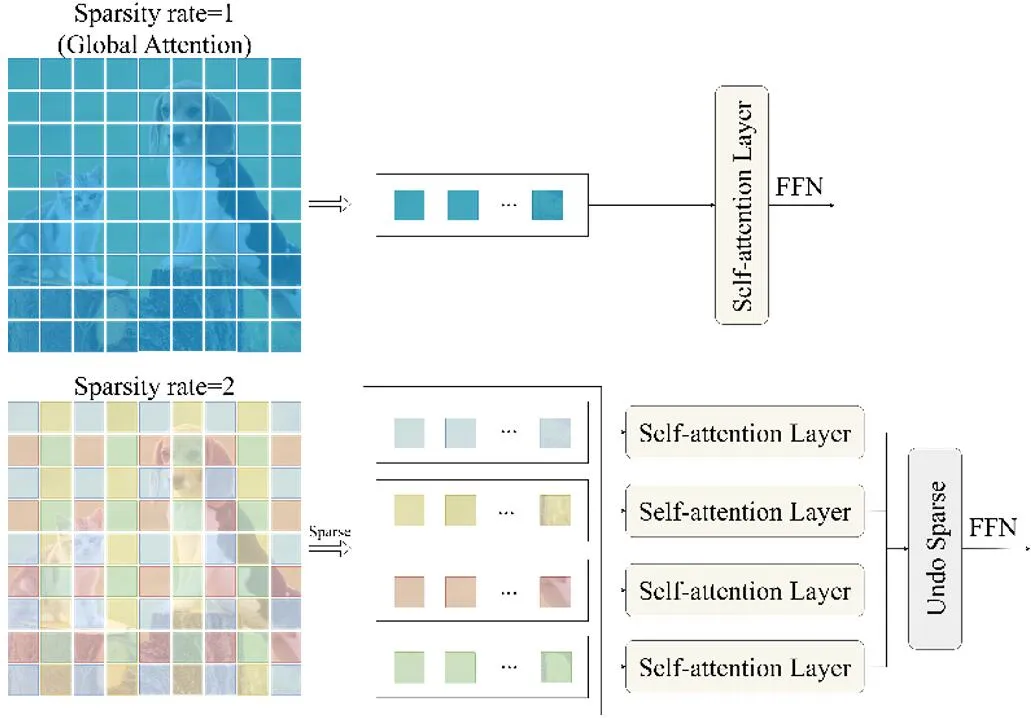

For this reason, Sparse Self-Attention uses a self-attention mechanism based on sparse coding, as shown in Figure 2. A sparsity constraint is applied to the input feature map: instead of applying attention over the entire feature map, the map is divided into non-overlapping tensor blocks of equal size, and self-attention is computed separately within each block.

Figure 2: Sparse self-attention.

By partitioning feature maps into regions, this mechanism makes the model focus on extracting non-semantic features and improves its ability to capture image tampering artifacts. Sparse Self-Attention reduces FLOPs by about 80% compared with traditional self-attention while still capturing features effectively, especially in complex scenes. The modular implementation also allows users to adjust the sparsity strategy to suit different tasks.
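The block-wise attention described above can be illustrated with a minimal NumPy sketch. It is not taken from the SparseViT repository: the function names, the single-head projection matrices `wq`, `wk`, `wv`, and the split factor `s` are all hypothetical, and the sketch assumes the blocks are contiguous windows of the feature map, which this article does not specify. The helper `attention_pairs` only counts token-to-token score entries; it shows how partitioning shrinks the attention term and makes no attempt to reproduce the whole-model FLOPs figure quoted above.

```python
import numpy as np


def softmax(x, axis=-1):
    # Numerically stable softmax.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def partition_blocks(x, s):
    """Split an (H, W, C) map into s*s contiguous, non-overlapping blocks.

    Returns an array of shape (s*s, (H//s)*(W//s), C).
    Assumption: H and W are divisible by s.
    """
    h, w, c = x.shape
    assert h % s == 0 and w % s == 0
    bh, bw = h // s, w // s
    x = x.reshape(s, bh, s, bw, c).transpose(0, 2, 1, 3, 4)
    return x.reshape(s * s, bh * bw, c)


def merge_blocks(blocks, s, h, w):
    """Inverse of partition_blocks: rebuild the (H, W, C) map."""
    c = blocks.shape[-1]
    bh, bw = h // s, w // s
    x = blocks.reshape(s, s, bh, bw, c).transpose(0, 2, 1, 3, 4)
    return x.reshape(h, w, c)


def block_self_attention(x, s, wq, wk, wv):
    """Single-head self-attention computed independently inside each block."""
    h, w, _ = x.shape
    blocks = partition_blocks(x, s)                 # (B, T, C)
    q, k, v = blocks @ wq, blocks @ wk, blocks @ wv
    scores = q @ k.transpose(0, 2, 1) / np.sqrt(q.shape[-1])  # (B, T, T)
    out = softmax(scores) @ v                       # tokens only see their own block
    return merge_blocks(out, s, h, w)


def attention_pairs(h, w, s=1):
    """Number of token-to-token scores when the map is split into s*s blocks."""
    tokens_per_block = (h // s) * (w // s)
    return s * s * tokens_per_block ** 2
```

With `s = 1` the function reduces to ordinary global self-attention. Under these assumptions, splitting an 8×8 map with `s = 2` cuts the number of attention scores from `attention_pairs(8, 8, 1)` to `attention_pairs(8, 8, 2)`, a factor of `s * s`.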

2. Learnable Feature Fusion (LFF) for multi-scale feature fusion

Learnable Feature Fusion (LFF) is an important module in SparseViT. It aims to improve the model's generalization and its adaptability to complex scenarios through a multi-scale feature fusion mechanism. Unlike traditional fusion methods that follow fixed rules, the LFF module introduces learnable parameters to dynamically adjust the importance of features at different scales, which makes the model more sensitive to image tampering artifacts.

By learning specific fusion weights for the multi-scale features output by the sparse self-attention module, LFF prioritizes the low-frequency features associated with tampering while retaining the high-frequency features that carry stronger semantic information. The module is designed around the diverse requirements of IML tasks: it can handle weak, fine-grained non-semantic artifacts and also adapt to large-scale global feature extraction. Introducing LFF significantly improves SparseViT's performance on cross-scenario and diverse datasets and reduces the interference of irrelevant features, providing a flexible way to further optimize IML models.
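As a rough illustration of the difference between fixed-rule and learnable fusion, the sketch below fuses feature maps from several scales with one scalar weight per scale. It is an assumption-laden example rather than the LFF module itself: the names `upsample_nearest` and `fuse`, the softmax normalization of the weights, the nearest-neighbour upsampling, and the square feature maps are all choices made here for illustration. In a real model the `logits` would be trainable parameters updated by backpropagation; here they are plain arrays.

```python
import numpy as np


def upsample_nearest(x, factor):
    """Nearest-neighbour upsampling of an (H, W, C) map by an integer factor."""
    return x.repeat(factor, axis=0).repeat(factor, axis=1)


def fuse(features, logits):
    """Weighted sum of multi-scale feature maps.

    features: list of (H_i, W_i, C) arrays, finest scale first; each H_i must
              divide H_0 (hypothetical layout, square maps assumed).
    logits:   one scalar per scale; stands in for learnable parameters.
    Returns the fused (H_0, W_0, C) map and the normalized weights.
    """
    logits = np.asarray(logits, dtype=float)
    weights = np.exp(logits - logits.max())
    weights = weights / weights.sum()          # softmax over scales
    target_h = features[0].shape[0]
    fused = np.zeros_like(features[0], dtype=float)
    for feat, wgt in zip(features, weights):
        fused += wgt * upsample_nearest(feat, target_h // feat.shape[0])
    return fused, weights


# Three scales with constant values 1, 2 and 3 for easy inspection.
features = [
    np.full((8, 8, 2), 1.0),
    np.full((4, 4, 2), 2.0),
    np.full((2, 2, 2), 3.0),
]
uniform, w_uniform = fuse(features, [0.0, 0.0, 0.0])   # equal weights: plain averaging
skewed, w_skewed = fuse(features, [10.0, 0.0, 0.0])    # weights favour the finest scale
```

Equal logits reproduce a fixed averaging rule, so fixed-rule fusion is a special case of this scheme; once the logits are allowed to change, the contribution of each scale can shift toward whichever scale is most useful.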

In short, SparseViT has the following four contributions:

1. We reveal that semantic features of tampered images require continuous local interaction to build global semantics, while non-semantic features can achieve global interaction through sparse coding due to their local independence.

2. Based on the different behaviors of semantic and non-semantic features, we propose a sparse self-attention mechanism that adaptively extracts non-semantic features from images.

3. Because traditional multi-scale fusion methods are not learnable, we introduce a learnable multi-scale supervision mechanism.

4. Our proposed SparseViT maintains parameter efficiency without relying on hand-crafted feature extractors, and achieves state-of-the-art (SoTA) performance and excellent generalization on four common datasets.

By exploiting the difference between semantic and non-semantic features, SparseViT enables the model to adaptively extract the more critical non-semantic features for image manipulation localization, offering a new research direction for accurately locating tampered regions. The code, documentation, and tutorials are now open source on GitHub (https://github.com/scu-zjz/SparseViT). The code has a comprehensive update plan and the repository will be maintained long term; researchers around the world are welcome to use it and propose improvements.
