
#News ·2025-01-06

Network for AI: AI training demands ever more computing power, moving from 10,000-card clusters to 100,000-card clusters and then to million-card clusters. How can dispersed, long-distance computing resources be integrated to achieve a leap in computing power at scale?

AI for Network: industry is currently facing the question of "how to make products more intelligent". How can AI be used to change the network, make it smarter, safer and more reliable, and achieve "autonomous driving" for the network?

At the MEET 2025 Intelligent Future Conference, Wang Hui, President of Huawei's NCE Data Communication domain, shared his views.

To convey Wang Hui's thinking in full, Qubit has edited the speech without changing its original meaning, in the hope of offering readers more inspiration.

The MEET 2025 Intelligent Future Conference is an industry summit hosted by Qubit and attended by more than 20 industry representatives. It drew more than 1,000 in-person attendees and more than 3.2 million online viewers, and received extensive attention and coverage from mainstream media.

(The following is the full text of Wang Hui's speech)

Good morning, everyone! The theme of today's conference is intelligent transformation across thousands of trades, bringing benefits to all industries. Many guests have explained, from the perspective of products and vendors, how AI is changing those trades and industries.

Next, from the perspective of the industrial field, I will talk about the intrinsic connection between large AI models and the communication networks behind them in the B2B (to-business) sector.

This topic is very important. The more than 100 industry customers I have met around the world all face a common problem: how to equip themselves in the era of large AI models to make their products and industries smarter.

We see that large AI models, represented by OpenAI, have made great progress all the way. In the industrial field, however, large models have run into many practical difficulties when deployed in vertical industries, and it is fair to say that deployment is hard. So today I want to share some of our thinking about AI from the perspective of vertical industries and the network industry.

When it comes to networks, most of us are familiar with the 5G and Wi-Fi we use every day. But what is the relationship between the network and AI?

It can be summed up clearly in two phrases: one is Network for AI, the other is AI for Network.

Network for AI means using the network to accelerate AI training and inference. AI for Network means using AI to make the network more intelligent and reliable.

For Network for AI, the industry is pursuing many different routes.

NVIDIA is going all in on NVLink, AMD is mainly promoting its Infinity Fabric, and Huawei is pushing HCCS. Among open standards, there are UALink, Ultra Ethernet and others.

What is the logic behind so many routes?

Within a cluster node, the scale-up mode pursues maximum communication efficiency. During AI training, tight coupling of compute and network greatly improves computing performance. Most vendors follow relatively closed technical routes here.

Outside the cluster node, the scale-out mode pursues the interconnection of computing resources, and the network technology is gradually converging on a unified Ethernet route.

The common challenge in large-scale cluster training today is how to train stably over long periods. As Kai-Fu Lee mentioned in the opening session, OpenAI has also run into training interruptions.

According to statistics, large model training today is interrupted on average once in less than two days. Besides GPU failures, optical module and link failures account for a considerable share of these interruptions.
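A back-of-the-envelope sketch shows why stability gets harder as clusters grow. It assumes that every card, together with its optics and links, fails independently at the same constant rate, so the cluster-wide interruption rate grows linearly with the number of cards. The baseline pairing of "one interruption every 2 days" with a 10,000-card cluster is a hypothetical choice for illustration; the speech does not state which cluster size the statistic refers to.

```python
def mean_days_between_interruptions(baseline_days: float,
                                    baseline_cards: int,
                                    cards: int) -> float:
    """Scale the mean time between interruptions with cluster size.

    Assumes independent failures at a constant per-card rate, so the
    interval shrinks in inverse proportion to the number of cards.
    """
    return baseline_days * baseline_cards / cards


# Hypothetical baseline: one interruption every 2 days on 10,000 cards.
for cards in (10_000, 100_000, 1_000_000):
    days = mean_days_between_interruptions(2.0, 10_000, cards)
    print(f"{cards:>9,} cards: about {days * 24:.1f} hours between interruptions")
```

Under these assumptions the interval falls from 48 hours to under 5 hours at 100,000 cards and to roughly half an hour at a million cards, which is why removing network-side failure causes matters more at each step up in scale.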

From 10,000-card clusters to 100,000-card clusters, and even million-card clusters next year, there are two key challenges:

The key to the first problem is using advanced algorithms to keep load balanced across the whole network, thereby accelerating AI training.

Here we have reached the best level in the industry: the NLSB algorithm can improve overall training efficiency by more than 10%.

At the same time, fault prediction algorithms can find and eliminate potential faults before training starts, keeping the whole run uninterrupted and greatly improving training efficiency.
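To illustrate why network-wide load balancing matters for training traffic, the sketch below compares two ways of placing a small number of large flows onto parallel links: independent per-flow pseudo-random selection (similar in effect to hash-based path selection) and a global placement that always picks the least-loaded link. This is not the NLSB algorithm, whose details are not given in the speech; the function names, flow sizes, and link count are all hypothetical.

```python
import random
from collections import Counter


def hash_placement(flows, num_links, seed=0):
    """Pick a link per flow pseudo-randomly, with no view of other flows."""
    rng = random.Random(seed)
    load = Counter()
    for size in flows:
        load[rng.randrange(num_links)] += size
    return [load[i] for i in range(num_links)]


def least_loaded_placement(flows, num_links):
    """Place each flow (largest first) on the currently least-loaded link."""
    load = [0] * num_links
    for size in sorted(flows, reverse=True):
        idx = min(range(num_links), key=load.__getitem__)
        load[idx] += size
    return load


flows = [100] * 16  # 16 equal-sized large flows, hypothetical units
links = 8
hashed = hash_placement(flows, links)
balanced = least_loaded_placement(flows, links)
print("per-flow random placement, max link load:", max(hashed))
print("global placement, max link load:         ", max(balanced))
```

Because a synchronized training step finishes only when its slowest flow finishes, the maximum link load is the number to watch. The global placement spreads the 16 flows evenly at two per link, while independent per-flow choices can never do better than that and usually do worse.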

The key to the second problem is heterogeneous computing across long distances, which will be an important trend in the next phase and is also a hard problem for the industry.

We enable collaborative training across remote data centers through algorithmic coordination between the AI networks inside data centers and the networks between them, together with the industry's first lossless network spanning thousands of kilometers.
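A short calculation suggests why distance makes lossless transport hard. Lossless networks generally rely on the sender reacting to feedback from the far end before buffers overflow, so the amount of data already in flight during one round trip has to be absorbed somewhere. The sketch assumes light travels through fiber at about 200,000 km/s (roughly two thirds of its speed in vacuum) and uses a hypothetical 400 Gb/s link; neither figure comes from the speech.

```python
FIBER_KM_PER_S = 200_000  # approximate speed of light in optical fiber


def round_trip_ms(distance_km: float) -> float:
    """Round-trip propagation delay over fiber, in milliseconds."""
    return 2 * distance_km / FIBER_KM_PER_S * 1000


def in_flight_bytes(distance_km: float, link_gbps: float) -> float:
    """Bandwidth-delay product: bytes sent before any feedback can return."""
    rtt_s = round_trip_ms(distance_km) / 1000
    return link_gbps * 1e9 * rtt_s / 8


for km in (0.1, 100, 1000):
    print(f"{km:>7} km: RTT {round_trip_ms(km):.4f} ms, "
          f"{in_flight_bytes(km, 400) / 1e6:.2f} MB in flight at 400 Gb/s")
```

Inside a data center the in-flight data is tens of kilobytes; at 1,000 km it is hundreds of megabytes per link, which is why long-distance training needs coordination between the intra-DC and inter-DC networks rather than simply longer cables.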

This is not limited to networking: every vertical industry is thinking about how to put AI into practice. From something as small as a coffee-making robot to something as large as an inspection robot in the steel industry, all face similar problems.

Our exploration of AI in networking began as early as 2017, centered on the "network autonomous driving" solution.

Over the years, we've found some common challenges when applying AI and large models across all verticals.

The first is the problem of real-time decision making. Unlike consumer (to-C) applications, many industrial decisions have to be made within milliseconds.

If the system's data cannot be obtained in real time, real-time decision making is out of the question.

The second problem is the rigor of reasoning. When generating videos and pictures, a mediocre result does not cause serious consequences.

But in the industrial world, a small network configuration error can lead to a major accident. A core network carries the online activity of hundreds of millions of people; once it fails, the impact is enormous, so the reasoning must be rigorous.

The third is the problem of scenario generalization. A large communication model cannot serve only a single task; it should be able to adapt to the needs of different customers and different scenarios.

These three challenges are common problems encountered by AI in the vertical field. How to solve these problems?

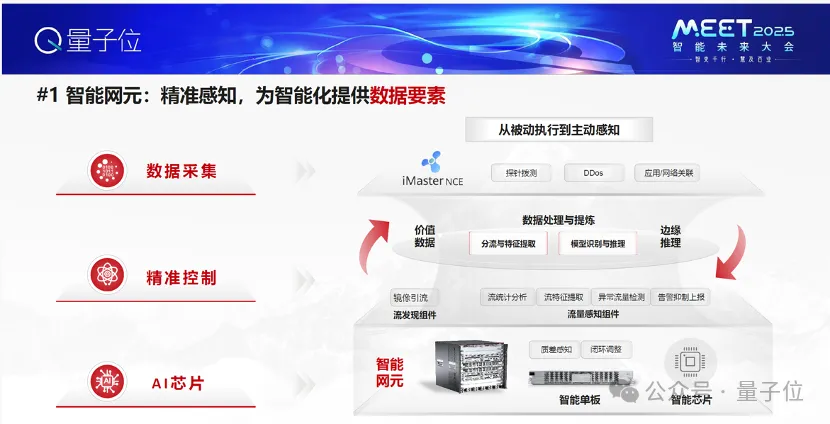

These challenges require a systematic solution: an AI-native intelligent network system made up of three parts, which we call "one network, one map, one brain". These correspond to the intelligent network element, the network digital map, and the large communication model.

The first thing to solve is the intelligence of the hardware itself.

Network data is mainly generated by network elements (NEs), that is, the devices. If network devices only generate logs and alarms, the data is usually not enough to reconstruct a digital twin of the network. Therefore, traditional NEs need to be upgraded to intelligent NEs.

On the one hand, intelligent NEs need to provide data. Data is the core element, and the goal here is not volume but using the least amount of data to support accurate decision making.

On the other hand, there is the problem of precise control. Take a recently released chassis for driverless cars as an analogy: it can control the vehicle precisely and brake early on rainy days.

This is because the system senses the friction between the tires and the road. When the friction changes, it can anticipate the change and act quickly. Where a human needs more than 500 milliseconds to react, the intelligent system needs only 200 milliseconds.

Then there is the question of the digital twin of the network itself. Just as Google Maps does for the physical world, we created the industry's first network digital map to build a digital twin of the network world.

It enables accurate navigation, simulation, and multi-dimensional visualization of the digital world, and provides accurate context information for the large communication model.
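As a minimal sketch of what "navigation" and "simulation" can mean on a digital map, the example below models a network as a weighted graph, finds the lowest-cost path between two nodes, and then answers a what-if question by removing a link from a copy of the graph. It is an illustration only, not a description of the actual network digital map; the topology, node names, and link costs are hypothetical.

```python
import heapq


def shortest_path(graph, src, dst):
    """Dijkstra over {node: {neighbor: cost}}; returns (cost, path) or None."""
    queue = [(0, src, [src])]
    seen = set()
    while queue:
        cost, node, path = heapq.heappop(queue)
        if node == dst:
            return cost, path
        if node in seen:
            continue
        seen.add(node)
        for nxt, weight in graph.get(node, {}).items():
            if nxt not in seen:
                heapq.heappush(queue, (cost + weight, nxt, path + [nxt]))
    return None  # destination unreachable


def without_link(graph, a, b):
    """Return a copy of the graph with the a-b link removed (what-if simulation)."""
    return {n: {m: w for m, w in nbrs.items() if {n, m} != {a, b}}
            for n, nbrs in graph.items()}


# Hypothetical four-node topology with symmetric link costs.
topology = {
    "A": {"B": 1, "C": 4},
    "B": {"A": 1, "D": 1},
    "C": {"A": 4, "D": 1},
    "D": {"B": 1, "C": 1},
}

print(shortest_path(topology, "A", "D"))                           # navigation
print(shortest_path(without_link(topology, "B", "D"), "A", "D"))  # simulation
```

Running the what-if on a copy, rather than on the live network, is the point of a digital twin: the impact of a failure or a configuration change can be checked before anything is touched in production.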

Finally, there is the large communication model, which serves as the intelligent brain. Large models will certainly change every industry and move each one toward "autonomous driving", but actual deployment in the industrial field is difficult at this stage.

How to solve this problem? I think there are three key points:

First, what affects the current system most is actually not the large model but domain-specific models.

For example, a model that handles security policy or a model that handles path optimization can greatly improve the accuracy of task execution. These models determine the upper limit of system capability.

Second, strong reasoning ability, as seen in o1, determines the generalization ability and decision accuracy of the system.

Finally, there is high-quality domain knowledge governance. Our large communication model integrates a 50-billion-scale communication corpus and the experience of more than 10,000 network operation and maintenance experts, making it an expert in the field of communication.

In summary, moving the network toward L4 "autonomous driving" requires combining intelligent network elements at the bottom layer, digital modeling of the system, domain knowledge, API governance, and the strong reasoning ability of large models.

Thank you!
