
# News · 2025-01-06

I graduated a year ago and have been working on large-model inference ever since. At work, the LLM inference framework we compare against most often is vLLM. Recently I took the time to study vLLM's architecture in detail, hoping to gain a more thorough and comprehensive understanding of it.

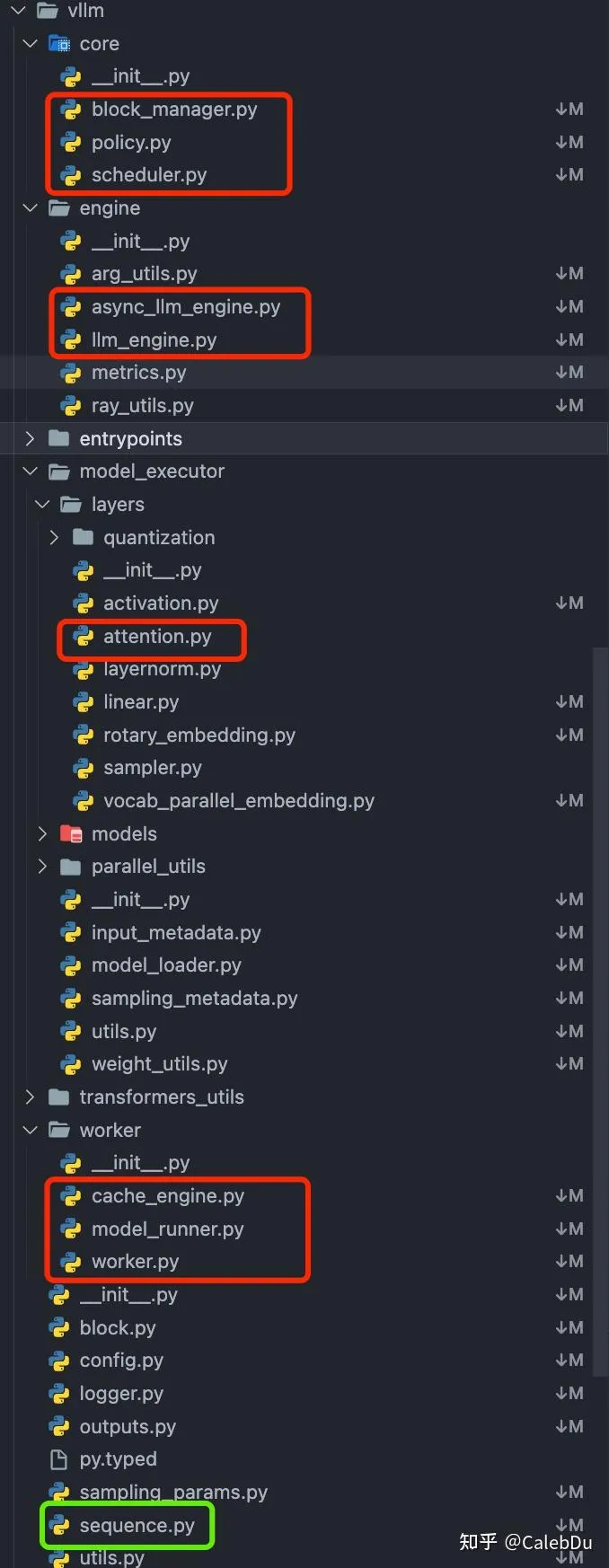

vLLM python project directory

The files highlighted in the figure are the core components of vLLM's Python-side project directory. Based on the dependencies between layers, they can be roughly decomposed into the following structure:

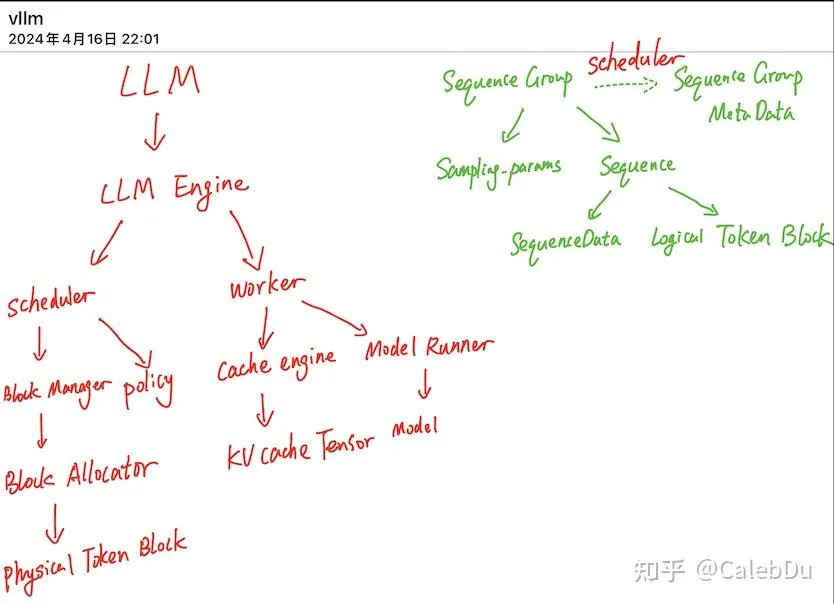

The LLM class is the top-level, user-facing application and oversees the entire inference process. The LLM Engine class contains the Scheduler class and the Worker class. The Scheduler schedules requests so that vLLM's cache block resources are sufficient for the existing requests to finish executing; otherwise, existing requests are preempted. The Scheduler controls the Block Manager, which manages the physical token blocks. The Worker is responsible for loading and executing the model; for distributed inference, multiple Workers are created, each executing a portion of the complete model. The Cache Engine manages the complete KV cache tensors on the CPU/GPU and moves the block data of the requests scheduled by the Scheduler. The Model Runner holds and actually executes the Model instance, and is responsible for data pre-processing, post-processing, and sampling.
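To make the ownership chain in the paragraph above concrete, here is a minimal sketch of the layering, assuming toy classes that only mirror the component names from the figure. None of these bodies are vLLM's real implementations: the Scheduler takes every waiting request without block accounting, and the ModelRunner just echoes its input instead of running a model.

```python
from dataclasses import dataclass, field


@dataclass
class BlockManager:
    """Tracks how many physical token blocks (KV cache blocks) are free."""
    num_free_blocks: int


@dataclass
class Scheduler:
    """Owns the Block Manager and the queue of pending requests."""
    block_manager: BlockManager
    waiting: list = field(default_factory=list)

    def schedule(self) -> list:
        # Toy policy: take every waiting request; no block accounting here.
        batch, self.waiting = self.waiting, []
        return batch


class CacheEngine:
    """Would own the KV cache tensors on CPU/GPU; here just a block count."""

    def __init__(self, num_blocks: int) -> None:
        self.num_blocks = num_blocks


class ModelRunner:
    """Would run the model, pre/post-processing and sampling; here it echoes."""

    def execute(self, prompts: list) -> list:
        return [p + " <generated>" for p in prompts]


class Worker:
    """Owns the cache engine and the model runner."""

    def __init__(self, num_blocks: int) -> None:
        self.cache_engine = CacheEngine(num_blocks)
        self.model_runner = ModelRunner()


class LLMEngine:
    """Holds the Scheduler and the Worker; one step = schedule + execute."""

    def __init__(self, num_blocks: int) -> None:
        self.scheduler = Scheduler(BlockManager(num_blocks))
        self.worker = Worker(num_blocks)

    def step(self) -> list:
        return self.worker.model_runner.execute(self.scheduler.schedule())


class LLM:
    """Top-level entry point that drives the whole inference loop."""

    def __init__(self, num_blocks: int = 16) -> None:
        self.engine = LLMEngine(num_blocks)

    def generate(self, prompts: list) -> list:
        self.engine.scheduler.waiting.extend(prompts)
        return self.engine.step()


print(LLM().generate(["Hello"]))  # ['Hello <generated>']
```

The point of the sketch is only the direction of dependencies: the user talks to LLM, LLM talks to the engine, and the engine splits the work between "decide what runs" (Scheduler) and "run it" (Worker).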

vLLM architecture overview

[Update] The vLLM code has since been refactored and differs in places from the codebase I studied earlier.

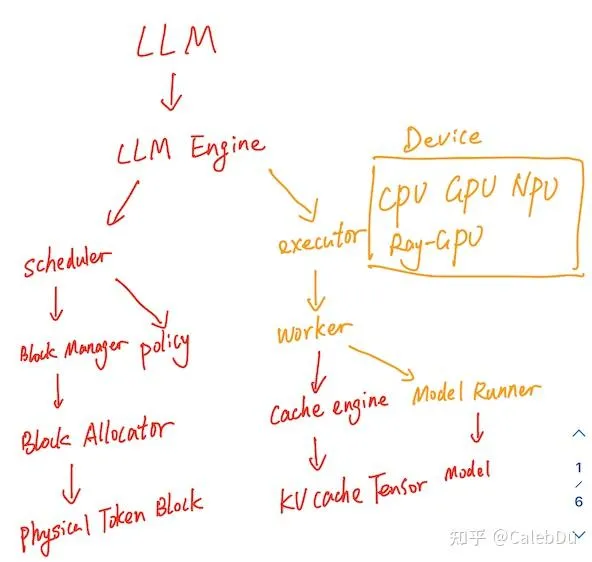

commit: cc74b vllm architecture

The overall architecture has not changed much from the previous version. A new Executor abstraction sits on top of Worker and manages the different back-end devices, such as CPU, GPU, NPU, and distributed GPU back-ends. Device-specific Executor, Worker, and Model Runner subclasses are derived for each kind of device.
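The Executor layer described above is essentially a polymorphic front for one or more Workers. The sketch below shows that shape under stated assumptions: the class names, the `make_executor()` factory, and returning rank 0's result in the distributed case are all hypothetical choices for illustration, not vLLM's actual classes or signatures.

```python
from abc import ABC, abstractmethod


class Worker:
    """Hypothetical worker bound to one device (and one rank)."""

    def __init__(self, device: str, rank: int = 0) -> None:
        self.device = device
        self.rank = rank

    def execute_model(self, batch: list) -> list:
        # Tag each item so the caller can see which worker handled it.
        return [f"{x}@{self.device}:{self.rank}" for x in batch]


class ExecutorBase(ABC):
    """Engine-facing interface that hides the devices underneath."""

    @abstractmethod
    def execute_model(self, batch: list) -> list: ...


class GPUExecutor(ExecutorBase):
    def __init__(self) -> None:
        self.worker = Worker("cuda")

    def execute_model(self, batch: list) -> list:
        return self.worker.execute_model(batch)


class CPUExecutor(ExecutorBase):
    def __init__(self) -> None:
        self.worker = Worker("cpu")

    def execute_model(self, batch: list) -> list:
        return self.worker.execute_model(batch)


class DistributedGPUExecutor(ExecutorBase):
    """Each worker would hold part of the model; all of them run every step."""

    def __init__(self, world_size: int) -> None:
        self.workers = [Worker("cuda", rank) for rank in range(world_size)]

    def execute_model(self, batch: list) -> list:
        outputs = [w.execute_model(batch) for w in self.workers]
        return outputs[0]  # assumption: the driver (rank 0) result goes back


def make_executor(device: str, world_size: int = 1) -> ExecutorBase:
    """Hypothetical factory choosing an executor for the target back-end."""
    if device == "cpu":
        return CPUExecutor()
    if world_size > 1:
        return DistributedGPUExecutor(world_size)
    return GPUExecutor()


print(make_executor("cuda", world_size=2).execute_model(["a"]))  # ['a@cuda:0']
```

Because the engine only sees `ExecutorBase`, adding a new back-end means adding new Executor/Worker/Model Runner subclasses rather than touching the scheduling code.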

The new codebase also adds support for speculative decoding, FP8, LoRA, a CPU PagedAttention kernel, multiple attention back-ends, and mixed prefill/decode inference.

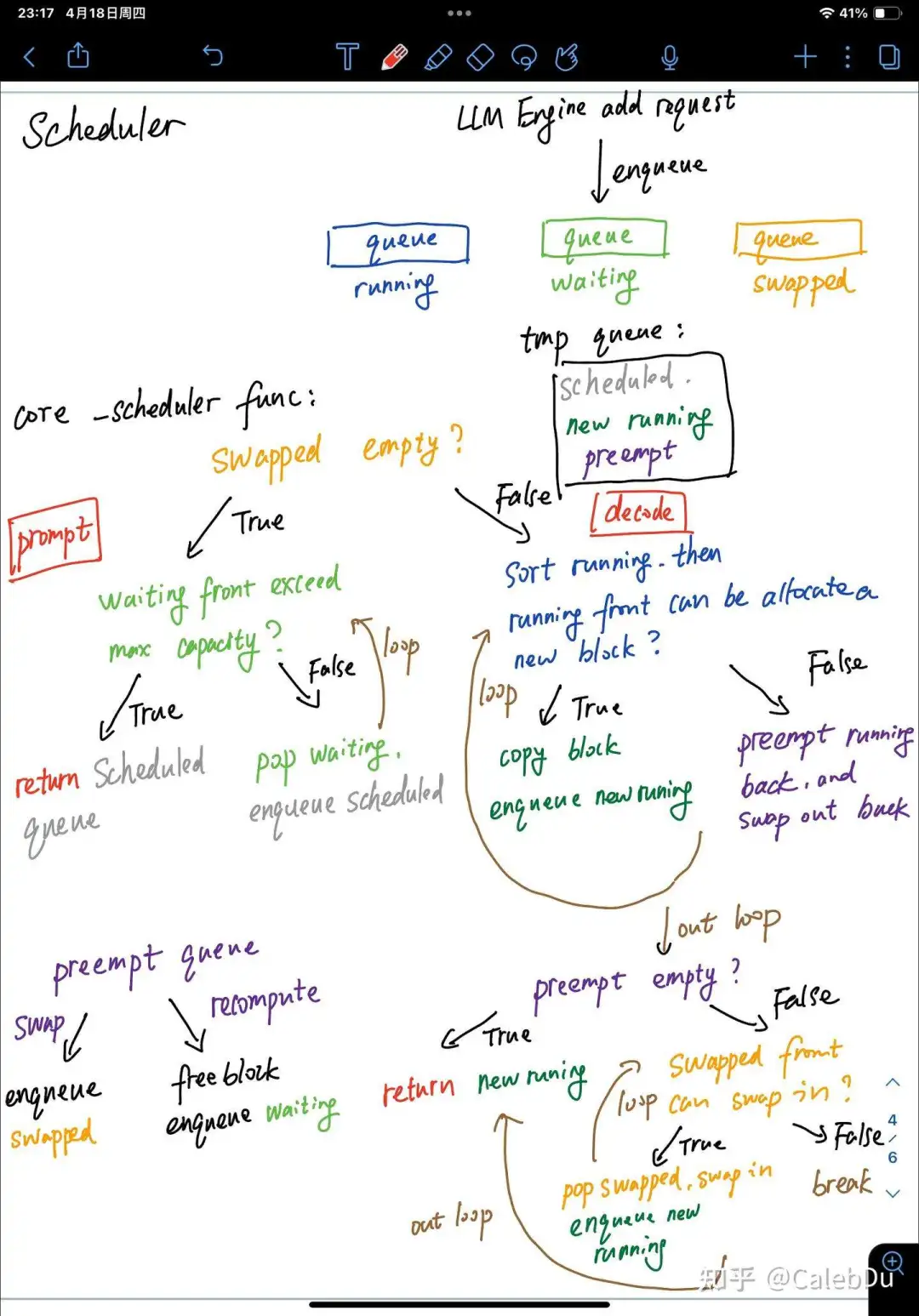

Scheduling happens at the beginning of each LLM Engine inference step: the Scheduler prepares the SequenceGroups that will be run in this step. The Scheduler manages three queues: running, waiting, and swapped. Elements in the waiting queue are either entering the prompt phase for the first time or were preempted in recompute mode. Elements in the swapped queue were preempted out of running and are in the decode phase. Whenever the LLM Engine adds a new request, the Scheduler puts the SequenceGroup created for that request into the waiting queue.
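The three queues can be pictured as plain double-ended queues. The sketch below assumes a toy `SequenceGroup` dataclass with only the fields needed here; the method name `add_seq_group` is chosen to resemble vLLM's, but the body is a simplification and not the library's code.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class SequenceGroup:
    """Toy stand-in: the real class carries sequences, sampling params, etc."""
    request_id: str
    num_seqs: int = 1        # >1 when one request samples several outputs
    is_prefill: bool = True  # True until the prompt has been processed


class Scheduler:
    def __init__(self) -> None:
        self.waiting: deque = deque()  # new requests, or recompute-preempted ones
        self.running: deque = deque()  # groups currently being decoded
        self.swapped: deque = deque()  # swap-preempted groups (decode phase)

    def add_seq_group(self, seq_group: SequenceGroup) -> None:
        # A new request always enters at the tail of the waiting queue.
        self.waiting.append(seq_group)


sched = Scheduler()
sched.add_seq_group(SequenceGroup("req-0"))
sched.add_seq_group(SequenceGroup("req-1", num_seqs=4))
print([g.request_id for g in sched.waiting])  # ['req-0', 'req-1']
```

A deque fits because the Scheduler pops from the head (oldest first under FCFS) and, when preempting, from the tail (lowest priority).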

In every scheduling round, the Scheduler ensures that the step's data is either entirely in the prompt (prefill) phase or entirely in the auto-regressive (decode) phase. The core function is _schedule(), which uses three temporary queues: scheduled, new running, and preempt.

Scheduler core scheduling

Prompt phase (waiting): the Scheduler first checks whether the swapped queue is empty. If it is, there are no earlier unfinished requests, so it dequeues elements from the waiting queue into the scheduled queue until either the block allocation limit or vLLM's maximum number of sequences would be exceeded. _schedule() then returns the scheduled queue.
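The prompt-phase loop above can be sketched as follows. Assumptions: each group holds a single prompt, a prompt needs `ceil(prompt_len / BLOCK_SIZE)` blocks, and `BLOCK_SIZE`, `free_blocks`, and `max_num_seqs` are illustrative values rather than vLLM defaults. The function name `schedule_prompts` is hypothetical.

```python
import math
from collections import deque
from dataclasses import dataclass

BLOCK_SIZE = 16  # tokens per KV cache block (illustrative)


@dataclass
class SequenceGroup:
    request_id: str
    prompt_len: int


def schedule_prompts(waiting: deque, swapped: deque,
                     free_blocks: int, max_num_seqs: int):
    """Move groups from the head of `waiting` into `scheduled` until the
    block budget or the sequence limit would be exceeded."""
    scheduled = []
    if swapped:
        # Earlier requests are unfinished: decode scheduling runs instead.
        return scheduled, free_blocks
    while waiting:
        needed = math.ceil(waiting[0].prompt_len / BLOCK_SIZE)
        if needed > free_blocks or len(scheduled) >= max_num_seqs:
            break
        free_blocks -= needed
        scheduled.append(waiting.popleft())
    return scheduled, free_blocks


waiting = deque([SequenceGroup("a", 20), SequenceGroup("b", 40),
                 SequenceGroup("c", 5)])
scheduled, left = schedule_prompts(waiting, deque(), free_blocks=4,
                                   max_num_seqs=8)
print([g.request_id for g in scheduled], left)  # ['a'] 2
```

Note that the loop stops at the first group that does not fit rather than skipping ahead to "c", which preserves FCFS order.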

Decode phase:

If the swapped queue is not empty, earlier requests are still unfinished, so decode-phase scheduling is performed. First, the requests in the running queue are sorted by the FCFS policy. Every sequence in a decode-phase SequenceGroup may produce a different output token because of sampling, so each sequence needs its own new slot to store its new token. If the free blocks cannot cover all sequences, the lowest-priority SequenceGroup in running is preempted and added to the preempt queue (in recompute mode it rejoins the waiting queue and its blocks are freed; in swap mode it joins the swapped queue and its blocks are swapped out). This repeats until blocks can be allocated to every sequence of the group at the head of the running queue, which is then added to new running. Next, the swapped queue is sorted by the FCFS policy. If preempt is not empty, block resources are insufficient, and _schedule() directly returns new running and the swap-out block indices. If preempt is empty, there may be spare blocks, so the Scheduler repeatedly tries to swap in the group at the head of the swapped queue, adding it to new running on success; when a swap-in fails, it returns new running and the swap-in block indices directly.
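The decode-phase logic above, including preemption from the tail of running and the swap-in pass, can be sketched as below. This is a simplified model under loud assumptions: every sequence is assumed to need one fresh block per step (real vLLM only needs a new block when the last one is full), block indices are replaced by plain counters, and `DecodeScheduler` / `schedule_decode` are hypothetical names.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class SequenceGroup:
    request_id: str
    num_seqs: int    # each sequence needs its own new slot every step
    num_blocks: int  # blocks currently held by the group


class DecodeScheduler:
    def __init__(self, free_blocks: int, preempt_mode: str = "swap") -> None:
        self.free_blocks = free_blocks
        self.preempt_mode = preempt_mode  # "swap" or "recompute"
        self.waiting: deque = deque()
        self.running: deque = deque()
        self.swapped: deque = deque()

    def _preempt(self, group: SequenceGroup, preempted: list) -> None:
        self.free_blocks += group.num_blocks  # free or swap out its blocks
        if self.preempt_mode == "recompute":
            group.num_blocks = 0              # KV cache will be recomputed
            self.waiting.appendleft(group)
        else:
            self.swapped.append(group)        # num_blocks kept for swap-in
        preempted.append(group)

    def schedule_decode(self):
        new_running, preempted = deque(), []
        while self.running:  # assumed already sorted FCFS
            group = self.running.popleft()
            while group.num_seqs > self.free_blocks:
                if self.running:  # evict the lowest-priority group (tail)
                    self._preempt(self.running.pop(), preempted)
                else:             # nobody left to evict: preempt this group
                    self._preempt(group, preempted)
                    break
            else:  # enough blocks: one new slot per sequence
                self.free_blocks -= group.num_seqs
                group.num_blocks += group.num_seqs
                new_running.append(group)
        if not preempted:  # spare blocks: swap groups back in, head first
            while self.swapped:
                head = self.swapped[0]
                if head.num_blocks + head.num_seqs > self.free_blocks:
                    break
                self.swapped.popleft()
                self.free_blocks -= head.num_blocks + head.num_seqs
                head.num_blocks += head.num_seqs
                new_running.append(head)
        self.running = new_running
        return new_running, preempted


s = DecodeScheduler(free_blocks=1)
s.running = deque([SequenceGroup("a", 2, 4), SequenceGroup("b", 1, 1),
                   SequenceGroup("c", 1, 1)])
run, pre = s.schedule_decode()
print([g.request_id for g in run], [g.request_id for g in pre])  # ['a'] ['c', 'b']
```

In the usage example, group "a" (two sequences) needs two blocks but only one is free, so "c" is evicted from the tail to make room; afterwards "b" no longer fits and is preempted as well.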

[Scheduler Update] In the codebase at commit cc74b, the Scheduler's default scheduling logic (_schedule_default) is basically unchanged and still matches the description above: all SequenceGroups in one scheduling round are either in the prompt phase or in the decode phase. The monolithic _schedule() function has been refactored, and its running/waiting/swapped scheduling has been split into three fine-grained functions: _schedule_prefills, _schedule_running, and _schedule_swapped.
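How the three helpers combine in the default policy can be sketched as pure control flow. To keep it self-contained, the helpers are passed in as callables; their names follow the article, but the signatures and return shapes used here (a list for prefills and swaps, a `(running, preempted)` pair for running) are assumptions for illustration.

```python
from typing import Callable, List, Tuple


def schedule_default(
    has_swapped: bool,
    schedule_prefills: Callable[[], List[str]],
    schedule_running: Callable[[], Tuple[List[str], List[str]]],
    schedule_swapped: Callable[[], List[str]],
) -> Tuple[str, List[str]]:
    """Return (phase, scheduled groups); a batch is never mixed."""
    if not has_swapped:
        prefills = schedule_prefills()
        if prefills:  # prompt-phase batch only, decodes wait a step
            return "prefill", prefills
    running, preempted = schedule_running()
    # Swap-in is attempted only if nothing had to be preempted.
    swapped_in = [] if preempted else schedule_swapped()
    return "decode", running + swapped_in


print(schedule_default(True, lambda: ["p1"], lambda: (["r1"], []),
                       lambda: ["s1"]))  # ('decode', ['r1', 's1'])
```

Returning early after a successful prefill pass is what keeps every batch single-phase under this policy.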

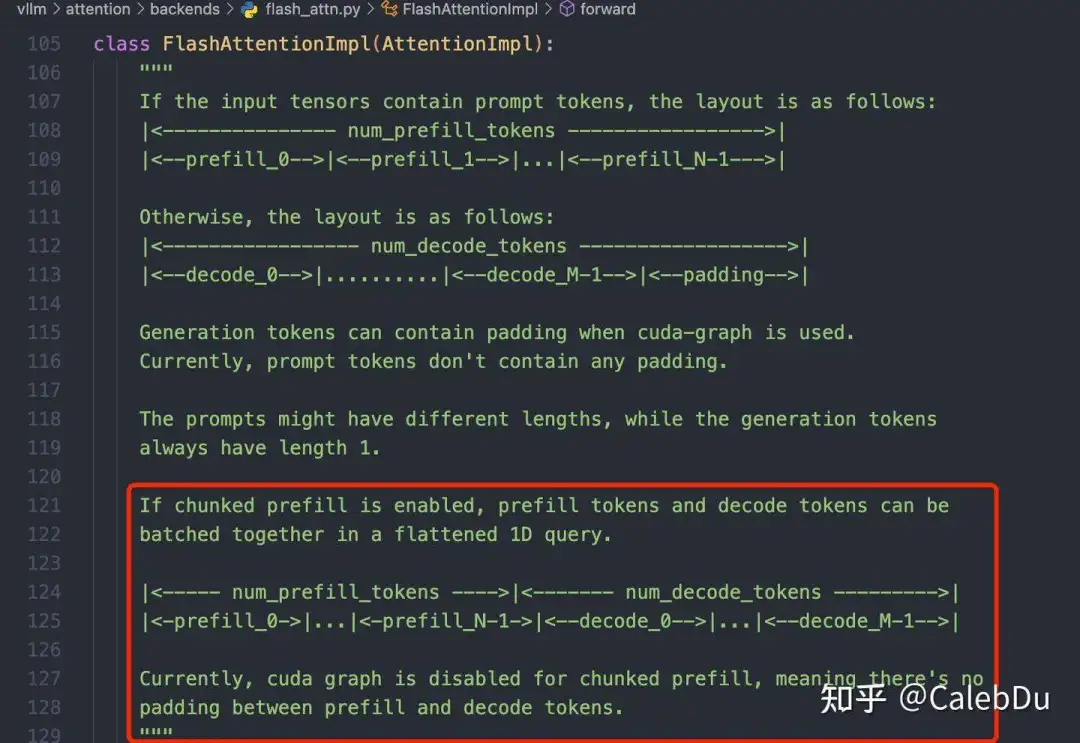

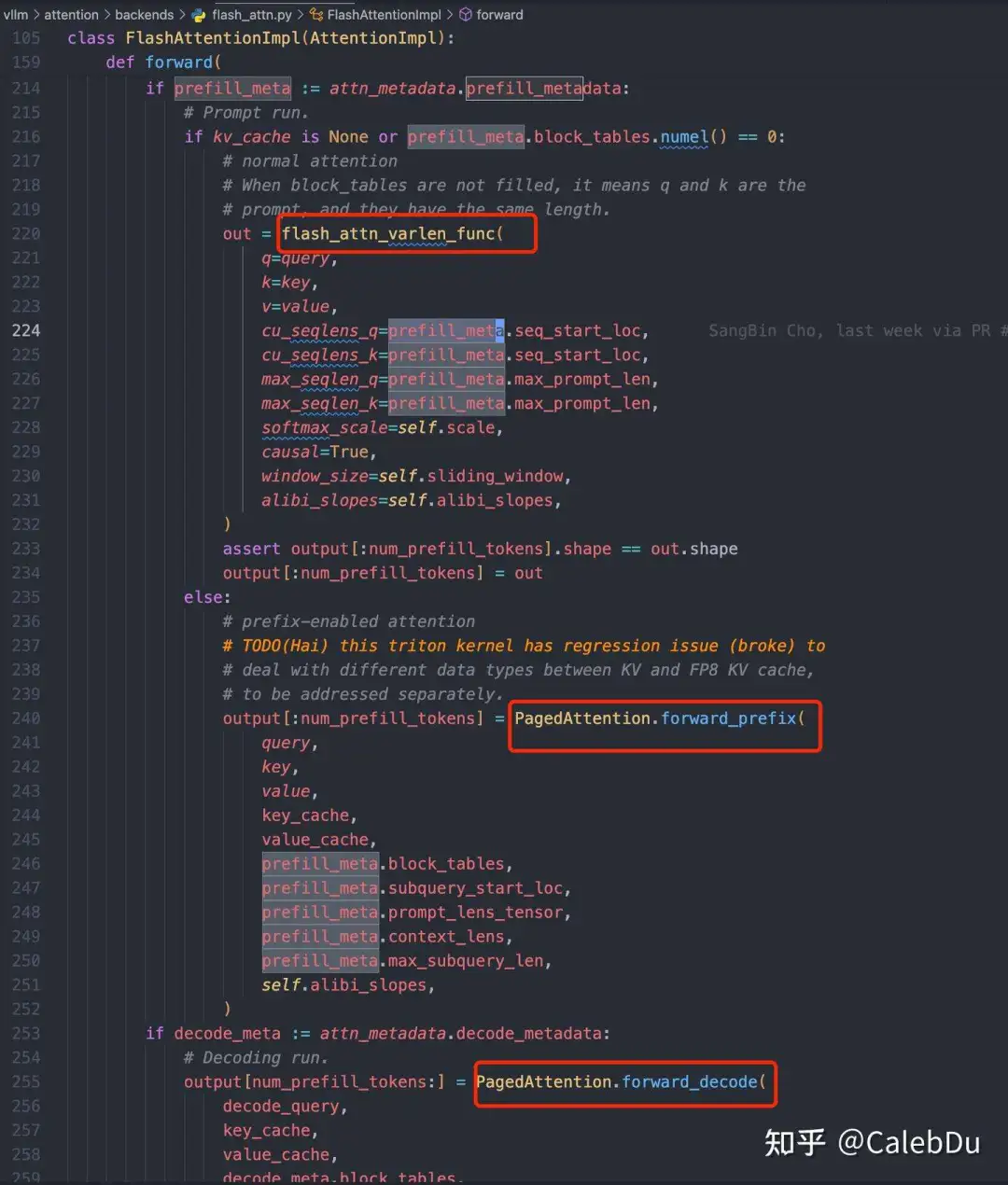

In addition, the Scheduler gains a new scheduling policy, _schedule_chunked_prefill, which allows prompt-phase and decode-phase SequenceGroups to be scheduled in the same step. This lets each load of the matmul weights serve as much computation as possible and increases request parallelism, improving throughput in tokens per second (TPS). The main flow of this policy is as follows. First, _schedule_running is executed to ensure that the high-priority decode-phase SequenceGroups in the running queue have enough blocks to generate a new output token for every sequence; otherwise, lower-priority SequenceGroups in the queue are preempted. Next, _schedule_swapped swaps in the swapped SequenceGroups that fit into the free blocks. Finally, _schedule_prefills schedules SequenceGroups from the head of the waiting queue until the block allocation limit is reached. The successfully scheduled requests from running, swapped, and waiting together form the new running queue. Because this queue now mixes prompt-phase and decode-phase SequenceGroups, each SequenceGroup must be marked with its phase, and the prompt-phase and decode-phase parts are computed separately when performing attention.
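The ordering of this policy (running first, then swapped, then waiting, all into one phase-tagged batch) can be sketched with plain tuples. Assumptions: each entry is `(request_id, blocks_needed)`, preemption is omitted (lower-priority running groups that do not fit are simply left out of the step), and the splitting of long prompts into chunks is not modeled. `schedule_chunked` is a hypothetical name.

```python
from collections import deque


def schedule_chunked(running, swapped: deque, waiting: deque,
                     free_blocks: int):
    """Build one mixed batch of (request_id, phase) pairs."""
    batch = []
    # 1) Decode-phase groups in running go first, in priority order.
    for rid, need in running:
        if need > free_blocks:
            break  # vLLM would preempt these; here they are left out
        free_blocks -= need
        batch.append((rid, "decode"))
    # 2) Swap in groups from the head of swapped while blocks remain.
    while swapped and swapped[0][1] <= free_blocks:
        rid, need = swapped.popleft()
        free_blocks -= need
        batch.append((rid, "decode"))
    # 3) Prefill groups from the head of waiting until blocks run out.
    while waiting and waiting[0][1] <= free_blocks:
        rid, need = waiting.popleft()
        free_blocks -= need
        batch.append((rid, "prefill"))
    return batch, free_blocks


batch, left = schedule_chunked([("r1", 1), ("r2", 1)], deque([("s1", 2)]),
                               deque([("w1", 3), ("w2", 1)]), free_blocks=8)
print(batch)  # [('r1', 'decode'), ('r2', 'decode'), ('s1', 'decode'), ('w1', 'prefill'), ('w2', 'prefill')]
```

The phase tag on each entry is the "mark" mentioned above: without it, the attention layer could not tell which requests still need a full prompt pass.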

The Attention class handles the different Scheduler modes

The attention kernel is computed separately for sequences in different phases
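One way to compute attention separately per phase is to reorder the mixed batch so prefill tokens come first, then slice the flat token range at the prefill/decode boundary. The sketch assumes that ordering convention and a hypothetical `ScheduledSeq` record; the kernels themselves are replaced by counting tokens, so this only illustrates the split, not real attention.

```python
from dataclasses import dataclass


@dataclass
class ScheduledSeq:
    request_id: str
    is_prompt: bool
    num_new_tokens: int  # prompt length for prefill, 1 for decode


def split_by_phase(batch):
    """Reorder prefills before decodes and count each phase's tokens."""
    prefills = [s for s in batch if s.is_prompt]
    decodes = [s for s in batch if not s.is_prompt]
    num_prefill_tokens = sum(s.num_new_tokens for s in prefills)
    num_decode_tokens = sum(s.num_new_tokens for s in decodes)
    return prefills + decodes, num_prefill_tokens, num_decode_tokens


def run_attention(batch):
    _, n_prefill, n_decode = split_by_phase(batch)
    tokens = list(range(n_prefill + n_decode))  # stand-in for query tokens
    prefill_part = tokens[:n_prefill]  # would go to a prefill attention kernel
    decode_part = tokens[n_prefill:]   # would go to a paged decode kernel
    return len(prefill_part), len(decode_part)


batch = [ScheduledSeq("d1", False, 1), ScheduledSeq("p1", True, 5),
         ScheduledSeq("d2", False, 1)]
print(run_attention(batch))  # (5, 2)
```

Keeping one contiguous range per phase means each kernel receives a single slice instead of a scattered set of indices.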

I hadn't pulled the vLLM codebase for just a few weeks, and many parts had already been refactored. Awkward...

Next, I will write up an analysis of storage management and the PagedAttention kernel.
