
#News ·2025-01-06

Just today, a paper from Chinese researchers shocked AI scholars around the world.

Many netizens claimed that the principle behind OpenAI's o1 and o3 models, long an unsolved mystery, had been "discovered" by Chinese researchers!

Note: The authors provide a theoretical analysis of how such models might be approximated; they do not claim to have "cracked" the problem.

In fact, in this 51-page paper, researchers from Fudan University, among others, analyze a roadmap for implementing o1 from the perspective of reinforcement learning.

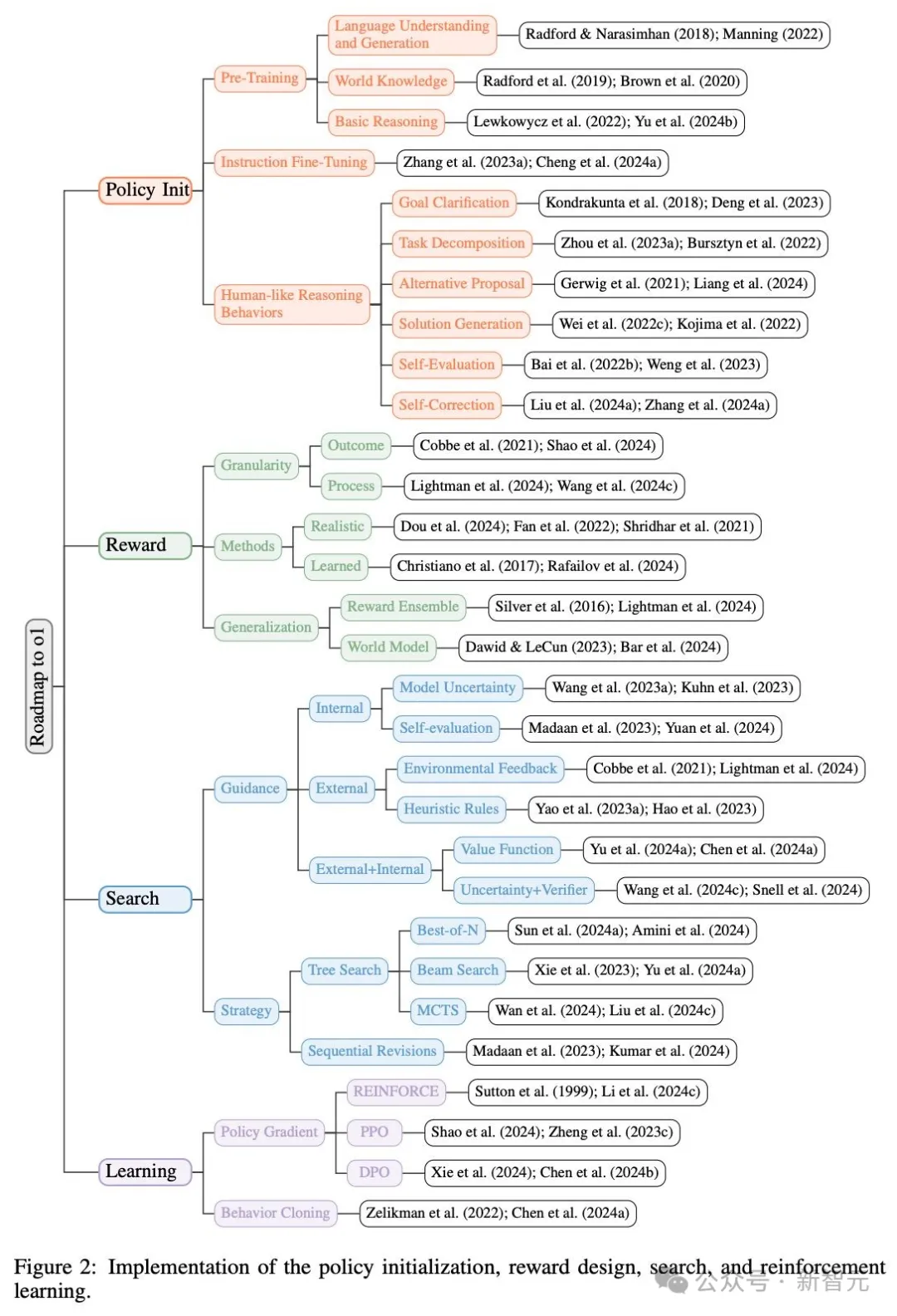

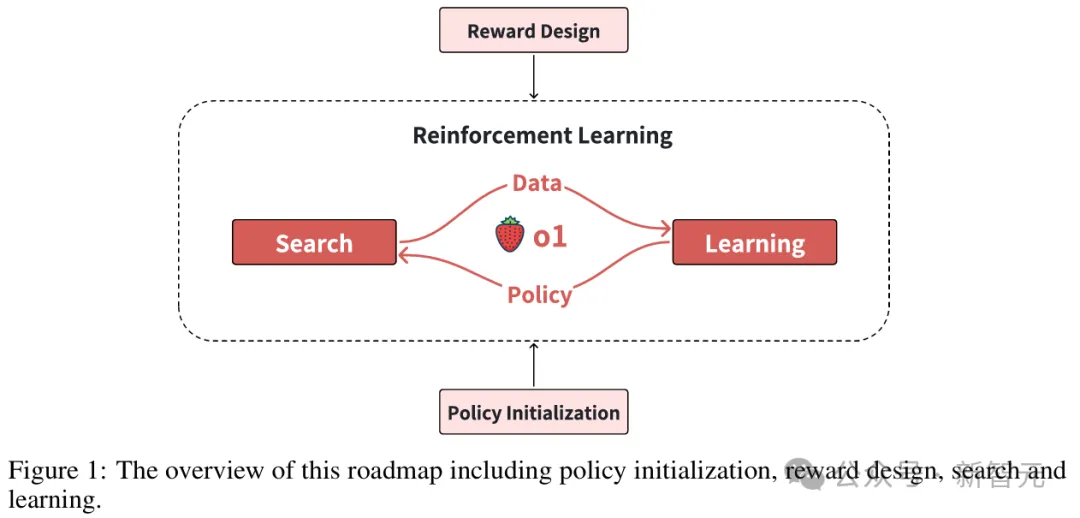

There are four key components to focus on: policy initialization, reward design, search, and learning.

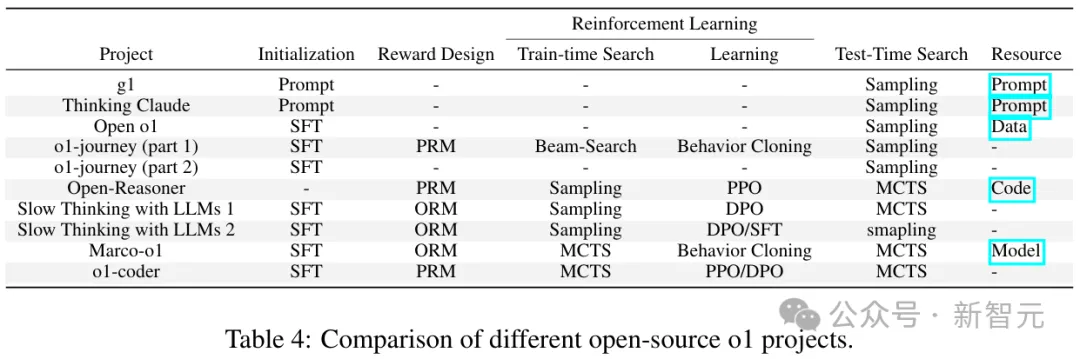

In addition, as part of the roadmap, the researchers also surveyed existing "open-source o1" projects.

Address: https://arxiv.org/abs/2412.14135

In a nutshell, reasoning models like o1 can be thought of as a combination of an LLM and AlphaGo.

First, models need to be trained on "Internet data" so that they can understand text and reach a certain level of intelligence.

Then, reinforcement learning is added so that they "think systematically."

Finally, while looking for an answer, the model "searches" the solution space. This approach is used both to produce answers at test time and to improve the model, i.e., for "learning."

Notably, the 2022 paper "STaR: Self-Taught Reasoner" from Stanford and Google suggested that the "reasoning process" an LLM generates before answering a question could be used to fine-tune future models, thereby improving their ability to answer such questions.

STaR lets AI models "bootstrap" themselves to higher levels of intelligence by repeatedly generating their own training data, an approach that could, in theory, allow language models to surpass human-level intelligence.

Therefore, the idea of having the model "explore the solution space in depth" plays a key role in both the training phase and the testing phase.
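The self-training loop described above can be sketched in a few lines. This is only a toy illustration, not the STaR authors' code: `make_toy_generator` is a hypothetical stand-in for an LLM that answers addition questions and is sometimes wrong, and `star_round` keeps only the rationales that led to a correct answer.

```python
import random
from typing import Callable, List, Tuple

Example = Tuple[str, str]       # (question, gold answer)
Sample = Tuple[str, str, str]   # (question, rationale, answer)


def make_toy_generator(accuracy: float, seed: int = 0) -> Callable[[str], Tuple[str, str]]:
    """Hypothetical stand-in for an LLM: answers 'a+b' questions, sometimes wrongly."""
    rng = random.Random(seed)

    def generate(question: str) -> Tuple[str, str]:
        a, b = (int(x) for x in question.split("+"))
        answer = a + b if rng.random() < accuracy else a + b + 1
        return f"{a} plus {b} makes {answer}", str(answer)

    return generate


def star_round(generate, dataset: List[Example], attempts: int = 4) -> List[Sample]:
    """Keep one self-generated rationale per question, only if its answer is correct."""
    kept: List[Sample] = []
    for question, gold in dataset:
        for _ in range(attempts):
            rationale, answer = generate(question)
            if answer == gold:
                kept.append((question, rationale, answer))
                break
    return kept


dataset = [("2+3", "5"), ("10+7", "17")]
finetune_data = star_round(make_toy_generator(accuracy=0.5), dataset)
# A real pipeline would fine-tune the model on finetune_data and repeat the round.
```

In a real system, the filtered rationales would become the fine-tuning set for the next round, which is the sense in which the model generates its own training data.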

In this work, the researchers mainly analyzed the implementation of o1 from the following four aspects: policy initialization, reward design, search, and learning.

Policy initialization enables the model to develop "human-like reasoning behavior," giving it the ability to explore the solution space of complex problems efficiently.

Reward design provides dense and effective signals, through reward shaping or reward modeling, to guide the model's learning and search.

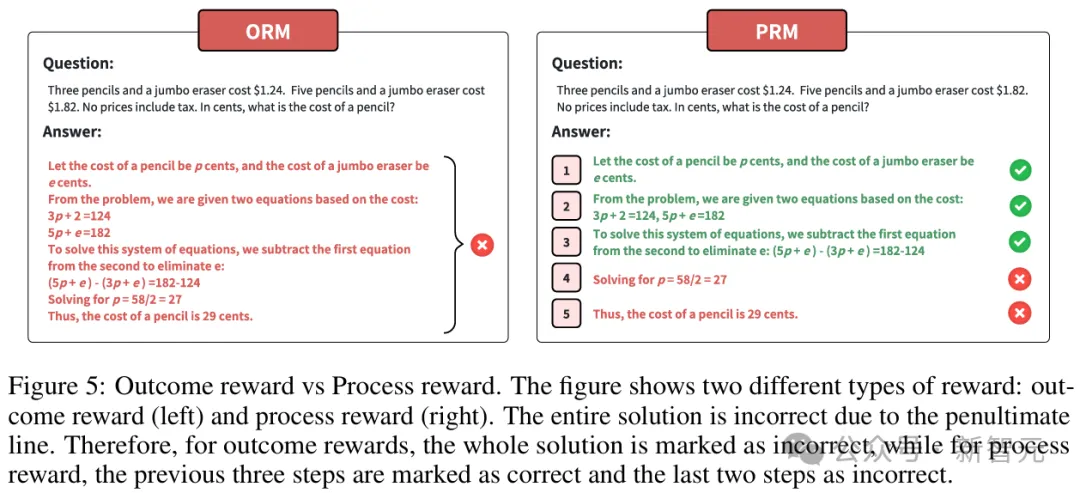

Outcome Reward (left) and Process reward (right)

Search plays a crucial role in both training and testing: spending more computing resources can produce better solutions.

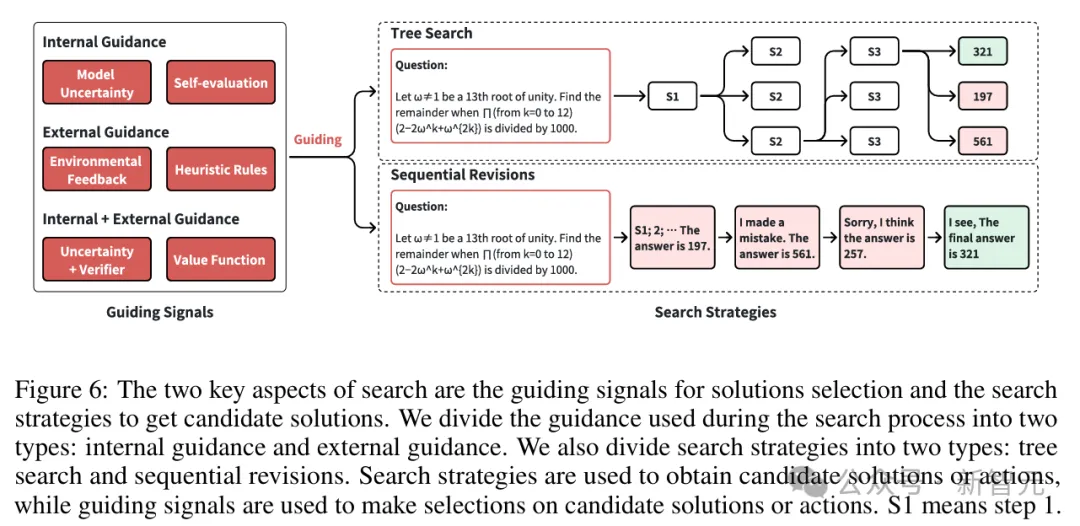

Types of guidance used in the search process: internal guidance, external guidance, and a combination of both

Learning from human expert data requires expensive data annotation. In contrast, reinforcement learning learns through interaction with the environment, avoids the high cost of data annotation, and has the potential to achieve performance beyond humans.

In summary, as the researchers suspected in November 2023, the next LLM breakthrough is likely to be some combination with Google DeepMind's Alpha series (such as AlphaGo).

The significance of this research goes beyond publishing a paper. It also opens the door for most models: others can use RL to implement the same concept and provide different types of reasoning feedback, while developing playbooks and recipes that AI can use.

The researchers conclude that although OpenAI has not yet published a technical report on o1, the academic community has offered several open-source implementations of o1.

In addition, there are several o1-like models in industry, such as k0-math, Skywork-o1, DeepSeek-R1, QwQ, and InternThinker.

A comparison of approaches from different open source o1 projects in the areas of policy initialization, reward design, search, and learning

In reinforcement learning, policies define how the agent chooses actions based on the state of the environment.

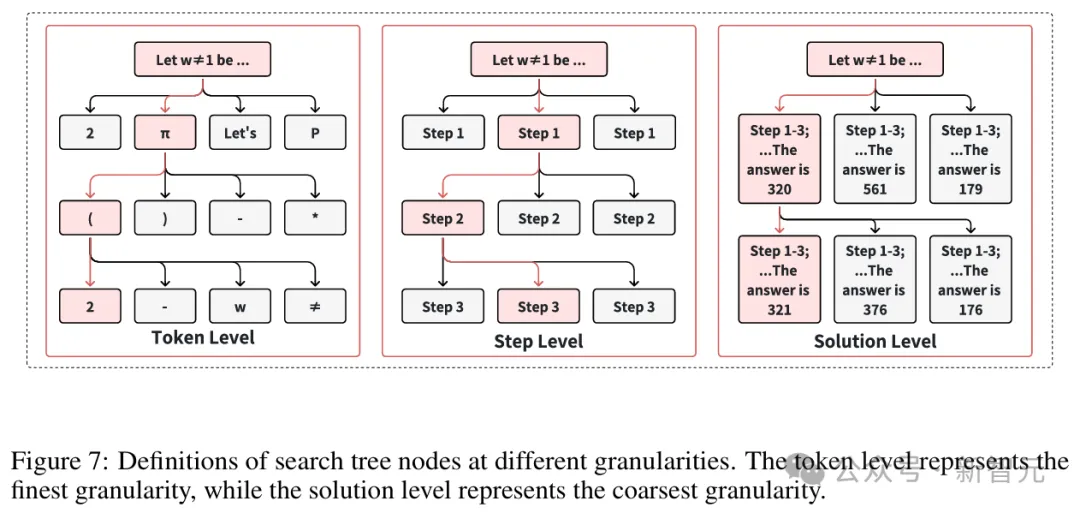

LLM action granularity is divided into three levels: solution level, step level, and token level.
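To make the three granularities concrete, here is a small sketch that cuts the same output into actions at each level. The function name `split_actions` is made up for illustration, steps are assumed to be separated by newlines, and whitespace splitting stands in for a real subword tokenizer.

```python
from typing import List


def split_actions(solution: str, level: str) -> List[str]:
    """Cut one model output into actions at the requested granularity."""
    if level == "solution":
        return [solution]                       # the whole answer is one action
    if level == "step":
        return [line for line in solution.split("\n") if line.strip()]
    if level == "token":
        return solution.split()                 # assumption: whitespace tokens
    raise ValueError(f"unknown level: {level}")


solution = "Let x = 3.\nThen 2x = 6.\nAnswer: 6"
for level in ("solution", "step", "token"):
    print(level, len(split_actions(solution, level)))
```

The finer the granularity, the more actions a single answer contains, and the more places a reward or a search procedure can intervene.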

The interaction between agent and environment in LLM reinforcement learning

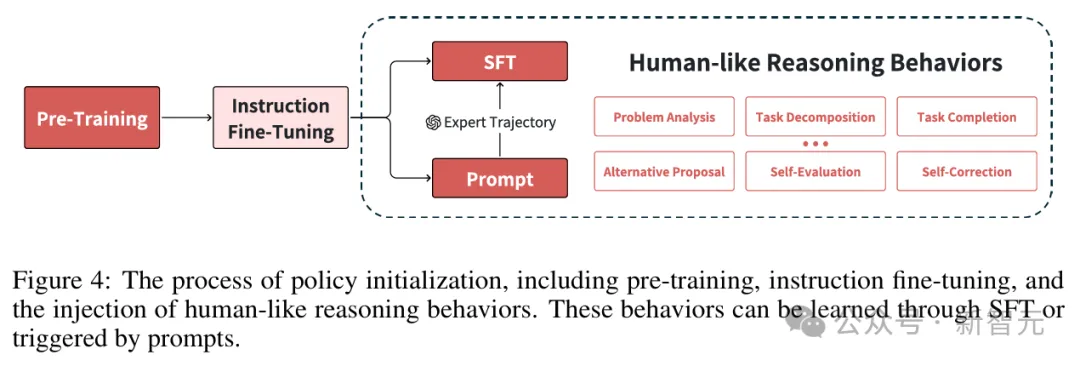

The initialization of an LLM mainly includes two stages: pre-training and instruction fine-tuning.

In the pre-training stage, the model develops basic language understanding through self-supervised learning on a large-scale web corpus, and its performance follows the established power law between computing resources and performance.

In the instruction fine-tuning stage, the LLM shifts from simply predicting the next token to generating responses that are aligned with human needs.

For models like o1, incorporating human-like reasoning behavior is essential for more complex solution space exploration.

Pre-training builds an LLM's basic language understanding and reasoning skills through exposure to a large corpus of text.

For o1-like models, these core competencies are the basis for developing advanced behaviors in subsequent learning and search.

Instruction fine-tuning transforms pre-trained language models into task-oriented agents by specialized training on multi-domain instruction-response pairs.

This process changes the model's behavior from merely predicting the next token to acting with a clear purpose.

The effect depends mainly on two key factors: the diversity of the instruction data set and the quality of the instruction-response pairs.

While instruction-fine-tuned models demonstrate general-purpose task capabilities and user intent understanding, models like o1 require more complex human-like reasoning capabilities to reach their full potential.

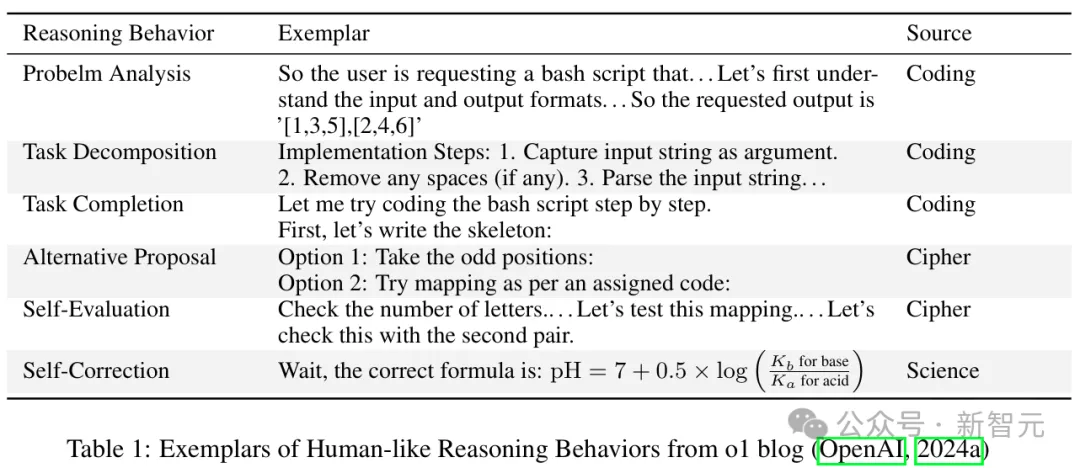

As shown in Table 1, the researchers analyzed o1's behavior patterns and identified six types of human-like reasoning behavior.

Policy initialization plays a key role in developing o1-like models, as it establishes fundamental capabilities that influence subsequent learning and search processes.

The policy initialization stage consists of three core components: pre-training, instruction fine-tuning, and the development of human-like reasoning behavior.

Although these reasoning behaviors are already implicitly present in the LLM after instruction fine-tuning, deploying them effectively requires activation through supervised fine-tuning or carefully crafted prompts.

In reinforcement learning, the agent receives reward feedback signals from the environment and maximizes its long-term reward by improving its policy.

The reward function is usually written r(s_t, a_t): the reward the agent receives for taking action a_t in state s_t at time step t.
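As a small worked example of "long-term reward," the sketch below sums per-step rewards into a discounted return. The discount factor `gamma` is a standard reinforcement learning convention, not something stated in the article, so treat it as an assumption.

```python
from typing import Sequence


def discounted_return(rewards: Sequence[float], gamma: float = 0.99) -> float:
    """Sum of gamma**t * r_t over a trajectory, computed from the last step back."""
    total = 0.0
    for r in reversed(rewards):
        total = r + gamma * total
    return total


# A trajectory that is only rewarded at the final step.
print(discounted_return([0.0, 0.0, 1.0], gamma=0.5))  # 0.25
```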

The reward feedback signal is crucial in both training and inference because it specifies the desired behavior of the agent through numerical scores.

Outcome reward and process reward

An outcome reward scores the LLM output according to whether it meets predefined expectations. However, because the intermediate steps are not supervised, the LLM may still generate incorrect solution steps.

In contrast to the outcome reward, the process reward provides a reward signal not only for the final step but also for the intermediate steps. Despite showing great potential, process rewards are harder to learn than outcome rewards.

Since outcome rewards can be viewed as a special case of process rewards, many reward design methods can be applied to modeling both outcome rewards and process rewards.

These models are often referred to as the Outcome Reward Model (ORM) and Process Reward Model (PRM).
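The difference between the two reward types can be shown on a toy chain of additions. Everything here is illustrative: each step is a hypothetical `(a, b, claimed_sum)` triple, and a simple arithmetic check plays the role that a learned ORM or PRM would play in practice.

```python
from typing import List, Tuple

Step = Tuple[int, int, int]   # (a, b, claimed value of a + b)


def outcome_reward(steps: List[Step], gold: int) -> float:
    """One score for the whole solution: is the final claimed value correct?"""
    return 1.0 if steps[-1][2] == gold else 0.0


def process_rewards(steps: List[Step]) -> List[float]:
    """One score per step: is each intermediate claim correct on its own?"""
    return [1.0 if a + b == claimed else 0.0 for a, b, claimed in steps]


# Task: 2 + 3 + 4 + 1 = 10. The chain reaches 10 through two wrong steps.
steps = [(2, 3, 6), (6, 4, 9), (9, 1, 10)]
print(outcome_reward(steps, gold=10))   # 1.0
print(process_rewards(steps))           # [0.0, 0.0, 1.0]
```

The outcome reward is satisfied by the final answer alone, while the process reward exposes the faulty intermediate steps, which is the gap in supervision described above.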

In some environments, reward signals may not effectively communicate learning goals.

In this case, rewards can be redesigned through reward shaping to make them richer and more informative.

However, when a value function is used for shaping, it depends on the policy π, so a value function estimated under one policy may not be suitable as a reward function for another policy.

Given o1's ability to handle multi-task reasoning, its reward model may incorporate multiple reward design approaches.

For complex reasoning tasks such as math and code, the answers often involve long chains of reasoning, so a process reward model (PRM) is more likely than an outcome reward model (ORM) to be used to supervise the intermediate steps.
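One well-known form of reward shaping is potential-based shaping, which adds the change in a potential function to the original reward. The article does not say which form the paper has in mind, so the sketch below is an assumption; the potential `phi` (negative distance to a goal) and the integer states are invented for illustration.

```python
from typing import Callable


def shaped_reward(reward: float, state: int, next_state: int,
                  phi: Callable[[int], float], gamma: float = 1.0) -> float:
    """Potential-based shaping: r + gamma * phi(s') - phi(s)."""
    return reward + gamma * phi(next_state) - phi(state)


GOAL = 5
phi = lambda s: -abs(GOAL - s)   # hypothetical potential: closer to the goal is better

# The environment gives no reward here, but moving from 2 to 3 is still encouraged.
print(shaped_reward(0.0, state=2, next_state=3, phi=phi))  # 1.0
```

If the potential were a value function estimated under one policy, it would carry that policy's assumptions with it, which is the limitation noted above.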

When reward signals are not available in the environment, the researchers speculate that o1 may rely on learning from preference data or expert data.

According to OpenAI's five-stage AGI plan, o1 is already a powerful reasoning model, and the next stage is to train an agent that can interact with the world and solve real-world problems.

To achieve this, a reward model is needed that provides reward signals for the agent's behavior in a real environment.

For models like o1 that are designed to solve complex reasoning tasks, search may play an important role in both training and inference.

Search based on internal guidance does not rely on real feedback from the external environment or on a proxy model; instead, it guides the search through the model's own states or its own evaluation ability.

External guidance typically does not depend on a specific policy and relies only on environment- or task-related signals to guide the search process.

Internal and external guidance can also be combined. A common approach is to combine the model's own uncertainty with proxy feedback from a reward model.

Researchers classify search strategies into two types: tree search and sequence correction.

Tree search is a global search method that generates multiple answers at the same time in order to explore a wider range of solutions.

In contrast, sequence correction is a local search method that progressively optimizes each attempt based on previous results and may be more efficient.

Tree search is usually suitable for solving complex problems, while sequence correction is more suitable for fast iterative optimization.
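The contrast between the two strategies can be sketched on a toy problem. This is a deliberately simplified illustration: best-of-N selection stands in for the simplest kind of tree search, candidates are integers rather than model outputs, and the `score` function is a made-up substitute for whatever guidance signal the search uses.

```python
from typing import Callable, Iterable


def best_of_n(score: Callable[[int], float], candidates: Iterable[int]) -> int:
    """Global search: look at several independent candidates and keep the best."""
    return max(candidates, key=score)


def sequential_revision(score: Callable[[int], float], start: int, max_rounds: int) -> int:
    """Local search: repeatedly revise the current attempt while it keeps improving."""
    current = start
    for _ in range(max_rounds):
        revised = max((current - 1, current + 1), key=score)
        if score(revised) <= score(current):
            break                      # no revision helps, so stop early
        current = revised
    return current


score = lambda x: -(x - 7) ** 2        # hypothetical guidance signal, best at x = 7

print(best_of_n(score, [1, 9, 6, 12]))                 # 6
print(sequential_revision(score, start=0, max_rounds=20))  # 7
```

Best-of-N can only return something it happened to sample, while sequential revision builds on its previous attempt, at the cost of depending on where it starts.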

The researchers believe that search plays a crucial role in both o1's training and its inference.

They refer to the two stages of search as training-time search and inference-time search, respectively.

In the training phase, the trial-and-error process in online reinforcement learning can also be viewed as a search process.

In the inference phase, o1 shows that model performance can be continuously improved by increasing the amount of inference computation and extending the thinking time.

The researchers believe that o1's "think more" approach can be viewed as a form of search that spends more inference-time compute to find a better answer.

As the examples in the o1 blog show, o1's reasoning style is closer to sequence correction. The evidence suggests that o1 relies primarily on internal guidance during the inference phase.

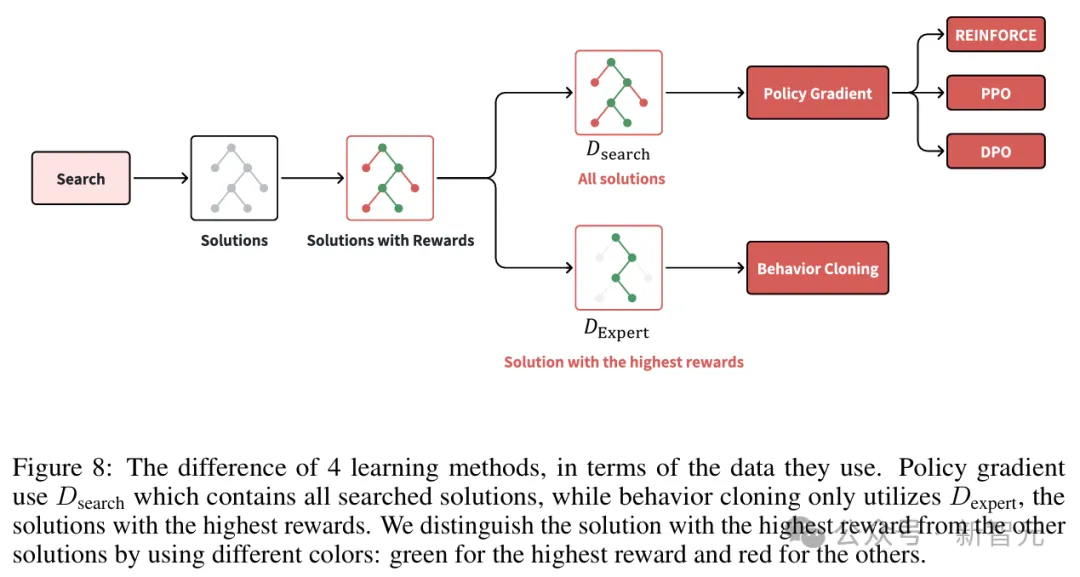

Reinforcement learning typically uses a policy to sample trajectories and then improves the policy based on the rewards obtained.

In the context of o1, the researchers hypothesize that the reinforcement learning process generates trajectories through a search algorithm, rather than relying solely on sampling.

Based on this assumption, reinforcement learning for o1 may involve an iterative process of search and learning.

In each iteration, the learning phase uses the output generated by the search as training data to improve the policy, and the improved policy is then applied to the search process in the next iteration.
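The alternation between search and learning can be sketched with a one-parameter toy policy. None of this comes from the paper: the policy is reduced to a single number, `search` samples a few noisy candidates around it and returns the best one, and `learn` moves the policy part of the way toward that result.

```python
import random

TARGET = 3.0


def reward(x: float) -> float:
    return -(x - TARGET) ** 2


def search(policy_mean: float, rng: random.Random, n: int = 8) -> float:
    """Sample candidates around the current policy and return the best one."""
    candidates = [policy_mean] + [policy_mean + rng.gauss(0.0, 1.0) for _ in range(n)]
    return max(candidates, key=reward)


def learn(policy_mean: float, best: float, lr: float = 0.5) -> float:
    """Move the policy toward the search result (a crude imitation step)."""
    return policy_mean + lr * (best - policy_mean)


def search_and_learn(policy_mean: float, iterations: int = 20, seed: int = 0) -> float:
    rng = random.Random(seed)
    for _ in range(iterations):
        best = search(policy_mean, rng)          # search uses the current policy
        policy_mean = learn(policy_mean, best)   # learning uses the search output
    return policy_mean


final = search_and_learn(0.0)
```

Because the current policy is always among the candidates, each round can only keep or reduce the distance to the target, which mirrors the idea that search output is at least as good as what the policy would produce on its own.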

The search in the training phase is different from the search in the test phase.

The researchers write the set of state-action pairs for the search output as D_search, and the set of state-action pairs for the optimal solution in the search as D_expert. Therefore, D_expert is a subset of D_search.

Given D_search, the policy can be improved with policy gradient methods or behavior cloning.

Proximal Policy Optimization (PPO) and Direct Preference Optimization (DPO) are the most commonly used reinforcement learning techniques for LLMs. In addition, it is common practice to perform behavior cloning or supervised learning on search data.
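The relationship between the two data sets can be made concrete with a short sketch. The trajectory format and the `build_datasets` helper are assumptions for illustration: each trajectory is a list of (state, action) pairs plus a reward, and the "optimal solution" is simply the highest-reward trajectory.

```python
from typing import List, Set, Tuple

Pair = Tuple[str, str]                    # (state, action)
Trajectory = Tuple[List[Pair], float]     # (state-action pairs, reward)


def build_datasets(trajectories: List[Trajectory]) -> Tuple[Set[Pair], Set[Pair]]:
    """Return (D_search, D_expert) from the trajectories a search produced."""
    d_search = {pair for pairs, _ in trajectories for pair in pairs}
    best_pairs, _ = max(trajectories, key=lambda t: t[1])
    d_expert = set(best_pairs)            # only the best trajectory's pairs
    return d_search, d_expert


trajectories = [
    ([("q", "step A"), ("q|A", "answer 4")], 0.0),
    ([("q", "step B"), ("q|B", "answer 5")], 1.0),
]
d_search, d_expert = build_datasets(trajectories)
print(d_expert <= d_search)   # True
```

Behavior cloning would train only on `d_expert`, while a policy gradient method could use all of `d_search` together with the rewards.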
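For readers unfamiliar with DPO, its per-pair loss is short enough to write out. This is a minimal sketch of the standard DPO objective for a single preference pair, using plain floats for the log-probabilities; the default `beta` is an arbitrary illustrative choice, and nothing here is claimed to be how o1 is trained.

```python
import math


def dpo_loss(logp_chosen: float, logp_rejected: float,
             ref_logp_chosen: float, ref_logp_rejected: float,
             beta: float = 0.1) -> float:
    """-log(sigmoid(beta * margin)) for one (chosen, rejected) pair."""
    margin = (logp_chosen - ref_logp_chosen) - (logp_rejected - ref_logp_rejected)
    return math.log1p(math.exp(-beta * margin))   # same as -log(sigmoid(beta * margin))


# The loss is lower when the policy prefers the chosen answer more than the reference does.
print(dpo_loss(-1.0, -3.0, -2.0, -2.0) < dpo_loss(-3.0, -1.0, -2.0, -2.0))  # True
```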

Researchers believe that o1 learning may be the result of a combination of multiple learning methods.

Within this framework, they hypothesize that o1's learning process begins with a warm-up phase of behavior cloning and switches to PPO or DPO once the gains from behavior cloning level off.

This process is consistent with the post-training strategy used in Llama 2 and Llama 3.

In the pre-training phase, the relationship between loss, computational cost, model parameters, and data size follows a power-law scaling law. Does reinforcement learning show a similar scaling law?

According to OpenAI's blog, reasoning performance does have a log-linear relationship with the amount of train-time compute. Beyond that, however, there isn't much research.
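A log-linear relationship means that accuracy rises by a roughly constant amount each time compute is multiplied by a fixed factor. The sketch below fits such a line by least squares; the data points are synthetic numbers invented for the example and are not OpenAI's results.

```python
import math
from typing import Sequence, Tuple


def fit_log_linear(compute: Sequence[float], accuracy: Sequence[float]) -> Tuple[float, float]:
    """Fit accuracy = intercept + slope * log10(compute) by ordinary least squares."""
    xs = [math.log10(c) for c in compute]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(accuracy) / n
    slope = (sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, accuracy))
             / sum((x - mean_x) ** 2 for x in xs))
    intercept = mean_y - slope * mean_x
    return intercept, slope


# Synthetic data: accuracy gains 0.1 for every 10x increase in compute.
compute = [1, 10, 100, 1000]
accuracy = [0.2, 0.3, 0.4, 0.5]
intercept, slope = fit_log_linear(compute, accuracy)
```

With these made-up points the fitted slope is about 0.1 per tenfold increase in compute, which is what "log-linear" amounts to in practice.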

In order to realize large-scale reinforcement learning like o1, it is crucial to study the Scaling Law of LLM reinforcement learning.
