#News ·2025-01-06

The DeepSeek-V3 large model has arrived: an open-source model trained with about 1/11 of the compute of Llama 3 that nonetheless outperforms it, and it has shocked the entire AI community.

Then the rumor that "Lei Jun offered an annual salary of 10 million yuan to poach DeepSeek researcher Luo Fuli" turned attention to DeepSeek's people.

It is not just the tech community: the whole internet is curious, and even users on Xiaohongshu (Little Red Book) have been posting to ask what kind of team this is.

Internationally, some people have translated founder Liang Wenfeng's interview into English and added annotations, trying to find clues to the company's rise.

After sorting through the available material, the most striking feature of the DeepSeek team is its youth.

Fresh graduates and current students, especially from Tsinghua University and Peking University, are very active in it.

Some of them were doing research at DeepSeek in 2024 just as their freshly completed doctoral dissertations were winning awards.

Some took part in the whole journey from DeepSeek LLM v1 to DeepSeek-V3, while others only interned for a period and still produced important results.

Young people were behind almost all of DeepSeek's key innovations, such as the new MLA attention mechanism and the GRPO reinforcement learning alignment algorithm.

DeepSeek-V2, released in May 2024, was a key step in this large-model company's breakout into wider recognition.

One of its most important innovations is a new type of attention: within the Transformer architecture, MLA (Multi-head Latent Attention) replaces traditional multi-head attention, greatly reducing computation and inference memory.
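To give a feel for where the inference-memory saving comes from, here is a minimal sketch that counts cached elements per token per layer. It assumes that standard multi-head attention caches a full key and value vector for every head, while MLA caches one compressed latent vector shared by all heads plus a small positional key. The function name and the dimension values are illustrative assumptions, not figures taken from this article.

```python
def kv_cache_elements_per_token(n_heads, head_dim, latent_dim=None, rope_dim=0):
    """Count the values cached per token, per layer, during inference.

    Hypothetical helper for illustration only.
    - Standard multi-head attention: one key and one value vector per head.
    - MLA-style caching (latent_dim given): one shared latent vector plus
      a small positional key, independent of the number of heads.
    """
    if latent_dim is None:
        return 2 * n_heads * head_dim
    return latent_dim + rope_dim


# Illustrative dimensions (assumed for this sketch).
mha = kv_cache_elements_per_token(n_heads=128, head_dim=128)
mla = kv_cache_elements_per_token(n_heads=128, head_dim=128,
                                  latent_dim=512, rope_dim=64)
reduction = mha / mla

print(f"MHA cache per token per layer: {mha} elements")
print(f"MLA cache per token per layer: {mla} elements")
print(f"Reduction factor: {reduction:.1f}x")
```

The sketch covers only the size of the key-value cache; it says nothing about the extra projections needed to expand the latent vector back into keys and values at attention time.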

Among the contributors, Gao Huazuo and Zeng Wangding made key innovations for the MLA architecture.

Gao Huazuo keeps a very low profile; all that is currently known is that he graduated from the Department of Physics at Peking University.

The name also appears in patent filings of StepFun ("Step Stars"), one of the "six little tigers" of large-model startups, but it is unclear whether this is the same person.

Zeng Wangding is from Beijing University of Posts and Telecommunications (BUPT), where his graduate advisor was Zhang Honggang, director of BUPT's Artificial Intelligence and Internet Search Teaching and Research Center.

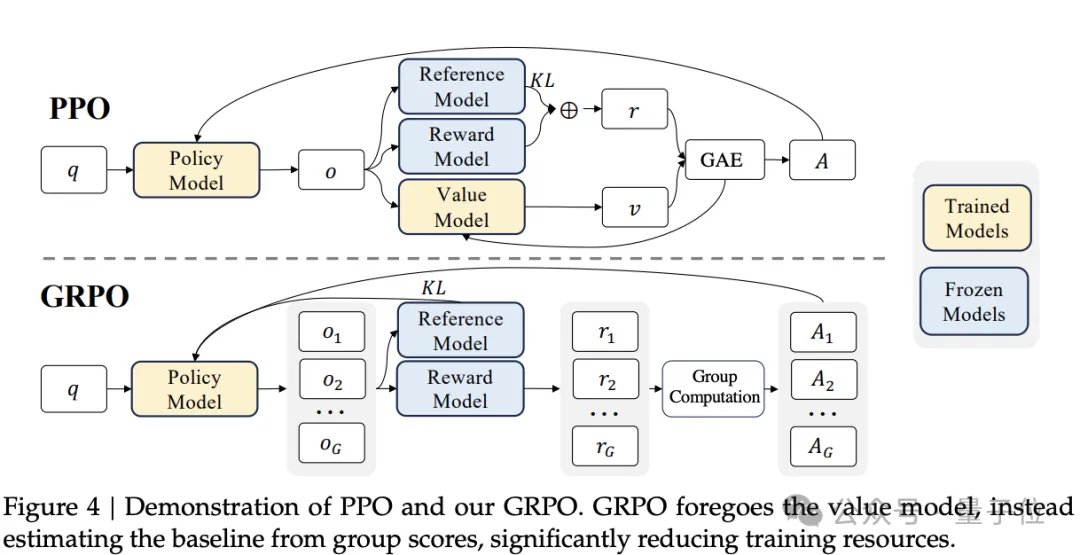

The DeepSeek-V2 work also involved another key achievement - GRPO.

Three months before the release of DeepSeek-V2, DeepSeekMath came out and introduced GRPO (Group Relative Policy Optimization).

GRPO is a variant of the PPO reinforcement learning algorithm. It drops the critic model and instead estimates the baseline from group scores, significantly reducing the training resources required.
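The idea of estimating the baseline from group scores can be sketched in a few lines. The snippet below assumes that several answers are sampled for the same prompt, each receives a scalar reward, and each answer's advantage is its reward minus the group mean, divided by the group standard deviation. The function name, the `eps` term, and the use of the population standard deviation are assumptions made for this illustration; the full algorithm also includes a clipped policy-ratio objective and a KL penalty, which are omitted here.

```python
from statistics import fmean, pstdev


def group_relative_advantages(rewards, eps=1e-8):
    """Turn the rewards of one sampled group into advantages.

    Hypothetical helper for illustration only. The group mean plays the
    role of the baseline that a learned critic would provide in PPO.
    """
    if not rewards:
        raise ValueError("rewards must contain at least one score")
    baseline = fmean(rewards)   # group mean replaces the critic's value estimate
    spread = pstdev(rewards)    # population standard deviation of the group
    return [(r - baseline) / (spread + eps) for r in rewards]


# Four sampled answers to the same prompt: two correct (1.0), two wrong (0.0).
rewards = [1.0, 0.0, 0.0, 1.0]
advantages = group_relative_advantages(rewards)
print(advantages)
```

Answers that score above the group average get a positive advantage and those below get a negative one, so no separate value network has to be trained or held in memory.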

GRPO has drawn wide attention in the community; the technical report for Alibaba's Qwen 2.5, another open-source large model from China, also disclosed the use of GRPO.

DeepSeekMath has three core authors whose work was completed during their internships at DeepSeek.

One of the core authors, Shao Zhihong, is a doctoral student in Tsinghua's Interactive Artificial Intelligence (CoAI) research group under Professor Huang Minlie.

His research interests include natural language processing and deep learning, with a particular focus on building robust, scalable AI systems that use a diverse set of skills to integrate heterogeneous information and accurately answer complex natural-language questions.

Mr. Shao also previously worked at Microsoft Research.

After DeepSeekMath, he also worked on DeepSeek-Prover, DeepSeek-Coder-v2, DeepSeek-R1 and other projects.

Another core author, Zhu Qihao, received his PhD in 2024 from the Institute of Software at Peking University's School of Computer Science, where he was advised by Associate Professor Xiong Yingfei and Professor Zhang Lu. His research focuses on deep code learning.

According to the official profile from Peking University's School of Computer Science, Zhu Qihao has published 16 CCF-A papers. He won the ACM SIGSOFT Distinguished Paper Award once and was nominated once, at ASE and ESEC/FSE. One of his papers ranked among the three most-cited papers of that year's ESEC/FSE conference.

At DeepSeek, Zhu also led the development of DeepSeek-Coder V1, building on his doctoral dissertation work.

His doctoral thesis, "Deep Code Learning Techniques and Applications for Language-Defined Perception", was also selected for the 2024 CCF Software Engineering Professional Committee Doctoral Dissertation Incentive Program.

The third core author is also from Peking University.

Wang Peiyi, a PhD student at Peking University, is supervised by Professor Sui Zhifang of the Key Laboratory of Computational Linguistics (Ministry of Education) at Peking University.

Beyond the two breakout achievements, DeepSeek-V2's MLA and DeepSeekMath's GRPO, it is worth noting that some members have been on board all the way from v1 to v3.

One representative is Dai Damai, who received his PhD from the School of Computer Science at Peking University in 2024. His advisor was also Professor Sui Zhifang.

Photo source: School of Computer Science, Peking University

Dai has a strong academic record: he won the EMNLP 2023 Best Long Paper Award and the CCL 2021 Best Chinese Paper Award, and has published more than 20 papers at major conferences.

Another is Wang Bingxuan of Peking University's Yuanpei College.

Wang, from Yantai, Shandong province, entered Peking University in 2017.

After graduation, he joined DeepSeek and was involved in a series of important work starting with DeepSeek LLM v1.

On the Tsinghua side, a representative figure is Zhao Chenggang.

Zhao Chenggang was previously a member of the informatics olympiad class at Hengshui Middle School and a silver medalist at CCF NOI 2016.

He then entered Tsinghua University and became an official member of the Tsinghua student supercomputing team in his sophomore year, winning world collegiate supercomputing championships three times.

Zhao works as a training/inference infrastructure engineer at DeepSeek and has internship experience at NVIDIA.

Photo: Tsinghua News Network

These vivid individual stories are enough to inspire admiration.

But they are not enough to answer the original question: what kind of team is DeepSeek, and how is it organized?

The answer may lie in founder Liang Wenfeng.

As early as May 2023, when DeepSeek had only just announced its move into large models and had not yet released any results, Liang Wenfeng revealed his hiring criteria in an interview with 36Kr's "Waves".

Look at ability, not experience.

Our core technical positions are filled mainly by fresh graduates and people who graduated one or two years ago.

The contributor lists of the papers published over the following year and more bear this out: a large share of the members are current doctoral students, fresh graduates, or people one or two years out of school.

Even the team leaders skew young, mostly people who graduated four to six years ago.

For example, Wu, who leads DeepSeek's post-training team, graduated from Beihang University with a doctorate in 2019 and worked on the Xiaoice and Bing Encyclopedia projects at Microsoft Research Asia (MSRA).

Dr. Wu received joint training from Professor Li Zhoujun of Beihang University and Dr. Zhou Ming, former Vice President of MSRA.

Sharing half of the same academic lineage is Guo Daya, who was jointly trained by Professor Yin Jian of Sun Yat-sen University and Dr. Zhou Ming of MSRA and received his doctorate in 2023.

He joined DeepSeek in July 2024, where he has worked on a series of large models for math and code.

There is also a story from Guo Daya's student days: during an internship at MSRA as an undergraduate, he published two top-conference papers within a year, and he joked that he had "met Sun Yat-sen University's graduation requirements for doctoral students on the third day after enrolling."

Beyond the youth of its team, DeepSeek stands out among Chinese AI companies for the great emphasis it places on coordinating model algorithms with hardware engineering.

The DeepSeek-V3 paper has a total of 200 authors, not all of whom are responsible for AI algorithms or data.

One group has been involved all the way from the early DeepSeek LLM v1 through v3; they lean toward the compute side and are responsible for optimizing hardware.

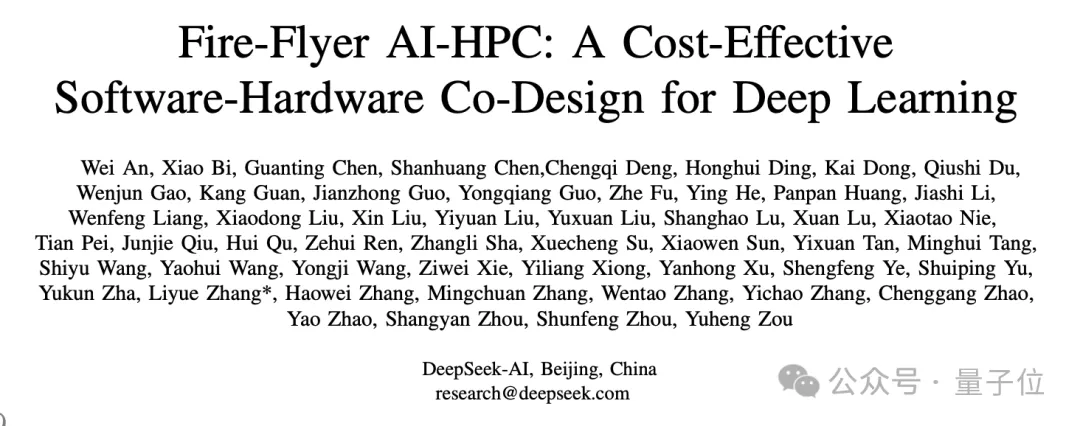

Under the DeepSeek AI name they published the paper "Fire-Flyer AI-HPC", which describes reducing training costs through software-hardware co-design and addressing the shortcomings of traditional supercomputing architectures for AI training workloads.

Fire-Flyer is the Fire-Flyer 2 ten-thousand-GPU cluster built by High-Flyer AI ("Magic Square"). It uses Nvidia A100 GPUs, yet has cost and energy consumption advantages over Nvidia's official DGX-A100 servers.

Some members of this team worked or interned at Nvidia, some came from Alibaba Cloud, which is also based in Hangzhou, and many were seconded from High-Flyer AI or simply transferred to DeepSeek, taking part in every large-model project.

The payoff of this attention to software-hardware co-design is that DeepSeek-V3 was trained with 1/11 of the compute of Llama 3 405B while achieving higher performance.
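The 1/11 figure can be sanity-checked with simple arithmetic. The sketch below uses publicly reported training budgets as assumptions that do not come from this article: about 2.788 million H800 GPU hours for DeepSeek-V3 and about 30.84 million H100 GPU hours for Llama 3.1 405B. GPU hours on different chips are only a rough proxy for compute, so the result is an approximation.

```python
# Reported training budgets in GPU hours (assumptions for this sketch;
# the two runs used different GPU models, so this is a rough comparison).
DEEPSEEK_V3_GPU_HOURS = 2.788e6   # H800 GPU hours
LLAMA3_405B_GPU_HOURS = 30.84e6   # H100 GPU hours


def compute_ratio(baseline_hours, model_hours):
    """Return how many times more GPU hours the baseline used."""
    if model_hours <= 0:
        raise ValueError("model_hours must be positive")
    return baseline_hours / model_hours


ratio = compute_ratio(LLAMA3_405B_GPU_HOURS, DEEPSEEK_V3_GPU_HOURS)
print(f"Llama 3 405B used about {ratio:.1f}x the GPU hours of DeepSeek-V3")
```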

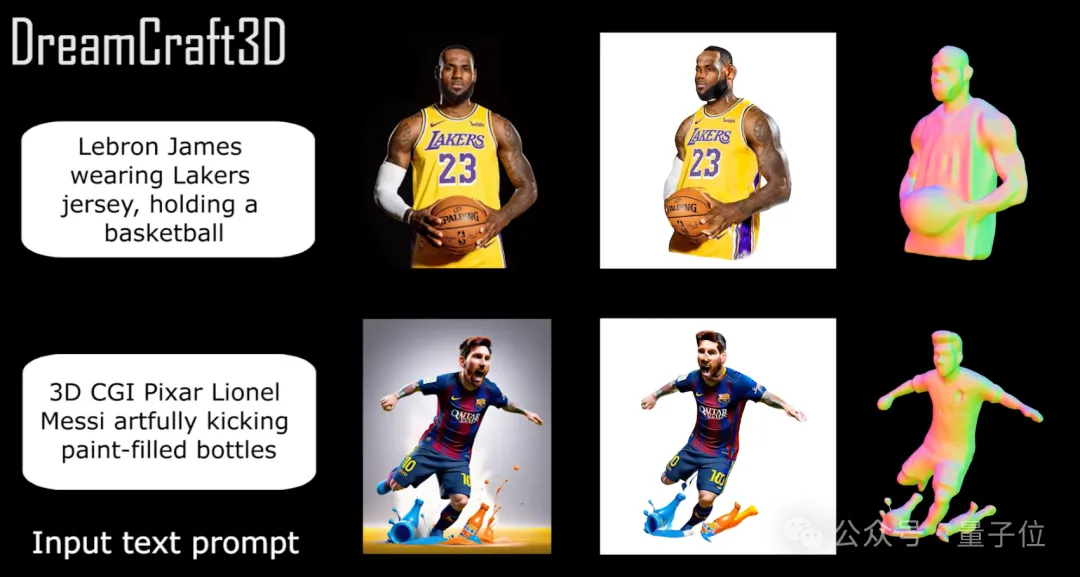

Finally, we also found an outlier among DeepSeek's open-source projects: one that has nothing to do with language models and is instead about 3D generation.

This work was done by Tsinghua doctoral student Sun Jingxiang during his internship at DeepSeek, in collaboration with his supervisor Liu Yebin and DeepSeek members.

Another intern who produced important results at DeepSeek is Xin Huajian, a logic major at Sun Yat-sen University.

During his internship at DeepSeek, he worked on DeepSeek-Prover, which proves mathematical theorems with large models. He is now a PhD student at the University of Edinburgh.

With these examples in mind, returning to Liang Wenfeng's interview may give a better sense of how this team operates.

This inevitably brings to mind another force to be reckoned with in the AI world, yes, OpenAI.

The same disregard for experience in hiring: undergraduates and dropouts are recruited as long as they have the ability.

The same heavy reliance on newcomers: fresh graduates and people born after 2000 can mobilize resources to build Sora from scratch.

The same response to a promising direction: the whole company plans from the top down and puts resources behind it.

DeepSeek is perhaps the Chinese AI company that most closely resembles OpenAI in its organizational form.
