
#News ·2025-01-06

Video generation models were among the hottest topics in AI circles in 2024.

Even in December, the pace of video model updates at home and abroad did not slow down. Sora and Kling AI are representative examples.

On December 9, OpenAI officially launched its video product Sora. Users can create videos at up to 1080p resolution and up to 20 seconds long, in any aspect ratio. The product accepts text, image, and video input and generates new video as output.

On December 19, Kling AI announced another upgrade to its base model and launched the Kling 1.6 model for video generation. Text responsiveness, visual aesthetics, and motion plausibility have all improved significantly, and the picture is more stable and vivid. The model supports both standard and high-quality modes. For image-to-video generation in particular, internal evaluation shows the 1.6 model's overall effect improved by 195% over the 1.5 model.

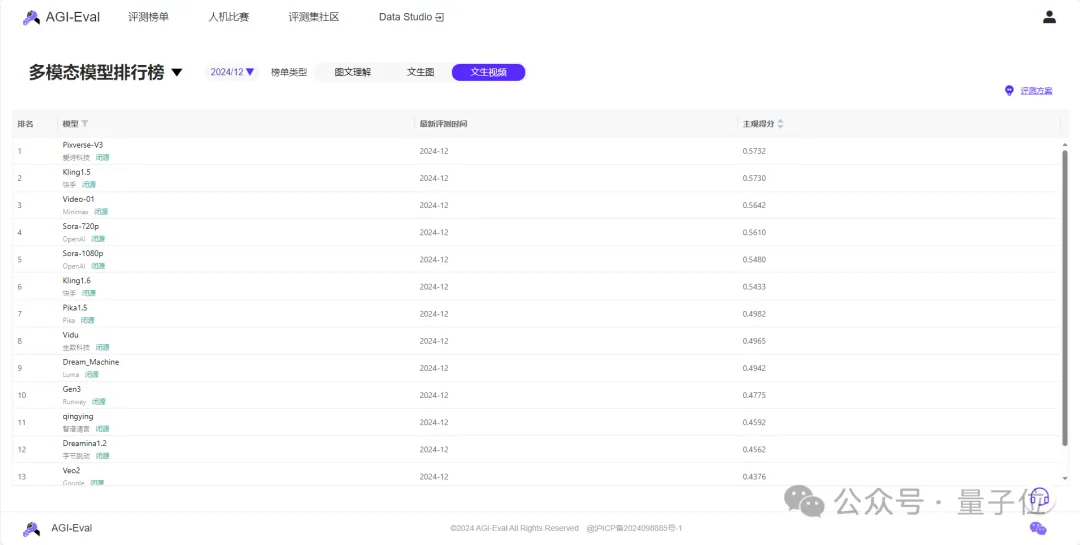

Competition among video models is fierce, and evaluation leaderboards have become particularly important.

Using an evaluation set of several hundred items and a team of expert human evaluators, AGI-Eval has conducted a more in-depth professional evaluation of Sora and the leading domestic video generation models.

The main conclusions are as follows:

Conclusion 1

Compared with the leading domestic models (the top three in China), Sora is slightly behind in video-text consistency and video quality. Overall, the domestic models still hold the lead.

Conclusion 2

Sora performs slightly better than Kling 1.6 in motion quality: subject consistency and motion amplitude in the generated video look more natural while things are moving.

Conclusion 3

In video-text consistency, Sora shows incorrect text understanding and inconsistent instruction following; that is, the generated video content does not match the prompt.

The detailed leaderboard is as follows. The evaluation dimensions include video-text consistency, video quality (authenticity and rationality), motion quality, and others, so that the results reflect each model's true level.

Note: The data above is an example; refer to the latest data on the AGI-Eval evaluation community platform for specific scores.

The leaderboard scores have been normalized and differ from the raw scores, but the ranking is unchanged.
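The article does not say which normalization AGI-Eval applies. As a minimal sketch, assuming a simple min-max rescaling (an assumption, not the platform's documented method) and using hypothetical model names and raw scores, the following Python shows why a linear rescaling changes the numbers but leaves the ranking intact:

```python
def min_max_normalize(raw_scores, low=0.0, high=100.0):
    """Linearly rescale raw scores onto [low, high].

    Assumption: min-max scaling is used here only for illustration.
    """
    smallest = min(raw_scores.values())
    largest = max(raw_scores.values())
    if largest == smallest:
        # All models tie; map every score to the top of the range.
        return {name: high for name in raw_scores}
    span = largest - smallest
    return {
        name: low + (score - smallest) / span * (high - low)
        for name, score in raw_scores.items()
    }


def ranking(scores):
    """Return model names ordered from highest to lowest score."""
    return sorted(scores, key=scores.get, reverse=True)


# Hypothetical raw scores on the 1-5 scale (not taken from the leaderboard).
raw = {"model_a": 3.4, "model_b": 2.9, "model_c": 3.1}
normalized = min_max_normalize(raw)

# The values differ from the raw scores, but the order is the same.
assert ranking(raw) == ranking(normalized)
print(ranking(normalized))
```

Any strictly increasing transformation has the same property, so the ranking alone does not reveal which normalization was actually used.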

AGI-Eval platform link: https://agi-eval.cn/mvp/listSummaryIndex

Let's take a look at the detailed results.

In overall generation effect, Sora performs well in video quality, creative freedom, style support, and other aspects, especially in rendering facial features in dynamic scenes.

Prompt: A basketball, a loaf of bread, and a backpack are on the floor. The camera follows a tired athlete who walks over to the items and picks one up to replenish energy.

English prompt: There is a basketball, a loaf of bread, and a backpack on the ground. The camera follows the exhausted athlete as he approaches the items and picks up one of them to replenish his energy.

The prompt is fairly complex: it contains several entities and a character state at the same time and requires the model to reason correctly, so it tests a broader range of abilities.

Sora-1080P

Score for this dimension: 2

Analysis: Among the generated entities, the backpack is missing and the bread is poorly rendered, losing its identifying features. The "picks up" action is not performed, so it is impossible to judge whether the model reasoned correctly about which item to take.

Kling 1.6

Score for this dimension: 2.67

Analysis: Among the generated entities, the bread is missing. The "picks up" action is hinted at but poorly executed, and it is likewise impossible to judge whether the model reasoned correctly about which item to take.

Pixverse-V3

Score for this dimension: 3.5

Analysis: The required entities and the "picks up" action are present, and the model reasons correctly that the bread is the item to take. However, the camera follow and the character's "approaches" action are not satisfied. Relatively speaking, it performed well.

MiniMax-Video-01

Score for this dimension: 3

Analysis: Among the generated entities, the bread is missing and the "picks up" action does not match, but the reasoning is correct: the model understands that the bread is the item to take.

Prompt: An advertisement for a heat-sensitive color-changing mug. A black heat-sensitive color-changing mug is being filled with hot water and gradually turns white. The key is to highlight the mug's ability to change color.

English prompt: High-temperature color-changing thermos advertisement. A black high-temperature color-changing thermos cup is being filled with hot water, gradually transforming into white. The key focus is to highlight the thermos cup’s color-changing capability.

This prompt has simpler entities but focuses on details such as water flow, heat, and color gradients. Smooth changes in fine detail are often harder to generate.

Sora-1080P

Score for this dimension: 2.67

Analysis: The focus of the prompt was ignored, and the color-change process is not shown.

Kling 1.6

Score for this dimension: 4

Analysis: The color-change process does not fully meet the requirements, but it is relatively good.

Pixverse-V3

Score for this dimension: 3

Analysis: The video does not show water being added. A color change is shown, but it does not meet the requirement of gradually turning white.

MiniMax-Video-01

Score for this dimension: 2.67

Analysis: The color-change process is likewise not shown, and it is not apparent that the water is hot.

Prompt: An advertisement for a creative cake. A knife cuts through the cake, and strawberry jam gushes from the cut.

English prompt: An advertisement for a creative cake. A dining knife slices through the cake, and strawberry sauce flows out from the cut.

The prompt focuses on motion details and interactions between entities, and the models differ in how they render "strawberry jam gushes from the cut."

Sora-1080P

Score for this dimension: 2.5

Analysis: In the video, the jam suddenly appears and disappears several times, and a gap suddenly appears in the cake. Stability is poor.

Kling 1.6

Score for this dimension: 3.5

Analysis: The knife's action visibly produces the cut in the cake, but the jam appears abruptly and unreasonably.

Pixverse-V3

Score for this dimension: 3.5

Analysis: The shapes of the jam and the knife are stable, but the cut in the cake appears abruptly.

MiniMax-Video-01

Score for this dimension: 3

Analysis: The shapes of the knife and the cake are relatively stable and consistent with cutting, but the sudden appearance of a large amount of jam is unreasonable.

Prompt: Generate an animation-style video of a girl traveling through Paris with the Eiffel Tower in front of her.

English prompt: Generates an animated-style video of a girl traveling in Paris with the Eiffel Tower in front of her.

Sora-1080P

Score for this dimension: 2.67

Analysis: The birds in the background show obvious deformation and unnatural freezing, and the pedestrians in the background stick together and walk with unnatural postures, which is quite noticeable overall.

Kling 1.6

Score for this dimension: 4

Analysis: The main character and the overall shape of the buildings are good. Some background characters are slightly deformed, with little effect on the overall impression.

Pixverse-V3

Score for this dimension: 3

Analysis: The main character's fingers are slightly fused and deformed, and the background buildings are deformed, which looks slightly unnatural.

MiniMax-Video-01

Score for this dimension: 3.5

Analysis: The main character's fingers are slightly deformed, and the face of the background character on the left is slightly distorted, which looks slightly unnatural.

Prompt: The colleagues are talking in front of the office.

English prompt: Colleagues are talking in front of the office door.

Sora-1080P

Score for this dimension: 2.5

Analysis: The characters show obvious clipping (parts of the figures pass through each other), the door is also clearly deformed, and the impact is severe.

Kling 1.6

Score for this dimension: 3.5

Analysis: The characters look good overall, with no obvious deformation, but their hands are deformed in some shots, which has some impact.

Pixverse-V3

Score for this dimension: 3

Analysis: The characters' finger deformation is persistent and severe, which affects the visual result.

MiniMax-Video-01

Score for this dimension: 3.5

Analysis: The characters look good overall, with no obvious deformation, but their hands are deformed in some shots, which has some impact.

Prompt: A creative video combining a rising shot and a pull-back shot. The camera pulls back from a busy city into the sky, into space, and beyond the universe, and must reveal that Earth is a glass ball in the hands of higher-dimensional beings elsewhere in the universe.

English prompt: A creative video combining zoom-in and zoom-out techniques, with the shot pulling up from a bustling city to the sky, into space, and beyond the universe, revealing Earth as a glass ball in the hands of higher-dimensional beings from another universe.

Sora-1080P

Score for this dimension: 3

Analysis: The pull-back shot is partly realized, but the rising shot is poorly rendered, and the overall camera work is relatively simple.

Kling 1.6

Score for this dimension: 4

Analysis: The video renders both the rising shot and the pull-back shot well. Scene transitions are natural, and the whole is smooth.

Pixverse-V3

Score for this dimension: 3.5

Analysis: One of the two camera moves is rendered well, but the other is not clearly expressed. The overall scene transitions are smooth.

MiniMax-Video-01

Score for this dimension: 3

Analysis: The pull-back shot is partly realized, but the rising shot is poorly rendered, and the overall effect of the video is rather abrupt.

For base models, AGI-Eval combines several evaluation methods, including manual subjective evaluation, model-based scoring (modeleval), and crowdsourced evaluation. The aim is to investigate whether a model's ability declines across versions, styles, and other factors that cause differences in ranking, and to reflect the model's overall ability.

Evaluation note:

For each prompt, the generated video is given an absolute score from 1 to 5 on video-text consistency, video quality, motion quality, and other dimensions, and error labels are marked. Videos are annotated in multiple rounds: if two annotators give the same score, that score is the result for the prompt; if the two scores differ, a third annotation is added.
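The two-annotator rule with escalation to a third annotation can be sketched as follows. The article does not state how the labels are combined once a third annotation is added; averaging the three is an assumption made here purely for illustration, and the function name `adjudicate` is hypothetical.

```python
from statistics import mean

VALID_SCORES = range(1, 6)  # absolute scores run from 1 to 5


def adjudicate(first, second, third=None):
    """Resolve one prompt's score on one dimension.

    If the first two annotators agree, their score is the result.
    If they differ, a third annotation is required.
    Assumption: the three labels are then averaged; the article does
    not specify the actual combination rule.
    """
    for score in (first, second, third):
        if score is not None and score not in VALID_SCORES:
            raise ValueError(f"score must be an integer from 1 to 5, got {score}")
    if first == second:
        return float(first)
    if third is None:
        raise ValueError("annotators disagree; a third annotation is required")
    return round(mean([first, second, third]), 2)


print(adjudicate(4, 4))     # two annotators agree
print(adjudicate(2, 3, 3))  # disagreement resolved with a third label
```

Other resolution rules, such as taking the majority or the median of the three labels, would fit the same description; the choice affects whether fractional scores can appear.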

Evaluation ideas:

Video-text consistency: Whether the video is generated according to the prompt's requirements, including all relevant elements such as objects, people, scenes, style, and motion details.

Video quality:

Rationality: Whether the video conforms to general rules in terms of logic, structure, design, motion trajectory, and other aspects, that is, whether it conforms to physical laws.

Authenticity: Whether the video looks realistic, without obvious AI traces.

Motion quality: Whether the motion performance in the video is smooth, coherent, and rich in dynamic effects.
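One way to hold a single annotator's judgment along these dimensions is a small record type. This is only an illustrative sketch: the class name `VideoRating`, its field names, and the example error labels are hypothetical. Only the three dimensions, the 1-5 scale, and the existence of error labels come from the evaluation note.

```python
from dataclasses import dataclass, field


@dataclass
class VideoRating:
    """One annotator's scores for one generated video (hypothetical schema)."""

    prompt: str
    text_consistency: int  # does the video follow the prompt?
    video_quality: int     # rationality and authenticity
    motion_quality: int    # smooth, coherent, dynamic motion
    error_labels: list[str] = field(default_factory=list)

    def __post_init__(self):
        # Every dimension uses the same absolute 1-5 scale.
        for name in ("text_consistency", "video_quality", "motion_quality"):
            value = getattr(self, name)
            if not 1 <= value <= 5:
                raise ValueError(f"{name} must be between 1 and 5, got {value}")


# Scores taken from the cat example in the evaluation case;
# the error label strings are invented for illustration.
rating = VideoRating(
    prompt="A cat wakes up its sleeping owner.",
    text_consistency=4,
    video_quality=3,
    motion_quality=3,
    error_labels=["incomplete action", "face deformation"],
)
print(rating)
```

The record deliberately stores no overall score, because the article does not say how the overall score is derived from the per-dimension scores.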

Evaluation set introduction:

Based on key performance indicators such as consistency, motion quality, and picture quality, a black-box test set of 500 paired Chinese-English samples was constructed. It covers a variety of complex scenarios, ability items, and application scenarios, from motion generation to emotion generation. Physical knowledge and encyclopedic knowledge are also incorporated to evaluate the realism and logic of the generated video.

Evaluation case:

Prompt: A cat wakes up its sleeping owner.

Model answer:

Evaluation and analysis:

Overall video score: 3 points

Consistency: 4 points. The generated entities meet the requirements, but the "wakes up" action is incomplete.

Video quality: 3 points. The cat's face is deformed while its body is moving.

Motion quality: 3 points. The motion is basically coherent, but the final retraction of the cat's paw is unnatural and looks mechanical.

Prompt: The divers are warming up.

Model answer:

Evaluation and analysis:

Overall video score: 1 point

Consistency: 1 point. The required entities and actions are not reflected at all.

Video quality: 1 point. The subject at the visual center is deformed, and the lower part of the scene also shows deformation and incoherence.

Motion quality: 2 points. An entity appears out of thin air in the lower part of the frame, and motion continuity, dynamic effect, and motion amplitude are poor.

Because traditional evaluation methods struggle to fully reflect a model's real level, AGI-Eval proposes a human-machine collaborative evaluation mode to explore building a high-quality evaluation community.

In this mode, participants work with the latest large models to complete tasks, which helps improve task completion and makes the differences between models easier to see.

Earlier user experiments suggest that this approach not only yields more concise and complete descriptions of the reasoning process but also improves the interaction between users and the large model.

As more similar platforms emerge and develop, human-machine collaboration is expected to become an important direction in the field of evaluation.

Human-machine community link: https://agi-eval.cn/llmArena/home

The AGI-Eval platform builds tens of thousands of private data items from real-world data feedback, capability decomposition, and other sources, and runs multiple quality checks to ensure accuracy.

The black-box data is 100% private, so that evaluation data cannot be leaked.

From data construction to model evaluation, the platform covers capability items at every level. First-level capabilities include instruction following, interaction, and cognition (reasoning, knowledge, and other cognitive abilities). Automatic and manual evaluation are combined.

For chat models, the platform's official leaderboard combines subjective and objective evaluation results, with balanced weights for Chinese and English.

The objective evaluation is based on model scoring and can handle questions with some degree of freedom, with accuracy above 95%. The subjective evaluation is based on independent labeling by three annotators, and fine-grained per-dimension labels are recorded to fully diagnose model problems.

Anyone who wants to apply for a text-to-video evaluation can contact the AGI-Eval team directly.

AGI-Eval is a large model evaluation community jointly released by Shanghai Jiao Tong University, Tongji University, East China Normal University, DataWhale, and other universities and institutions, with the mission of "evaluation that helps make AI a better partner for human beings". The platform aims to create a fair, credible, scientific, and comprehensive evaluation ecosystem. It calls on the public to take part in evaluating large models, in data construction, and in varied and engaging human-machine cooperation competitions, and to collaborate with large models to complete complex tasks and build evaluation schemes.

AGI-Eval multimodal evaluation can handle full-modality (any-to-any) model evaluation (some leaderboards are not yet online). Model vendors are welcome to submit models and get in touch about evaluation cooperation.

Please use your work email address to send the research objectives, the plan, an introduction to the research institution and the applicant, and contact information (mobile phone or WeChat).

Email: agieval17@gmail.com, with the subject line: AGI-Eval Text-to-Video Evaluation Application
