#News ·2025-01-03

Editor | Yi Feng

Has anyone noticed that Musk has missed yet another release date?

What happened to the Grok 3 promised for the end of the year? Surely he didn't mean the end of 2025.

"Grok 3 should be something very special at the end of 2024 after training on 100k H100s," Musk wrote in a July post on X, referring to the huge GPU cluster xAI has built in Memphis.

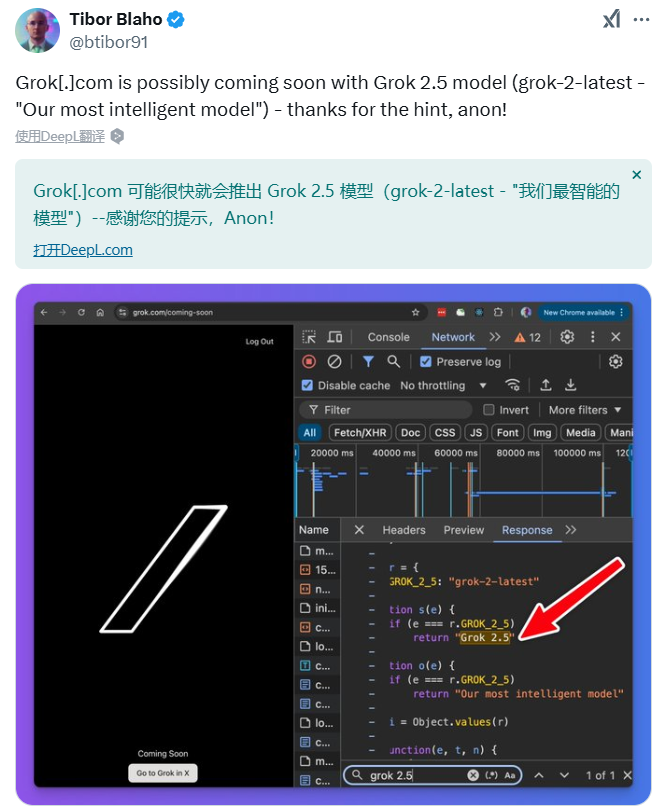

In a Dec. 15 post, he added, "Grok 3 will be a major leap forward."

Yet today is January 3, and there is still no sign that Grok 3 is about to be released.

In fact, judging by xAI's recent moves, a stopgap version, Grok 2.5, may arrive first.

According to a post on X by AI blogger Tibor Blaho, code he found on the xAI website suggests that an intermediate model, "Grok 2.5," may be on the way.

The full code can be viewed at https://archive.is/FlmBE

As Musk's entry in his head-to-head contest with Altman, the Grok series has indeed been a successful disrupter.

That is why the "leap" Musk has promised for Grok 3 is drawing so much attention at a time when large-model releases have gone relatively quiet.

During a podcast appearance with Fridman, Musk himself seemed to anticipate the difficulties Grok 3 would face.

"You want Grok 3 to be state-of-the-art?" the host asked.

"I hope so," Musk replied. "I mean, that's the goal. We may fail. But that's our vision."

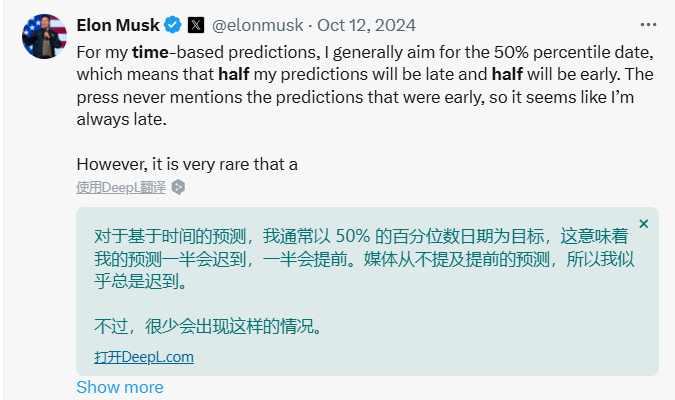

This isn't the first time one of Musk's grand promises has failed to materialize. It's no surprise that his statements about the timing of product launches are often, at best, idealistic goals.

After being teased about this, Musk himself stepped in to clarify: "For time-based forecasts, I usually aim for a 50% percentile date, which means that half of my forecasts will be late and half will be early."

But Grok 3's absence stands out because it adds to the growing body of evidence for the argument that AI is "hitting a wall."

Last year, AI startup Anthropic failed to launch a successor to its top-of-the-line Claude 3 Opus model on time. A few months after announcing that the next generation model, Claude 3.5 Opus, would be released at the end of 2024, Anthropic removed information about the model from the developer documentation. (Anthropic did complete training on Claude 3.5 Opus last year, according to a report, but decided it wasn't economically viable to release it.)

Google and OpenAI have also reportedly suffered setbacks with their flagship models in recent months.

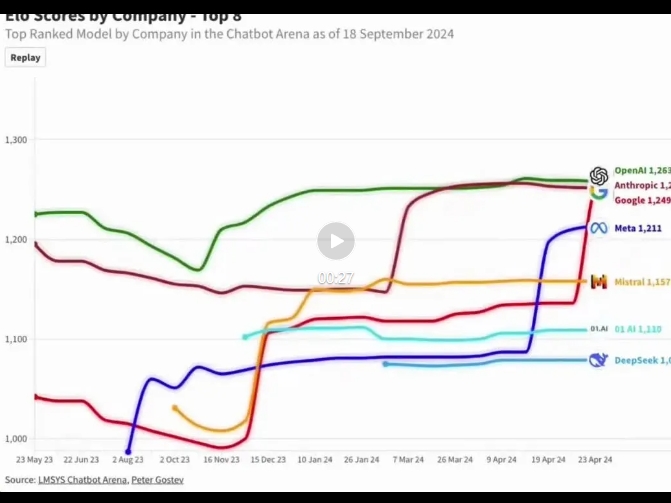

This could be evidence that the current AI Scaling Law is "outdated": the methods companies rely on to make models more capable seem to be losing their effectiveness.

Until recently, training models with more compute and larger datasets yielded significant performance gains. But with each generation of models the gains have shrunk, prompting companies to look for alternative techniques.

There may be other reasons for the delay of Grok 3. xAI, for example, has a much smaller team than many of its competitors.

At the height of the AI model boom, headlines declaring that "everything changed overnight" or that "a new king has arrived" appeared in AI coverage almost every day.

The busier it was then, the quieter it feels now.

Over the past six months, although plenty of powerful models have still been launched, the pace of flagship model releases has slowed noticeably.

Listing the AI giants alongside the launch dates of their flagship models makes it easier to see how long the "next-generation model" slot has sat empty:

OpenAI - GPT-4

Release date: March 2023

Google DeepMind - Gemini 1.5

Release date: February 2024

Anthropic - Claude 3.5

Release date: June 2024

Meta - Llama 3.1

Release date: July 2024

xAI - Grok 2

Release date: August 2024

OpenAI - o1

Release date: September 2024

Many observers (including OpenAI's former chief research officer) argue that o1 is in fact the "next-generation model." By this account, the model uses 100 times more computing power than GPT-4.

However, o1's capability gains come from reinforcing chain-of-thought reasoning rather than from the traditional Scaling Law.

Since the second half of last year, then, large-model development seems to have collectively stalled, tracing the outline of the rumored invisible "wall."

At the end of the year, Ilya Sutskever's remark that "pre-training is coming to an end" set off a frenzy of discussion in AI circles.

In China, AI leaders are also looking for breakthroughs in AI training.

Among them, Yang Zhilin, the founder of Moonshot AI, is one of the few who remain optimistic about pre-training. At the launch of Kimi's math model, he said he was "still optimistic about Scaling Law, and that there is still half a generation to a generation of improvement left in pre-trained models, which will probably be released by the leading large models next year."

Still, he acknowledges that the Scaling Law paradigm has changed: "With post-training scaling, you are scaling from a very low base. For a long time, computing power won't be the bottleneck, and innovation will matter more."

In a media report, Qiao Yu, assistant director and leading scientist of the Shanghai Artificial Intelligence Laboratory, also said that "it is not that Scaling Law should be abandoned, but that new Scaling Law dimensions should be found, and many problems cannot be solved simply by expanding model size, data, and computing power. We need richer model architectures and more efficient learning methods, and we also hope to see core contributions from China in the development of AGI, and to find an independent technical route better suited to China's resource endowments."

Zhang Xiangyu, chief scientist of StepFun, is more "radical." He said frankly that he is not entirely optimistic that large models with more than a trillion parameters will keep improving in capability. "According to our observations, as large models grow in size, inductive capabilities improve rapidly and may continue to follow Scaling Law, but deductive capabilities, including mathematical and reasoning capabilities, not only fail to increase but actually decline as model size grows," he said.

https://techcrunch.com/2025/01/02/xais-next-gen-grok-model-didnt-arrive-on-time-adding-to-a-trend/

https://user.guancha.cn/main/content?id=1353634
