
#News ·2025-01-03

Today, large language models (LLMs) have reached near-human-level capabilities in the field of text generation.

However, as these models are widely used for writing, their misuse in examinations, academic papers, and similar settings has raised serious concern. In particular, users today often do not rely on AI to generate content from scratch. Instead, they use AI to revise and polish human-written originals, and this hybrid content poses unprecedented challenges for detection.

Traditional machine-generated text detection methods perform well on purely AI-generated content but often misclassify machine-revised text. Machine-revised text typically makes only minor changes to the original human text and retains many human-created features and domain-specific terms, which makes it hard for traditional detectors based on probability statistics to identify it accurately.

Recently, a research team from Fudan University, South China University of Technology, Wuhan University, UCSD, UIUC, and other institutions proposed ImBD (Imitate Before Detect), a detection framework built around the idea of "imitation": it first learns to imitate the machine's writing style (such as specific word preferences and sentence structures) and then detects machine revision based on these stylistic features.

Paper: https://arxiv.org/abs/2412.10432

Project homepage: https://machine-text-detection.github.io/ImBD

Code link: https://github.com/Jiaqi-Chen-00/ImBD

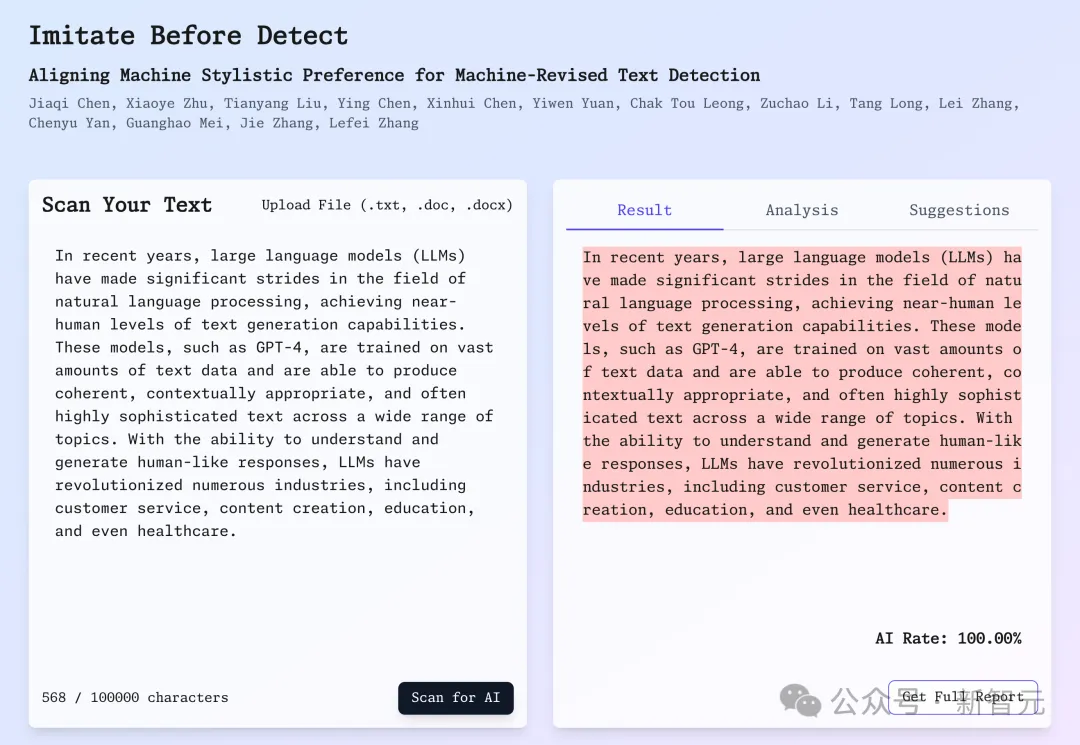

Online demo: https://ai-detector.fenz.ai/

The team introduced Style Preference Optimization (SPO), which enables the scoring model to capture the subtle features of machine revision.

Experiments show that, compared with Fast-DetectGPT, the method improves detection performance on GPT-3.5- and GPT-4-revised text by 15.16% and 19.68%, respectively, and that with only 1,000 samples and 5 minutes of training it can surpass commercial detection systems. The work has been accepted to AAAI 2025 (acceptance rate 23.4%).

With the rapid development and wide application of large language models (LLMs), AI-assisted writing has become a common phenomenon.

However, the popularity of this technology also brings new challenges, especially in fields that require strict control over the use of AI, such as academic writing and news reporting. Unlike traditional pure machine-generated text, it is more common today for users to use AI to modify and polish original human content, and this hybrid content makes detection work extremely difficult.

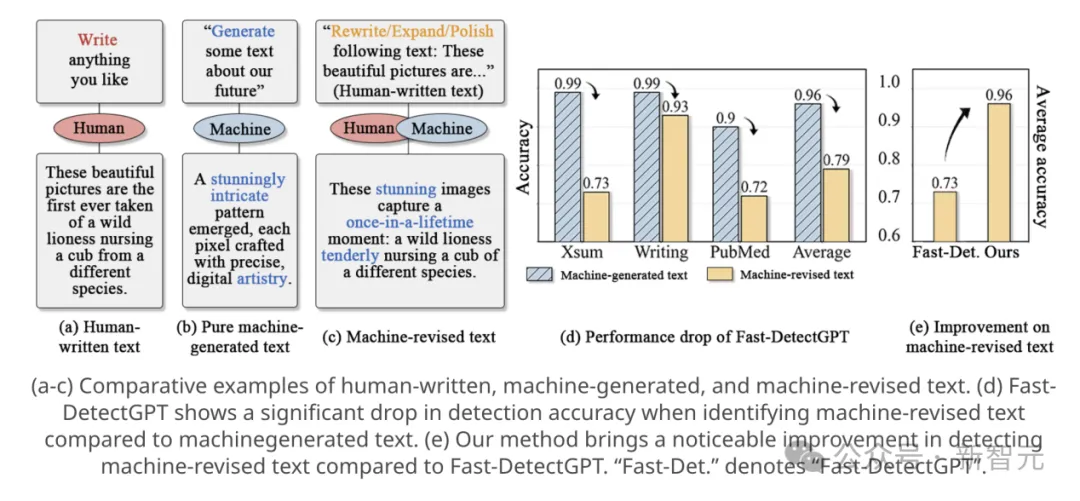

As shown in Figure 1(a-c), in contrast to the stark differences between human original text and pure machine-generated text, machine-revised text is often only slightly altered from the original human text.

Figure 1: Comparative analysis of human-written, machine-generated, and machine-revised texts

Traditional machine-generated text detection methods rely mainly on the token probability distributions of pre-trained language models and assume that machine-generated text generally has a higher log-likelihood or lies in regions of negative log-probability curvature. However, their performance drops significantly on machine-revised text.

As shown in Figure 1(d), even the state-of-the-art method Fast-DetectGPT [1] degrades markedly when detecting machine-revised text.

This degradation stems mainly from two factors:

- First, machine-revised text retains a large amount of human-created content and domain-specific terminology, which often misleads detectors into classifying it as human-written;

- Second, with the arrival of a new generation of language models such as GPT-4, machine writing style has become more subtle and harder to capture.

Notably, the characteristics of machine-revised text often show up as subtle stylistic features. In the example in Figure 1, these include distinctive word choices (such as a tendency to use "stunning" and "once-in-a-lifetime"), more complex sentence structures (such as more subordinate clauses), and a uniform way of organizing paragraphs.

These stylistic features, though subtle, are key clues that distinguish human-created text from machine-revised text. However, because these features are often closely intertwined with human-created content, it is difficult for existing detection methods to effectively capture and utilize these features, which leads to a decline in detection accuracy.

The key problem, therefore, is to accurately identify traces of machine revision in text that retains human-created content. This matters not only for academic integrity but also for assessing the credibility of online information, so an effective detector for machine-revised text is valuable for maintaining content quality and credibility across many fields.

ImBD: a machine-revised text detection framework based on style imitation

The core innovation of ImBD is to bring a style-aware mechanism into machine-revised text detection: it is the first to propose a two-part detection framework that combines preference optimization with style-conditional probability curvature.

Unlike traditional methods that focus only on content-level probability differences, this work captures the stylistic features of machine-revised text, which addresses the weakness of current detectors on text that is partly written by humans.

Problem formalization

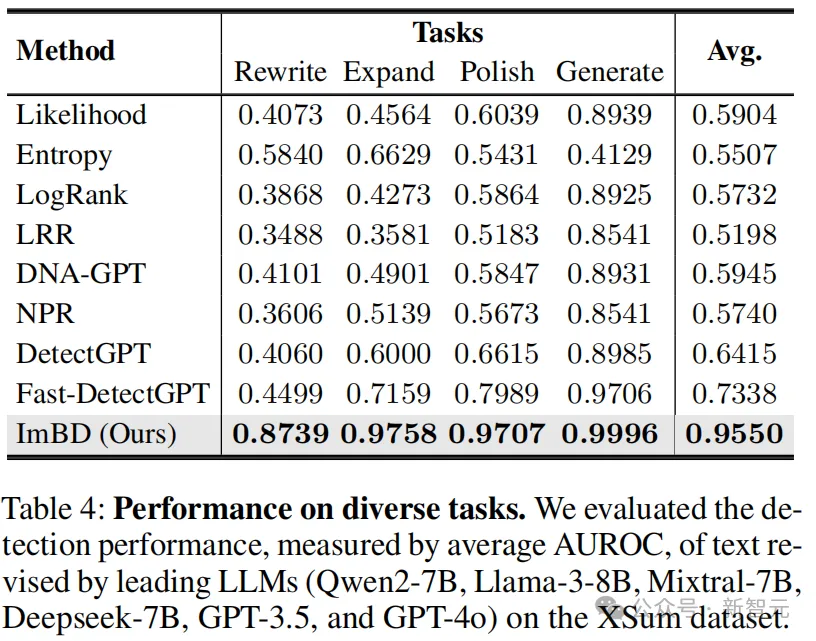

In the machine-revised text detection task, the input text is represented as a token sequence $\mathbf{x} = (x_1, x_2, \dots, x_n)$, where $n$ is the sequence length.

The core goal is to build a decision function $f: \mathcal{X} \to \{0, 1\}$ that uses a scoring model $p_\theta$ to determine whether the text was written by a human (output 0) or revised by a machine (output 1). This formalization turns a complex text analysis problem into a manageable binary classification task.

Basic theory

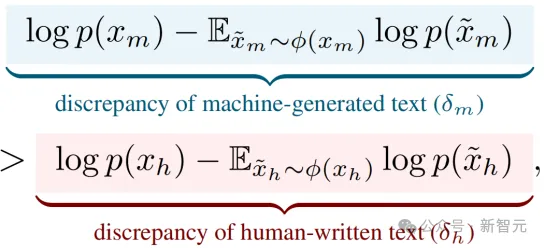

Traditional detection methods rest on a key observation: machine generation tends to select high-probability tokens, while human writing shows a more varied probability distribution. This difference can be formalized by the following inequality:

$$\log p_\theta(\mathbf{x}_m) - \mathbb{E}_{\tilde{\mathbf{x}} \sim q(\cdot \mid \mathbf{x}_m)}\left[\log p_\theta(\tilde{\mathbf{x}})\right] > \log p_\theta(\mathbf{x}_h) - \mathbb{E}_{\tilde{\mathbf{x}} \sim q(\cdot \mid \mathbf{x}_h)}\left[\log p_\theta(\tilde{\mathbf{x}})\right]$$

Here $\mathbf{x}_h$ is the original human text, $\mathbf{x}_m$ is the machine-revised text, $p_\theta$ is the scoring model, and $q(\cdot \mid \mathbf{x})$ is the distribution of perturbed samples $\tilde{\mathbf{x}}$ used to estimate the expected value. The left side is the log probability of the machine-revised text minus its expected log probability under perturbation; the right side is the same quantity for the human-written text. The inequality reflects the fact that machine-generated text tends to show a larger drop in probability after perturbation, while human-written text keeps a relatively stable probability.
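To make the inequality concrete, here is a minimal Python sketch that estimates each side from sequence log-probabilities, using the sample mean over perturbed copies as a Monte Carlo estimate of the expectation. The log-probability values are hypothetical numbers chosen for illustration; in practice they would come from a scoring model and a perturbation procedure, neither of which this sketch implements.

```python
from statistics import mean


def perturbation_discrepancy(logp_text: float, logp_perturbed: list[float]) -> float:
    """Monte Carlo estimate of log p(x) - E_{x~ ~ q(.|x)}[log p(x~)].

    logp_text: sequence log-probability of the text under the scoring model.
    logp_perturbed: log-probabilities of perturbed copies sampled from q(.|x).
    """
    if not logp_perturbed:
        raise ValueError("need at least one perturbed sample")
    return logp_text - mean(logp_perturbed)


# Hypothetical log-probabilities, chosen only to illustrate the inequality.
machine_score = perturbation_discrepancy(-120.0, [-131.0, -129.5, -133.0])
human_score = perturbation_discrepancy(-140.0, [-141.5, -139.0, -142.0])
print(f"machine: {machine_score:.3f}, human: {human_score:.3f}")
print("inequality holds:", machine_score > human_score)
```

The machine-revised text loses much more probability under perturbation than the human text, so the left side of the inequality is larger.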

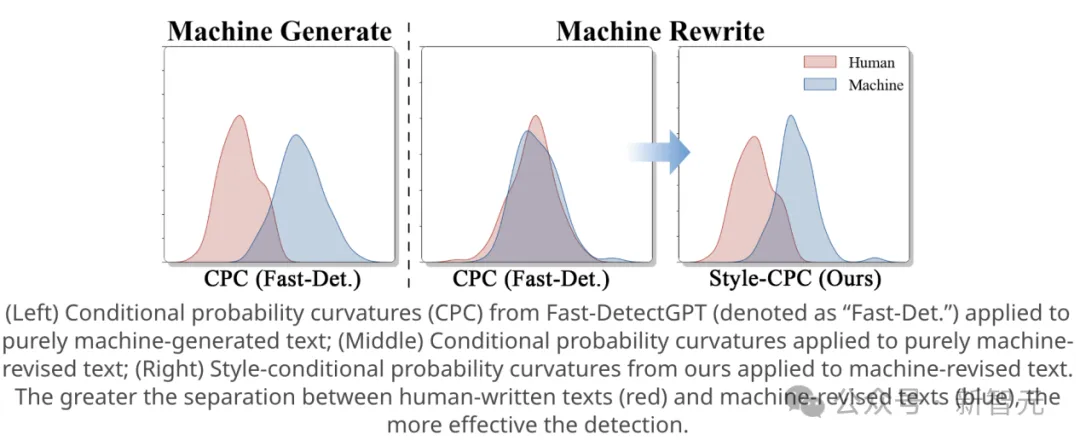

As shown in Figure 2 (left), this difference is most pronounced in pure machine-generated text. However, when it comes to machine-modified text, as shown in Figure 2 (right), the probability distributions of the two types of text overlap significantly, rendering traditional detection methods ineffective.

Figure 2: Comparison of human/machine text separation based on probability curvature

Style imitation via preference optimization

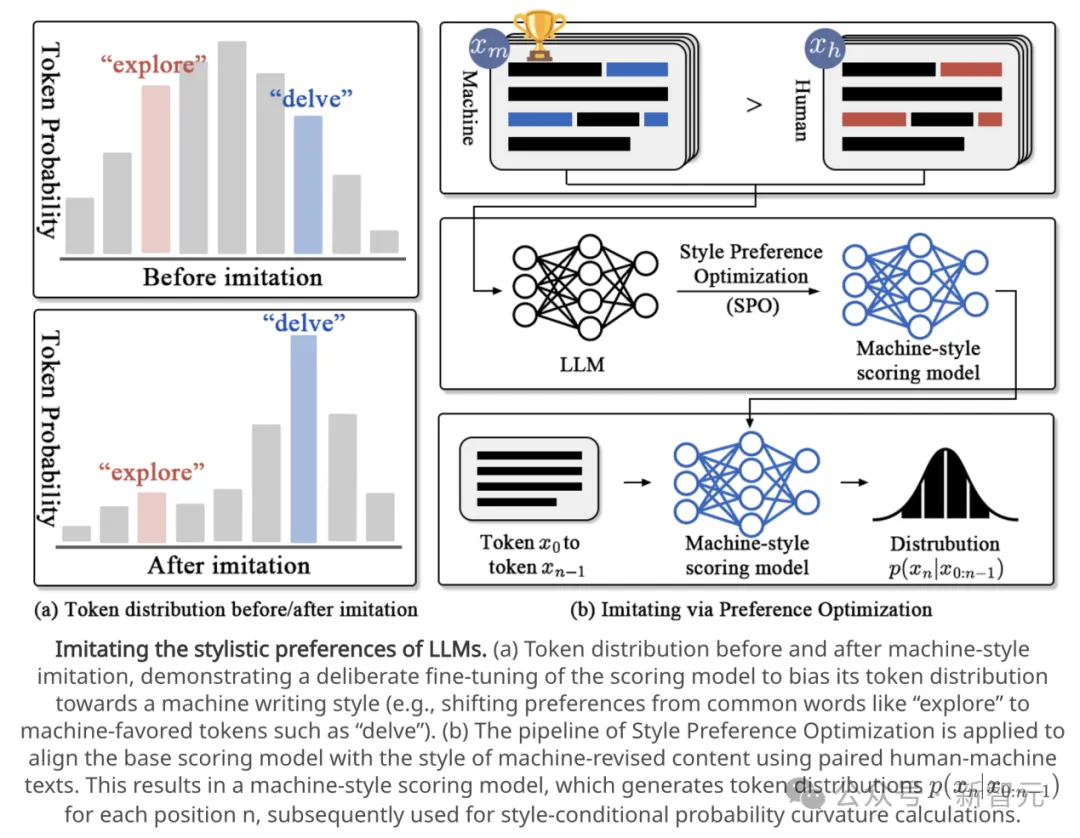

To overcome the above limitations, we propose to enhance the model's perception of machine style through preference optimization. As shown in Figure 3(b), the core of this mechanism is to build a preference relationship between text pairs: pairing the original human text with its machine-modified version can highlight stylistic differences while maintaining consistency in content.

Figure 3: Imitation process of LLM style preference optimization

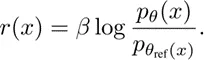

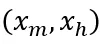

Based on the Bradley-Terry model, the preference distribution is defined as:

$$p(\mathbf{x}_m \succ \mathbf{x}_h) = \sigma\big(r(\mathbf{x}_m) - r(\mathbf{x}_h)\big)$$

Here $p(\mathbf{x}_m \succ \mathbf{x}_h)$ is the probability of preferring the machine-revised text $\mathbf{x}_m$ over the human text $\mathbf{x}_h$, and $\sigma$ is the logistic (sigmoid) function, so the probability grows as the reward difference $r(\mathbf{x}_m) - r(\mathbf{x}_h)$ increases. To achieve this, the reward function is defined as:

$$r(\mathbf{x}) = \beta \log \frac{p_\theta(\mathbf{x})}{p_{\text{ref}}(\mathbf{x})}$$

where $p_\theta$ is the model being optimized, $\beta$ is a scaling coefficient, and $p_{\text{ref}}$ is the reference model (usually the initial state of $p_\theta$).
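A minimal sketch of the Bradley-Terry preference probability, with the reward written as a scaled log-ratio between the optimized model and the reference model. The coefficient `beta = 0.1` and all log-probability values are assumptions for illustration, not settings reported by the authors.

```python
import math


def sigmoid(z: float) -> float:
    """Logistic function, written to avoid overflow for large |z|."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def implicit_reward(logp_policy: float, logp_ref: float, beta: float = 0.1) -> float:
    """r(x) = beta * log(p_theta(x) / p_ref(x)), written with sequence log-probabilities."""
    return beta * (logp_policy - logp_ref)


def preference_probability(reward_machine: float, reward_human: float) -> float:
    """Bradley-Terry: P(x_m preferred over x_h) = sigmoid(r(x_m) - r(x_h))."""
    return sigmoid(reward_machine - reward_human)


# Before training p_theta == p_ref, so both rewards are 0 and the preference is 0.5.
p_init = preference_probability(implicit_reward(-120.0, -120.0), implicit_reward(-140.0, -140.0))
print(p_init)

# Hypothetical values after some optimization: p_theta now assigns more
# probability to the machine revision and slightly less to the human text.
r_m = implicit_reward(-110.0, -120.0)  # 0.1 * 10 = 1.0
r_h = implicit_reward(-142.0, -140.0)  # 0.1 * -2 = -0.2
print(round(preference_probability(r_m, r_h), 4))
```

Because the reward depends only on the optimized model and a fixed reference, raising the preference probability means shifting probability mass toward machine-revised text.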

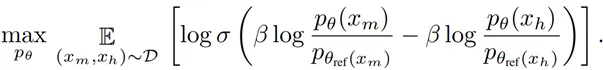

Through this reward design, we use the policy model itself, rather than a separate reward model, to express the probability of the preference data. For a training dataset $\mathcal{D}$ of content-equivalent pairs $(\mathbf{x}_h, \mathbf{x}_m)$ (a human original and its machine revision), the optimization objective can be written as:

$$\mathcal{L}(\theta) = -\mathbb{E}_{(\mathbf{x}_h, \mathbf{x}_m) \sim \mathcal{D}}\left[\log \sigma\left(\beta \log \frac{p_\theta(\mathbf{x}_m)}{p_{\text{ref}}(\mathbf{x}_m)} - \beta \log \frac{p_\theta(\mathbf{x}_h)}{p_{\text{ref}}(\mathbf{x}_h)}\right)\right]$$

where $p_{\text{ref}}$ is the reference model. By minimizing this objective, the model $p_\theta$ gradually shifts its preferences toward the stylistic characteristics of machine-revised text. As shown in Figure 3(a), this adjustment gives the model a stronger preference for machine-style features, such as the word "delve".

The final optimized model, denoted $p_{\theta^*}$, is a scoring model closely aligned with machine style.
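The objective can be evaluated on a batch of pairs as sketched below, in plain Python with gradients left out. This is the standard DPO-style loss implied by the Bradley-Terry model and the log-ratio reward; `PairLogProbs`, `beta = 0.1`, and the numbers are hypothetical and not taken from the ImBD code.

```python
import math
from dataclasses import dataclass


@dataclass
class PairLogProbs:
    """Sequence log-probabilities for one content-equivalent (human, machine) pair."""
    policy_machine: float  # log p_theta(x_m)
    policy_human: float    # log p_theta(x_h)
    ref_machine: float     # log p_ref(x_m)
    ref_human: float       # log p_ref(x_h)


def log_sigmoid(z: float) -> float:
    """Numerically stable log(sigmoid(z))."""
    if z >= 0:
        return -math.log1p(math.exp(-z))
    return z - math.log1p(math.exp(z))


def preference_loss(pairs: list[PairLogProbs], beta: float = 0.1) -> float:
    """Empirical L(theta): mean of -log sigmoid(r(x_m) - r(x_h)) over the dataset."""
    if not pairs:
        raise ValueError("dataset must contain at least one pair")
    total = 0.0
    for p in pairs:
        margin = beta * ((p.policy_machine - p.ref_machine) - (p.policy_human - p.ref_human))
        total -= log_sigmoid(margin)
    return total / len(pairs)


# At initialization p_theta == p_ref, so every margin is 0 and the loss is log 2.
init = [PairLogProbs(-120.0, -140.0, -120.0, -140.0), PairLogProbs(-95.0, -101.0, -95.0, -101.0)]
print(preference_loss(init), math.log(2))

# Hypothetical values after training: the machine revision gains probability
# relative to the reference and the human original loses some, so the loss falls.
trained = [PairLogProbs(-110.0, -142.0, -120.0, -140.0)]
print(preference_loss(trained))
```

In a real training loop, the four log-probabilities would be summed token log-probabilities from the model being trained and a frozen copy of the reference model, and the loss would be minimized with an autodiff framework.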

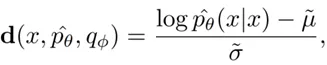

Detection based on style-conditional probability curvature

Building on this style alignment, the researchers introduce style-conditional probability curvature (Style-CPC), computed with the style-aligned scoring model, as the final detection mechanism.

This measure quantifies how far a text sample deviates from machine style. As the comparison in Figure 2 shows, the optimized model significantly reduces the overlap between the score distributions of human text and machine-revised text, so accurate detection can be achieved with a simple threshold rule: a text is classified as machine-revised (output 1) when its Style-CPC score exceeds a threshold, and as human-written (output 0) otherwise.
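Fast-DetectGPT [1] computes conditional probability curvature analytically from next-token distributions: the observed log-likelihood minus its expected value under token-wise resampling, divided by the standard deviation of that resampled log-likelihood. Assuming Style-CPC applies the same computation with the style-aligned model serving as both sampling and scoring model, a minimal pure-Python sketch follows. The logits, the four-token vocabulary, and the threshold of 0.5 are made up for illustration, and `style_cpc` and `classify` are hypothetical names.

```python
import math


def log_softmax(logits: list[float]) -> list[float]:
    """Convert one position's next-token logits into log-probabilities."""
    m = max(logits)
    log_z = m + math.log(sum(math.exp(v - m) for v in logits))
    return [v - log_z for v in logits]


def style_cpc(logits: list[list[float]], tokens: list[int]) -> float:
    """Analytic conditional probability curvature (Fast-DetectGPT style).

    logits[j]: the aligned model's next-token logits at position j, conditioned
    on the observed prefix; tokens[j]: the observed token at position j.
    Assumption: the same distribution is used for resampling and scoring.
    """
    if len(logits) != len(tokens) or not tokens:
        raise ValueError("need one logits row per observed token")
    log_likelihood = mean_total = var_total = 0.0
    for row, tok in zip(logits, tokens):
        lp = log_softmax(row)
        probs = [math.exp(v) for v in lp]
        mu = sum(p * v for p, v in zip(probs, lp))          # E[log p(x~_j)]
        second = sum(p * v * v for p, v in zip(probs, lp))  # E[(log p(x~_j))^2]
        log_likelihood += lp[tok]
        mean_total += mu
        var_total += second - mu * mu
    if var_total <= 1e-12:
        raise ValueError("zero variance: every position is (near) uniform")
    return (log_likelihood - mean_total) / math.sqrt(var_total)


def classify(score: float, threshold: float) -> int:
    """Threshold rule: 1 = machine-revised, 0 = human-written."""
    return 1 if score > threshold else 0


# Toy example: identical made-up logits at five positions.
row = [3.0, 1.0, 0.0, -1.0]
logits = [row] * 5
machine_like = style_cpc(logits, [0] * 5)         # always picks the most likely token
human_like = style_cpc(logits, [0, 2, 3, 1, 2])   # mixes in lower-probability tokens
print(machine_like, human_like)
print(classify(machine_like, threshold=0.5), classify(human_like, threshold=0.5))
```

With this toy distribution, always choosing the top token gives a positive score, while a sequence that mixes in low-probability tokens scores strongly negative, which is the separation the threshold rule relies on.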

This style-aware detection framework not only improves the accuracy of machine-revised text detection but, more importantly, offers a new approach to the increasingly important problem of detecting the output of advanced language models.

By shifting the focus from content to stylistic features, the approach shows strong generalization, especially when dealing with complex scenarios containing user-provided content.

Experimental results

Detection performance on GPT series models

On the polish task, ImBD improved detection performance on text revised by GPT-3.5 [2] and GPT-4o [3] by 15.16% and 19.68%, respectively, compared with Fast-DetectGPT, and by 32.91% and 47.06%, respectively, compared with the supervised model RoBERTa-large. While maintaining high detection performance, inference remains efficient at only 0.72 seconds per 1,000 words.

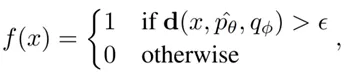

Using only 1000 samples and 5 minutes of SPO training, ImBD achieved an AUROC score of 0.9449, surpassing the commercial detection tool GPTZero [4] (0.9351) trained with large-scale data.
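AUROC, the metric behind these comparisons, equals the probability that a randomly chosen machine-revised text receives a higher detector score than a randomly chosen human text. A minimal sketch of that pairwise definition, using hypothetical scores rather than data from the paper:

```python
def auroc(human_scores: list[float], machine_scores: list[float]) -> float:
    """AUROC as the probability that a random machine-revised text scores
    higher than a random human text (ties count as 1/2)."""
    if not human_scores or not machine_scores:
        raise ValueError("both classes need at least one score")
    wins = 0.0
    for m in machine_scores:
        for h in human_scores:
            if m > h:
                wins += 1.0
            elif m == h:
                wins += 0.5
    return wins / (len(machine_scores) * len(human_scores))


# Hypothetical detector scores, not results from the paper.
human = [0.10, 0.40, 0.35, 0.80]
machine = [0.90, 0.70, 0.50, 0.30]
print(auroc(human, machine))  # 11 of 16 pairs ranked correctly
```

The double loop is quadratic in the number of samples; for larger evaluations, a sort-based implementation such as scikit-learn's `roc_auc_score` gives the same value.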

Detection performance on open-source models

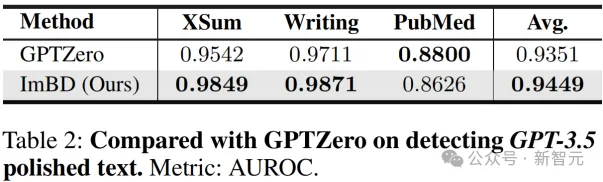

When detecting text revised by four open-source models (Qwen2-7B [5], Llama-3 [6], Mixtral-7B [7], and Deepseek-7B [8]), ImBD achieves an average AUROC of 0.9550 across the XSum, SQuAD, and WritingPrompts datasets, significantly higher than Fast-DetectGPT's 0.8261.

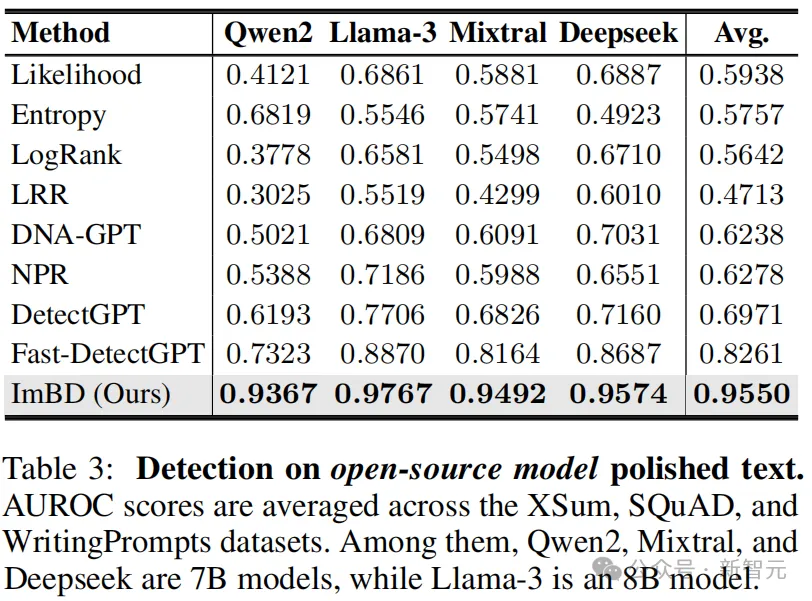

Evaluation of detection robustness under different task scenarios

ImBD outperforms existing methods on all four tasks: rewrite (0.8739), expand (0.9758), polish (0.9707), and generate (0.9996), with average performance 22.12% higher than Fast-DetectGPT. This demonstrates its robustness across different tasks and user instructions.

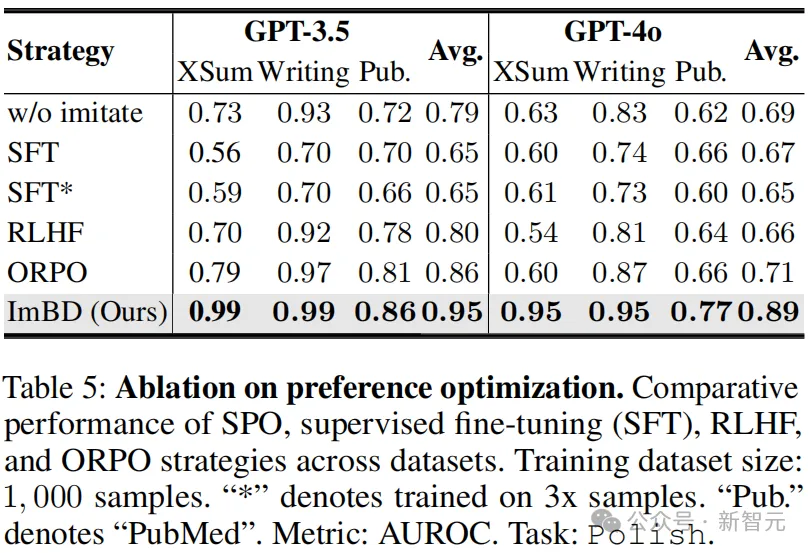

Ablation study

Compared with a baseline model without the imitation strategy, the SPO-optimized ImBD improves detection AUROC on GPT-3.5 and GPT-4o text by 16% and 20%, respectively. Compared with Supervised Fine-Tuning (SFT) using three times as much training data, ImBD's AUROC is 30% and 24% higher on GPT-3.5 and GPT-4o, respectively.

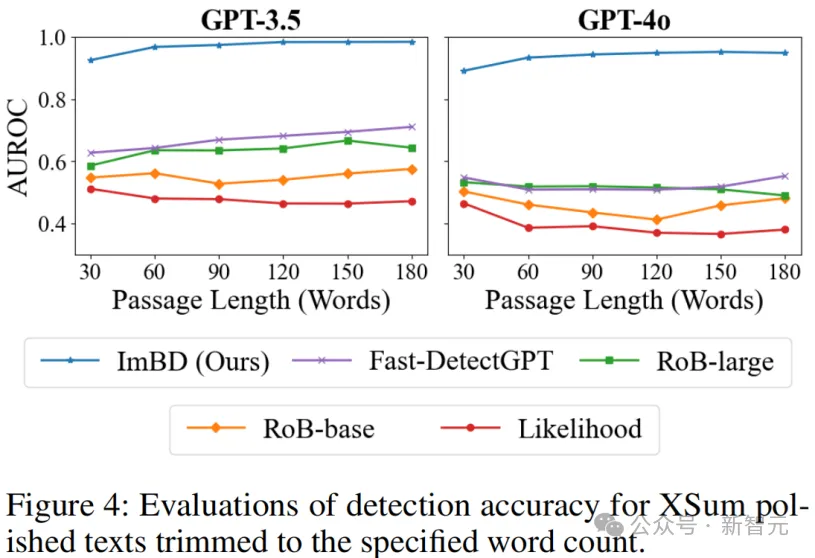

Study on text length sensitivity

As text length increases from 30 to 180 words, ImBD stays ahead of the other methods throughout, and its detection accuracy improves steadily with length, showing strong performance on longer texts.

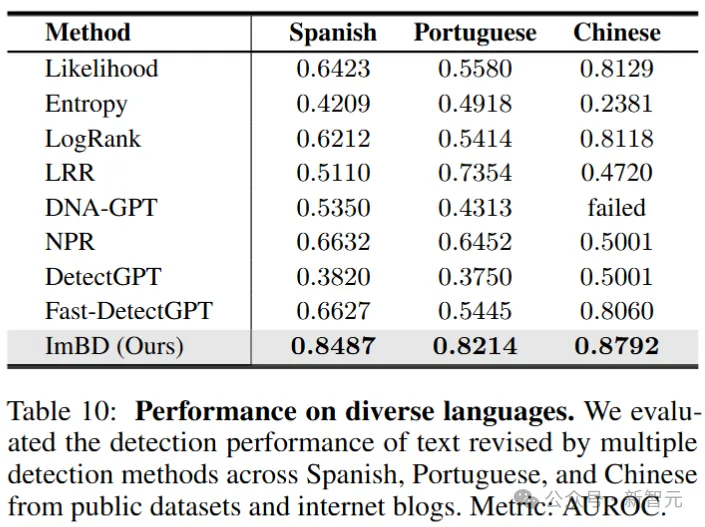

Multilingual detection capability assessment

ImBD generalizes well to multilingual text detection, achieving AUROC scores of 0.8487, 0.8214, and 0.8792 on Spanish, Portuguese, and Chinese, respectively, and outperforming baseline methods such as Fast-DetectGPT on all three. It also remained stable on Chinese, where some baseline methods (such as DNA-GPT [9]) failed.

Summary

This work proposes the Imitate Before Detect paradigm to detect machine-modified text, which centers on learning to imitate the LLM's writing style.

Specifically, the paper proposes a style preference optimization method that aligns the detector with machine writing style, and uses style-conditional probability curvature to quantify log-probability differences for effective detection. In extensive evaluations, ImBD shows significant performance improvements over current state-of-the-art methods.

About the authors

The main researchers of the paper are from Fudan University, South China University of Technology, Wuhan University, Fenz.AI, UCSD, UIUC and other institutions.

Chen Jiaqi is a master's student at Fudan University and a visiting student researcher at Stanford University. His research interests are computer vision and agents.

Zuchao Li is currently an associate researcher at the School of Computer Science at Wuhan University. He completed his PhD at Shanghai Jiao Tong University and was a special technology researcher at the National Institute of Information and Communication Technology (NICT) in Japan.

Zhang Jie is a researcher and doctoral supervisor at the Institute of Brain-like Intelligence Science and Technology, Fudan University. He received his PhD from the Hong Kong Polytechnic University in 2008, was nominated for the Hong Kong Young Scientist Award, and is an honorary member of the "Systems Modelling Analysis and Prediction" Laboratory at the University of Oxford.
