
#News · 2025-01-02

The authors are from Tsinghua University, ByteDance, the Institute of Automation of the Chinese Academy of Sciences, Shanghai Jiao Tong University, and the National University of Singapore. Authors: Li Xinghang, Li Peiyan, Liu Minghuan, Wang Dong, Liu Jirong, Kang Bingyi, Ma Xiao, Kong Tao, Zhang Hanbo and Liu Huaping. The first author, Li Xinghang, is a doctoral student in the Department of Computer Science at Tsinghua University. The corresponding authors are Kong Tao, a robotics researcher at ByteDance; Zhang Hanbo, a postdoctoral fellow at the National University of Singapore; and Liu Huaping, a professor of computer science at Tsinghua University.

In recent years, Vision-Language Models (VLMs) have shown great power in multimodal understanding and reasoning. Now the even cooler Vision-Language-Action models (VLAs) are here! By adding an action prediction module to a VLM, a VLA can not only "see" and "speak" but also "act", opening up a new path for robotics!
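The idea of "a VLM plus an action prediction module" can be illustrated with a minimal sketch. Everything here is an assumption made for illustration: `toy_vlm_backbone`, `ActionHead`, `make_action_head`, and `vla_predict` are hypothetical names, the backbone is a toy stand-in for a real pretrained VLM, and the 7-dimensional action (for example, an end-effector pose change plus a gripper command) is a common convention rather than something stated in this article.

```python
import random
from dataclasses import dataclass
from typing import List, Sequence

Vector = List[float]


def toy_vlm_backbone(image: Sequence[float], instruction: str, dim: int = 8) -> Vector:
    """Hypothetical stand-in for a pretrained VLM.

    Fuses a flattened, non-empty image and a text instruction into one
    feature vector. A real backbone would be a large pretrained network.
    """
    image_mean = sum(image) / len(image)
    # Crude text encoding: scale character codes into a small range.
    text_codes = [ord(ch) / 1000.0 for ch in instruction] or [0.0]
    return [image_mean + text_codes[i % len(text_codes)] for i in range(dim)]


@dataclass
class ActionHead:
    """Linear action prediction module placed on top of the VLM features."""

    weights: List[Vector]  # shape: (action_dim, feature_dim)
    bias: Vector           # shape: (action_dim,)

    def __call__(self, features: Vector) -> Vector:
        return [
            sum(w * f for w, f in zip(row, features)) + b
            for row, b in zip(self.weights, self.bias)
        ]


def make_action_head(feature_dim: int, action_dim: int = 7, seed: int = 0) -> ActionHead:
    """Create a randomly initialised head (untrained, for illustration only)."""
    rng = random.Random(seed)
    weights = [
        [rng.uniform(-0.1, 0.1) for _ in range(feature_dim)]
        for _ in range(action_dim)
    ]
    return ActionHead(weights=weights, bias=[0.0] * action_dim)


def vla_predict(image: Sequence[float], instruction: str, head: ActionHead) -> Vector:
    """VLA forward pass: the VLM encodes the inputs, the head maps features to an action."""
    feature_dim = len(head.weights[0])
    features = toy_vlm_backbone(image, instruction, dim=feature_dim)
    return head(features)


if __name__ == "__main__":
    head = make_action_head(feature_dim=8)
    action = vla_predict([0.1, 0.5, 0.9, 0.3], "pick up the carrot", head)
    print(len(action), action)
```

The point of the sketch is only the data flow: image and instruction go into the VLM, and a small extra module turns the resulting features into a robot action.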

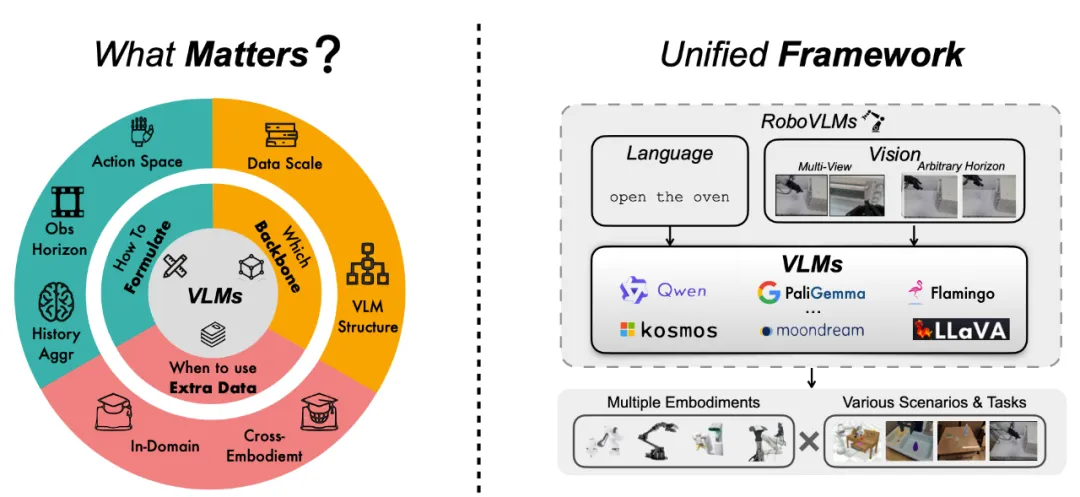

Although VLAs have performed impressively across a variety of tasks and scenarios, the field has taken many different routes in model design: which architecture to use, how to select data, how to adjust the training strategy, and so on. As a result, there is still no unified answer to the question of "how to build a good VLA". To clarify these questions, we ran a series of experiments and propose a new model, RoboVLMs.

This model is super simple, but its performance is seriously strong! It not only achieved high scores on three simulation tasks, but also delivered top marks in real-robot experiments. This article shows how RoboVLMs can unlock the possibilities of VLAs!

We have explored the VLA design in depth around four key questions, and here are the answers!

1. Why use a VLA model?

In short, our experiments show that a properly designed VLA can not only handle common manipulation tasks with ease, but also perform reliably in unfamiliar scenarios.

Top scores in simulation

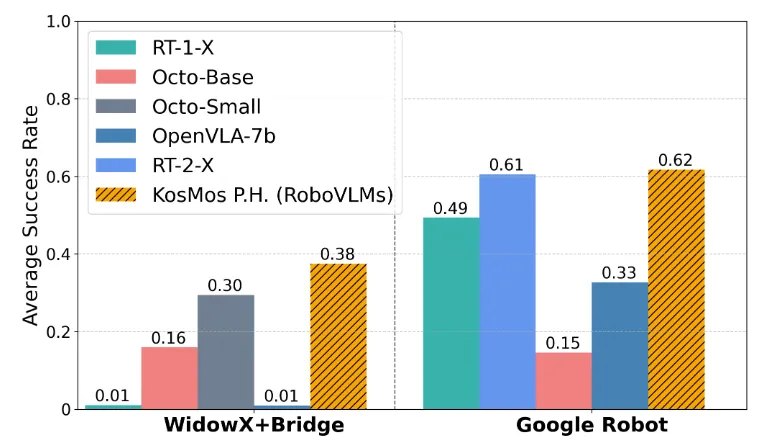

In the CALVIN and SimplerEnv environments, RoboVLMs won by a landslide:

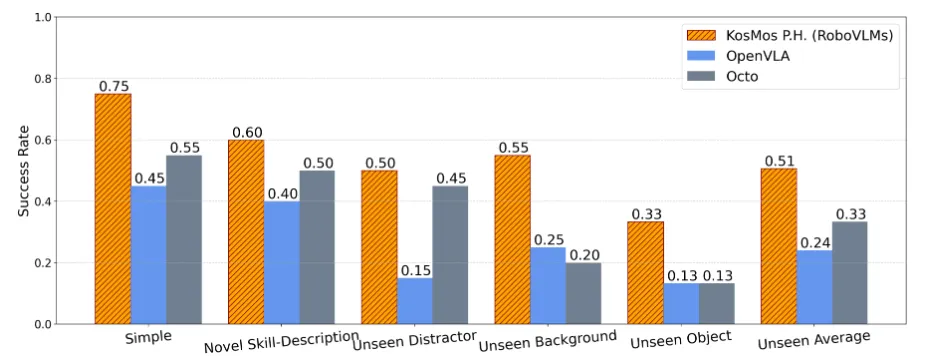

Figure 1. Evaluation results in the SimplerEnv simulation environment

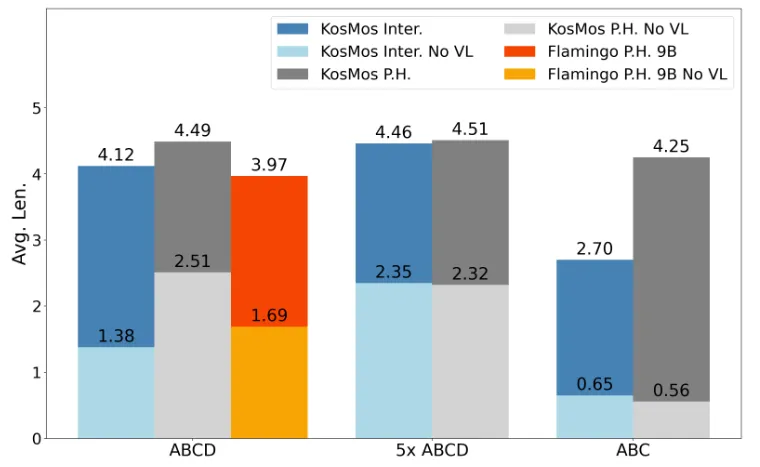

Figure 2. Results of the ablation experiment on vision-language pre-training

In real-world environments, RoboVLMs faced more complex challenges and still outperformed the other models. For example, in the fruit and vegetable sorting task, it not only identifies objects accurately but also copes with distractions in the environment and completes the sorting reliably. It handles both known scenes and new tasks with ease.

Figure 3. Evaluation results under real environment

RoboVLMs completes tasks well even with unseen skill descriptions, backgrounds, distractor objects, and target objects.

2. How to design a reliable VLA architecture?

There is a lot of subtlety here! For example:

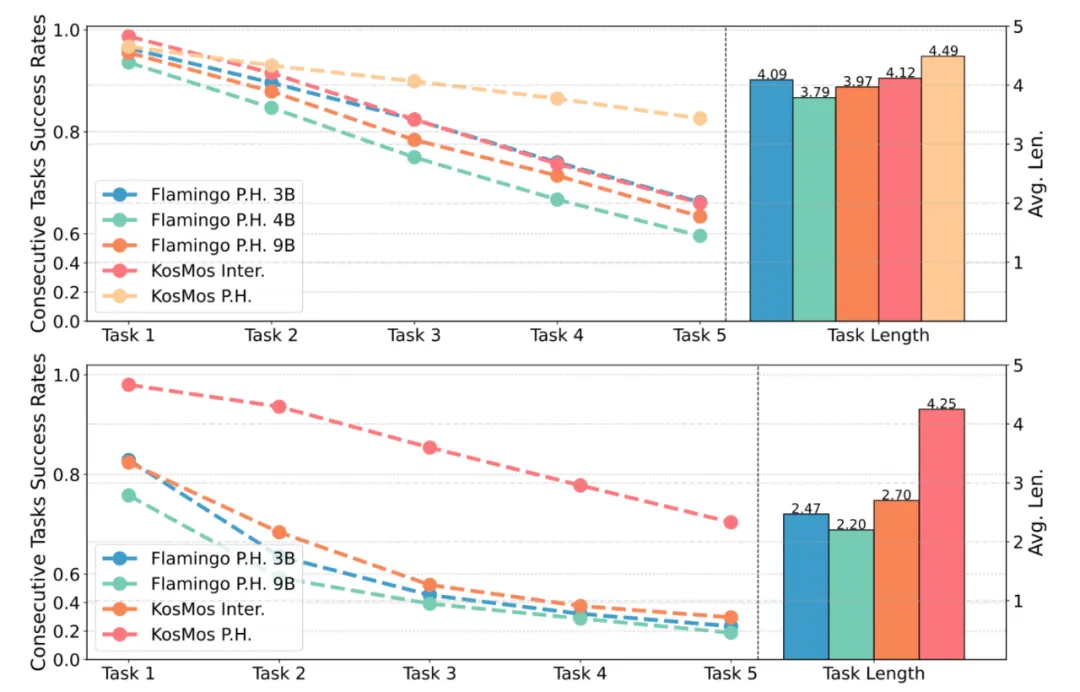

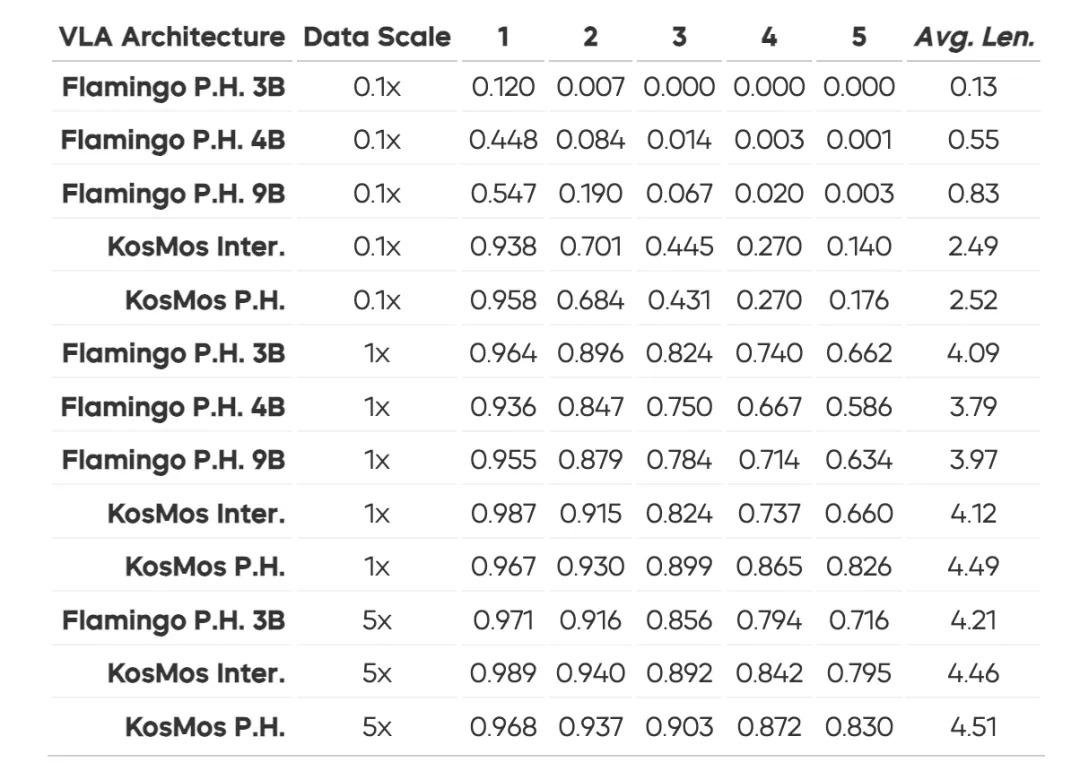

Through a series of experiments, we confirmed that these design choices are key to improving model performance and generalization. Further experiments showed that the best design is an architecture built on the KosMos base model, combined with a dedicated module for organizing historical information. This design generalizes very well on CALVIN, with only a slight performance drop in the zero-shot setting, whereas models with other designs degrade significantly. This shows directly how much the quality of the architecture matters for the model's generalization ability and efficiency.
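To make "a module for organizing historical information" more concrete, here is a minimal sketch of one possible form: a sliding window over per-step features that is fused into a single vector before action prediction. This is an illustration under stated assumptions, not the RoboVLMs implementation. The class name `HistoryPolicyHead`, the window size, and the exponential-decay weighting are all hypothetical, and a learned sequence model would normally take the place of the fixed weighting used here.

```python
from collections import deque
from typing import Deque, List


class HistoryPolicyHead:
    """Keeps the last `window` feature vectors and fuses them into one.

    Assumption: newer steps matter more, so a step that is `age` steps old
    gets weight decay**age. A trained model would learn this aggregation.
    """

    def __init__(self, window: int, decay: float = 0.5) -> None:
        if window < 1:
            raise ValueError("window must be at least 1")
        if not 0.0 < decay <= 1.0:
            raise ValueError("decay must be in (0, 1]")
        self.decay = decay
        self.buffer: Deque[List[float]] = deque(maxlen=window)

    def reset(self) -> None:
        """Clear the history at the start of a new episode."""
        self.buffer.clear()

    def step(self, features: List[float]) -> List[float]:
        """Add the current step's features and return the fused history."""
        self.buffer.append(list(features))
        n = len(self.buffer)
        # The oldest entry sits at index 0, the newest at index n - 1.
        weights = [self.decay ** (n - 1 - i) for i in range(n)]
        total = sum(weights)
        dim = len(features)
        return [
            sum(w * past[d] for w, past in zip(weights, self.buffer)) / total
            for d in range(dim)
        ]


if __name__ == "__main__":
    head = HistoryPolicyHead(window=2, decay=0.5)
    for obs in ([1.0], [4.0], [7.0]):
        print(head.step(obs))
```

Because the buffer is a `deque` with `maxlen`, the oldest step is dropped automatically once the window is full, so the fused vector always reflects only the most recent steps.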

3. Which base model is most suitable?

We compared eight current mainstream vision-language models (VLMs) and found that KosMos and PaliGemma clearly stood out, far outperforming the other models. They show a decisive advantage in both task-completion accuracy and generalization. This is mainly due to their solid and comprehensive vision-language pre-training, which gives the model strong prior knowledge and understanding.

This finding makes us even more convinced that choosing the right base model is a key step in getting a VLA off the ground! If you want a model to perform well on multimodal tasks, a thoroughly pre-trained VLM base with strong vision-language representations provides a clear advantage. Only once this foundation is laid can subsequent design and training reach their full potential.

4. When is the right time to add cross-embodiment data?

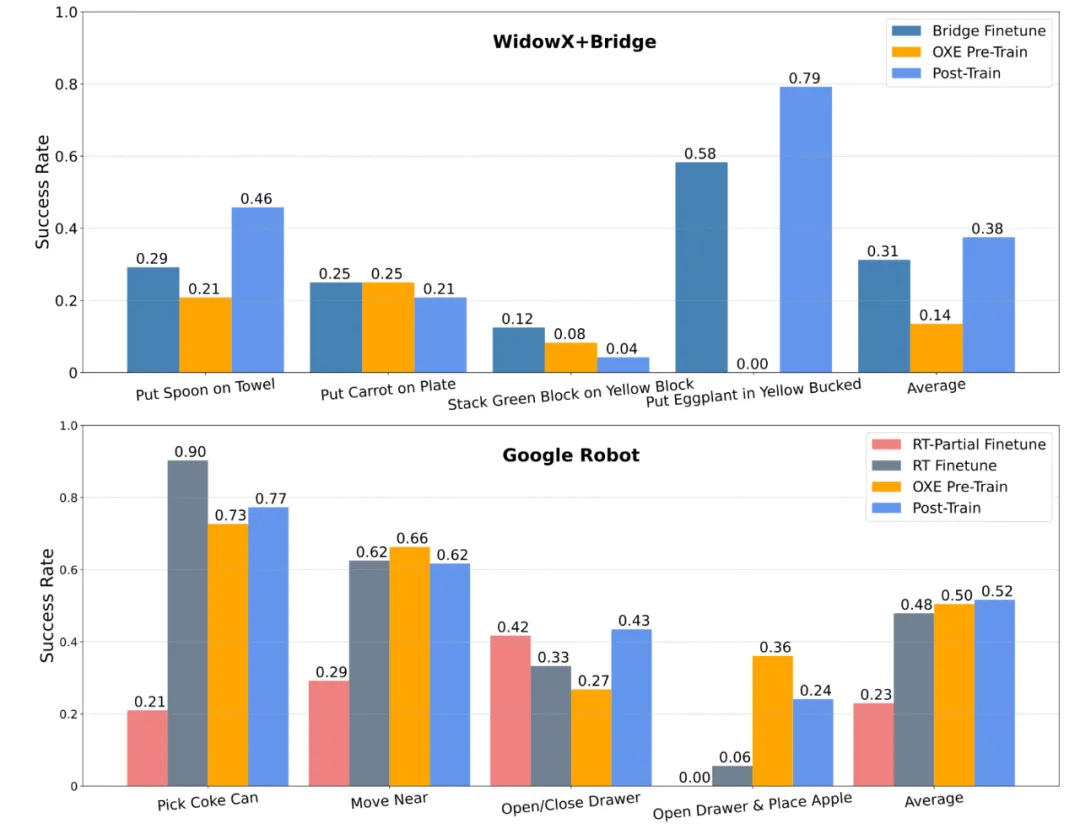

Experiments showed us the golden rule: introducing cross-embodiment data (data collected on other robot embodiments), such as the Open-X Embodiment dataset, during the pre-training phase significantly improves the model's robustness and its performance in few-shot scenarios. By contrast, directly mixing cross-embodiment data with fine-tuning data during training yields a less significant benefit. These conclusions point the way for future VLA training strategies.
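The difference between the two strategies is easiest to see as a data schedule. The sketch below is only an illustration under assumptions: the function names, step counts, mixing ratio, and episode labels are hypothetical, and the actual training recipe is not specified in this article. The first generator uses cross-embodiment data in a separate pre-training stage and then fine-tunes on in-domain data only; the second mixes both sources in a single stage.

```python
import random
from typing import Iterator, Sequence, Tuple

Batch = Tuple[str, str]  # (stage name, sampled episode id)


def pretrain_then_finetune(
    cross_embodiment: Sequence[str],
    in_domain: Sequence[str],
    pretrain_steps: int,
    finetune_steps: int,
    seed: int = 0,
) -> Iterator[Batch]:
    """Stage 1 samples only cross-embodiment data; stage 2 only in-domain data."""
    rng = random.Random(seed)
    for _ in range(pretrain_steps):
        yield ("pretrain", rng.choice(cross_embodiment))
    for _ in range(finetune_steps):
        yield ("finetune", rng.choice(in_domain))


def co_train(
    cross_embodiment: Sequence[str],
    in_domain: Sequence[str],
    steps: int,
    cross_ratio: float = 0.5,
    seed: int = 0,
) -> Iterator[Batch]:
    """Single stage that mixes both sources; cross_ratio is an assumed knob."""
    rng = random.Random(seed)
    for _ in range(steps):
        source = cross_embodiment if rng.random() < cross_ratio else in_domain
        yield ("mixed", rng.choice(source))


if __name__ == "__main__":
    # Hypothetical episode labels, used only to make the schedules visible.
    cross = ["cross_ep_0", "cross_ep_1", "cross_ep_2"]
    target = ["target_ep_0", "target_ep_1"]
    for batch in pretrain_then_finetune(cross, target, pretrain_steps=3, finetune_steps=2):
        print(batch)
    for batch in co_train(cross, target, steps=5):
        print(batch)
```

In a real pipeline each yielded item would be a batch fed to the optimizer. The sketch only shows when each data source is seen, which is the variable the experiments compare.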

Specifically, we tested different training strategies in two environments, WidowX+Bridge and Google Robot:

WidowX+Bridge environment:

Google Robot environment:

The experimental results further confirm that introducing cross-embodiment data in the pre-training stage not only improves generalization, but also makes the model perform better on few-shot and highly complex tasks.

Although RoboVLMs is already highly capable, the room for further development is even more exciting, and there is still much to explore.

The emergence of RoboVLMs validates the potential of vision-language-action models and brings robots one step closer to becoming our all-round assistants. In the future, they may not only understand language and vision, but also actually help us complete tedious and complex tasks. More surprises await!
