Home > Information > News

#News ·2025-01-02

The year 2024 has just passed is a year of great development of generative AI, we have witnessed the rise of OpenAI Sora, the rapid decline of large model service prices, and the catch-up of domestic open source large models. This all-round rapid development gives us full confidence in the large-scale application of new technologies in the next wave of AI.

For engineers and academics working in the field of AI, will they look at the year differently?

At the beginning of the New Year, there was a detailed summary of the development of large model technology over the past year. The author of this article is Simon Willison, a well-known independent researcher and open source creator in the UK.

Let's see what he has to say.

A lot is happening in the field of large language models in 2024. Here is a review of our research in the field over the past 12 months, along with the key themes and key moments I have tried to identify.

2023 summary here: https://simonwillison.net/2023/Dec/31/ai-in-2023/

In 2024, we can see:

In a December 2023 comment, I wrote about how we don't yet know how to build GPT-4 - the leading large model proposed by OpenAI was nearly a year old at the time, but no other AI lab could make a better model. Is there anything unique about OpenAI's approach?

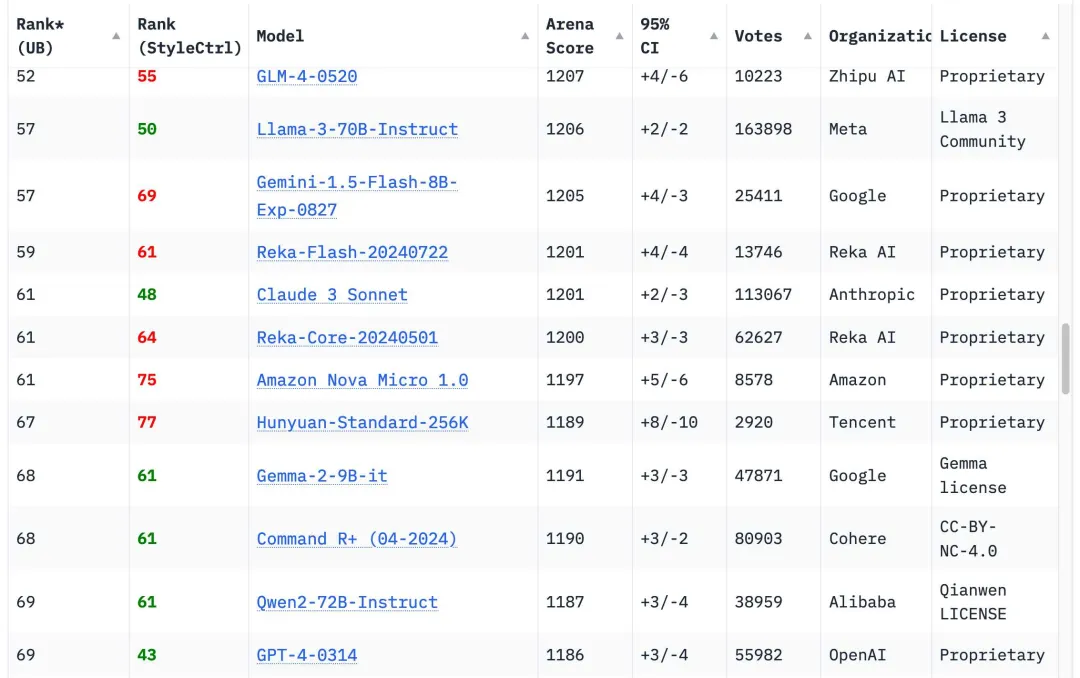

Thankfully, this situation has completely changed in the past 12 months. There are now 18 companies, institutions with a higher model ranking on the Chatbot Arena leaderboard than the original GPT-4 in March 2023 (GPT-4-0314 on the leaderboard) - a total of 70 models.

One of the earliest was Google's Gemini 1.5 Pro, which was released in February. In addition to producing GPT-4 level output, it introduced several entirely new features to the field - most notably its 1 million (and later 2 million) token input context length, and the ability to receive video.

I wrote about this at The time in The killer app of Gemini Pro 1.5 is video, which led to a brief appearance as a moderator at the Google I/O opening keynote in May.

The Gemini 1.5 Pro also showcases a key theme for 2024: increasing context length. Last year, most models received 4,096 or 8,192 tokens, but Claude 2.1 was a notable exception, accepting 200,000 tokens. Today, every serious provider has a 100,000-plus token model, and Google's Gemini family accepts up to 2 million tokens.

Longer inputs dramatically increase the range of problems that can be solved using LLM: you can now throw in an entire book and ask questions about its contents. But more importantly, you can input a lot of sample code to help the model solve the coding problem correctly. LLM use cases involving long inputs are more interesting to me than short prompts that purely rely on information already embedded in the model weights. Many of my tools are built using this pattern.

Back to the model that beat GPT-4: Anthropic's Claude 3 series was launched in March, and the Claude 3 Opus quickly became my favorite everyday big model. They upped the ante further in June with the launch of the Claude 3.5 Sonnet - and six months later, this model is still my favorite (although it got a major upgrade on October 22, confusingly retaining the same 3.5 version number). Anthropic fans have liked to call it Claude 3.6 ever since).

Then there are the rest of the big models. If you browse the Chatbot Arena leaderboard today (still the most useful place to get emotion-based model evaluations), you'll see that GPT-4-0314 has dropped to around 70th place. The 18 organizations with high scoring models are Google, OpenAI, Ali, Anthropic, Meta, Reka AI, 01 AI, Amazon, Cohere, DeepSeek, Nvidia, Mistral, NexusFlow, Zhipu AI, xAI, AI21 Labs, Princeton and Tencent.

My personal laptop is a 2023 64GB M2 MacBook Pro. It's a powerful machine, but it's also almost two years old - and crucially, the same laptop I've been using since March 2023, when I first ran LLM locally on my computer.

Last March, the same laptop could almost run GPT-3 class models, and now it's running multiple GPT-4 class models! Some of my notes on this:

Qwen2.5-codec-32b is a well-codeced LLM that runs on my Mac, and in November I talked about QWEN2.5-codec-32b - the Apache 2.0 licensing model.

I can now run the GPT-4 class model on my laptop, running Meta's Llama 3.3 70B (released in December).

It's still surprising to me. We would have taken it for granted that a model with GPT-4 functionality and output quality would require a data center grade server with one or more Gpus worth $40,000 +.

In fact, these models take up 64GB of my memory space, so I don't run them very often - when I do, I can't do anything else.

The fact that they can run at all is a testament to the incredible training and reasoning performance improvements that the field of AI has made over the past year. As it turns out, there's a lot of low-hanging fruit when it comes to model efficiency. I expect more to come.

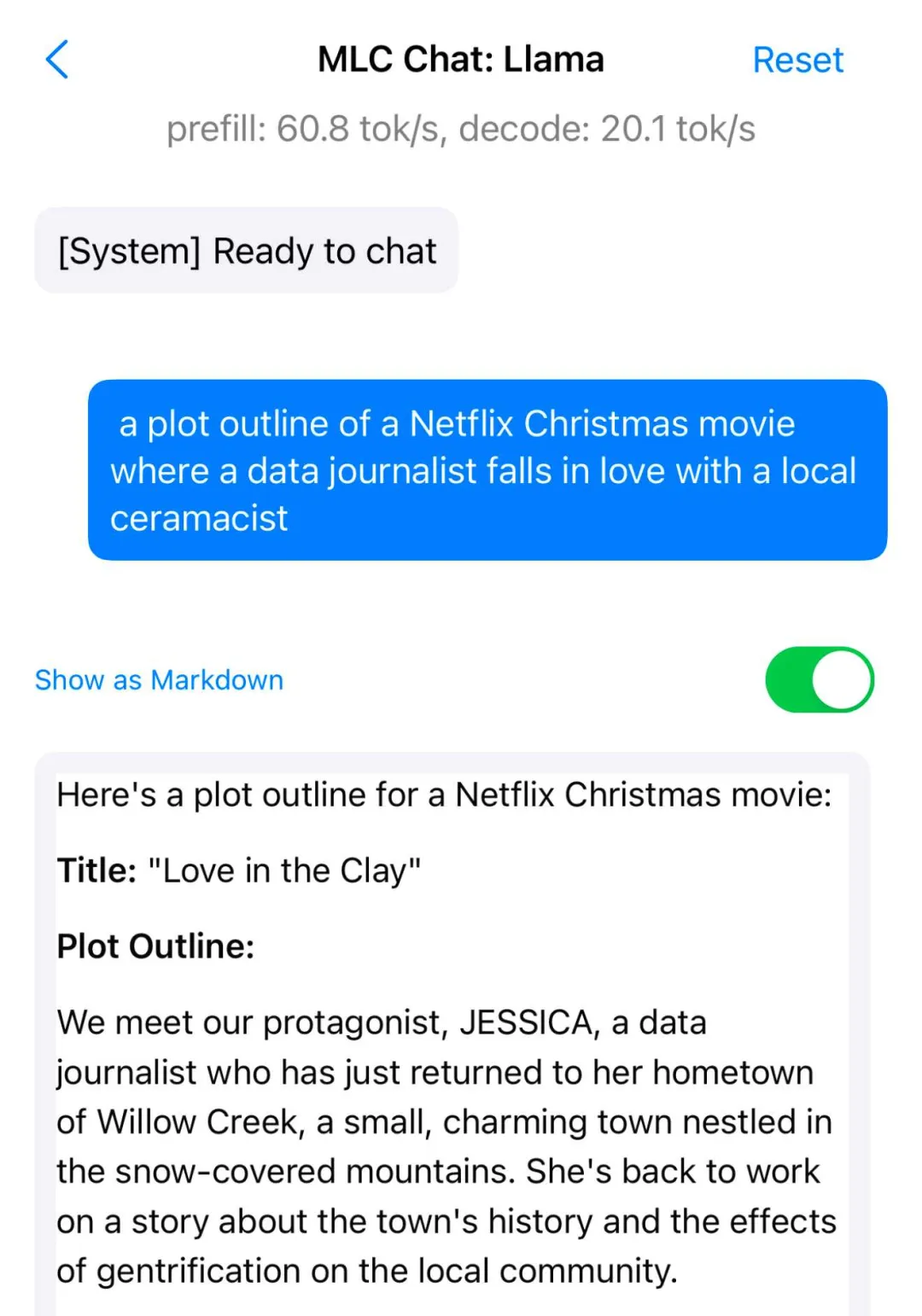

Meta's Llama 3.2 model deserves special mention. They may not be GPT-4 levels, but at 1B and 3B sizes, their performance far exceeds the parameter count level. I used the free MLC Chat iOS app to run Llama 3.3B on my iPhone, which is a surprisingly functional model for its tiny (<2GB) number of parameters. Try launching it and ask it for a "plot outline for a Netflix Christmas movie in which a data journalist falls in love with a local ceramist." Here's what I got, and 20 tokens per second is pretty impressive:

It's plain and ordinary, but my phone can now recommend plain and ordinary Christmas movies to Netflix!

Over the past 12 months, the cost of running tokens through the LLM of top hosting services has dropped dramatically.

In December 2023 (this is a snapshot of the Internet from OpenAI's pricing page), OpenAI charged $30 /mTok for GPT-4 and $10 / MTOK for the then newly launched GPT-4 Turbo. The GPT-3.5 Turbo is charged $1 /mTok.

Today $30 /mTok gets you OpenAI's most expensive model, o1. The GPT-4o costs $2.50 (12 times cheaper than the GPT-4) and the GPT-4o mini costs $0.15 per mTok - nearly 7 times cheaper than the GPT-3.5 and much more powerful.

Other model providers charge even less. Anthropic's Claude 3 Haiku (from March, but still their cheapest model) is $0.25 /mTok. Google's Gemini 1.5 Flash is $0.075 /mTok, while their Gemini 1.5 Flash 8B is $0.0375 /mTok - 27 times cheaper than last year's GPT-3.5 Turbo.

I've been tracking these price changes under my llm pricing tag.

These price declines are driven by two factors: increased competition and improved efficiency. For everyone concerned about the environmental impact of LLMS, efficiency really matters. These price reductions are directly related to the amount of energy consumed by the operating tips.

There is still a lot to worry about about the environmental impact of large AI data center construction, but many of the concerns about the energy costs of a single tip are no longer credible.

Here's an interesting simple calculation: How much would it cost to generate short descriptions for every 68,000 photos in my personal photo library using Google's cheapest model, the Gemini 1.5 Flash 8B (released in October)?

Each photo requires 260 input tokens and about 100 output tokens.

The total cost of processing 68,000 images was $1.68. It was so cheap that I had to calculate it three times to make sure I got it right.

How good are these descriptions? This is what I get from a single command:

Processing this butterfly photo taken at the California Academy of Sciences:

Output content:

A platter, probably a hummingbird or butterfly feeder, is red. Orange fruit slices are visible inside the tray. There are two butterflies in the feeder, a dark brown/black butterfly with white/cream markings. Another large brown butterfly with light brown, beige and black markings, including distinct eye spots. The larger brown butterflies appear to be eating fruit.

260 input tokens, 92 output tokens. The cost is about 0.0024 cents (less than a 400th of a cent). Increased efficiency and lower prices are my favorite 2024 trends. I wanted to get the utility of the LLM at very low energy costs, and it looks like that's what we got.

The butterfly example above illustrates another key trend for 2024: Multimodal LLM is on the rise.

A year ago, the most notable example of this was GPT-4 Vision, released at OpenAI's DevDay in November 2023. Google's multimodal Gemini 1.0 was released on December 7, 2023, so it's also (just right) in the 2023 window.

In 2024, nearly every major model vendor has released multimodal models. We saw Anthropic's Claude 3 series in March, Gemini 1.5 Pro (image, audio and video) in April, Then in September the family brought Qwen2-VL and Mistral's Pixtral 12B and Meta's Llama 3.2 11B and 90B vision models.

In October, we got audio input and output from OpenAI, in November we got SmolVLM from Hugging Face, and in December we got image and video models from Amazon Nova.

In October, I also upgraded my LLM CLI tool to support multimodal models via attachments. It now has a range of plugins for different visual models.

I think people who complain about the slow pace of LLM improvement tend to overlook the huge advances in these multimodal models. Being able to run prompts against images (as well as audio and video) is a fascinating new way to apply these models.

The emerging audio and real-time video modes deserve special attention.

Chat with ChatGPT first appeared in September 2023, but it wasn't really implemented yet: OpenAI was going to use its Whisper speech-to-text model and a new text-to-speech model called: Tps-1) to talk to ChatGPT, but the actual model can only see text.

On May 13, the OpenAI Spring conference launched GPT-4o. The multimodal model GPT-4o (o stands for "omni", meaning omnipotent) directly "understands" everything you say - taking audio input and producing incredibly realistic speech without the need for TTS or STT models to translate it.

The voice in this demo bears a striking resemblance to Scarlett Johansson...... After Scarlett's complaint, the voice Skye never made its debut in any official product.

However, the release of the eye-catching GPT-4o advanced voice function in the product has been delayed repeatedly, which has caused a lot of discussion.

When ChatGPT's Advanced Voice mode finally went live in August-September 2024, I was truly amazed.

I often use it while walking my dog, with a more anthropomorphic tone to make AI-generated content sound more vivid. It's also fun to experiment with OpenAI's audio API.

Even more interesting: Advanced speech modes can mimic accents! For example, I asked it to "pretend you're a California brown pelican with a heavy Russian accent, but only talk to me in Spanish" :

OpenAI isn't the only company working on a multimodal audio model: Google's Gemini can also take voice input, and the Gemini app can now talk like ChatGPT. Amazon also pre-announced that their Amazon Nova will have a voice mode, but it won't be available until the first quarter of 2025.

The NotebookLM, released by Google in September, takes audio output to a new level - it can generate super-realistic "podcaster" conversations, no matter what you give it. Later they added custom commands, and of course I turned the hosts into pelicans without saying a word:

The latest twist, which came in December (which is a really busy month), is live video. ChatGPT's voice mode now lets you share camera footage directly with the model and talk about what you're seeing in real time. Google Gemini also launched a similar preview feature, this time finally before ChatGPT release.

These features have only been available for a few weeks, and I don't think their full impact has yet been felt. If you haven't tried it yet, really try it!

Both Gemini and OpenAI provide apis for these functions. OpenAI started with the more difficult WebSocket API, but in December they introduced the new WebRTC API, which is much easier to use. Now, it's super easy to build web apps that talk to users by voice.

This will be possible with GPT-4 in 2023, but it won't really be worth it until 2024.

We've long known that large language models have amazing power when it comes to writing code. If you give them the right prompts, they can build you a complete interactive application using HTML, CSS, JavaScript (and tools like React if you configure your environment) - often with just one prompt.

Anthropic, in the announcement of the release of Claude 3.5 Sonnet, introduces a breakthrough new feature: Claude Artifacts. This feature didn't get much attention at first, as it was only written about in the announcement.

With Artifacts, Claude can write real-time interactive applications for you and then let you use them directly in the Claude interface.

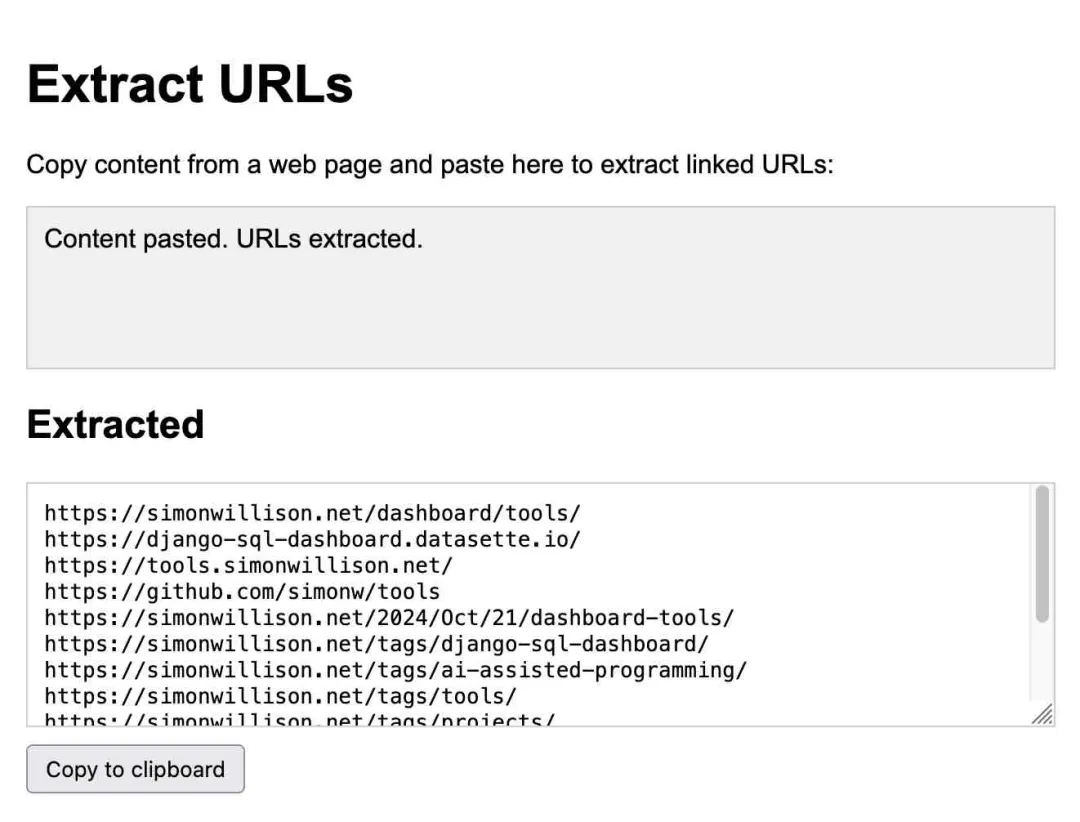

This is an application I built with Claude to extract web urls:

Now Claude Artifacts have become my artifacts. Many other teams have developed similar systems, for example, GitHub launched their version in October: GitHub Spark. Mistral Chat added a similar feature called Canvas in November.

Steve Krause from Val Town built a version based on Cerebras to show how a large language model that processes 2,000 tokens per second can iteratively update an application in less than a second.

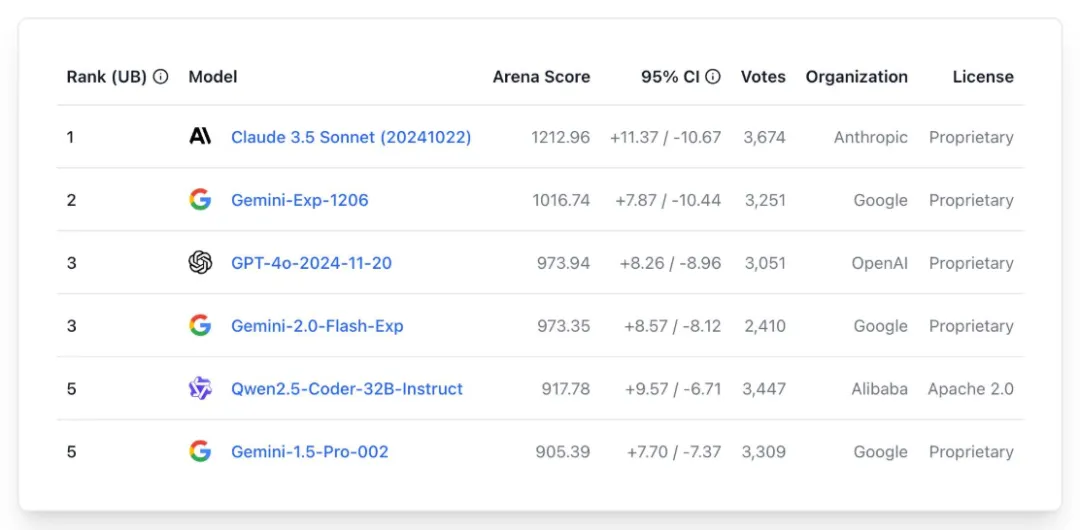

In December, the Chatbot Arena team launched a new leaderboard for such features, allowing users to build the same interactive app with two different models and then vote on the results.

Top six on the list

It would be hard to find more compelling evidence that this feature is now a common feature that can be effectively implemented on all major models.

I'm working on a similar feature myself for the Datasette project, with the goal of enabling users to build and iterate on custom widgets and data visualizations for their own data through prompts. I also found a similar pattern for writing one-time Python programs through uv.

This kind of cup-driven custom interface is so powerful and easy to build (once you get the tricky details out of the browser sandbox) that I expect a wave of products to roll out similar features in 2025.

For a few months this year, the three best models available - GPT-4o, Claude 3.5 Sonnet, and Gemini 1.5 Pro - were all available for free to most of the world.

OpenAI made GPT-4o free to all users in May, while Claude 3.5 Sonnet has been free since its release in June.

This is a significant change, as in the previous year, free users were mainly limited to GPT-3.5 models, which led to new users forming a very inaccurate understanding of the actual capabilities of large language models.

That era seems to be over, though, and likely permanently, marked by OpenAI's launch of ChatGPT Pro. This $200 per month subscription service is the only way to access their most powerful new model (o1 Pro).

Since the trick behind the o1 series (and future "o Series" models) is to invest more computing time to get better results, I don't think the days of free access to top-of-the-line models are coming back.

To be honest, the term AI Agents gives me a headache. It does not have a uniform, clear and widely accepted definition. What's worse, the people who use the term never seem to admit it.

If you tell me you're developing AI Agents, that's basically saying nothing. Unless I can read minds, I have no idea, there are dozens of definitions of AI Agents, which one are you building?

There are two main types of AI Agents developers I see: those who think that AI Agents are obviously things that do things for you, like travel agents; Another group imagines equipping large language models with tools that allow them to autonomously drive and perform tasks.

The word "autonomy" is also often mentioned, but again it is not clear what it means.

(I collected 211 definitions on Twitter a few months ago, and I had gemini-exp-1206 try to summarize them.)

No matter what the term actually means, AI Agents always have a sense of "coming true."

Terminology aside, I'm skeptical of their usefulness for the same old problem: large language models are easily "gullible" and will take whatever you tell them.

This raises a key question: How useful can a decision aid system be if it can't even tell the difference between true and false? Whether it is a travel consultant, life assistant, or research tool, it will be greatly compromised by this flaw.

A recent example is readily available: Just the other day, Google search made a big mistake. It presents a fictional plot of "Full House of Magic 2" on a fan creation website as if it were a real movie.

Prompt injection is the inevitable result of this credulity. We've been talking about this since September 2022, but there's been very little progress on solving the problem in 2024.

I'm beginning to think that the most popular concept of AI Agents actually relies on the implementation of general AI. Making a model resistant to credulity is a difficult task.

Amanda Askell of Anthropic, who is responsible for Claude's character:

The boring but crucial secret behind system prompts is test-driven development. Instead of writing a system prompt and then figuring out how to test it, you write the test and then find a system prompt that passes those tests.

The experience of 2024 tells us: What are the most important core competencies in the application of large language models? It is a set of perfect automatic evaluation system.

Why do you say that? Because with it, you can: be one step ahead, adopt new models, accelerate iterations, continuously optimize products, and ensure that features are both reliable and useful.

Malte Ubl of Vercel says:

When @v0 first came out, we were very worried about protecting hints with all sorts of complex pre-processing and post-processing operations.

So we completely changed our thinking and gave it full play. But it soon became clear: no evaluation criteria, no model guidance, and no user experience tips, just like getting an ASML machine without a manual - no matter how advanced, there is no way to start.

I'm still trying to figure out what works best for me. The importance of evaluation has been recognized, but there is still a lack of systematic guidance on how to do it well. I've been tracking this progress through assessment tags. The "Pelican Bike" SVG benchmark I'm using now is a long way from those mature evaluation kits.

Last year, I was stymied by the lack of Linux/Windows machines equipped with NVIDIA Gpus.

Configurationally, the 64GB Mac should have been ideal for running AI models - the CPU and GPU shared memory were designed to be perfect.

But the reality is harsh: when the current AI models are released, they are basically launched in the form of model weights and development libraries, and these are almost exclusively suitable for NVIDIA CUDA platform.

The llama.cpp ecosystem helped a lot here, but the real breakthrough was Apple's MLX library, an "array framework designed for Apple Silicon." It's really good.

Apple's mlx-lm Python supports running various MLX-compatible models on my Mac, and the performance is excellent. mlx-community on Hugging Face offers over 1,000 models that have been converted into the necessary format.

The excellent and rapidly growing mlx-vlm project developed by Prince Canuma also brings visual large language models to Apple Silicon. I recently used it to run Qwen's QvQ.

While MLX is a game changer, Apple's own Apple Intelligence features have mostly been disappointing. I was really looking forward to Apple Intelligence, and in my predictions, I thought Apple would focus on making applications that protect user privacy and create large language models that are clear and understandable to users.

Now that these features have been rolled out, they are pretty poor. As a heavy user of large language models, I know what these models can do, and Apple's large language model feature only provides a pale imitation. What we get is a summary of notifications that distort news headlines and a completely useless writing assistant tool, but the emoji generator is interesting.

The most interesting development in the last quarter of 2024 was the emergence of a new type of LLM, represented by OpenAI's o1 model.

To understand these models, think of them as an extension of the "chain of thought cue" technique. This technique first appeared in the May 2022 paper "Large Language Models are Zero-Shot Reasoners."

The gist of this technique is that if you ask a model to "think out loud" while solving a problem, it will often get results that would otherwise be unexpected.

o1 further integrates this process into the model itself. The details are a bit vague: the o1 model spends some "inference tokens" thinking about the problem (the user can't see the process, but the ChatGPT interface displays a summary) and then gives a final answer.

The biggest innovation here is that it opens up a new way to scale the model: instead of simply increasing the amount of computation required during training to improve model performance, the model invests more computational resources in reasoning to solve harder problems.

o3, the successor to o1, was released on December 20, and o3 has achieved amazing results on the ARC-AGI benchmark. However, judging from the huge inference cost of o3, it may have spent more than $1 million in computing costs!

o3 is expected to launch in January. But I feel that few people's practical problems require such a large computational overhead, and o3 also marks a substantial step forward for LLM architectures in dealing with complex problems.

OpenAI is not a one-man show in this space. Google also launched their first such product on December 19: gemini-2.0-flash-thinking-exp.

Alibaba's Qwen team released their QwQ model on November 28, which I can run on my own computer. They released another visual inference model called QvQ on December 24, which I also ran locally.

DeepSeek made the Deepseek-R1-Lite-Preview model available for trial via their chat interface on November 20.

For an in-depth look at inference extension, I recommend reading Arvind Narayanan and Sayash Kapoor's Is AI progress slowing down? This article.

Anthropic and Meta aren't active yet, but I bet they're also developing their own inference extension models. Meta published a related paper in December, "Training Large Language Models to Reason in a Continuous Latent Space."

Not quite, but almost. That's a good headline to catch your eye.

The big news at the end of the year was the release of DeepSeek v3, which was put on a Hugging Face on Christmas Day without even a README file, and released documents and papers the next day.

DeepSeek v3 is a massive 685B parameter model, one of the largest publicly licensed models available, and much larger than Meta's largest Llama family model, Llama 3.1 405B.

Benchmark results show that it is comparable to Claude 3.5 Sonnet. The Vibe benchmark, also known as the Chatbot Arena, currently ranks it seventh, behind Gemini 2.0 and OpenAI 4o/o1 models. This is the highest-ranked public authorization model to date.

What's really impressive about DeepSeek v3 is its training cost. The model was trained in 2,788,000 H800 GPU hours at an estimated cost of $5,576,000. Llama 3.1 405B trained 30,840,000 GPU hours, 11 times more than DeepSeek v3, but the model's benchmark performance was slightly inferior.

The U.S. regulations for exporting Gpus to China seem to have inspired some very effective training optimizations.

A welcome result of the improved efficiency of models (both hosted and those I can run locally) is that the energy consumption and environmental impact of running Prompt has been greatly reduced over the past few years.

OpenAI also charges 100 times less for its own prompter compared to the GPT-3 era. I am reliably informed that neither Google Gemini nor Amazon Nova (two of the cheapest model providers) run the prompter at a loss.

I think this means that, as individual users, we don't have to feel guilty at all about the energy consumed by the vast majority of prompts. The impact is likely to be minimal compared to driving down the street or even watching videos on YouTube.

The same goes for training. DeepSeek v3 costs less than $6 million to train, which is a very good sign that training costs can and should continue to fall.

For less efficient models, I think it's very useful to compare their energy usage to commercial flights. The cost of the largest Llama 3 model is about the same as a single-digit full passenger flight from New York to London. This is certainly not nothing, but once trained, the model could be used by millions of people without additional training costs.

The bigger problem is that the infrastructure needed to build these models in the future will be under intense competitive pressure.

Companies like Google, Meta, Microsoft, and Amazon are all spending billions of dollars building new data centers, with huge impacts on the grid and the environment. There is even talk of building new nuclear power plants, but that would take decades.

Is this infrastructure necessary? DeepSeek v3's $6 million training cost and the continued plunge in LLM prices may hint at this. But do you wish you were an executive at a big tech company who insisted on not building that infrastructure even after a few years of proving yourself wrong?

An interesting point of comparison is the way railroads were laid around the world in the 19th century. Building these railways required huge investments and had a huge environmental impact, and many of the lines built proved unnecessary, sometimes with multiple lines from different companies serving the exact same route.

The resulting bubble led to several financial crashes, see the Panic of 1873, the Panic of 1893, the Panic of 1901, and the British railway mania in Wikipedia. They have left us with a lot of useful infrastructure, as well as a lot of bankruptcy and environmental damage.

2024 is the year the word "slop" became a term of art. I wrote a post back in May that expanded on this tweet from @deepfates:

Watch in real time how "slop" became a term of art. Just as "spam" became a term for unwanted email, "swill" will enter the dictionary as a term for unwanted content generated by artificial intelligence.

I've expanded this definition a little bit:

"Slop" refers to unsolicited and uncensored content generated by artificial intelligence.

Finally, both The Guardian and the New York Times quoted me on swill.

Here's what I said in The New York Times:

Society needs concise ways to talk about modern AI, both positive and negative. "Ignore that email, it's spam" and "Ignore that article, it's slop" are both useful lessons.

I like the word "slop" because it succinctly encapsulates one way we shouldn't use generative AI.

'slop' was even named Oxford's Word of the Year for 2024, but lost out to 'brain rot'.

The concept of "model collapse" seems surprisingly entrenched in the public consciousness. The phenomenon was first described in May 2023 in the article "The Curse of Recursion: Training on Generated Data Makes Models Forget." In July 2024, Nature repeated this phenomenon with an even bolder headline: AI models crash when trained on recursively generated data.

The idea is seductive: as the AI-generated "slop" floods the Internet, the model itself will degrade, absorbing its own output in a way that leads to its inevitable demise.

That clearly did not happen. Instead, we're seeing AI LABS increasingly trained on synthetic content - consciously creating artificial data to help guide their models down the right path.

One of the best descriptions of this I've seen comes from the Phi-4 technical report, which includes the following:

Synthetic data is becoming more and more common as an important part of pre-training, and the Phi series models have always emphasized the importance of synthetic data. Rather than synthetic data being a cheap alternative to organic data, synthetic data has several immediate advantages over organic data.

Structured learning and progressive learning. In organic datasets, the relationships between tokens are often complex and indirect. Many inference steps may be required to associate the current token with the next token, making it difficult for the model to effectively learn the prediction of the next token. In contrast, each token generated by a language model is, as the name suggests, predicted by the preceding token, which makes it easier for the model to follow the resulting inference pattern.

Another common technique is to use larger models to create training data for smaller, less expensive models, and this technique is being used by more and more LABS. DeepSeek v3 uses "inference" data created by DeepSeek-R1. Meta's Llama 3.370B fine-tuning uses more than 25 million synthetically generated examples.

Carefully designing the training data that goes into the LLM seems to be the key to creating these models. Gone are the days of grabbing all the data from the web and putting it into training runs indiscriminately.

I keep stressing that LLMS are powerful user tools, they are chainsaws disguised as kitchen knives. They seem simple and easy to use, how hard can it be to input information into a chatbot? But actually, to make the most of them and avoid their many pitfalls, you need a deep understanding and a wealth of experience.

If the problem is even worse in 2024, the good news is that we've built computer systems that can talk to humans in human language, and they'll answer your questions, and usually get them right. It depends on the content of the question, how it is asked, and whether the question is accurately reflected in the unrecorded secret training set.

The number of available systems has exploded. Different systems have different tools that can be used to solve your problems, such as Python, JavaScript, web search, image generation, and even database queries. So you'd better understand what these tools are, what they can do, and how to tell if an LLM uses them.

Did you know that ChatGPT now has two completely different ways of running Python?

Want to build a Claude artifact that talks to an external API? You'd better understand the CSP and CORS HTTP headers first.

The model may have become more powerful, but most of the limitations have not changed. OpenAI's o1 may finally be able to compute most of the R in "Strawberry," but its capabilities are still limited by its nature as an LLM, and by the harnesses it runs on. O1 can't search the web or use Code Interpreter, but GPT-4o can - both in the same ChatGPT UI. (o1 will pretend to do these things if you ask, which is a return to the URL illusion bug in early 2023).

What can we do about it? Almost nothing.

Most users are thrown into the deep end. The default LLM chat UI is like throwing brand new computer users into a Linux terminal and expecting them to handle everything on their own.

At the same time, inaccurate mental models that end users form about how these devices work and function are becoming more common. I've seen a lot of examples of people trying to win an argument with screenshots of ChatGPT - a ridiculous proposition because these models are inherently unreliable, plus you can make them say anything if you prompt them correctly.

There is also a flip side to this: many well-informed people have given up on LLM altogether because they don't understand how anyone could benefit from a tool that has so many flaws. The key to getting the most out of LLM is learning how to use this unreliable and incredibly powerful technology. This is a skill that is definitely not obvious!

There's a lot to be said for useful educational content here, but we need to do it better than outsourcing it all to the AI scammers who bombard Twitter.

By now, most people have heard of ChatGPT. How many of you have heard of Claude?

There is a huge knowledge gap between the people who actively follow this content and the 99% who don't.

The speed of change does not help. Just in the last month, we've seen the proliferation of live interfaces where you can point your phone's camera at something and talk about it in your voice...... You can also choose to have him pretend to be Santa Claus. Most self-certified NerDs haven't even tried this yet.

Given the ongoing and potential impact of this technology on society, I don't think it's healthy for this gap to exist. I would like to see more efforts to improve the situation.

A lot of people really hate this stuff. In some of the places I hang out (Mastodon, Bluesky, Lobste.rs, and even the occasional Hacker News), even suggesting that "LLM is useful" is enough to start a big fight.

I understand that there are many reasons not to like this technology: environmental impact, training data (lack of) ethics, lack of reliability, negative application, potential impact on people's jobs.

The LLM definitely deserves criticism. We need to discuss these issues, find ways to mitigate them, and help people learn how to use these tools responsibly, so that the positive applications outweigh the negative effects.

I like people who are skeptical about these things. For more than two years, the sound of hype has been deafening, with a large number of "fake and shoddy goods" and misinformation filled with it. A lot of bad decisions have been made based on that hype. Daring to criticize is a virtue.

If we want people with decision-making power to make the right decisions about how to apply these tools, we first need to acknowledge that there are good applications, and then help explain how to put those applications into practice, while avoiding many non-practical pitfalls.

(If you still think there are no good apps at all, then I don't know why you finished reading this article!) .

I think telling people that the whole field is an environmentally disastrous plagiarism machine that keeps making stuff up, no matter how much truth that represents, is a disservice to those people. There is real value here, but realizing that value is not intuitive and requires guidance. Those of us who know this stuff have a responsibility to help others figure it out.

2025-02-17

2025-02-14

2025-02-13

friend link

400-000-0000

立即获取方案或咨询

top