#News ·2025-01-02

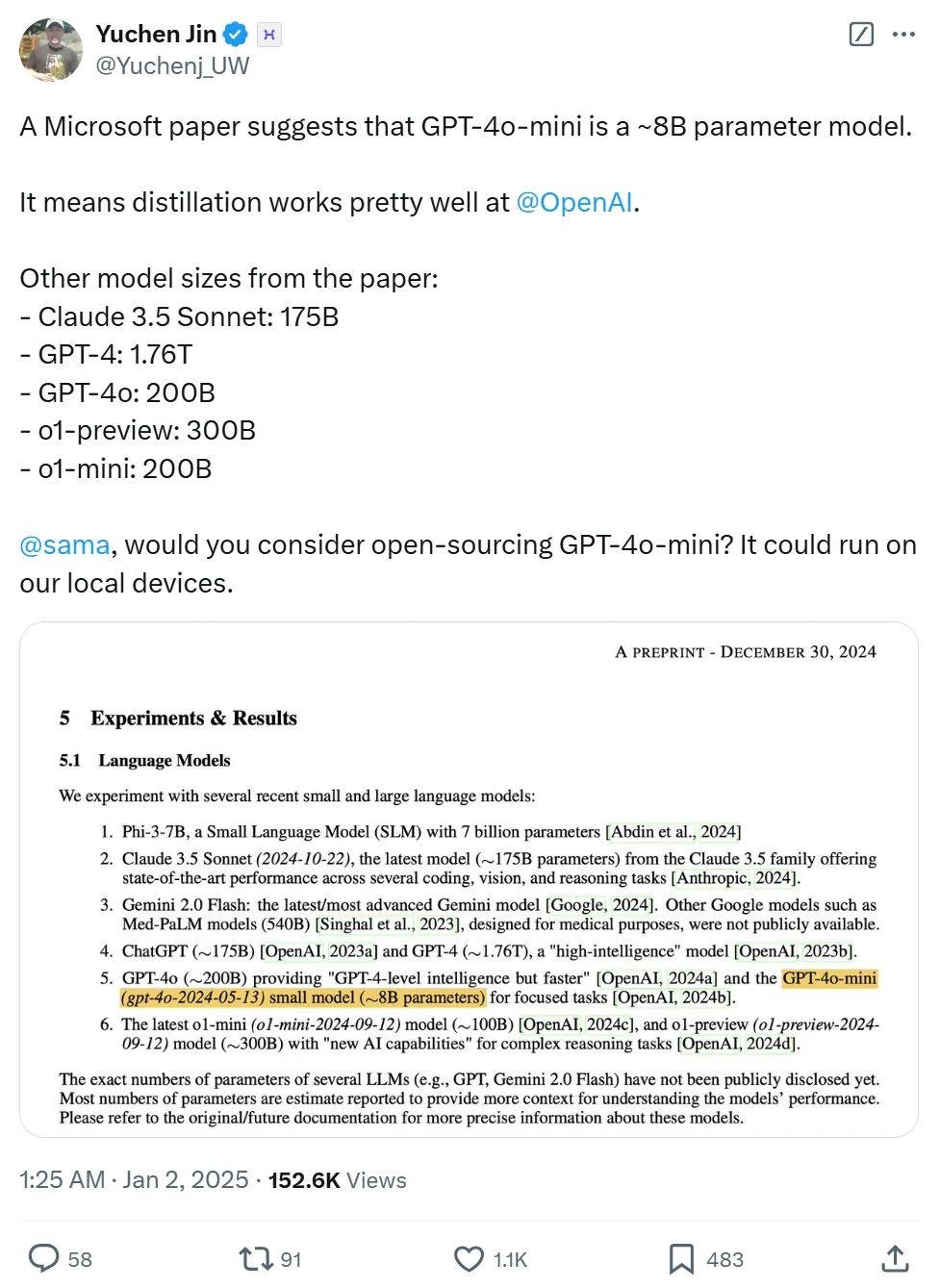

A post on X is drawing wide attention, and its view count is climbing fast. Is GPT-4o-mini, released by OpenAI, really a model with only 8B parameters?

Photo source: https://x.com/Yuchenj_UW/status/1874507299303379428

Here is the background. A few days ago, Microsoft and the University of Washington jointly published a paper titled "MEDEC: A Benchmark for Medical Error Detection and Correction in Clinical Notes."

Address: https://arxiv.org/pdf/2412.19260

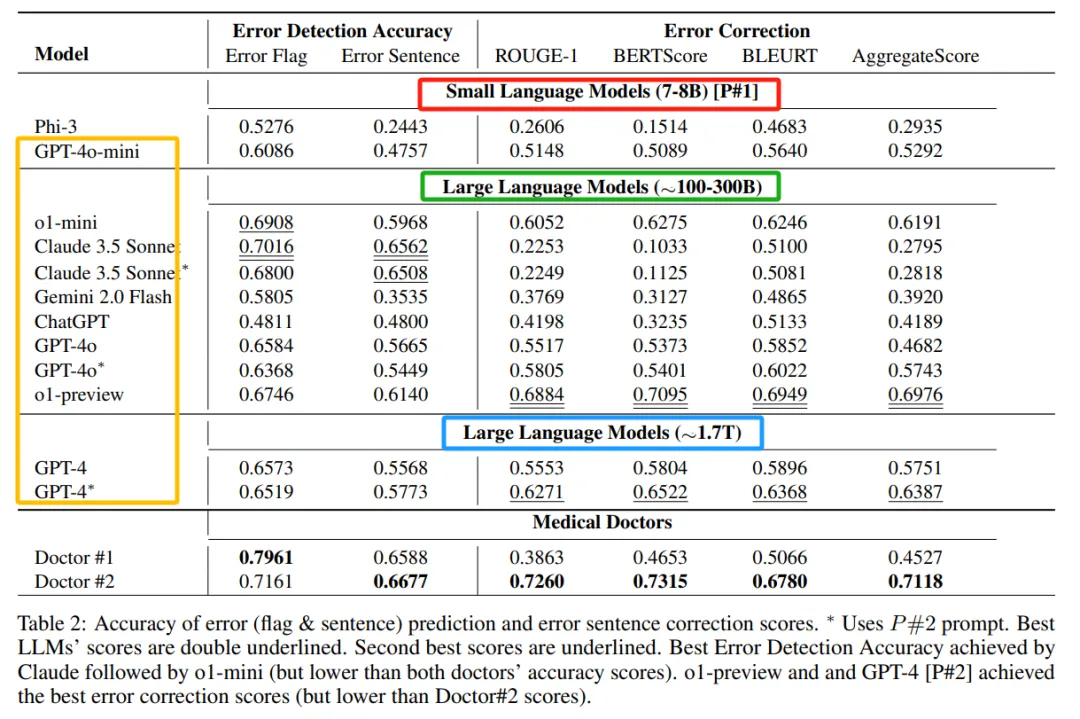

The paper introduces MEDEC, a publicly available benchmark for detecting and correcting medical errors in clinical notes, which contains 3,848 clinical texts. It describes how the data was created and evaluates how well recent LLMs, such as o1-preview, GPT-4, Claude 3.5 Sonnet, and Gemini 2.0 Flash, detect and correct medical errors that require medical knowledge and reasoning.

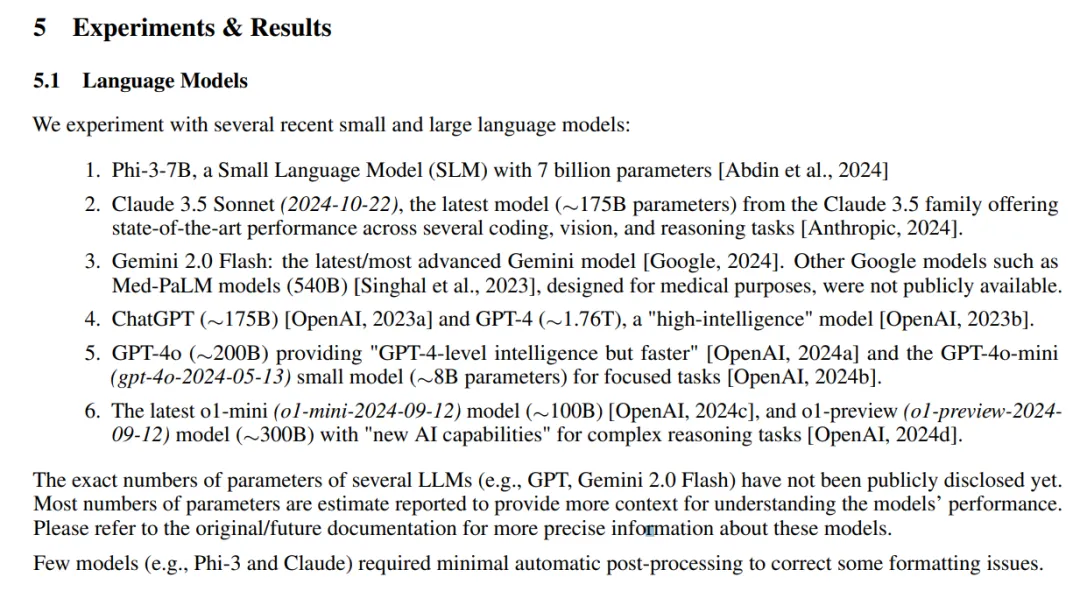

Because the experiments cover many models, the experimental section of the paper lists their parameter counts, as shown below.

The sizes of some of these models had never been disclosed, which made the numbers all the more intriguing.

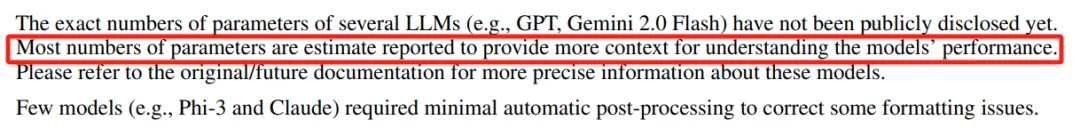

In the same section, the paper also states that the parameter counts for most models are estimates.

From this figure, it is easy to see that the paper gives sizes for other models besides GPT-4o-mini.

The results section also groups the models into several tiers by parameter count.

However, the replies under the post turned into a heated debate over whether Microsoft's estimates of the model sizes are accurate.

As noted above, the parameter counts are estimates. "Even if Microsoft does know, that doesn't mean 99% of Microsoft employees know," one commenter said. "There's a 98 percent chance that they are just researchers employed by Microsoft with no connection to the team that has access to OpenAI."

"It feels like these numbers are just guesses by the authors, because they don't explain how they estimated them," another wrote. Still, as this commenter stressed, the paper does state that the parameter counts are estimates.

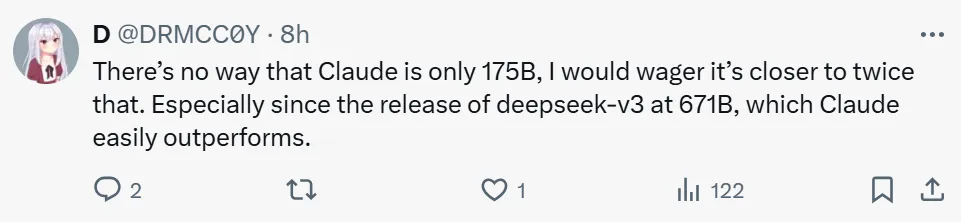

Others said the paper's estimate of Claude 3.5 Sonnet as 175B was a bit of a stretch.

"Claude can't be only 175B; I'd bet it's close to twice that. Especially now that deepseek-v3 has been released with 671B parameters, and Claude easily outperforms it."

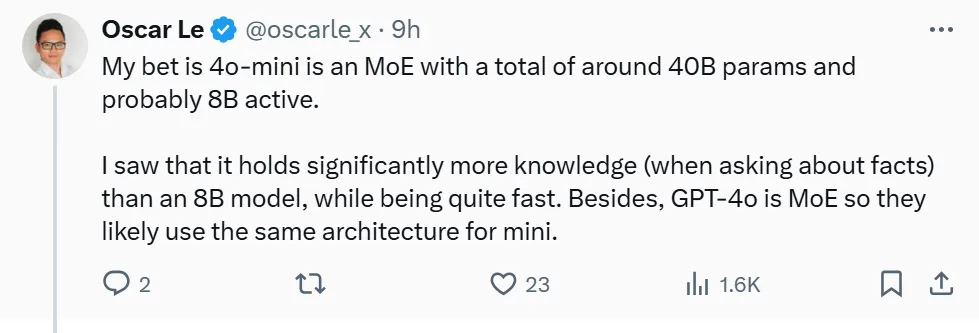

"I would bet that 4o-mini is a MoE model with about 40 billion parameters in total and probably 8 billion active parameters. I found it to have a lot more knowledge (when asked for facts) than an 8-billion-parameter model, while being quite fast."
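To make the commenter's distinction between total and active parameters concrete, here is a minimal sketch of the arithmetic for a simplified mixture-of-experts (MoE) model. The function name and every number in it are hypothetical, chosen only so the result matches the 40-billion and 8-billion figures in the guess above; nothing here describes the real architecture of GPT-4o-mini, which has not been disclosed.

```python
def moe_param_counts(shared: int, per_expert: int,
                     num_experts: int, experts_per_token: int) -> tuple[int, int]:
    """Return (total, active) parameter counts for a simplified MoE model.

    Simplifying assumption: the model consists of parameters shared by every
    token plus a pool of equally sized experts, and each token is routed to
    `experts_per_token` of them.
    """
    if not 0 < experts_per_token <= num_experts:
        raise ValueError("experts_per_token must be between 1 and num_experts")
    total = shared + num_experts * per_expert          # all weights that must be stored
    active = shared + experts_per_token * per_expert   # weights used for one token
    return total, active


# Hypothetical configuration, picked only to reproduce the commenter's guess.
total, active = moe_param_counts(
    shared=4_000_000_000,
    per_expert=4_000_000_000,
    num_experts=9,
    experts_per_token=1,
)
print(f"total: {total / 1e9:.0f}B, active: {active / 1e9:.0f}B")
# prints "total: 40B, active: 8B"
```

Under these assumptions, such a model would store far more knowledge than a dense 8B model while spending roughly the same compute per token, which is the intuition behind the commenter's remark about knowledge and speed.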

Others thought it was a reasonable guess. The news comes from Microsoft, after all.

After all the discussion, there is still no firm conclusion. What do you think of the figures given in this paper?
