
#News ·2025-01-02

Object detectors that are not keypoint-based consist of a classification branch and a regression branch. Because the two tasks have different objectives, the branches differ in their sensitivity to features from the same scale level and the same spatial location. Point-based prediction assumes that points with high classification confidence also yield high-quality regression, and this assumption leads to a misalignment problem. Our analysis shows that the problem can be further decomposed into scale misalignment and spatial misalignment.

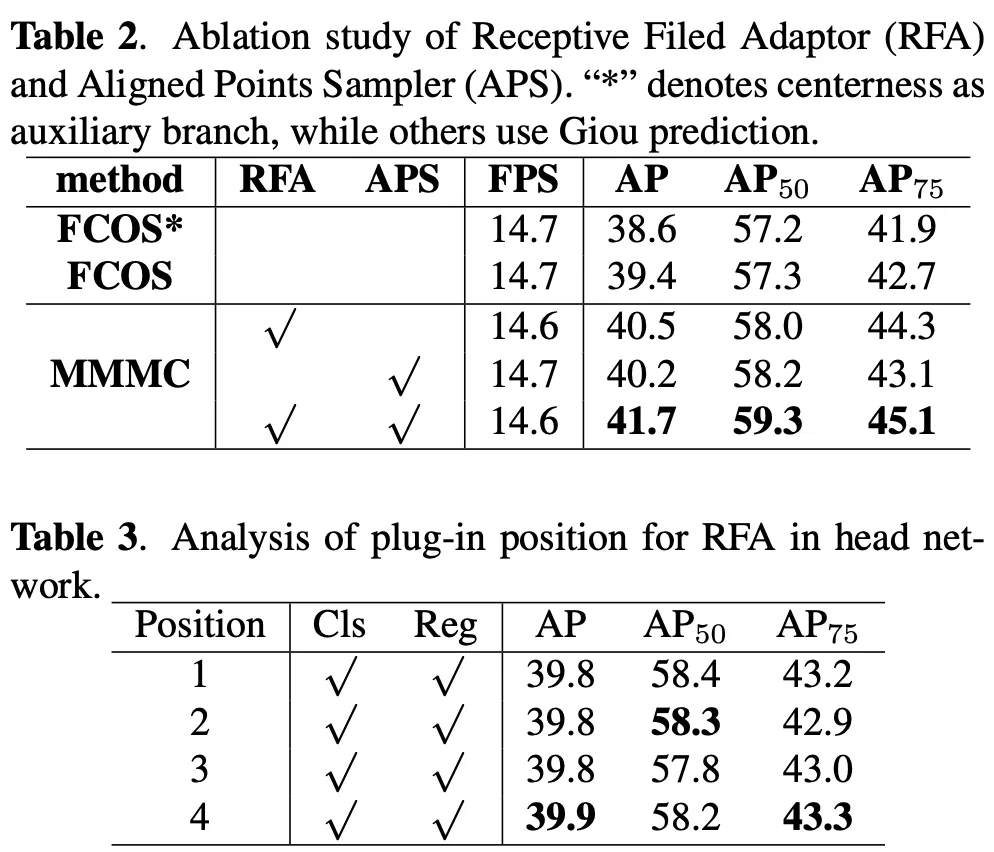

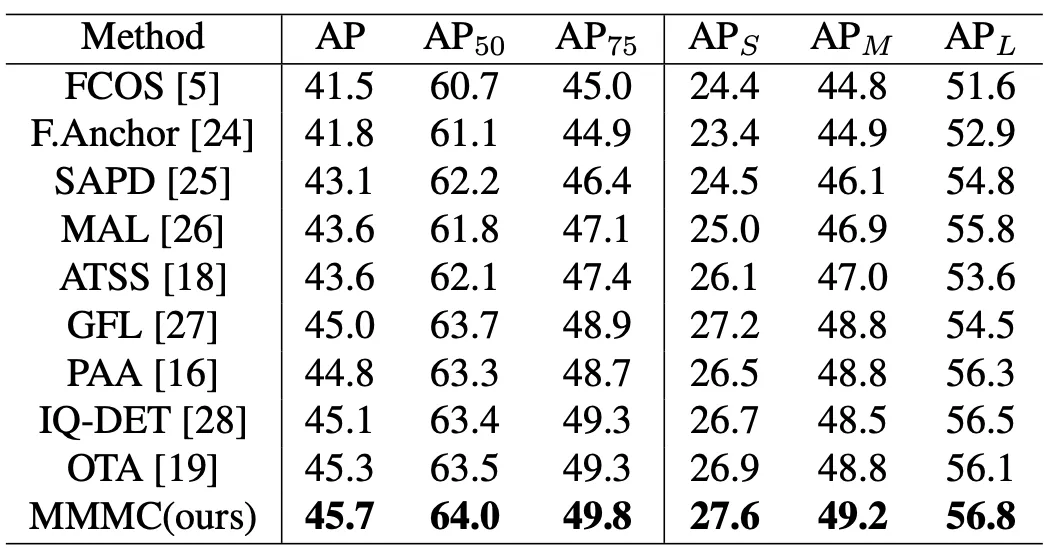

The researchers' goal is to address this problem at minimal cost: fine-tuning the head network and replacing the label assignment with a new method. Experiments show that, compared with the baseline FCOS, a single-stage, anchor-free object detection model, the proposed model consistently improves AP by about 3 points across different backbones, demonstrating the simplicity and efficiency of the new approach.

Object detection is usually treated as two different tasks: classification aims to learn discriminative features across multiple classes, and regression aims to draw accurate bounding boxes. However, because the two tasks differ greatly in their sensitivity to feature information, TSD [Revisiting the sibling head in object detector] shows that there is a spatial feature mismatch problem, which compromises the ability of NMS-based models to predict results that combine high classification confidence with high-quality regression.
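To make the dependence on classification confidence concrete, here is a minimal sketch of greedy NMS in plain Python. The boxes, scores, and ground-truth box are hypothetical values chosen for illustration and do not come from the paper; the point is only that NMS ranks by classification score, so a confidently classified but poorly localized box can suppress a better localized one.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes, scores, iou_threshold=0.5):
    """Greedy NMS: visit boxes by descending score, drop heavy overlaps."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        if all(iou(boxes[i], boxes[k]) <= iou_threshold for k in keep):
            keep.append(i)
    return keep


# Hypothetical example: box 0 is confidently classified but loosely localized,
# box 1 is tightly localized but has a lower classification score.
ground_truth = (10, 10, 50, 50)
boxes = [(14, 14, 58, 58), (10, 10, 50, 51)]
scores = [0.95, 0.60]

kept = nms(boxes, scores)
print("kept indices:", kept)
print("IoU with ground truth:", [round(iou(b, ground_truth), 3) for b in boxes])
```

In this toy case the surviving box is the one with the higher score, even though the suppressed box overlaps the ground truth far better.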

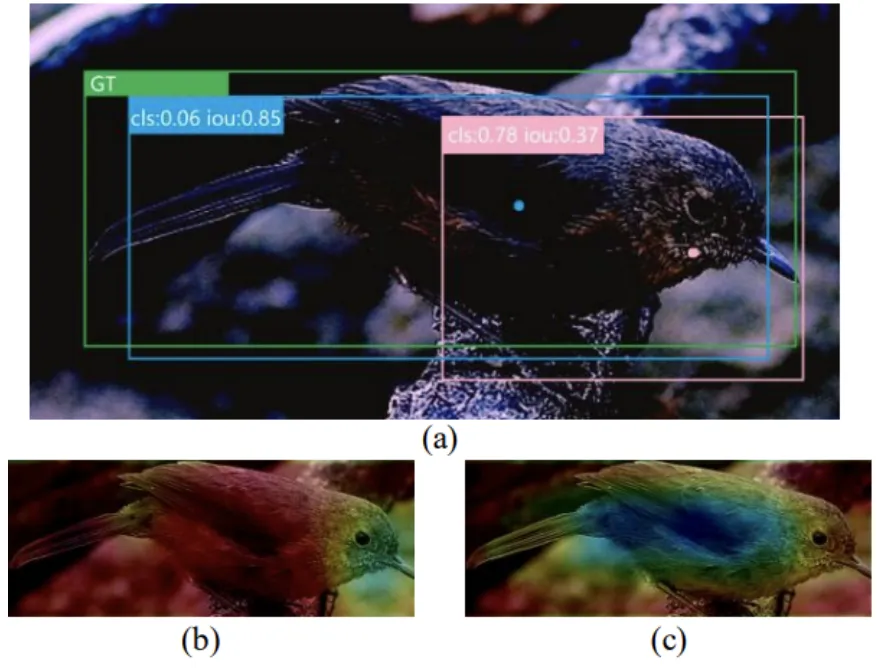

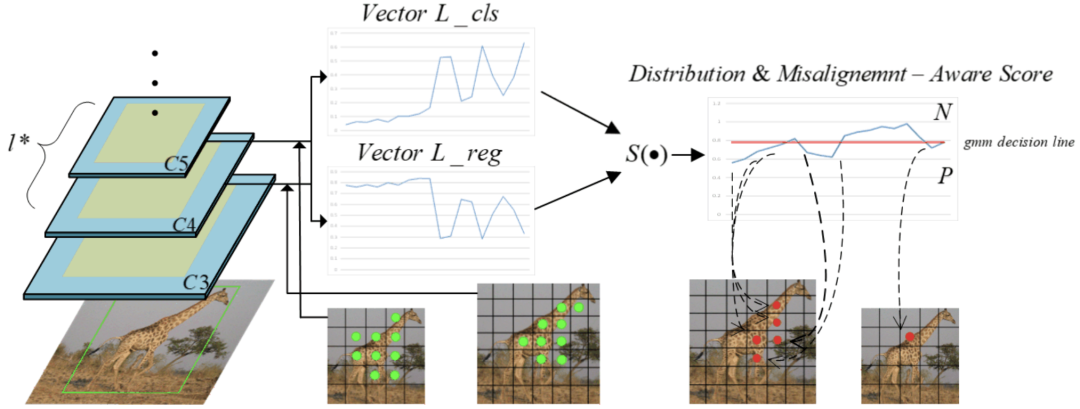

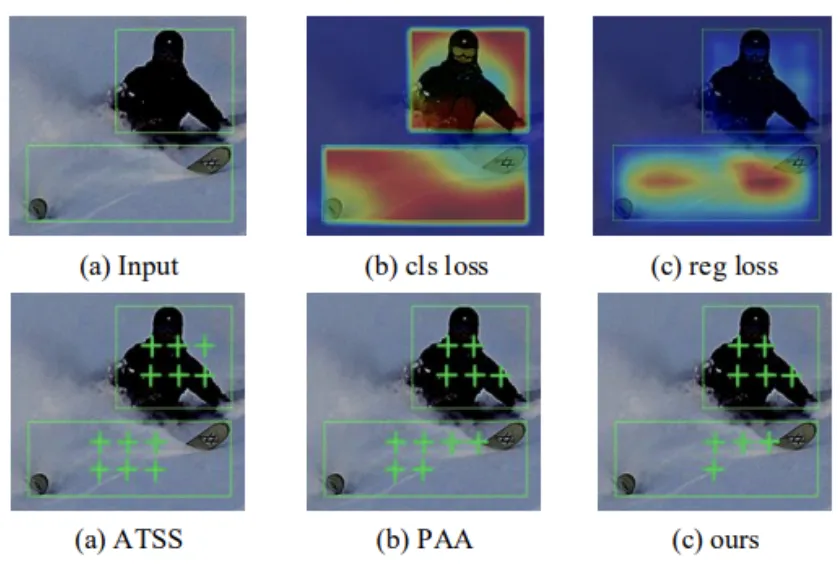

For spatial misalignment, the spatial distributions of the classification loss and the regression loss are rendered for the same instance. As shown in the figure above, the two distributions are highly misaligned. Points with a small classification loss or a small regression loss have features that the corresponding branch can exploit well. The highly misaligned loss distributions therefore indicate that the two tasks prefer features from different spatial locations.

Based on these analyses, to address scale feature misalignment, the researchers designed a task-driven dynamic receptive field adapter (RFA) for each task, a simple but effective deformable convolution module. To mitigate the negative effects of spatial feature misalignment, a label assignment method is designed to mine the most spatially aligned samples, enhancing the model's ability to predict reliable regression at points with high classification scores.

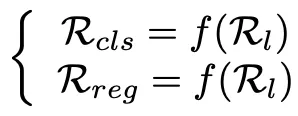

In the head of a modern one-stage detector, the four convolution layers in each of the two branches share exactly the same kernel size, stride, and padding, so that both branches produce feature maps of the same size. As a result, both branches end up with the same final receptive field, which is determined by two quantities: Rl, the receptive field on the input image of the initial feature map fed in at each FPN level, and f(•), a static function that accumulates the receptive field across the four consecutive convolution layers.
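As an illustration of why a static head gives both branches the same receptive field, the sketch below applies the standard receptive-field recurrence for stacked convolutions. It is not the paper's formula: the function name, the 3x3 stride-1 layer configuration, and the values of Rl and the FPN stride are all assumptions chosen for the example.

```python
def stacked_receptive_field(r_in, jump_in, layers):
    """Receptive field (in input-image pixels) after a stack of conv layers.

    r_in    : receptive field of one point of the incoming feature map
    jump_in : spacing in input pixels between adjacent points of that map
              (for an FPN level this is its stride)
    layers  : iterable of (kernel_size, stride, dilation) tuples
    """
    r, jump = r_in, jump_in
    for kernel_size, stride, dilation in layers:
        # Each layer widens the field by (k - 1) * dilation steps of the
        # current spacing, then multiplies the spacing by its stride.
        r += (kernel_size - 1) * dilation * jump
        jump *= stride
    return r


# Hypothetical head: four 3x3, stride-1, dilation-1 convs in each branch.
head_layers = [(3, 1, 1)] * 4
r_l = 100        # assumed receptive field of the FPN feature map
fpn_stride = 8   # assumed stride of this FPN level

r_cls = stacked_receptive_field(r_l, fpn_stride, head_layers)
r_reg = stacked_receptive_field(r_l, fpn_stride, head_layers)
print(r_cls, r_reg)
```

Because the layer configuration is identical in both branches, the two results are necessarily equal, whatever the tasks would prefer. Padding does not appear in the recurrence because it changes the output size, not the receptive field.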

It is worth noting that the RFA module is applied only to the first step of the detector head, using two separate deformable convolutions to make each branch more adaptive to scale information and to further reduce scale misalignment. This differs from applying deformable convolution directly to the backbone or neck, which ignores the different receptive fields needed by the two branches. It also differs from VFNet and RepPoints, which use deformable convolution to merge the information of the two branches. In our case, the scale mismatch is relaxed in each branch because every feature point in each branch gets its own receptive field, based on detailed feature information.

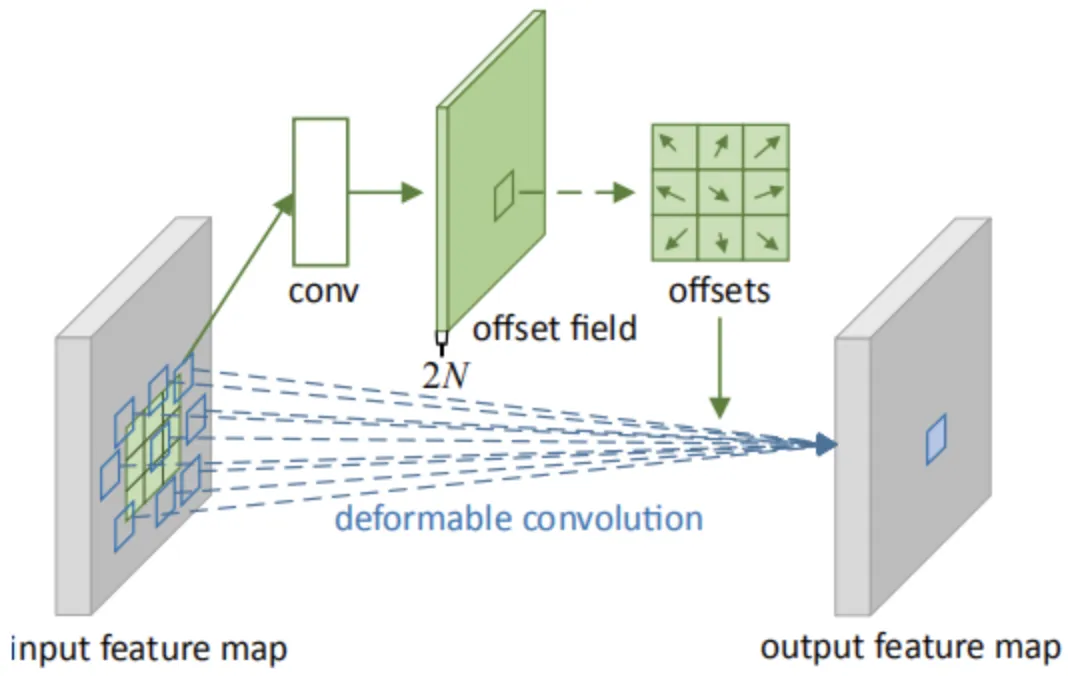

The implementation of deformable convolution is shown in the figure below:

The offset field is obtained by applying a standard convolution to the input feature map. It has 2N channels, representing N two-dimensional offsets (△x, △y), where N is the number of convolution kernels, i.e. the number of channels in the output feature layer.

The deformable convolution process can be described as follows. First, a standard convolution is performed on the input feature map to obtain the N two-dimensional offsets (△x, △y). Then the value at each point of the input feature map is resampled accordingly:

Let the feature map be P. The resampled value at (x, y) is P(x+△x, y+△y). When x+△x or y+△y is not an integer, P(x+△x, y+△y) is computed by bilinear interpolation.
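The resampling step can be sketched in plain Python as follows. This is a minimal illustration of reading P(x+△x, y+△y) with bilinear interpolation, not the authors' implementation; the helper names, the zero contribution for out-of-bounds neighbours, and the 2x2 feature map with hand-picked offsets are assumptions made for the example.

```python
import math


def bilinear_sample(feature, x, y):
    """Read feature[y][x] at a real-valued (x, y).

    Neighbours that fall outside the map contribute zero (an assumption).
    """
    height, width = len(feature), len(feature[0])
    x0, y0 = math.floor(x), math.floor(y)
    wx, wy = x - x0, y - y0  # fractional parts act as interpolation weights
    value = 0.0
    for yi, w_row in ((y0, 1.0 - wy), (y0 + 1, wy)):
        for xi, w_col in ((x0, 1.0 - wx), (x0 + 1, wx)):
            if 0 <= xi < width and 0 <= yi < height:
                value += w_row * w_col * feature[yi][xi]
    return value


def resample(feature, offsets):
    """Build the map whose value at (x, y) is P(x + dx, y + dy).

    offsets[y][x] holds the pair (dx, dy) for that location.
    """
    return [
        [
            bilinear_sample(feature, x + offsets[y][x][0], y + offsets[y][x][1])
            for x in range(len(feature[0]))
        ]
        for y in range(len(feature))
    ]


# Hypothetical 2x2 feature map and per-point offsets.
feature = [[0.0, 1.0],
           [2.0, 3.0]]
offsets = [[(0.5, 0.5), (0.0, 0.0)],
           [(0.0, 0.0), (-1.0, -1.0)]]

shifted = resample(feature, offsets)
print(shifted)
```

The top-left point samples the centre of the four cells and so receives their average, while integer offsets simply copy the value from the shifted location.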

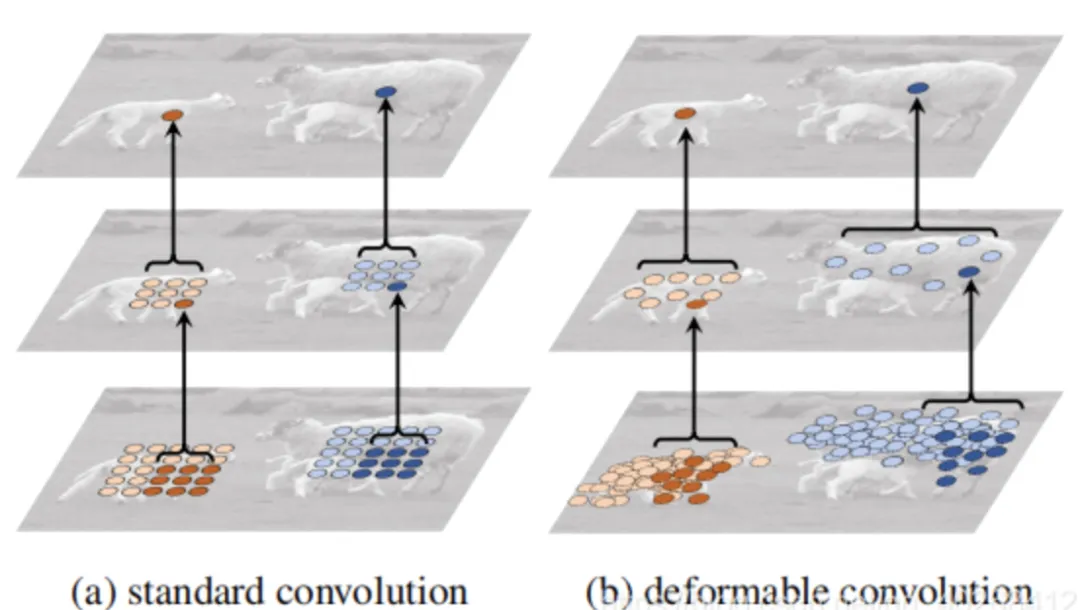

This yields N feature maps, which are then convolved one-to-one with N convolution kernels to produce the output. The results of standard convolution and deformable convolution are compared in the figure below:

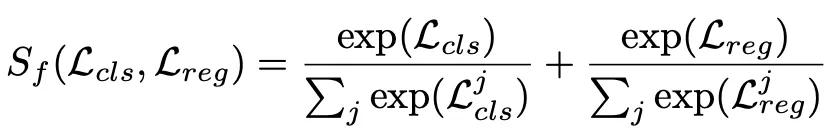

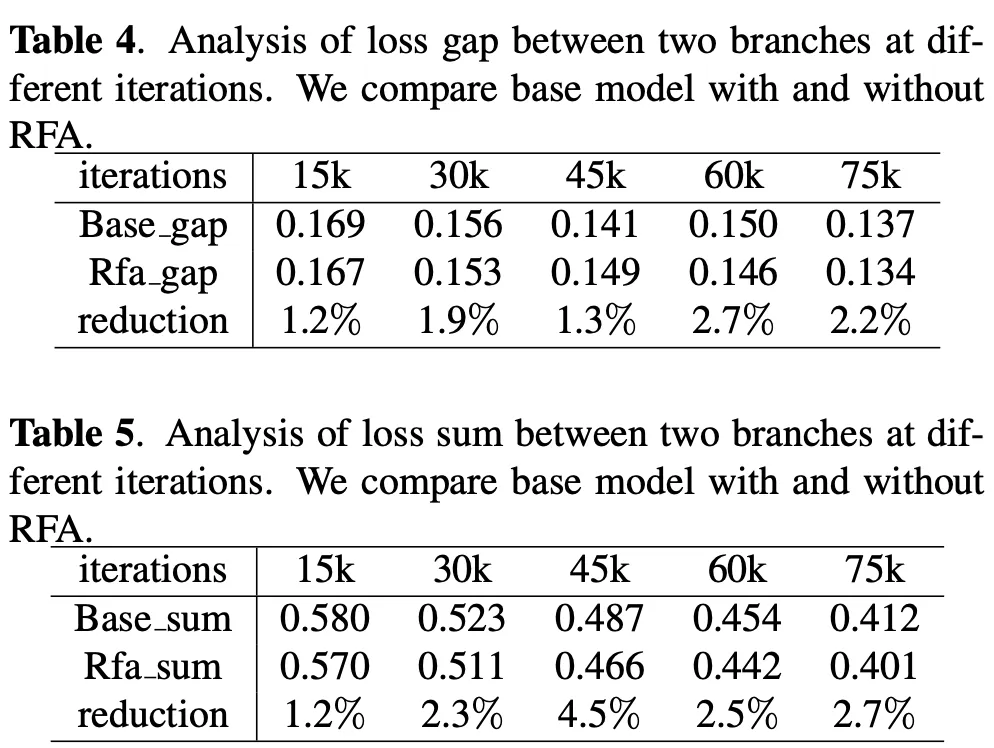

Given the scale assignment result l* for each instance Ii and the candidate points Cl* in l*, the task of the new framework is to further mine the most spatially aligned points in Cl*. Two metrics are considered for each candidate point: (1) the overall fitness Sf for both tasks; (2) the misalignment degree Sm caused by the spatially misaligned loss distributions.

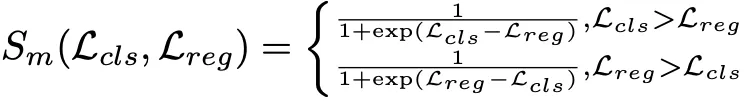

The softmax function is used to rescale Lcls and Lreg separately onto the same comparable scale, because softmax is monotonic and its outputs sum to one. For the misalignment degree Sm, a sigmoid function is used, since we found that it efficiently converts widely varying inputs into fairly uniform outputs.
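The two properties relied on here can be checked with a short sketch. It does not reproduce the definitions of Sf or Sm, which are not given in this text; it only shows that softmax preserves the ordering of its inputs while summing to one, and that sigmoid squashes widely varying inputs into the interval (0, 1). The per-candidate loss values are hypothetical.

```python
import math


def softmax(values):
    """Numerically stable softmax over a list of floats."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def sigmoid(value):
    """Logistic function mapping any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-value))


def ranking(values):
    """Indices of the values sorted from smallest to largest."""
    return sorted(range(len(values)), key=lambda i: values[i])


# Hypothetical losses of three candidate points on very different scales.
cls_losses = [0.2, 1.5, 0.9]
reg_losses = [3.0, 0.4, 1.1]

cls_norm = softmax(cls_losses)
reg_norm = softmax(reg_losses)
squashed = [sigmoid(v) for v in (-5.0, 0.0, 5.0)]

print(cls_norm, sum(cls_norm))
print(reg_norm, sum(reg_norm))
print(squashed)
```

After normalization both loss vectors live on the same scale and keep their original ordering, which is what makes them comparable across the two tasks.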

Comparison on the COCO dataset

Visualization of spatial label assignment. The first row shows the input and the loss distributions of the two tasks, respectively. The green crosses in the second row mark the points assigned as positive samples.
