#News ·2025-01-02

Sora, Altman says, represents the GPT-1 moment for large video generation models.

Since the beginning of the year, players in China and abroad, from startup unicorns to Internet giants, have poured into video generation. As new models have arrived, the video, film, and television industries have also undergone major changes.

It is undeniable that current video generation models still face many problems, such as understanding space and physical laws, and we are all looking forward to the GPT-3.5/4 moment of video generation.

In China, one startup that began with training acceleration is working toward this goal.

It is Luchen Technology. Its founder, Dr. You Yang, graduated from UC Berkeley and then joined the National University of Singapore as a Presidential Young Professor.

This year, building on its work in accelerated computing, Luchen Technology developed the video generation model Video Ocean, bringing a more cost-effective option to the industry.

At MEET 2025, Dr. You Yang shared his understanding of and insights into the field of video generation this year.

The MEET 2025 Intelligent Future Conference is an industry summit hosted by Qubit, featuring more than 20 industry representatives. It drew more than 1,000 offline attendees and more than 3.2 million online viewers, and received extensive attention and coverage from mainstream media.

(To better present You Yang's views, Qubit has edited his remarks as follows without changing their original meaning.)

I am very happy to be at the Qubit conference today and to talk with you all. I will discuss some of the work we have done on large video models.

First, let me introduce myself and my startup, Luchen Technology. After graduating from UC Berkeley, I went on to teach at the National University of Singapore, and I am honored to have founded Luchen Technology.

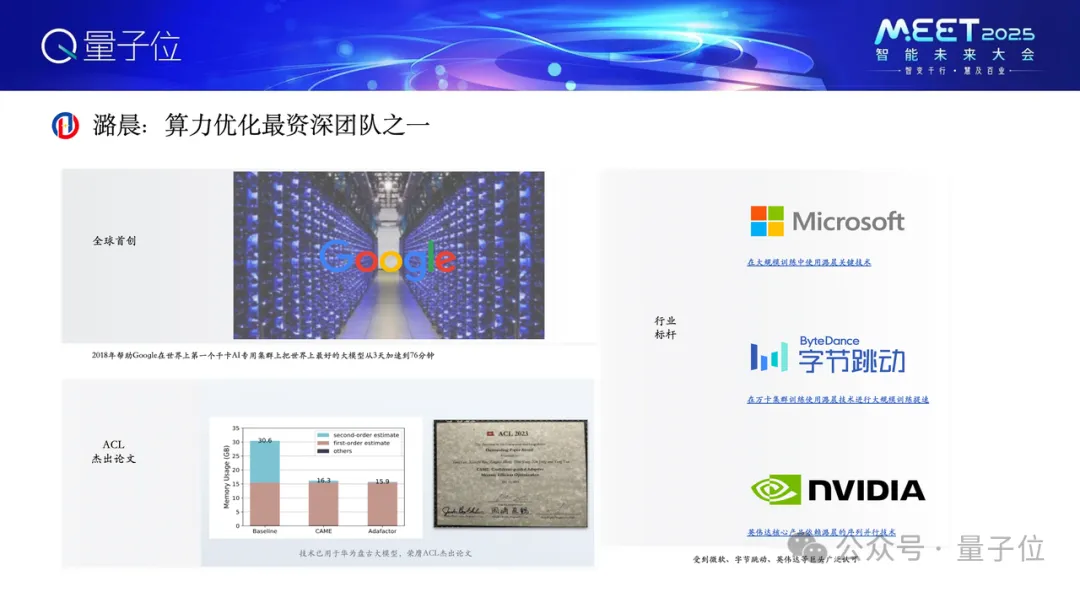

We used to work on compute optimization. In 2018, Google built the world's first thousand-chip cluster, the TPU Pod. At the time, the world's largest model was BERT. We helped Google reduce the training time for the world's largest model from three days to 76 minutes.

We were also honored to collaborate on work with the Huawei Pangu large model last year, which won an ACL best paper award. Our technology helps the Huawei Pangu model train more efficiently. Companies such as Microsoft and Nvidia have also used our technology for distributed training. Our goal is to make training large models faster and cheaper.

Now let's turn to today's topic: large video generation models.

We built a product called Video Ocean, which is currently in beta. Let me introduce the product first and then discuss how I think large video models will evolve.

First, I think the most important aspect of a large video model is that it should support fine-grained text control.

When we use large generative AI models today, we want the output to reflect exactly what we have in mind. Unfortunately, when you use a text-to-image app to generate pictures, for example, you still find that much of the image content cannot be accurately controlled, so I think there is still a lot of room for development in this area.

During the training of the Video Ocean model, we did some preliminary exploration. As a good example, we can give a precise description: a European man wearing dark glasses, with long stubble. The resulting video clearly matches what we asked for: blue sky, coast, sand, backlighting, handheld camera, black T-shirt, and talking to the camera.

I think the most important thing over the next three years is probably to realize the Scaling Law for large AI video models.

This process does not require flashy product features. What matters most is pushing the model's ability to connect with the real world to the extreme. I think the final form is that a person says a sentence or gives a description, and the model accurately renders that description as a video.

So, as Sam Altman said, today is the GPT-1 moment for video, and I think in three years we may reach the GPT-3.5 or GPT-4 moment for large video models.

Here is a demo of Video Ocean showing what we have achieved so far.

The second point is how future large video models can achieve any position and any angle.

Nowadays, when shooting movies and documentaries, you can hold a phone or camera and move it around however you like, which gives you real control over the shot. Future large AI video models should do this first: given the same description, when you change the angle or switch shots, the subject should not change. It should remain the same object.

Going further, future large AI video models could also disrupt many industries. For example, when we watch football or basketball games today, the footage we see is whatever the on-site director chooses to show us. If the director cuts to a long shot, a long shot is what we see.

In the future, could we rely on large AI video models to let viewers control the camera themselves and decide what they want to see? That would be like being able to move instantly around the stadium: to the coach's bench, the last row, or the first row. Any position, any angle. I think this capability is also critical for future large AI video models. Video Ocean has made some attempts here, and the initial results are good.

The third thing I think is important is character consistency.

A large AI video model ultimately needs to generate revenue and be monetized. Who is willing to pay for it? Advertising studios, advertisers, e-commerce bloggers, and the film and television industry, for example. If you go deeper into these industries, one key requirement is character consistency.

For example, in a product advertisement, the clothes, shoes, or car shown in the video must not change much in appearance from beginning to end. The object must stay consistent.

In a movie, the appearance of the lead actor and the key supporting actors must not change from beginning to end. Video Ocean has done some good exploration here as well.

Another is style customization. We know that actors are very expensive to hire, and props are costly too.

Over the next three years, if large AI video models develop as expected, I feel there will be demand for the following: a director has an actor shoot a swimming scene in a pool, then takes that footage and turns it into a swimming scene from Titanic or a swimming scene from Avatar. This is what AI does best: producing images with a cinematic, artistic feel.

In short, one direct application value of large models is breaking through the limitations of reality, which can greatly reduce the difficulty of recreating real scenes.

You may have heard this joke: a Hollywood director wanted to create an explosion scene and worked out the budget. The first option was to build a castle and blow it up. The second was to simulate the shot on a computer. Once the costs were calculated, both options turned out to be very expensive, and at that time computer simulation cost even more. Now, large AI models aim to greatly reduce the cost of generating films.

If this is achieved, we will no longer be limited by external factors such as venue and weather, and we can reduce the dependence on real actors. This is not about taking actors' jobs. Some key shots are very dangerous, such as an actor jumping from a plane, jumping off a building, or defusing a bomb that is about to go off. In the future, such shots will require only the actor's identity and portrait rights, and AI can produce them well, so the film industry can greatly cut costs and improve efficiency.

As Professor Fang of Kunlun Wanwei just said, although our computing resources are limited, we have found that better algorithm optimization can indeed produce better training results. For example, Meta used more than 6,000 GPUs to train a 30B model, while we will release a 10B version of our model within a month, and we used only 256 GPUs.

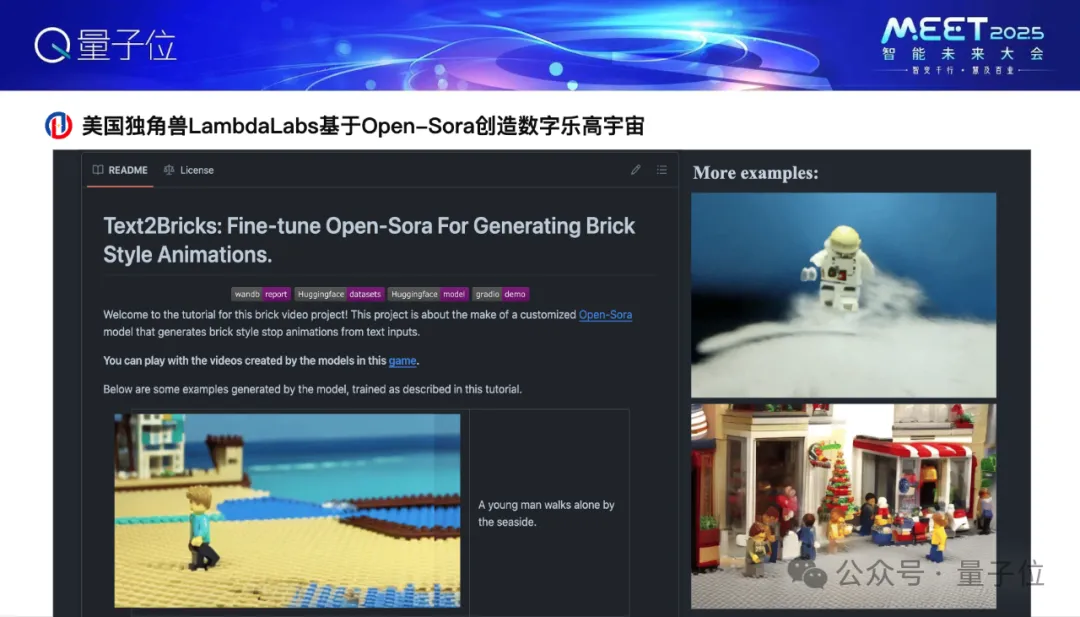

The predecessor of Video Ocean is Open-Sora, an open source product our team built. It is completely free and available on GitHub, and it works very well. For example, the US unicorn Lambda Labs built a popular digital Lego application that is in fact based on Open-Sora.

After Sora came out at the beginning of this year, the short-video giants began paying more attention to large video models, including Kuaishou and Douyin in China, and Instagram, TikTok, and Snapchat in the United States. Snapchat's video model, called Snap Video, was released fairly early. This is its official paper, and it cites our techniques for training large video models, so these techniques are also helping some of the giants train large video models that are faster, more accurate, and more intelligent.

Thank you all!

Click "Read more" or visit https://video.luchentech.com to explore Video Ocean's capabilities in more detail.
